GCP – What’s new with the AI Hypercomputer? vLLM on TPU, and more

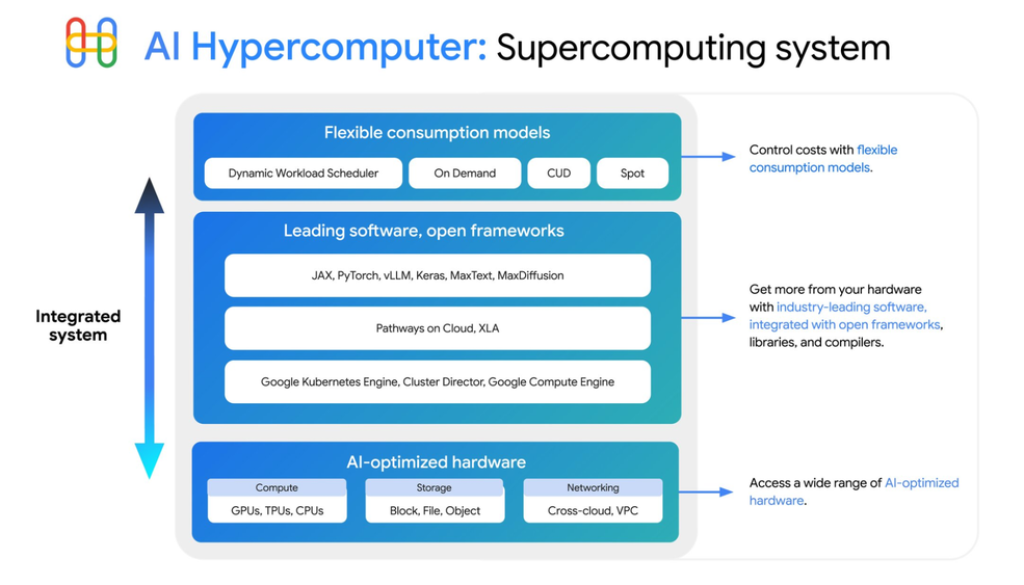

The Google Cloud AI Hypercomputer combines AI-optimized hardware, leading software, and flexible consumption models to help you tackle any AI workload efficiently. Every three months we share a roundup of the latest AI Hypercomputer news, resources, events, learning opportunities, and more. Today, we’re excited to share the latest developments to make your AI journey faster, more efficient, and more insightful, starting with awesome news about inference.

Announcing the new vLLM TPU

For ML practitioners working with large language models (LLMs), serving inference workloads with amazing price-performance is the ultimate goal. That's why we are thrilled to announce our biggest update this quarter: bringing the performance of JAX and our industry-leading Cloud TPUs to vLLM, the most popular open-source LLM inference engine.

vLLM TPU is now powered by tpu-inference, an expressive and powerful new hardware plugin that unifies JAX and PyTorch under a single runtime. It is not only faster than the previous generation of vLLM TPU but also offers broader model coverage (e.g., Gemma, Llama, Qwen) and broader feature support. vLLM TPU is a framework for developers to:

- Push the limits of TPU hardware performance in open source
- Provide more flexibility to JAX and PyTorch users by running PyTorch model definitions performantly on TPU without any additional code changes, while also extending native support to JAX
- Retain vLLM standardization: keep the same user experience, telemetry, and interface

Today, vLLM TPU is significantly more performant than the first TPU backend prototype that we released in February 2025, with improved model support and feature coverage. With this new foundation in place, customers can now push the boundaries of TPU inference performance further than ever before in open source, with only a few configuration changes.

For the technical details, see the most recent post on the vLLM blog.

More tools for your AI toolkit

Here are some additional AI Hypercomputer updates to give you more control, insight, and choice.

Find and fix bottlenecks faster with the improved XProf Profiler

Debugging performance is one of the most time-consuming parts of ML development. To make it easier, we’ve supercharged the XProf profiler and released the new Cloud Diagnostics XProf library. This gives you a unified, advanced profiling experience across JAX and PyTorch/XLA, helping you pinpoint model bottlenecks with powerful tools previously used only by internal Google teams. Spend less time hunting for performance issues and more time innovating.

Openness in action: A new recipe for NVIDIA Dynamo

We built AI Hypercomputer on the principle of choice, and want you to use the best tools for the job at hand. To that end, the new AI inference recipe for using NVIDIA Dynamo on AI Hypercomputer demonstrates how to deploy a disaggregated inference architecture, separating the “prefill” and “decode” phases across distinct GPU pools managed by GKE. It’s a powerful demonstration of how our open architecture allows you to combine best-in-class technologies from across the ecosystem to solve complex challenges.
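To make the prefill/decode split concrete, here is a minimal toy sketch in plain Python. It is not NVIDIA Dynamo's API: `PrefillWorker`, `DecodeWorker`, `KVHandle`, and `DisaggregatedServer` are hypothetical names, the "model" simply counts upward from the last prompt token, and the KV cache transfer that a real system performs between GPU pools is reduced to passing a Python object. What it does show is the shape of the architecture: each request is prefilled in one pool and decoded in another, and each pool is scheduled independently.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import cycle


@dataclass
class KVHandle:
    """Stand-in for the KV cache that a prefill worker hands to a decode worker."""
    request_id: int
    prompt_tokens: list[int]
    produced_by: str


@dataclass
class PrefillWorker:
    name: str

    def prefill(self, request_id: int, prompt_tokens: list[int]) -> KVHandle:
        # A real prefill step runs one compute-heavy pass over the whole prompt;
        # here we only record which worker produced the "cache".
        return KVHandle(request_id, list(prompt_tokens), self.name)


@dataclass
class DecodeWorker:
    name: str

    def decode(self, kv: KVHandle, max_new_tokens: int) -> list[int]:
        # A real decode step emits one token at a time from the cached state;
        # this toy "model" counts upward from the last prompt token.
        last = kv.prompt_tokens[-1]
        return [last + step for step in range(1, max_new_tokens + 1)]


class DisaggregatedServer:
    """Routes each request through a prefill pool, then a separate decode pool."""

    def __init__(self, prefill_pool: list[PrefillWorker], decode_pool: list[DecodeWorker]):
        # Each pool gets its own round-robin scheduler, so the pools can be sized independently.
        self._prefill = cycle(prefill_pool)
        self._decode = cycle(decode_pool)

    def serve(self, request_id: int, prompt_tokens: list[int], max_new_tokens: int) -> dict:
        kv = next(self._prefill).prefill(request_id, prompt_tokens)
        decoder = next(self._decode)  # the KV "handoff" is just passing kv along
        tokens = decoder.decode(kv, max_new_tokens)
        return {"prefill": kv.produced_by, "decode": decoder.name, "tokens": tokens}


server = DisaggregatedServer(
    [PrefillWorker("prefill-0")],
    [DecodeWorker("decode-0"), DecodeWorker("decode-1")],
)
print(server.serve(1, [10, 11, 12], max_new_tokens=3))
# {'prefill': 'prefill-0', 'decode': 'decode-0', 'tokens': [13, 14, 15]}
```

Because the two pools are separate lists, this toy lets you give them different sizes (here one prefill worker and two decode workers), which mirrors one motivation for disaggregation: the compute-bound prefill phase and the token-by-token decode phase can be provisioned separately.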

Accelerate Reinforcement Learning with NVIDIA NeMo RL

Reinforcement Learning (RL) is rapidly becoming the essential training technique for complex AI agents and workflows requiring advanced reasoning. For teams pushing the boundaries with reinforcement learning, new reproducible recipes are available for getting started with NVIDIA NeMo RL on Google Cloud. NeMo RL is a high-performance framework designed to tackle the complex scaling and latency challenges inherent in RL workloads. It provides optimized implementations of key algorithms like GRPO and PPO, making it easier to train large models. The new recipes run on A4 VMs (powered by NVIDIA HGX B200) with GKE and vLLM, offering a simplified path to setting up and scaling your RL development cycle for models like Llama 3.1 8B and Qwen2.5 1.5B.
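GRPO and PPO are named above, so a minimal plain-Python sketch of their core computations may help. It assumes one scalar reward per sampled response and per-sample log-probabilities, and it is not NeMo RL's API; the function names are illustrative. `grpo_advantages` normalizes the rewards of a group of responses to the same prompt against the group mean and standard deviation, which is how GRPO avoids training a separate value network. `ppo_clipped_objective` is the standard PPO clipped surrogate; GRPO builds on this same clipped form (plus a KL penalty, omitted here) using the group-relative advantages.

```python
from __future__ import annotations

import math
from statistics import fmean, pstdev


def grpo_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Group-relative advantages for G responses sampled from the same prompt.

    A_i = (r_i - mean(r)) / (std(r) + eps); the group mean acts as the baseline
    that PPO would otherwise get from a learned value network.
    """
    mean = fmean(rewards)
    std = pstdev(rewards)  # population std; some implementations use the sample std
    return [(r - mean) / (std + eps) for r in rewards]


def ppo_clipped_objective(
    logp_new: list[float],
    logp_old: list[float],
    advantages: list[float],
    clip_eps: float = 0.2,
) -> float:
    """Mean of min(ratio * A, clip(ratio, 1 - eps, 1 + eps) * A); training maximizes this (loss = -objective)."""
    terms = []
    for new, old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(new - old)  # pi_new / pi_old, computed from log-probs
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        terms.append(min(ratio * adv, clipped * adv))
    return fmean(terms)


# Four responses to one prompt, scored 1 (correct) or 0 (wrong) by a verifier.
adv = grpo_advantages([1.0, 0.0, 0.0, 1.0])
print([round(a, 3) for a in adv])  # [1.0, -1.0, -1.0, 1.0]
```

Taking the minimum makes the objective pessimistic: a large policy ratio earns at most `1 + clip_eps` times a positive advantage, but is not clipped when the advantage is negative.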

Scale high-performance inference cost-effectively

A user's experience of a generative AI application depends heavily on both a fast initial response to a request and smooth streaming of the response through to completion. To streamline and standardize LLM serving, GKE Inference Gateway and GKE Inference Quickstart are now generally available. Inference Gateway simplifies serving with new features like prefix-aware load balancing, which dramatically improves latency for workloads with recurring prompts. Inference Quickstart helps you find the optimal, most cost-effective hardware and software configuration for your specific model, saving you months of manual evaluation. With these new features, we've improved time-to-first-token (TTFT) and time-per-output-token (TPOT) on AI Hypercomputer.
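As a rough illustration of two ideas in the paragraph above, the sketch below routes each request to the replica whose cached prompts share the longest token prefix with it (so that replica can plausibly reuse its KV cache), falling back to the least-loaded replica otherwise, and computes TTFT and TPOT from token timestamps. This is a hypothetical toy, not GKE Inference Gateway's routing algorithm or API: `Replica`, `route`, and the `min_prefix` threshold are illustrative names, and there is no cache eviction or request completion. TPOT here is taken as the average gap between consecutive output tokens after the first.

```python
from __future__ import annotations

from dataclasses import dataclass, field


def shared_prefix_len(a: list[int], b: list[int]) -> int:
    """Number of leading tokens the two sequences have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


@dataclass
class Replica:
    name: str
    cached_prompts: list[list[int]] = field(default_factory=list)
    in_flight: int = 0

    def best_prefix(self, prompt: list[int]) -> int:
        return max((shared_prefix_len(prompt, c) for c in self.cached_prompts), default=0)


def route(replicas: list[Replica], prompt: list[int], min_prefix: int = 4) -> Replica:
    # Prefer the longest shared prefix (likely KV-cache reuse); break ties by lower load.
    best = max(replicas, key=lambda r: (r.best_prefix(prompt), -r.in_flight))
    if best.best_prefix(prompt) < min_prefix:
        # No useful prefix anywhere: plain least-loaded balancing.
        best = min(replicas, key=lambda r: r.in_flight)
    best.cached_prompts.append(list(prompt))  # toy: no eviction, no completion tracking
    best.in_flight += 1
    return best


def ttft_and_tpot(request_time: float, token_times: list[float]) -> tuple[float, float]:
    """TTFT: first token time minus request time. TPOT: mean gap between later tokens."""
    ttft = token_times[0] - request_time
    if len(token_times) < 2:
        return ttft, 0.0
    tpot = (token_times[-1] - token_times[0]) / (len(token_times) - 1)
    return ttft, tpot


a = Replica("a", cached_prompts=[[1, 2, 3, 4, 5, 6]], in_flight=3)
b = Replica("b")
print(route([a, b], [1, 2, 3, 4, 5, 9]).name)  # a (shares a 5-token prefix despite higher load)
print(route([a, b], [7, 8, 9]).name)           # b (no shared prefix, so least loaded wins)
```

The trade-off this toy exposes is cache affinity versus load: routing purely by prefix can pile requests onto one hot replica, which is why the sketch only honors a prefix match above a threshold.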

Build your future on a comprehensive system

The progress we've shared today, from bringing vLLM to TPUs to enabling advanced profiling and third-party integrations, all stems from the premise that AI Hypercomputer is a supercomputing system, constantly evolving to meet the demands of the next generation of AI.

We'll continue to update and optimize AI Hypercomputer based on our learnings, from training Gemini to serving quadrillions of tokens a month. To learn more about using AI Hypercomputer for your own AI workloads, read here. Curious about the last quarterly roundup? Please see the previous post here. To stay up to date on our progress or ask us questions, join our community and access our growing AI Hypercomputer resources repository on GitHub. We can't wait to see what you build with it.