GCP – The Dataproc advantage: Advanced Spark features that will transform your analytics and AI

With its exceptional price-performance, Google Cloud’s Dataproc has evolved from a simple, managed open-source software (OSS) service to a powerhouse in Apache Spark and open lakehouses, driving the analytics and AI workloads of many leading global enterprises. The recent launch of the Lightning Engine for Spark, a multi-layer optimization engine, makes Dataproc’s performance even more compelling.

But while performance is a cornerstone of the Dataproc offering, its capabilities go beyond that. To address contemporary enterprise requirements, we’ve invested in supporting open lakehouses, accelerating AI/ML workloads, fostering deeper integrations with BigQuery and Google Cloud Storage, and providing enterprise-grade security. In this post, we cover these advances and how Dataproc differs from on-premises and do-it-yourself configurations, and from platforms on other cloud providers.

Open-source engines and open lakehouse

Whether you’re migrating from an existing on-prem data lake or cloud-based DIY clusters, or developing a multi-cloud strategy, Dataproc provides a feature-rich, performant OSS stack with strong compatibility for open ecosystems. Some of the benefits in this regard include:

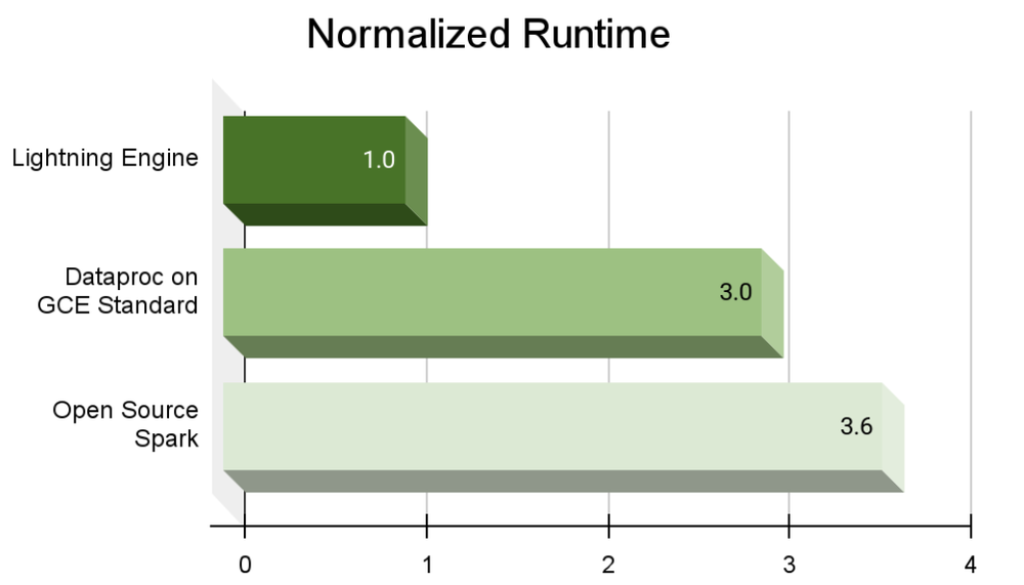

- High performance: Currently in preview, Lightning Engine provides 3.6x better performance than open-source Apache Spark, thanks to traditional optimization techniques such as query and execution optimizations, as well as optimizations in the file-system layer and connectors. It is fully compatible with open-source Apache Spark, and can plug into existing workloads running on Dataproc on Google Compute Engine.

- Optimized cost and efficiency: Native support for Spot VMs, intelligent autoscaling, and storage-aware optimizations reduce total cost of ownership. According to our evaluations, recent enhancements to Dataproc autoscaling have been shown to decrease cluster VM expenditures by up to 40% and to reduce cumulative job runtime by 10%.

- Open lakehouse support: Out-of-the-box compatibility with leading open table formats, including Apache Iceberg, Delta Lake, and Apache Hudi. Dataproc offers improved lakehouse integration through catalog support, advanced optimizations, metadata caching, and comprehensive observability features.

- Open Metastore integration: Support for BigLake Metastore, Iceberg REST API-compliant metastores, and Hive Metastore (HMS)-compliant metastores helps ensure an open and interoperable architecture. This enables you to work with your existing metastores easily, especially during migrations.
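
To make the metastore bullet concrete, here is a minimal sketch of the Spark properties that register an Iceberg REST catalog. The property keys are the standard Apache Iceberg Spark settings; the function names, the catalog name, the URI, and the bucket are hypothetical placeholders, and the exact values for BigLake Metastore should be taken from the Dataproc documentation rather than from this example.

```python
from typing import Dict


def iceberg_rest_catalog_conf(catalog: str, uri: str, warehouse: str) -> Dict[str, str]:
    """Build Spark properties that register an Iceberg REST catalog.

    `catalog`, `uri`, and `warehouse` are caller-supplied placeholders.
    """
    prefix = f"spark.sql.catalog.{catalog}"
    return {
        # Enables Iceberg SQL extensions such as MERGE INTO and CALL procedures.
        "spark.sql.extensions": "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions",
        # Registers the catalog under the chosen name.
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        # Selects the REST catalog implementation and points it at the endpoint.
        f"{prefix}.type": "rest",
        f"{prefix}.uri": uri,
        f"{prefix}.warehouse": warehouse,
    }


def apply_conf(builder, conf: Dict[str, str]):
    """Apply each property to a SparkSession builder and return the builder."""
    for key, value in conf.items():
        builder = builder.config(key, value)
    return builder
```

With PySpark installed, `apply_conf(SparkSession.builder, conf).getOrCreate()` would start a session in which tables are addressable as `<catalog>.<namespace>.<table>`.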


Optimizations across the storage layers

Spark with Cloud Storage

We’ve integrated Dataproc with Cloud Storage to optimize data access patterns and reduce costs. Key improvements include:

- Smarter API retries: Rate-limit aware retry mechanisms help ensure resilient and efficient data access from Cloud Storage, even during periods of high demand.

- Reduced metadata overhead: Optimized connectors significantly decrease the number of metadata calls to the Cloud Storage API, providing direct cost savings. The graph below shows the metadata optimizations of the Lightning Engine with Cloud Storage compared to open-source connectors.

- Intelligent caching and prefetching: To enhance data retrieval efficiency from Cloud Storage, Dataproc integrates block-level caching and sub-query fusion. Additionally, scan bottlenecks are mitigated through the implementation of vectorized scans and the proactive pre-fetching of Parquet row groups.
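
As an illustration of what "rate-limit aware" retrying means in general, the sketch below combines exponential backoff with jitter and a server-provided wait hint. It is not the Dataproc connector's implementation: the `RateLimited` exception, the function names, and the default timings are all hypothetical and chosen only for the example.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical error standing in for an HTTP 429 response."""

    def __init__(self, retry_after: Optional[float] = None):
        super().__init__("rate limited")
        self.retry_after = retry_after  # server's hint, in seconds, if any


def backoff_delay(attempt: int, retry_after: Optional[float] = None,
                  base: float = 0.5, cap: float = 32.0,
                  rng: Callable[[], float] = random.random) -> float:
    """Jittered exponential backoff that never undercuts the server's hint."""
    ceiling = min(cap, base * (2 ** attempt))
    jittered = rng() * ceiling  # spread clients out to avoid synchronized retries
    return max(jittered, retry_after or 0.0)


def call_with_retries(op: Callable[[], T], max_attempts: int = 5,
                      sleep: Callable[[float], None] = time.sleep,
                      rng: Callable[[], float] = random.random) -> T:
    """Run `op`, retrying only on RateLimited, up to `max_attempts` tries."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return op()
        except RateLimited as err:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            sleep(backoff_delay(attempt, err.retry_after, rng=rng))
    raise AssertionError("unreachable")
```

Honoring the server's hint keeps a client from retrying into a window where the request is certain to be rejected again, while the jitter keeps many executors from retrying at the same instant.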

Spark with BigQuery

Lightning Engine offers significant advantages in accessing data in BigQuery. Some of the important ones are:

- Spark in BigQuery notebooks: You can now use multiple query engines on a single copy of data. You can author and interactively execute Spark code directly in BigQuery Studio notebooks. Write Spark SQL or PySpark code in the same notebook.

- Accelerated, high-throughput connectivity: The optimized BigQuery connector for Spark utilizes the BigQuery Storage API for massively parallel reads, delivering up to 4x performance improvements over previous versions. The connector also reads data directly in the Apache Arrow format, eliminating costly serialization steps.

- Intelligent query pushdown: Smart filter pushdown minimizes data movement by ensuring only necessary data is sent to the Spark cluster.

- Unified data discovery: By using BigLake metastore as a federated Spark metastore, BigQuery tables become instantly discoverable in your Dataproc Spark environment, creating a unified analytics experience.
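
For readers who have not used the connector, the following sketch shows roughly how a PySpark job reads a BigQuery table with a row filter. It assumes the open-source spark-bigquery-connector is available on the cluster and uses its documented `table`, `filter`, and `readDataFormat` options; the helper names and the example table are illustrative only.

```python
from typing import Dict, Optional


def bigquery_read_options(table: str, row_filter: Optional[str] = None) -> Dict[str, str]:
    """Reader options for the spark-bigquery-connector."""
    opts = {
        "table": table,              # fully qualified: project.dataset.table
        "readDataFormat": "ARROW",   # read via the Storage API in Arrow format
    }
    if row_filter:
        # Applied on the BigQuery side, so only matching rows reach Spark.
        opts["filter"] = row_filter
    return opts


def read_bigquery_table(spark, table: str, row_filter: Optional[str] = None):
    """Return a DataFrame for `table`; `spark` is an existing SparkSession."""
    options = bigquery_read_options(table, row_filter)
    return spark.read.format("bigquery").options(**options).load()
```

A call such as `read_bigquery_table(spark, "bigquery-public-data.samples.shakespeare", "word_count > 100")` would return only the rows that satisfy the filter. Filters written with the DataFrame API after `load()` can also be pushed down by the connector when they are expressible in BigQuery.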

The graph below shows the performance improvements of Lightning Engine with BigQuery compared to open-source Spark with the BigQuery connector.

AI/ML features

Dataproc streamlines the path from large-scale data processing to impactful AI and machine learning outcomes, lowering the barrier to entry for onboarding AI workloads. It provides a flexible and powerful environment where data scientists can focus on model development, not infrastructure management. Key advantages include:

- Zero-scale clusters: Accelerate data analysis and notebook jobs without the overhead of maintaining traditional, long-running clusters. Zero-scale clusters let you scale down your worker nodes to zero.

- Library management: AI/ML engineers frequently try new libraries for AI/ML development. We introduced a simple addArtifacts method to Dataproc to dynamically add PyPI packages to your Spark session. This installs specified packages and their dependencies in the Spark environment, making them available to workers for your UDFs.

- Accelerated, GPU-powered ML: Dataproc clusters can be provisioned with powerful NVIDIA GPUs. Our ML images come pre-configured with GPU drivers, Spark RAPIDS, CUDA, cuDNN, NCCL and ML libraries like XGBoost, PyTorch, tokenizers, transformers, and more to accelerate ML tasks out of the box.

- A clear path to advanced AI: Dataproc’s deep integration with Vertex AI provides easy access to Google’s state-of-the-art models along with third-party and open-source models, enabling at-scale batch inference and other advanced MLOps workflows directly from Dataproc jobs.
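
The library-management workflow might look like the sketch below. Only the `addArtifacts` method name comes from this post; the `pypi=True` keyword is our assumption about its signature, and `validate_specs` and `install_pypi_packages` are hypothetical helpers, so check the Dataproc client documentation before relying on any of it.

```python
import re
from typing import Iterable, List

# Accepts "name" or "name==version"; deliberately stricter than full PEP 508.
_SPEC = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*(==[A-Za-z0-9.*+!_-]+)?$")


def validate_specs(packages: Iterable[str]) -> List[str]:
    """Trim each requirement string and reject anything that is not a simple spec."""
    specs = [p.strip() for p in packages]
    bad = [s for s in specs if not _SPEC.match(s)]
    if bad:
        raise ValueError(f"unsupported package specs: {bad}")
    return specs


def install_pypi_packages(session, packages: Iterable[str]) -> List[str]:
    """Ask the Spark session to install PyPI packages for use in UDFs.

    `session` is expected to expose `addArtifacts`; the `pypi=True`
    keyword is an assumption, not a confirmed signature.
    """
    specs = validate_specs(packages)
    session.addArtifacts(*specs, pypi=True)
    return specs
```

Validating the specs up front means a typo fails fast on the driver instead of surfacing later as an import error inside a UDF.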

Enterprise features and security

Dataproc is built on Google Cloud’s secure foundation and provides security and governance features designed for the most demanding enterprises.

- Organization Policy/fleet management: Organization Policy offers centralized, programmatic control over resources. As an administrator, you can define policies with constraints that apply to Dataproc resources including Dataproc cluster operations, sizing and cost.

- Granular access control and authentication: Dataproc integrates with Google Cloud’s Identity and Access Management (IAM). For even more fine-grained control, you can enable Kerberos for strong, centralized authentication within your cluster. Furthermore, personal authentication support allows you to configure clusters so that jobs and notebooks are run using the end user’s own credentials, enabling precise, user-level access control and auditing.

- Proactive vulnerability management: We maintain a robust Common Vulnerabilities & Exposures (CVE) detection and patching process, regularly updating Dataproc images with the latest security patches. When a critical vulnerability is discovered, we release new image versions promptly, allowing you to easily recreate clusters with the patched version, minimizing vulnerability exposure.

- Comprehensive audit logging and lineage: Dataproc, like all Google Cloud services, generates detailed Cloud Audit Logs for all administrative activities and data access events, providing a clear, immutable record of who did what, and when. For end-to-end governance, you can leverage Dataplex Universal Catalog to automatically discover, catalog, and track data lineage across all your data assets in Dataproc, BigQuery, and Cloud Storage, providing a holistic view for compliance and impact analysis.

- Easy monitoring and AI-driven troubleshooting: Serverless Spark UI makes it easy to access all Spark metrics without requiring you to set up a Persistent History Server, removing manual overhead. Google Cloud Assist uses the latest Gemini AI models to identify issues with your jobs and recommends fixes and optimizations.
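
To show how the audit trail can be queried, here is a small sketch that builds a Cloud Logging filter for Dataproc cluster audit entries. The `cloud_dataproc_cluster` resource type and the `cloudaudit.googleapis.com` log names are standard Cloud Logging identifiers; the function name, project ID, and cluster name are hypothetical.

```python
from typing import Optional


def dataproc_audit_filter(project_id: str, cluster_name: Optional[str] = None,
                          include_data_access: bool = False) -> str:
    """Build a Cloud Logging filter for Dataproc cluster audit log entries."""
    logs = ["activity"]  # Admin Activity audit logs
    if include_data_access:
        logs.append("data_access")  # Data Access audit logs
    log_names = " OR ".join(
        f'"projects/{project_id}/logs/cloudaudit.googleapis.com%2F{name}"'
        for name in logs
    )
    clauses = [
        'resource.type="cloud_dataproc_cluster"',
        f"logName=({log_names})",
    ]
    if cluster_name:
        # Narrow the results to a single cluster.
        clauses.append(f'resource.labels.cluster_name="{cluster_name}"')
    return " AND ".join(clauses)
```

The resulting string can be pasted into Logs Explorer or passed to `gcloud logging read` to list who created, updated, or deleted a given cluster.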

Performance, plus innovation and productivity

The enhancements across performance, open-source support, tight integrations with Google services, security, and AI/ML give Dataproc a competitive advantage over other Spark offerings. By managing the underlying complexity, Dataproc allows you to focus on driving business value from data, not on solving infrastructure challenges and open-source concerns. Whether you are migrating from an on-prem Hadoop environment or DIY clusters in the cloud, building a cloud-native lakehouse, or onboarding a new AI workload, Dataproc provides the performance, security, and flexibility you need to meet your goals, at competitive pricing.

To learn more about how Dataproc can accelerate your data strategy, explore the official documentation or contact our sales team to schedule a demo.
