GCP – Storage Insights datasets: How to optimize storage spend with deep visibility

Managing vast amounts of data in cloud storage can be a challenge. While Google Cloud Storage offers strong scalability and durability, storage admins sometimes struggle with questions like:

- What’s driving my storage spend?

- Where is all my data in Cloud Storage and how is it distributed?

- How can I search across my data for specific metadata such as age or size?

Indeed, to achieve cost optimization, security, and compliance, you need to understand what you have, where it is, and how it’s being used. That’s where Storage Insights datasets, a feature of Storage Intelligence for Cloud Storage, come in. Storage Intelligence is a unified management product that offers multiple capabilities for analyzing large storage estates and acting on the results. It helps you explore your data, optimize costs, enforce security, and implement governance policies. Storage Insights datasets help you analyze your storage footprint in depth, and you can use Gemini Cloud Assist for quick analysis in natural language. Based on these analyses, you can take action, such as relocating buckets and performing large-scale batch operations.

In this blog, we focus on how you can use Insights datasets for cost management and visibility, exploring a variety of common use cases. This is especially useful for cloud administrators and FinOps teams performing cloud cost allocation, monitoring and forecasting.

What are Storage Insights datasets?

Storage Insights datasets provide a powerful, automated way to gain deep visibility into your Cloud Storage data. Instead of manual scripts, custom one-off reports for buckets or managing your own collection pipelines, Storage Insights datasets generate comprehensive reports about your Cloud Storage objects and their activities, placing them directly in a BigQuery linked dataset.

Think of it as X-ray vision for your Cloud Storage buckets. It transforms raw storage metadata into structured, queryable data that you can analyze with familiar BigQuery tools, with automatic data refreshes delivered every 24 hours (after the initial setup; the first load can take up to 48 hours).

Key features

- Customizable scope: Set the dataset scope to be at the level of the organization, a folder containing projects, a project / set of projects, or a specific bucket.

- Metadata dataset: It provides a queryable dataset that contains bucket and object metadata directly in BigQuery.

- Regular updates and retention: After the first load, datasets update with metadata every 24 hours and can retain data for up to 90 days.


Use cases

Calculate routine showback

Understanding who/which applications are consuming what storage is often the first step in effective cost management, especially for larger organizations. With Storage Insights datasets, your object and bucket metadata is available in BigQuery. You can run SQL queries to aggregate storage consumption by specific teams, projects, or applications. You can then attribute storage consumption by buckets or prefixes for internal chargeback or cost attribution, for example: “Department X used 50TB of storage in gs://my-app-data/department-x/ last month”. This transparency fosters accountability and enables accurate internal showback.

Here’s an example SQL query to determine the total storage per bucket and prefix in the dataset:

```sql
SELECT
  bucket,
  SPLIT(name, '/')[OFFSET(0)] AS top_level_prefix,
  SUM(size) AS total_size_bytes
FROM
  object_attributes_view
GROUP BY
  bucket, top_level_prefix
ORDER BY
  total_size_bytes DESC;
-- Running queries in datasets accrues BigQuery query costs; refer to the pricing details page for further details.
```

Understand how much data you have across storage classes

Storage Insights datasets identify the storage class of every object in your buckets. By querying storageClass, timeCreated, and updated in the object metadata view in BigQuery, you can quickly visualize how your data is distributed across storage classes (Standard, Nearline, Coldline, Archive) for objects beyond a certain age, as well as when they were last updated. This lets you identify potentially misclassified data. Using the timeStorageClassUpdated object metadata, you can also see whether entire buckets hold Coldline or Archive data, or whether objects have unexpectedly moved across storage classes (for example, a file expected to be in Archive is now in Standard).

Here’s an example SQL query to see all objects that were created in the calendar year two years ago, have not been updated since, and are in the Standard class:

```sql
SELECT
  bucket,
  name,
  size,
  storageClass,
  timeCreated,
  updated
FROM object_attributes_latest_snapshot_view
WHERE
  EXTRACT(YEAR FROM timeCreated) = EXTRACT(YEAR FROM DATE_SUB(CURRENT_DATE(), INTERVAL 24 MONTH))
  AND (updated IS NULL
    OR updated = timeCreated)
  AND storageClass = 'STANDARD'
ORDER BY
  timeCreated;
-- Running queries in datasets accrues BigQuery query costs; refer to the pricing details page for further details.
```
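To see the overall distribution rather than a list of individual objects, a grouped query can be a useful companion. The following is a minimal sketch, assuming the same object_attributes_latest_snapshot_view and the bucket, storageClass, and size fields used in the queries above; depending on where you run it, you may need to qualify the view with your own project and linked dataset names.

```sql
-- Sketch: object count and total bytes per bucket and storage class,
-- based on the most recent snapshot only.
SELECT
  bucket,
  storageClass,
  COUNT(*) AS object_count,
  SUM(size) AS total_size_bytes
FROM
  object_attributes_latest_snapshot_view
GROUP BY
  bucket, storageClass
ORDER BY
  total_size_bytes DESC;
```

A result like this makes it easier to spot buckets where nearly all bytes sit in a single class, which is a reasonable starting point for the misclassification checks described above.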

Set lifecycle and autoclass policies: Automating your savings

Manual data management is time-consuming and prone to error. Storage Insights datasets help you identify where Object Lifecycle Management (OLM) or Autoclass might reduce costs.

- Locate the buckets that don’t have OLM or Autoclass configured: Through Storage Insights datasets, you can query bucket metadata to see which buckets lack defined lifecycle policies by using the lifecycle and autoclass.enabled fields. If a bucket contains data that should naturally transition to colder storage or be deleted after a certain period, but has no policy, you know which parts of your estate to investigate further and can take the appropriate action. Storage Insights datasets provide the data to flag these “unmanaged” buckets, helping you enforce best practices.

Here’s an example SQL query to see all buckets with lifecycle or autoclass configurations enabled and all those without any active configuration:

```sql
SELECT
  name AS bucket_name,
  storageClass AS default_class,
  CASE
    WHEN lifecycle = TRUE OR autoclass.enabled = TRUE THEN 'Managed'
    ELSE 'Unmanaged'
  END AS lifecycle_autoclass_status
FROM bucket_attributes_latest_snapshot_view;
-- Running queries in datasets accrues BigQuery query costs; refer to the pricing details page for further details.
```

- Evaluate Autoclass impact: Autoclass automatically transitions objects between storage classes based on a fixed access timeline. But how do you know if it’s working as expected or if further optimization is needed? With Storage Insights datasets, you can find the buckets with Autoclass enabled using the autoclass.enabled field and analyze object metadata by tracking the storageClass and timeStorageClassUpdated fields over time for specific objects within Autoclass-enabled buckets. This allows you to evaluate the effectiveness of Autoclass, verify whether the objects are indeed moving to optimal classes, and understand the real-world impact on your costs. For example, once you configure Autoclass on a bucket, you can visualize the movement of your data between storage classes on Day 31 as compared to Day 1 and understand how Autoclass policies take effect on your bucket.

- Evaluate Autoclass suitability: Analyze your bucket’s data to determine if it’s appropriate to use Autoclass with it. For example, if you have short-lived data (less than 30 days old) in a bucket, you may not want to turn on Autoclass. You can assess objects in daily snapshots to determine the average life of an object in your bucket using timeCreated and timeDeleted.

Here’s an example SQL query to count the deleted objects in bucketA and bucketB that lived for fewer than 30 days and for more than 30 days:

```sql
SELECT
  SUM(
    CASE
      WHEN TIMESTAMP_DIFF(t1.timeDeleted, t1.timeCreated, DAY) < 30 THEN 1
      ELSE 0
    END
  ) AS age_less_than_30_days,
  SUM(
    CASE
      WHEN TIMESTAMP_DIFF(t1.timeDeleted, t1.timeCreated, DAY) > 30 THEN 1
      ELSE 0
    END
  ) AS age_more_than_30_days
FROM
  `object_attributes_view` AS t1
WHERE
  t1.bucket IN ('bucketA', 'bucketB')
  AND t1.timeCreated IS NOT NULL
  AND t1.timeDeleted IS NOT NULL;
-- Running queries in datasets accrues BigQuery query costs; refer to the pricing details page for further details.
```
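For the “Evaluate Autoclass impact” step above, one possible approach is to compare the storage class mix across daily snapshots for buckets that have Autoclass enabled. The sketch below is illustrative only: it assumes that object_attributes_view holds one row per object per snapshot and exposes a snapshotTime column (an assumption, since that column is not shown elsewhere in this post), so check the schema of your own linked dataset before relying on it.

```sql
-- Sketch: daily storage class mix for Autoclass-enabled buckets.
-- snapshotTime is an assumed column name; verify it in your dataset schema.
SELECT
  DATE(o.snapshotTime) AS snapshot_date,
  o.storageClass,
  COUNT(*) AS object_count,
  SUM(o.size) AS total_size_bytes
FROM
  object_attributes_view AS o
WHERE
  o.bucket IN (
    SELECT name
    FROM bucket_attributes_latest_snapshot_view
    WHERE autoclass.enabled = TRUE)
GROUP BY
  snapshot_date, o.storageClass
ORDER BY
  snapshot_date, o.storageClass;
```

Comparing the rows for an early snapshot date with those roughly a month later gives a rough picture of how much data has moved to colder classes.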

Proactive cleanup and optimization

Beyond routine management, Storage Insights datasets can help you proactively find and eliminate wasted storage.

- Quickly find duplicate objects: Accidental duplicates are a common cause of wasted storage. You can use object metadata like size, name, or even crc32c checksums in your BigQuery queries to identify potential duplicates. For example, finding multiple objects with the exact same size, checksum and similar names might indicate redundancy, prompting further investigation.

Here’s an example SQL query to list all objects that share the same name, size, and crc32c checksum (indicating potential duplicates):

```sql
SELECT
  name,
  bucket,
  timeCreated,
  crc32c,
  size
FROM (
  SELECT
    name,
    bucket,
    timeCreated,
    crc32c,
    size,
    COUNT(*) OVER (PARTITION BY name, size, crc32c) AS duplicate_count
  FROM
    `object_attributes_latest_snapshot_view` )
WHERE
  duplicate_count > 1
ORDER BY
  size DESC;
-- Running queries in datasets accrues BigQuery query costs; refer to the pricing details page for further details.
```

- Find temporary objects to be cleaned up: Many applications generate temporary files that, if not deleted, accumulate over time. Storage Insights datasets allow you to query for objects matching specific naming conventions (e.g., *_temp, *.tmp), or located in “temp” prefixes, along with their creation dates. This enables you to systematically identify and clean up orphaned temporary data, freeing up valuable storage space.

Here’s an example SQL query to find all log files created at least a month ago:

```sql
SELECT
  name, bucket, timeCreated, size
FROM
  `object_attributes_latest_snapshot_view`
WHERE
  name LIKE '%.log'
  AND DATE(timeCreated) <= DATE_SUB(CURRENT_DATE(), INTERVAL 1 MONTH)
ORDER BY
  size DESC;
-- Running queries in datasets accrues BigQuery query costs; refer to the pricing details page for further details.
```

- List all objects older than a certain date for easy actioning: Need to archive or delete all images older than five years for compliance? Or perhaps you need to clean up logs that are older than 90 days? Storage Insights datasets provide timeCreated and contentType for every object. A simple BigQuery query can list all objects older than your specified date, giving you a clear, actionable list of objects for further investigation. You can then use Storage Intelligence batch operations, which let you act on billions of objects in a serverless manner.

- Check soft delete suitability: Find buckets that hold a large volume of soft-deleted data by querying for the presence of softDeleteTime, together with size, in the object metadata tables. In those cases, the data appears to be temporary, and you may want to investigate soft delete cost optimization opportunities.
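The last two checks can be sketched as follows. Both statements are illustrative and assume the object_attributes_latest_snapshot_view and the timeCreated, contentType, softDeleteTime, and size fields named above; the 'image/%' pattern and the five-year cutoff are placeholder values taken from the example in the text, so adjust them to your own policy.

```sql
-- Sketch 1: images created more than five years ago.
SELECT
  bucket,
  name,
  contentType,
  timeCreated,
  size
FROM
  object_attributes_latest_snapshot_view
WHERE
  contentType LIKE 'image/%'
  AND DATE(timeCreated) < DATE_SUB(CURRENT_DATE(), INTERVAL 5 YEAR)
ORDER BY
  timeCreated;

-- Sketch 2: count and total bytes of soft-deleted objects per bucket.
SELECT
  bucket,
  COUNT(*) AS soft_deleted_objects,
  SUM(size) AS soft_deleted_bytes
FROM
  object_attributes_latest_snapshot_view
WHERE
  softDeleteTime IS NOT NULL
GROUP BY
  bucket
ORDER BY
  soft_deleted_bytes DESC;
```

Buckets near the top of the second result are candidates for a closer look at their soft delete settings.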

Taking your analysis further

The true power of Storage Insights datasets lies not just in the raw data they provide, but in the insights you can derive and the subsequent actions you can take. Once your Cloud Storage metadata is in BigQuery, the possibilities for advanced analysis and integration are vast.

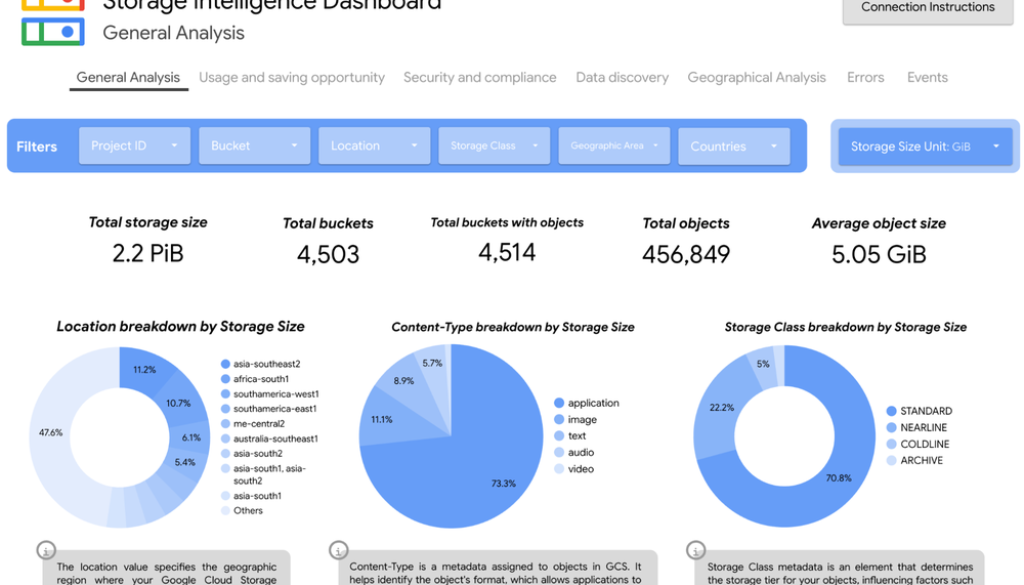

For example, you can use Looker Studio, Google Cloud’s no-cost data visualization and dashboarding tool, to directly connect to your BigQuery Insights datasets, transforming complex queries into intuitive, interactive dashboards. Now you can:

- Visualize cost trends: Create dashboards that show storage consumption by project, department, or storage class over time. This allows teams to easily track spending, identify spikes, and forecast future costs.

- Track fast-growing buckets: Analyze the buckets with the most growth in the past week or month, and compare them against known projects for accurate cost attribution. Use Looker’s alerting capabilities to notify you when certain thresholds are met, such as a sudden increase in the total size of data in a bucket.

- Set up custom charts for common analysis: For routine FinOps use cases (such as tracking buckets without OLM policies configured or objects past their retention expiration time), you can generate weekly reports to relevant teams for easy actioning.
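As a starting point for a “fast-growing buckets” chart, a query along these lines could feed a dashboard. It is a sketch under stated assumptions: object_attributes_view is assumed to contain one row per object per daily snapshot with a snapshotTime column (not shown elsewhere in this post), and the seven-day window and 20-row limit are arbitrary choices.

```sql
-- Sketch: bucket growth between the latest snapshot and the one seven days earlier.
-- snapshotTime is an assumed column name; verify it in your dataset schema.
WITH bucket_daily AS (
  SELECT
    bucket,
    DATE(snapshotTime) AS snapshot_date,
    SUM(size) AS total_size_bytes
  FROM
    object_attributes_view
  GROUP BY
    bucket, snapshot_date
),
latest AS (
  SELECT MAX(snapshot_date) AS latest_date
  FROM bucket_daily
)
SELECT
  cur.bucket,
  prev.total_size_bytes AS size_7_days_ago_bytes,
  cur.total_size_bytes AS current_size_bytes,
  cur.total_size_bytes - IFNULL(prev.total_size_bytes, 0) AS growth_bytes
FROM
  bucket_daily AS cur
CROSS JOIN
  latest
LEFT JOIN
  bucket_daily AS prev
ON
  prev.bucket = cur.bucket
  AND prev.snapshot_date = DATE_SUB(latest.latest_date, INTERVAL 7 DAY)
WHERE
  cur.snapshot_date = latest.latest_date
ORDER BY
  growth_bytes DESC
LIMIT 20;
```

Buckets that did not exist seven days earlier show a NULL previous size, and their full current size counts as growth.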

You can also use our template here to connect to your dataset for quick analysis or you can create your own custom dashboard.

Get started

Configure Storage Intelligence and create your dataset to start analyzing your storage estate via a 30-day trial today. Please refer to our pricing documentation for cost details.

Set up your dataset to a scope of your choosing and start analyzing your data:

- Configure a set of Looker Studio dashboards based on team or departmental usage for monthly analysis by the central FinOps team.

- Use BigQuery for ad-hoc analysis and to retrieve specific insights.

- For a complete cost picture, you can integrate your Storage Insights dataset with your Google Cloud billing export to BigQuery. Your billing export provides granular details on all your Google Cloud service costs, including Cloud Storage.
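On the billing side, a first step might look like the sketch below, which summarizes Cloud Storage cost per project and SKU over the last 30 days. The table name is a hypothetical placeholder that you would replace with your own billing export table, and the column names assume the standard usage cost export schema; the per-project totals can then be compared with the storage footprint reported by your Storage Insights dataset.

```sql
-- Sketch: Cloud Storage cost per project and SKU for the last 30 days.
-- The table name below is a hypothetical placeholder for your billing export table.
SELECT
  project.id AS project_id,
  sku.description AS sku_description,
  SUM(cost) AS total_cost
FROM
  `my-project.my_billing_dataset.gcp_billing_export_v1_XXXXXX`
WHERE
  service.description = 'Cloud Storage'
  AND usage_start_time >= TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 30 DAY)
GROUP BY
  project_id, sku_description
ORDER BY
  total_cost DESC;
```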
