GCP – Spanner columnar engine: Powering next-generation analytics on operational data

For years, organizations have struggled with the workload conflict between online transaction processing (OLTP) and analytical query processing. OLTP systems such as Spanner are optimized for high-volume, low-latency transactions, and use row-oriented storage that’s efficient for individual record access. Analytical workloads, conversely, require rapid aggregations and scans across large datasets. These tasks are traditionally handled by separate data warehouses that employ columnar storage and incoming data pipelines from transaction systems. Separating OLTP and analytical workflows requires periodic data transfers, which often leads to stale data, complex ETL pipelines, and operational overhead.

Today, we’re thrilled to announce Spanner columnar engine, which brings new analytical capabilities directly to Spanner databases. Just as AlloyDB’s columnar engine enhanced PostgreSQL analytics, Spanner’s new columnar engine lets you analyze vast amounts of operational data in real-time, all while maintaining Spanner’s global consistency, high availability, and strong transactional guarantees — and without impacting transactional workloads.

The power of Spanner columnar engine helps organizations, such as Verisoul.ai, eliminate the problem of data silos typically found when combining high volume transaction systems with fast analytics. “Detecting fraud in real time is only half the story—showing customers the ‘why’ helps them act faster and turn trust into measurable ROI,” says Raine Scott and Niel Ketkar, founders of Verisoul.ai, a machine-learning platform that stops fake users and fraud. “Spanner’s new columnar engine allows high-velocity transactional writes and rich analytics in one place, eliminating data copies and replication lag so customers get instant answers.”

Columnar storage meets vectorized execution

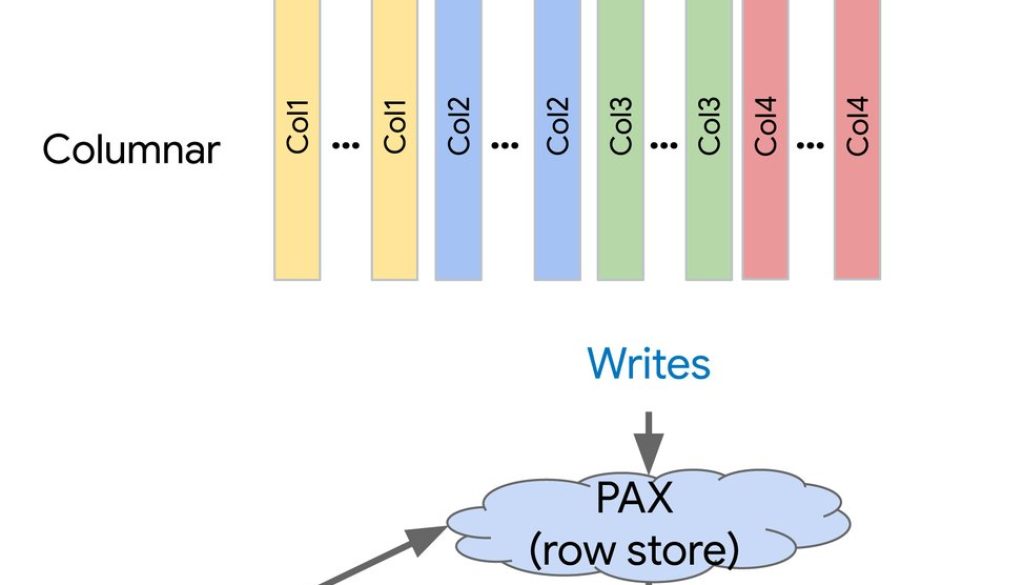

Figure: Spanner columnar engine architecture

The heart of the Spanner columnar engine is its innovative architecture, which combines columnar storage with vectorized query execution.

Columnar Storage in Spanner: Hybrid Architecture

Unlike traditional row-oriented storage, where an entire row is stored contiguously, columnar storage stores data column by column. This offers several advantages for analytical workloads:

-

Reduced I/O: Analytical queries often access only a few columns at a time. With columnar storage, only the relevant columns need to be read from disk, significantly reducing I/O operations.

-

Improved compression: Data within a single column is typically of the same data type and often exhibits similar storage patterns, leading to much higher compression ratios. This means more data can fit in memory and fewer bytes need to be read.

-

Efficient scans: When scanning a column, consecutive values can be processed together, for more efficient data processing.

Spanner columnar engine integrates a columnar format alongside its existing row-oriented storage. This unified transactional and analytical processing design allows Spanner to maintain its OLTP performance while accelerating analytical queries up to 200X on your live operational data.

Vectorized execution: turbocharging your queries

To complement columnar storage, the columnar engine makes use of Spanner’s vectorized execution capabilities. While traditional query engines process data tuple-by-tuple (row by row), a vectorized engine processes data in batches (vectors) of rows. This approach dramatically improves CPU utilization, with:

-

Reduced function call overhead: Instead of calling a function for each individual row, vectorized engines call functions once for an entire batch, significantly reducing overhead.

-

Optimized memory access: Vectorized processing often results in more cache-friendly memory access patterns, further boosting performance.

The combination of columnar storage and vectorized execution means that analytical queries on Spanner can run orders of magnitude faster, allowing for real-time insights on your global-scale data.

Better with BigQuery: Accelerating federated queries

The Spanner columnar engine takes its integration with Google’s Data Cloud ecosystem a step further, specifically enhancing integrations between Spanner and BigQuery. For enterprises that leverage BigQuery for data warehousing and analytics, federating queries directly to Spanner has always been a valuable capability. Now, with the Spanner columnar engine, this integration becomes even more potent, by delivering faster insights on operational data.

Data Boost, Spanner’s fully managed, elastically scalable compute service for analytical workloads, is at the forefront of this acceleration. When BigQuery issues a federated query to Spanner, and that query can benefit from columnar scans and vectorized execution, Data Boost automatically leverages the Spanner columnar engine. This provides:

-

Faster analytical insights: Complex analytical queries initiated from BigQuery that target your Spanner data execute significantly faster, bringing near-real-time operational data into your broader analytical landscape.

-

Reduced impact on OLTP: Data Boost helps ensure that analytical workloads are offloaded from your primary Spanner compute resources, preventing impact on transactional operations.

-

Simplified data architecture: You can get the best of both worlds – Spanner’s transactional consistency and BigQuery’s analytical prowess – without the need for complex ETL pipelines to duplicate data.

This integration empowers data analysts and scientists to combine Spanner’s live operational data with other datasets in BigQuery for richer, more timely insights and decision-making.

Columnar engine in action: Accelerating your analytical queries

Let’s look at some sample queries that should see significant acceleration with the Spanner columnar engine. These types of queries, common in analytical and graph workloads, benefit from columnar scans and vectorized processing.

Scenario: Imagine a large e-commerce database; for demonstration purposes, we’ll use the same schema as the TPC-H benchmark.

Query 1: Revenue from discounted shipments in a given Year

SQL

- code_block

- <ListValue: [StructValue([(‘code’, ‘@{scan_method=columnar}rnSELECTrn sum(l.l_extendedprice * l.l_discount) AS revenuernFROMrn lineitem lrnWHERErn l.l_shipdate >= date “1994-01-01″rn AND l.l_shipdate < date_add(date “1994-01-01”, INTERVAL 1 year)rn AND l.l_discount BETWEEN 0.08 – 0.01 AND 0.08 + 0.01rn AND l.l_quantity < 25;’), (‘language’, ‘lang-sql’), (‘caption’, <wagtail.rich_text.RichText object at 0x3e333c7ada60>)])]>

Acceleration: This query heavily benefits from scanning only the l_shipdate, l_extendedprice, l_discount, and l_quantity columns from the lineitem table. Vectorized execution rapidly applies the date, discount, and quantity filters to identify qualifying rows.

Query 2: Total quantity of non-discounted items

SQL

- code_block

- <ListValue: [StructValue([(‘code’, ‘@{scan_method=columnar}rnSELECTrn sum(l_quantity)rnFROMrn lineitemrnWHERErn l_discount = 0;’), (‘language’, ‘lang-sql’), (‘caption’, <wagtail.rich_text.RichText object at 0x3e333c325250>)])]>

Acceleration: This query heavily benefits from scanning only the l_discount and l_quantity columns from the lineitem table. Vectorized execution rapidly applies the equality filter (l_discount = 0) to identify matching rows.

Query 3: Item count and discount range for specific tax brackets..

SQL

- code_block

- <ListValue: [StructValue([(‘code’, ‘@{scan_method=columnar}rnSELECTrn count(*),rn min(l_discount),rn max(l_discount)rnFROMrn lineitemrnWHERErn l_tax IN (0.01, 0.02);’), (‘language’, ”), (‘caption’, <wagtail.rich_text.RichText object at 0x3e333c325340>)])]>

Acceleration: This query benefits heavily from scanning only the l_tax and l_discount columns from the lineitem table. Vectorized execution rapidly applies the IN filter on the l_tax column to identify all matching rows.

Query 4: Scan friend relationships to find the N most connected people in the graph using Spanner Graph

GQL

- code_block

- <ListValue: [StructValue([(‘code’, ‘@{scan_method=columnar}rnGRAPH social_graphrnMATCH (p:Person)-[k:Knows]->(:Person)rnRETURN COUNT(k) AS friend_count GROUP BY p ORDER BY friend_count DESC LIMIT 10;’), (‘language’, ”), (‘caption’, <wagtail.rich_text.RichText object at 0x3e334f6545b0>)])]>

Acceleration:This query benefits heavily from scanning subgraphs and by loading only relevant columns from the graph.

Query 5: Perform a K-nearest neighbor vector similarity search to retrieve the top 10 most semantically similar embeddings with perfect recall

GQL

- code_block

- <ListValue: [StructValue([(‘code’, ‘@{scan_method=columnar}rnSELECT e.Id as key, COSINE_DISTANCE(@vector_param, e.Embedding) as distancernFROM Embeddings ernORDER BY distance LIMIT 10;’), (‘language’, ”), (‘caption’, <wagtail.rich_text.RichText object at 0x3e334f654e20>)])]>

Acceleration:This query benefits heavily from scanning contiguously stored vector embeddings and by loading only relevant columns from the table.

Get started with Spanner columnar engine today!

The Spanner columnar engine is designed for businesses looking to unlock faster, deeper, real-time insights from their operational data without compromising Spanner’s foundational strengths. We are incredibly excited about the possibilities this opens up for developers and data analysts alike. We invite you to be among the first to try the Spanner columnar engine. Request access to the Preview of Spanner columnar engine today by signing up at bit.ly/spannercolumnar. We look forward to seeing what you build!

Read More for the details.