GCP – Scaling high-performance inference cost-effectively

At Google Cloud Next 2025, we announced new inference capabilities with GKE Inference Gateway, including support for vLLM on TPUs, Ironwood TPUs, and Anywhere Cache.

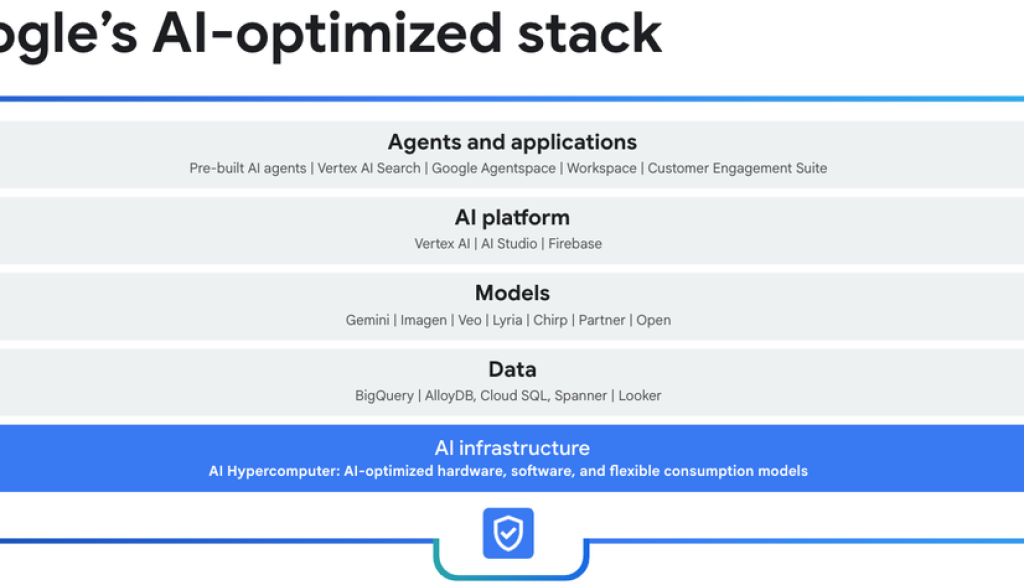

Our inference solution is based on AI Hypercomputer, a system built on our experience running models like Gemini and Veo 3, which serve over 980 trillion tokens a month to more than 450 million users. AI Hypercomputer services provide intelligent and optimized inferencing, including resource management, workload optimization and routing, and advanced storage for scale and performance, all co-designed to work together with industry-leading GPU and TPU accelerators.

Today, GKE Inference Gateway is generally available, and we are launching new capabilities that deliver even more value. This underscores our commitment to helping companies deliver more intelligence, with increased performance and optimized costs for both training and serving.

Let’s take a look at the new capabilities we are announcing.

Efficient model serving and load balancing

A user’s experience of a generative AI application depends heavily on both a fast initial response to a request and smooth streaming of the response through to completion. With these new features, we’ve improved time-to-first-token (TTFT) and time-per-output-token (TPOT) on AI Hypercomputer. TTFT is determined by the prefill phase, a compute-bound process where a full pass through the model creates a key-value (KV) cache. TPOT is determined by the decode phase, a memory-bound process where tokens are generated using the KV cache from the prefill phase.
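To make the two metrics concrete, here is a minimal sketch of how they could be computed from client-side timestamps of a streamed response. The `StreamTiming` type and the sample timestamps are illustrative assumptions, not part of any Google Cloud API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StreamTiming:
    """Hypothetical record of one streamed response (times in seconds)."""
    request_sent: float        # when the client sent the request
    token_times: List[float]   # arrival time of each output token, in order


def ttft(t: StreamTiming) -> float:
    """Time to first token: the wait dominated by the prefill phase."""
    return t.token_times[0] - t.request_sent


def tpot(t: StreamTiming) -> float:
    """Mean time per output token over the decode phase (after the first token)."""
    if len(t.token_times) < 2:
        raise ValueError("need at least two tokens to measure TPOT")
    decode_span = t.token_times[-1] - t.token_times[0]
    return decode_span / (len(t.token_times) - 1)


# Made-up timestamps: first token after 0.5 s, then one token every 0.25 s.
timing = StreamTiming(request_sent=0.0, token_times=[0.5, 0.75, 1.0, 1.25, 1.5])
print(f"TTFT={ttft(timing):.2f}s TPOT={tpot(timing):.2f}s")
```

Measuring TPOT only over the tokens after the first keeps the prefill wait out of the decode figure, which matches the split between the two phases described above.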

We improve both of these in a variety of ways. Generative AI applications like chatbots and code generation often reuse the same prefix in API calls. To optimize for this, GKE Inference Gateway now offers prefix-aware load balancing. This new, generally available feature improves TTFT by up to 96% at peak throughput for prefix-heavy workloads compared with other clouds. It does so by routing requests that share a prefix to the same accelerators, while balancing the load to prevent hotspots and latency spikes.

Consider a chatbot for a financial services company that helps users with account inquiries. A user starts a conversation to ask about a recent credit card transaction. Without prefix-aware routing, when the user asks follow-up questions, such as the date of the charge or the confirmation number, the LLM has to re-read and re-process the entire conversation so far before it can answer. Recomputing the prefill phase is inefficient and adds unnecessary latency, so the user experiences delays between each question. With prefix-aware routing, the system reuses the data from the initial query by routing the request back to the accelerator that already holds the matching KV cache. This skips the prefill work for the shared context, so only the new tokens need to be processed and the model can answer almost instantly. Less computation also means fewer accelerators for the same workload, providing significant cost savings.
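The routing idea can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the algorithm GKE Inference Gateway actually uses: the `PrefixAwareRouter` class, the character-based prefix key, and the `max_skew` threshold are all hypothetical.

```python
import hashlib
from typing import Dict, List


class PrefixAwareRouter:
    """Toy router: send requests with the same prefix to the same replica,
    unless that replica is much busier than the least-loaded one."""

    def __init__(self, replicas: List[str], prefix_chars: int = 64, max_skew: int = 4):
        self.load: Dict[str, int] = {r: 0 for r in replicas}  # in-flight requests
        self.owner: Dict[str, str] = {}                       # prefix hash -> replica
        self.prefix_chars = prefix_chars
        self.max_skew = max_skew

    def _key(self, prompt: str) -> str:
        # Assumption: the first N characters stand in for the shared prefix.
        return hashlib.sha256(prompt[: self.prefix_chars].encode()).hexdigest()

    def route(self, prompt: str) -> str:
        key = self._key(prompt)
        least = min(self.load, key=self.load.get)
        target = self.owner.get(key)
        # Prefer the replica that likely holds the cached prefix; fall back to
        # the least-loaded replica if there is none or it has become a hotspot.
        if target is None or self.load[target] - self.load[least] > self.max_skew:
            target = least
            self.owner[key] = target
        self.load[target] += 1
        return target

    def done(self, replica: str) -> None:
        """Call when a request finishes so load tracking stays accurate."""
        self.load[replica] -= 1


router = PrefixAwareRouter(["replica-a", "replica-b"])
context = "You are a banking assistant. " * 3  # shared prefix, longer than 64 chars
first = router.route(context + "What was this charge?")
second = router.route(context + "What date was it made?")
third = router.route("An unrelated prompt with a different prefix")
print(first, second, third)
```

The two banking questions land on the same replica because they share a prefix, while the unrelated prompt goes to the idle replica. A production router would key on tokens or KV-cache blocks rather than raw characters.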

To further optimize inference performance, you can now also run disaggregated serving using AI Hypercomputer, which can improve throughput by 60%. Enhancements in GKE Inference Gateway, llm-d, and vLLM work together to enable dynamic selection of prefill and decode nodes based on query size. This significantly improves both TTFT and TPOT by increasing the utilization of compute and memory resources at scale.

Take the example of an AI-based code completion application, which needs to provide low-latency responses to maintain interactivity. When a developer submits a completion request, the application must first process the input codebase; this is the prefill phase. Next, the application generates a code suggestion token by token; this is the decode phase. These tasks place dramatically different demands on accelerator resources: prefill is compute-intensive, while decode is memory-intensive. Running both phases on a single node means neither is fully optimized, which leads to higher latency and poor response times. Disaggregated serving assigns these phases to separate nodes, allowing for independent scaling and optimization of each phase. For example, if your developers submit many requests based on large codebases, you can scale the prefill nodes. This improves latency and throughput, making the entire system more efficient.
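The independent-scaling point can be illustrated with a small capacity calculation. This is a sketch under stated assumptions: the `plan_pools` helper and every throughput figure below are hypothetical, and real sizing would come from benchmarks rather than a single division.

```python
import math
from dataclasses import dataclass


@dataclass
class PoolPlan:
    prefill_nodes: int
    decode_nodes: int


def plan_pools(req_per_s: float, avg_prompt_tokens: int, avg_output_tokens: int,
               prefill_tokens_per_s_per_node: float,
               decode_tokens_per_s_per_node: float) -> PoolPlan:
    """Size the prefill and decode pools separately from their token demand."""
    prefill_demand = req_per_s * avg_prompt_tokens   # prompt tokens/s to prefill
    decode_demand = req_per_s * avg_output_tokens    # output tokens/s to decode
    return PoolPlan(
        prefill_nodes=max(1, math.ceil(prefill_demand / prefill_tokens_per_s_per_node)),
        decode_nodes=max(1, math.ceil(decode_demand / decode_tokens_per_s_per_node)),
    )


# Hypothetical workloads: same request rate and output length, larger prompts.
small = plan_pools(10, 2_000, 200, 20_000, 2_000)
large = plan_pools(10, 8_000, 200, 20_000, 2_000)
print(small, large)
```

With larger codebases in the prompt, only the prefill pool grows; the decode pool stays the same size, which is the saving that a single combined pool cannot offer.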

Prefix-aware routing optimizes the reuse of conversational context, and disaggregated serving separates the computational demands of prefill and decode. We have also addressed the more fundamental challenge of getting these massive models running in the first place. As generative AI models grow to hundreds of gigabytes in size, they can often take over ten minutes to load, leading to slow startup and scaling. To solve this, we now support the Run:ai model streamer with Google Cloud Storage and Anywhere Cache for vLLM, with support for SGLang coming soon. This enables 5.4 GiB/s of direct throughput to accelerator memory and reduces model load times by over 4.9x, resulting in a better end-user experience.

vLLM Model Load Time
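As a back-of-the-envelope illustration of what the 5.4 GiB/s throughput figure means, the sketch below computes a lower bound on transfer time for a given checkpoint size. The 270 GiB model size is a hypothetical example, and the calculation ignores every other startup cost (container start, weight initialization, warm-up), so it is not a prediction of end-to-end load time.

```python
GIB = 1024 ** 3  # bytes per GiB


def min_transfer_seconds(model_bytes: int, throughput_gib_per_s: float) -> float:
    """Lower bound on the time to stream model weights at a sustained throughput."""
    return model_bytes / (throughput_gib_per_s * GIB)


model_bytes = 270 * GIB                            # hypothetical checkpoint size
seconds = min_transfer_seconds(model_bytes, 5.4)   # throughput figure from the text
print(f"about {seconds:.0f} s of pure transfer time")
```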

Get started faster with data-driven decisions

Finding the ideal technology stack for serving AI models is a significant industry challenge. Historically, customers have had to navigate rapidly evolving technologies, the switching costs that impact hardware choices, and hundreds of thousands of possible deployment architectures. This inherent complexity makes it difficult to quickly achieve the best price-performance for your inference environment.

The GKE Inference Quickstart, now generally available, can save you time, improve performance, and reduce costs when deploying AI workloads. It suggests the best accelerators, model server, and scaling configuration for your AI/ML inference applications. New improvements to GKE Inference Quickstart include cost insights and benchmarked performance best practices, so you can easily compare costs and understand latency profiles, saving you months on evaluation and qualification.

GKE Inference Quickstart’s recommendations are grounded in a living repository of model and accelerator performance data that we generate by benchmarking our GPU and TPU accelerators against leading large language models like Llama, Mixtral, and Gemma more than 100 times per week. This extensive performance data is then enriched with the same storage, network, and software optimizations that power AI inferencing on Google’s global-scale services like Gemini, Search, and YouTube.

Let’s say you’re tasked with deploying a new, public-facing chatbot. The goal is to provide fast, high-quality responses at the lowest cost. Until now, finding the optimal, most cost-effective way to deploy AI models was a significant challenge. Developers and engineers had to rely on a painstaking process of trial and error. This involved manually benchmarking countless combinations of models, accelerators, and serving architectures, and logging all the data in a spreadsheet to calculate the cost per query for each scenario. This manual project could take weeks or even months, was prone to human error, and offered no guarantee that the best possible solution was ever found.
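The spreadsheet arithmetic behind a cost-per-query figure is simple to sketch. The hourly price and throughput below are made-up numbers for illustration only, not Google Cloud prices or benchmark results, and the function names are hypothetical.

```python
def cost_per_million_output_tokens(hourly_price_usd: float,
                                   output_tokens_per_s: float) -> float:
    """Cost of one million output tokens for a fully utilized accelerator."""
    tokens_per_hour = output_tokens_per_s * 3600
    return hourly_price_usd / tokens_per_hour * 1_000_000


def cost_per_query(usd_per_million_tokens: float, output_tokens_per_query: int) -> float:
    """Cost of one query, counting output tokens only."""
    return usd_per_million_tokens * output_tokens_per_query / 1_000_000


# Hypothetical scenario: $3.60/hour accelerator sustaining 2,000 output tokens/s.
usd_per_m = cost_per_million_output_tokens(3.60, 2_000)
per_query = cost_per_query(usd_per_m, output_tokens_per_query=400)
print(f"${usd_per_m:.2f} per million output tokens, ${per_query:.4f} per query")
```

Repeating this for every model, accelerator, and serving configuration is what made the manual process slow: each row also needs its own measured throughput and latency.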

Using Google Colab and the built-in optimizations in the Google Cloud console, GKE Inference Quickstart lets you choose the most cost-effective accelerators for, say, serving a Llama 3-based chatbot application that needs a TTFT of less than 500 ms. The recommendations come as deployable manifests, making it easy to choose a technology stack that you can provision from GKE in your Google Cloud environment. With GKE Inference Quickstart, your evaluation and qualification effort goes from months to days.
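Conceptually, a recommendation like this is a constrained selection over benchmark data: filter to the configurations that meet the latency target, then pick the cheapest. The sketch below shows that logic only; the `Benchmark` type, the accelerator names, and all numbers are hypothetical and do not reflect GKE Inference Quickstart's data or API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Benchmark:
    accelerator: str
    ttft_ms: float
    usd_per_million_output_tokens: float


def cheapest_within_ttft(rows: List[Benchmark], max_ttft_ms: float) -> Optional[Benchmark]:
    """Return the lowest-cost row whose TTFT is under the target, or None."""
    eligible = [r for r in rows if r.ttft_ms < max_ttft_ms]
    return min(eligible, key=lambda r: r.usd_per_million_output_tokens, default=None)


# Made-up benchmark rows for illustration.
rows = [
    Benchmark("accel-a", ttft_ms=650.0, usd_per_million_output_tokens=0.30),
    Benchmark("accel-b", ttft_ms=420.0, usd_per_million_output_tokens=0.55),
    Benchmark("accel-c", ttft_ms=180.0, usd_per_million_output_tokens=0.90),
]
choice = cheapest_within_ttft(rows, max_ttft_ms=500.0)
print(choice)
```

Here the cheapest option overall misses the 500 ms target, so the selection falls to the cheapest option that meets it.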

Views from the Google Colab that helps the engineer with their evaluation.

Try these new capabilities for yourself. To get started with GKE Inference Quickstart, from the Google Cloud console, go to Kubernetes Engine > AI/ML, and select “+ Deploy Models” near the top of the screen. Use the Filter to select Optimized > Values = True. This shows all of the models that have price/performance optimization available. Once you select a model, you’ll see a slider for selecting latency. The accelerators in the drop-down change to those that can meet the latency you select, and the cost per million output tokens changes based on your selections.

Then, via Google Colab, you can plot and view the price/performance of leading AI models on Google Cloud. Chatbot Arena ratings are integrated to help you determine the best model for your needs based on model size, rating, and price per million tokens. You can also bring your organization’s in-house quality measures into the Colab and join them with Google’s comprehensive benchmarks to make data-driven decisions.

Dedicated to optimizing inference

At Google Cloud, we are committed to helping companies deploy and improve their AI inference workloads at scale. Our focus is on providing a comprehensive platform that delivers unmatched performance and cost-efficiency for serving large language models and other generative AI applications. By leveraging a co-designed stack of industry-leading hardware and software innovations, including the AI Hypercomputer, GKE Inference Gateway, and purpose-built optimizations like prefix-aware routing, disaggregated serving, and model streaming, we ensure that businesses can deliver more intelligence with faster, more responsive user experiences and lower total cost of ownership. Our solutions are designed to address the unique challenges of inference, from model loading times to resource utilization, enabling you to deliver on the promise of generative AI. To learn more and get started, visit our AI Hypercomputer site.
