GCP – Scalable AI starts with storage: Guide to model artifact strategies

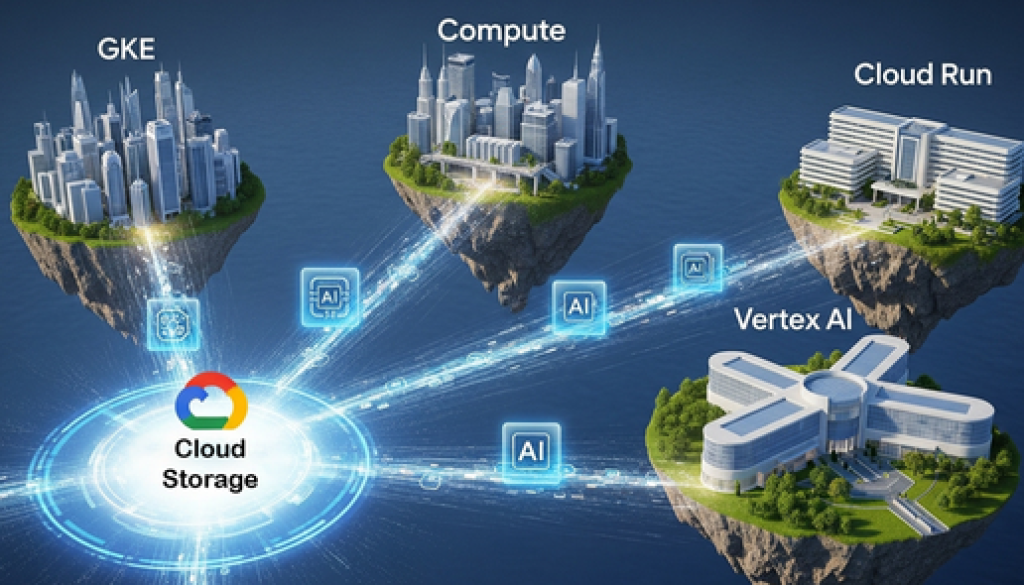

Managing large model artifacts is a common bottleneck in MLOps. Baking models into container images leads to slow, monolithic deployments, and downloading them at startup introduces significant delays. This guide explores a better way: decoupling your models from your code by hosting them in Cloud Storage and accessing them efficiently from GKE and Cloud Run. We’ll compare various loading strategies, from traditional methods to the high-performance Cloud Storage FUSE CSI driver, to help you build a more agile and scalable ML serving platform.

Optimizing the artifact

To optimize the artifact, we recommend that you centralize in Cloud Storage, and then use quantization and cache warming.

Centralizing in Cloud Storage

The most important step toward a scalable ML serving architecture is to treat the model artifact as a first-class citizen, with its own lifecycle, independent of the application code. The best way to do this is to use Cloud Storage as the central, versioned, and secure source of truth for all model assets, such as .safetensors, .gguf, .pkl, or .joblib files.

This architectural pattern does more than just provide a convenient place to store files. It establishes a unified model plane that is logically separate from the compute plane where inference occurs. The model plane is hosted on Cloud Storage, and it handles the governance of the ML asset: its versioning, storage durability, and access control.

The compute plane—be it GKE, Cloud Run, Vertex AI, or even a local development machine—handles execution: loading the model into GPU memory and processing inference requests. This separation provides immense strategic flexibility. The same versioned model artifact in a Cloud Storage bucket can be consumed by a GKE cluster for a high-throughput batch prediction job; by a Cloud Run service for bursty, real-time inference; and by a fully managed Vertex AI Endpoint for ease of use, all without duplicating the underlying asset. This storage method prevents model sprawl and ensures that all parts of the organization are working from a single, auditable source.

To implement this architecture effectively, you need a structured approach to artifact organization. Best practices suggest the use of a Cloud Storage bucket structure that facilitates robust MLOps workflows. This approach includes using clear naming conventions that incorporate model names and versions (for example, an object prefix such as gs://my-model-artifacts/gemma-2b/v1.0/) and separate prefixes or even distinct buckets for different environments (such as dev, staging, and prod).
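As a minimal sketch of such a naming convention, the helper below builds versioned gs:// paths from a bucket, an optional environment prefix, a model name, and a version. The class name ModelArtifact, its fields, and the choice to put the environment first in the prefix are illustrative assumptions, not part of any Google Cloud API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelArtifact:
    """Hypothetical helper that encodes one naming convention for model paths."""

    bucket: str
    model: str
    version: str
    env: str = ""  # optional environment prefix such as "dev" or "prod"

    def uri(self, filename: str = "") -> str:
        # Join only the non-empty parts so an unset env adds no extra slash.
        parts = [p.strip("/") for p in (self.env, self.model, self.version, filename) if p]
        path = "/".join(parts)
        # Without a filename, return a directory-style prefix ending in "/".
        return f"gs://{self.bucket}/{path}" if filename else f"gs://{self.bucket}/{path}/"


artifact = ModelArtifact(bucket="my-model-artifacts", model="gemma-2b", version="v1.0")
print(artifact.uri())
print(artifact.uri("model.safetensors"))
```

Keeping path construction in one place like this makes it harder for pipelines and serving code to drift apart on where a given model version lives.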

With this approach, access control should be managed with precision using Identity and Access Management (IAM) policies. For example, CI/CD service accounts for production deployments should only have read access to the production models bucket, data scientists might have write access only to development or experimentation buckets, and automated tests should gate promotion of development images to production pipelines.

You can also make specific objects or entire buckets publicly readable through IAM roles like roles/storage.objectViewer assigned to the allUsers principal, though this should be used with caution. This disciplined approach to storage and governance transforms Cloud Storage from a simple file repository into the foundational layer of a scalable and secure MLOps ecosystem.

That scalability is critical for performance, especially when serving large models. Model loading is a bursty, high-throughput workload, with up to thousands of GPUs trying to load the same model weights simultaneously and as quickly as possible. Anywhere Cache is the recommended option for this scenario and can provide up to 2.5 TB/s of bandwidth at lower latency. As a managed, SSD-backed caching layer for Cloud Storage, Anywhere Cache colocates data with your compute resources. It transparently serves read requests from a high-speed local cache, which benefits any Cloud Storage client in the zone (including GKE, Compute Engine, and Vertex AI) and dramatically reduces model load times.

Quantization

Quantization is the process of reducing the precision of a model’s weights (for example, from 32-bit floating point to 4-bit integer). From a storage perspective, the size of a model’s weights is a function of its parameters and their precision (precision × number of parameters = model size). By reducing the precision, you can dramatically shrink the model’s storage footprint.
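To make the size formula concrete, here is a small sketch that applies it to a hypothetical 7-billion-parameter model at several precisions. The parameter count is an assumption chosen for illustration, and real quantized files are usually somewhat larger than this estimate because formats store extra data such as scaling factors and metadata.

```python
def model_size_gb(num_parameters: int, bits_per_weight: int) -> float:
    """Estimate weight storage in decimal gigabytes: parameters x precision."""
    total_bytes = num_parameters * bits_per_weight / 8
    return total_bytes / 1e9


PARAMS = 7_000_000_000  # hypothetical 7B-parameter model

for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit: {model_size_gb(PARAMS, bits):.1f} GB")
```

Under these assumptions, moving from 32-bit to 4-bit weights cuts the estimate from 28 GB to 3.5 GB, which is the eightfold reduction that the formula predicts.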

Quantization has two major benefits:

- Smaller model size: A quantized model takes up significantly less disk space, leading to faster downloads and less memory consumption.
- Faster inference: Many modern CPUs and GPUs can perform integer math much faster than floating-point math.

For the best results, use modern, quantization-aware model formats like GGUF, which are designed for fast loading and efficient inference.

Cache warming

For many LLMs, the initial processing of a prompt is the most computationally expensive part. You can pre-process common prompts or a representative sample of your data during the build process and save the resulting cache state. Your application can then load this warmed cache at startup, allowing it to skip the expensive initial processing for common requests. Serving frameworks like vLLM provide capabilities like Automatic Prefix Caching that support this.

Loading the artifact

Choosing the right model loading strategy is a critical architectural decision. Here’s a breakdown of the most common approaches:

- Cloud Storage FUSE CSI driver: The recommended approach for most modern ML serving workloads on GKE is to use the Cloud Storage FUSE CSI driver. This approach mounts a Cloud Storage bucket directly into the pod’s filesystem as a volume, so the application can read the model as if it were a local file. This implementation provides near-instantaneous pod startup and fully decouples the model from the code.
- Init container download: A more flexible approach is to use a Kubernetes init container to download the model from Cloud Storage to a shared emptyDir volume before the main application starts. This implementation decouples the model from the image, so that you can update the model without rebuilding the container. However, this implementation can significantly increase pod startup time and add complexity to your deployment. This approach is a good option for medium-sized models where the startup delay is acceptable.
- Concurrent download: Similar to the init container, you can download the model concurrently within your application. This approach can be faster than a simple gsutil cp command because it allows for parallelization. A prime example of this is the vLLM Run:ai Model Streamer, which you can enable when you use the vLLM serving framework. This feature parallelizes the download of large model files by splitting them into chunks and fetching them concurrently, which significantly accelerates the initial load.
- Baking into the image: The simplest approach is to copy the model directly into the container image during the docker build process. This approach makes the container self-contained and portable, but it also creates very large images, which can be slow to build and transfer. This tight coupling of the model and code also means that any model update requires a full image rebuild. This strategy is best for small models or quick prototypes where simplicity is the top priority.
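The chunk-and-fetch idea behind the concurrent download strategy can be sketched in a few lines. This is not how the Run:ai Model Streamer is implemented; it is a simplified illustration that splits a file into byte ranges and fetches them on a thread pool. The fetch_range callable is a hypothetical stand-in for any ranged read, and the demo uses an in-memory byte string instead of a real bucket.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple


def byte_ranges(total_size: int, chunk_size: int) -> List[Tuple[int, int]]:
    """Split [0, total_size) into inclusive (start, end) byte ranges."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [
        (start, min(start + chunk_size, total_size) - 1)
        for start in range(0, total_size, chunk_size)
    ]


def download_concurrently(
    fetch_range: Callable[[int, int], bytes],
    total_size: int,
    chunk_size: int,
    max_workers: int = 8,
) -> bytes:
    """Fetch all ranges in parallel and reassemble them in order."""
    ranges = byte_ranges(total_size, chunk_size)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in input order, so the chunks line up.
        chunks = pool.map(lambda r: fetch_range(r[0], r[1]), ranges)
        return b"".join(chunks)


# Demo with an in-memory "object" standing in for a model file.
fake_object = bytes(range(256)) * 4


def fake_fetch(start: int, end: int) -> bytes:
    return fake_object[start : end + 1]


data = download_concurrently(fake_fetch, len(fake_object), chunk_size=100)
print(len(data), data == fake_object)
```

In a real service, fetch_range could wrap a ranged read from Cloud Storage, and for multi-gigabyte models you would likely write each chunk to its offset in a file rather than joining everything in memory.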

Direct access with Cloud Storage FUSE

The Cloud Storage FUSE CSI driver is a significant development for ML workloads on GKE. It lets you mount a Cloud Storage bucket directly into your pod’s filesystem, so that the objects in the bucket appear as local files. This configuration is accomplished by injecting a sidecar container into your pod that manages the FUSE mount. This setup eliminates the need to copy data, resulting in near-instantaneous pod startup times.

It’s important to note that although the Cloud Storage FUSE CSI driver is compatible with both GKE Standard and Autopilot clusters, Autopilot’s security constraints prevent the use of the SYS_ADMIN capability, which is typically required by FUSE. The CSI driver is designed to work without this privileged access, but it’s a critical consideration when you deploy to Autopilot.

Performance tuning

Out of the box, Cloud Storage FUSE is a convenient way to access your models. But to unlock its full potential for read-heavy inference workloads, you need to tune its caching and prefetching capabilities.

- Parallel downloads: For very large model files, you can enable parallel downloads to accelerate the initial read from Cloud Storage into the local file cache. This is enabled by default when file caching is enabled.
- Metadata caching & prefetching: The first time that you access a file, FUSE needs to get its metadata (like size and permissions) from Cloud Storage. To keep the metadata in memory, you can configure a stat cache. For even better performance, you can enable metadata prefetching, which proactively loads the metadata for all files in a directory when the volume is mounted. You can enable metadata prefetching by setting the metadata-cache:stat-cache-max-size-mb and metadata-cache:ttl-secs options in your mountOptions configuration.

For more information, see the Performance tuning best practices in the Cloud Storage documentation. For an example of a GKE Deployment manifest that mounts a Cloud Storage bucket with performance-tuned FUSE settings, see the sample configuration YAML files.
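As a rough illustration of where these settings live, the sketch below builds a Pod manifest as a Python dictionary and prints it as JSON, which kubectl also accepts. The function name, the pod and volume names, the service account, and the specific option values (such as -1 for unlimited cache sizes) are assumptions for illustration; check the current GKE documentation for the options and defaults that apply to your driver version.

```python
import json


def gcsfuse_pod_manifest(name, image, bucket, service_account, mount_path="/models"):
    """Build a Pod spec that mounts a bucket read-only through the FUSE CSI driver."""
    # Assumed tuning values; -1 is used here to mean "no limit".
    mount_options = ",".join(
        [
            "implicit-dirs",
            "file-cache:max-size-mb:-1",
            "file-cache:enable-parallel-downloads:true",
            "metadata-cache:stat-cache-max-size-mb:-1",
            "metadata-cache:ttl-secs:-1",
        ]
    )
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": name,
            # This annotation asks GKE to inject the FUSE sidecar container.
            "annotations": {"gke-gcsfuse/volumes": "true"},
        },
        "spec": {
            "serviceAccountName": service_account,
            "containers": [
                {
                    "name": "inference-server",
                    "image": image,
                    "volumeMounts": [
                        {"name": "model-volume", "mountPath": mount_path, "readOnly": True}
                    ],
                }
            ],
            "volumes": [
                {
                    "name": "model-volume",
                    "csi": {
                        "driver": "gcsfuse.csi.storage.gke.io",
                        "readOnly": True,
                        "volumeAttributes": {
                            "bucketName": bucket,
                            "mountOptions": mount_options,
                        },
                    },
                }
            ],
        },
    }


manifest = gcsfuse_pod_manifest(
    name="model-server",
    image="gcr.io/my-project/my-ml-app:latest",
    bucket="my-gcs-bucket",
    service_account="model-reader",  # hypothetical Kubernetes service account
)
print(json.dumps(manifest, indent=2))
```

Generating the manifest from code is only one option; the same fields map directly onto a YAML Deployment if you prefer to keep manifests in version control.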

Advanced storage on GKE

Cloud Storage FUSE offers a direct and convenient way to access model artifacts. GKE also provides specialized, high-performance storage solutions designed to eliminate I/O bottlenecks for the most demanding AI/ML workloads. These options, Google Cloud Managed Lustre and Hyperdisk ML, offer alternatives that can provide high performance and stability by using dedicated parallel file and block storage.

Managed Lustre

For the most extreme performance requirements, Google Cloud Managed Lustre provides a fully managed, parallel file system. Managed Lustre is designed for workloads that demand ultra-low, sub-millisecond latency and massive IOPS, such as HPC simulations and AI training and inference jobs. It’s POSIX-compliant, which ensures compatibility with existing applications and workflows.

This service, powered by DDN’s EXAScaler, scales to multiple PBs and streams data up to 1 TB/s, making it ideal for large-scale AI jobs that need to feed hungry GPUs or TPUs. It’s intended for high-throughput data access rather than long-term storage archiving. Although its primary use case is persistent storage for training data and checkpoints, it can handle millions of small files and random reads with extremely low latency and high throughput. It’s therefore a powerful tool for complex inference pipelines that might need to read or write many intermediate files.

To use Managed Lustre with GKE, you first enable the Managed Lustre CSI driver on your GKE cluster. Then, you define a StorageClass resource that references the driver and a PersistentVolumeClaim request to either dynamically provision a new Lustre instance or connect to an existing one. Finally, you mount the PersistentVolumeClaim as a volume in your pods, which lets them access the high-throughput, low-latency parallel file system.

Hyperdisk ML

Hyperdisk ML is a network block storage option that’s purpose-built for AI/ML workloads, particularly for accelerating the loading of static data like model weights. Unlike Cloud Storage FUSE, which provides a file system interface to an object store, Hyperdisk ML provides a high-performance block device that can be pre-loaded, or hydrated, with model artifacts from Cloud Storage.

Its standout feature for inference serving is its support for READ_ONLY_MANY access, which allows a single Hyperdisk ML volume to be attached as a read-only device to up to 2,500 GKE nodes concurrently. In this architecture, every pod can access the same centralized, high-performance copy of the model artifact without duplication. You can therefore use it to scale out stateless inference services that provide high throughput with smaller, terabyte-sized volumes. Note that because a Hyperdisk ML volume is read-only, each model update requires an operational process for publishing the new weights on a volume.

To integrate Hyperdisk ML, you first create a Hyperdisk ML volume and populate it with your model artifacts from Cloud Storage. Then you define a StorageClass resource and a PersistentVolumeClaim request in your GKE cluster to make the volume available to your pods. Finally, you mount the PersistentVolumeClaim as a volume in your Deployment manifest.

Serving the artifact on Cloud Run

Cloud Run also supports mounting Cloud Storage buckets as volumes, which makes it a viable platform for serving ML models, especially with the addition of GPU support. You can configure a Cloud Storage volume mount directly in your Cloud Run service definition. This implementation provides a simple and effective way to give your serverless application access to the models that are stored in Cloud Storage.

Here is an example of how to mount a Cloud Storage bucket as a volume in a Cloud Run service by using the gcloud command-line tool:

```
gcloud run deploy my-ml-service \
  --image gcr.io/my-project/my-ml-app:latest \
  --add-volume=name=model-volume,type=cloud-storage,bucket=my-gcs-bucket \
  --add-volume-mount=volume=model-volume,mount-path=/models
```

Automating the artifact lifecycle

To automate the artifact lifecycle, you build an ingestion pipeline that includes a scripted Cloud Run job, and then you stream directly to Cloud Storage.

Building an ingestion pipeline

For a production environment, you need an automated, repeatable process for ingesting models, which you can build by using a Cloud Run job. The core of this pipeline is a Cloud Run job that runs a containerized script. This job can be triggered manually or on a schedule to create a robust, serverless pipeline for transferring models from Hugging Face into your Cloud Storage bucket.

Streaming directly to Cloud Storage

Instead of downloading the entire model to the Cloud Run job’s local disk before uploading it to Cloud Storage, we can stream it directly. The obstore library is perfect for this. It lets you treat a Hugging Face repository and a Cloud Storage bucket as object stores and stream data between them asynchronously. This is highly efficient, especially for very large models, because it minimizes local disk usage and maximizes network throughput.

Here is a simplified Python snippet that shows the core logic of streaming a file from Hugging Face to Cloud Storage by using the obstore library:

```python
import asyncio
import posixpath
from urllib.parse import urlparse

from huggingface_hub import hf_hub_url
import obstore as obs
from obstore.store import GCSStore, HTTPStore


async def stream_file_to_gcs(file_name, hf_repo_id, gcs_bucket_name, gcs_path_prefix):
    """Streams a file from a Hugging Face repo directly to Cloud Storage."""

    # 1. Configure the source (Hugging Face) and destination (Cloud Storage) stores
    http_store = HTTPStore.from_url("https://huggingface.co")
    gcs_store = GCSStore(bucket=gcs_bucket_name)

    # 2. Get the full download URL for the file
    full_download_url = hf_hub_url(repo_id=hf_repo_id, filename=file_name)
    download_path = urlparse(full_download_url).path

    # 3. Define the destination path in Cloud Storage
    #    (posixpath keeps "/" separators regardless of the host OS)
    gcs_destination_path = posixpath.join(gcs_path_prefix, file_name)

    # 4. Get the download stream from Hugging Face
    streaming_response = await obs.get_async(http_store, download_path)

    # 5. Stream the file to Cloud Storage
    await obs.put_async(gcs_store, gcs_destination_path, streaming_response)

    print(f"Successfully streamed '{file_name}' to Cloud Storage.")


# Example usage:
# asyncio.run(stream_file_to_gcs("model.safetensors", "google/gemma-2b", "my-gcs-bucket", "gemma-2b-model/"))
```
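A model repository usually contains several files, so an ingestion job typically runs a per-file transfer many times. The sketch below shows one way to do that with bounded concurrency and per-file error reporting. The ingest_files function, its transfer parameter, and the file names in the demo are hypothetical; transfer is assumed to be any coroutine that moves one file, such as a wrapper around the streaming function above.

```python
import asyncio
from typing import Awaitable, Callable, Dict, Iterable


async def ingest_files(
    file_names: Iterable[str],
    transfer: Callable[[str], Awaitable[None]],
    max_concurrency: int = 4,
) -> Dict[str, str]:
    """Run `transfer` for each file, at most `max_concurrency` at a time."""
    semaphore = asyncio.Semaphore(max_concurrency)
    results: Dict[str, str] = {}

    async def run_one(name: str) -> None:
        async with semaphore:
            try:
                await transfer(name)
                results[name] = "ok"
            except Exception as exc:
                # Record the failure and keep going with the other files.
                results[name] = f"failed: {exc}"

    await asyncio.gather(*(run_one(name) for name in file_names))
    return results


async def fake_transfer(name: str) -> None:
    """Stand-in for a real per-file transfer; fails on one file for the demo."""
    await asyncio.sleep(0)
    if name == "missing.bin":
        raise FileNotFoundError(name)


report = asyncio.run(
    ingest_files(["config.json", "model.safetensors", "missing.bin"], fake_transfer)
)
print(report)
```

Capping concurrency keeps a single job from saturating its network or memory budget, and collecting results per file lets the job exit with a clear summary instead of stopping at the first error.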

Conclusion

By moving your model artifacts out of your container images and into a centralized Cloud Storage bucket, you gain a tremendous amount of flexibility and agility. This decoupled approach simplifies your CI/CD pipeline, accelerates deployments, and lets you manage your code and models independently.

For the most demanding ML workloads on GKE, the Cloud Storage FUSE CSI driver is an excellent choice, providing direct, high-performance access to your models without a time-consuming copy step. For even greater performance, consider using Managed Lustre or Hyperdisk ML. When you combine these options with an automated ingestion pipeline and build-time best practices, you can create a truly robust, scalable, and future-proof ML serving platform on Google Cloud.

The journey to a mature MLOps platform is an iterative one. By starting with a solid foundation of artifact-centric design, you can build a system that is not only powerful and scalable today, but also adaptable to the ever-changing landscape of machine learning. Share your tips on managing model artifacts with me on LinkedIn, X, and Bluesky.
