GCP – Run OpenAI’s new gpt-oss model at scale with Google Kubernetes Engine

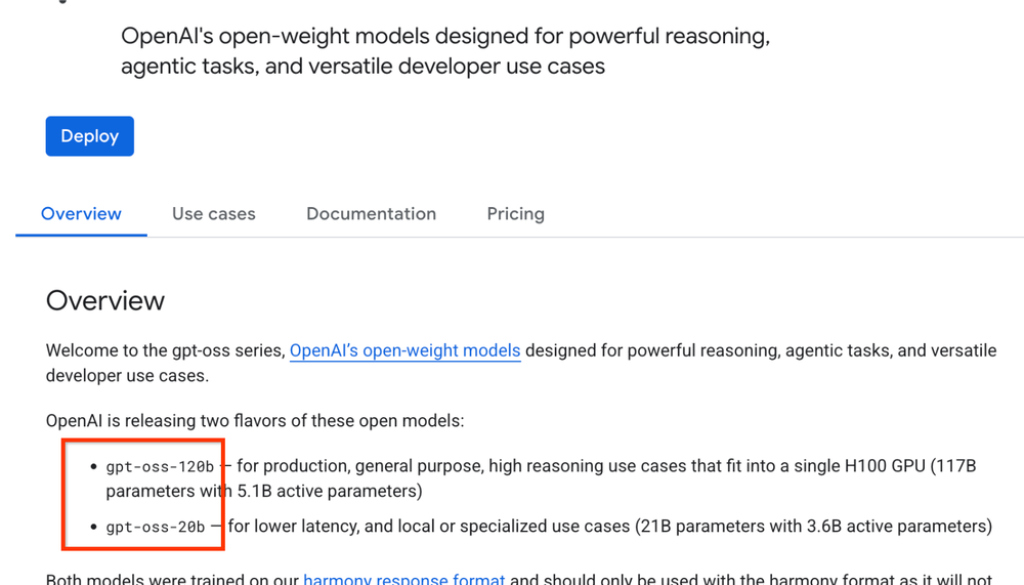

It’s exciting to see OpenAI contribute to the open ecosystem with the release of their new open weights model, gpt-oss. In keeping with our commitment to provide the best platform for open AI innovation, we’re announcing immediate support for deploying gpt-oss-120b and gpt-oss-20b on Google Kubernetes Engine (GKE). To help you make informed decisions when deploying your infrastructure, we’re also publishing detailed benchmarks of gpt-oss-120b on Google Cloud accelerators. You can access them here.

This continues our support for a broad and diverse ecosystem of models, from Google’s own Gemma family, to models like Llama 4, and now, OpenAI’s gpt-oss. We believe that offering choice and leveraging the best of the open community is critical for the future of AI.

Run demanding AI workloads at scale

The new gpt-oss model is expected to be large and require significant computational power, likely needing multiple NVIDIA H100 Tensor Core GPUs for optimal performance. This is where Google Cloud and GKE shine. GKE is designed to handle large-scale, mission-critical workloads, providing the scalability and performance needed to serve today’s most demanding models. With GKE, you can leverage Google Cloud’s advanced infrastructure, including both GPU and TPU accelerators, to power your generative AI applications.


Get started in minutes with GKE Inference Quickstart

To make deploying gpt-oss as simple as possible, we have made optimized configurations available through our GKE Inference Quickstart (GIQ) tool. GIQ provides validated, performance-tuned deployment recipes that let you serve state-of-the-art models with just a few clicks. Instead of manually configuring complex YAML files, you can use our pre-built configurations (refer to the screenshots below) to get up and running quickly.

GKE Inference Quickstart provides benchmarking and quick-start capabilities to ensure you are running with the best possible performance. You can learn more about how to use it in our official documentation.

You can also deploy the new OpenAI gpt-oss model from the command line. First set up access to the weights from the OpenAI organization on Hugging Face, then use the gcloud CLI to deploy the model on a GKE cluster with the appropriate accelerators. For example:

```shell
gcloud alpha container ai profiles manifests create \
  --model=openai/gpt-oss-20b \
  --model-server=vllm \
  --accelerator-type=nvidia-h100-80gb \
  --target-ntpot-milliseconds=200
```
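If you want manifests for both model sizes, or for several latency targets, it can help to parameterize the command above. The following is a minimal sketch, not an official workflow. `giq_manifest_cmd` is a hypothetical helper name, the model IDs assume the Hugging Face naming `openai/gpt-oss-20b` and `openai/gpt-oss-120b`, and the flags are only those that appear in the example above. It prints each command rather than running it, so you can review it first.

```shell
#!/bin/sh
# Sketch only: prints gcloud commands for review instead of executing them.
set -eu

# Hypothetical helper (not part of gcloud).
# $1 = model ID, $2 = accelerator type, $3 = target NTPOT in milliseconds
giq_manifest_cmd() {
  printf '%s %s %s %s %s\n' \
    "gcloud alpha container ai profiles manifests create" \
    "--model=$1" \
    "--model-server=vllm" \
    "--accelerator-type=$2" \
    "--target-ntpot-milliseconds=$3"
}

# Assumed model IDs, following the Hugging Face "openai" organization naming.
for model in openai/gpt-oss-20b openai/gpt-oss-120b; do
  giq_manifest_cmd "$model" nvidia-h100-80gb 200
done
```

To run a printed command, paste it into a shell where gcloud is authenticated against your project. Whether a given model and accelerator combination has a validated profile is something to confirm in the GKE Inference Quickstart documentation.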

Our commitment to open models

Our support for gpt-oss is part of a broader, systematic effort to bring the most popular open models to GKE as soon as they are released, and to give customers detailed benchmarks so they can make informed infrastructure choices. Get started with the new OpenAI gpt-oss model on GKE today.
