GCP – Mastering secure AI on Google Cloud, a practical guide for enterprises

Introduction

As we continue to see rapid AI adoption across the industry, organizations still often struggle to implement secure solutions because of the new challenges around data privacy and security.

We want customers to be successful as they develop and deploy AI, and that means carefully considering risk mitigation and proactive security measures.

The four cornerstones of a secure AI platform

When adopting AI, it is crucial to consider a platform-based approach, rather than focus solely on individual models.

A secure AI platform, like a secure storage facility, requires strong foundational cornerstones. These are infrastructure, data, security and responsible AI (RAI).

- Infrastructure is your foundation. Like the physical security of a storage facility, secure Google Cloud infrastructure (compute, networking, storage) is the base on which your AI models and applications operate.

- Data is your protected fuel. Data security is vital when developing AI-powered applications. Protecting data from unauthorized access, modification, and theft is essential. Protected data can help safeguard AI integrity, ensure privacy compliance, and build customer trust.

- Security is your shield. This layer protects the entire AI ecosystem by detecting, preventing, and responding to threats, much like a storage facility’s security systems. A strong AI security strategy should minimize your attack surface, detect incidents, and maintain confidentiality, integrity, and availability.

- Responsible AI is your ethical compass. Building trust in enterprise AI systems is just as important as securing them. Responsible AI ensures that AI systems are used ethically and in a way that benefits society. This is like ensuring that a storage facility is used for its intended purpose and not for any illegal or unethical activities. RAI is based on the following:

  - Fairness: Use bias mitigation to reduce bias in AI models and treat all users fairly. This requires careful data selection, model evaluation, and ongoing monitoring.

  - Explainability: Make AI models transparent and understandable so you can identify and address potential issues. Explainable AI can help build trust in AI systems.

  - Privacy: Protect user data and comply with privacy regulations, including by applying appropriate data anonymization and de-identification techniques.

  - Accountability: Establish clear lines of responsibility for the development and deployment of enterprise AI systems, so that someone is accountable for their ethical implications.

Responsible AI is essential for building trust in AI systems and ensuring that they are used in a way that is ethical and beneficial. By prioritizing fairness, explainability, privacy, and accountability, organizations can build AI systems that are both secure and trustworthy. To support our customers on their AI journey, we’ve provided the following design considerations.


Key security design considerations on Vertex AI

Vertex AI provides a secure, managed environment for building and deploying machine learning models, as well as for accessing foundation models. However, building on Vertex AI still requires thoughtful security design.

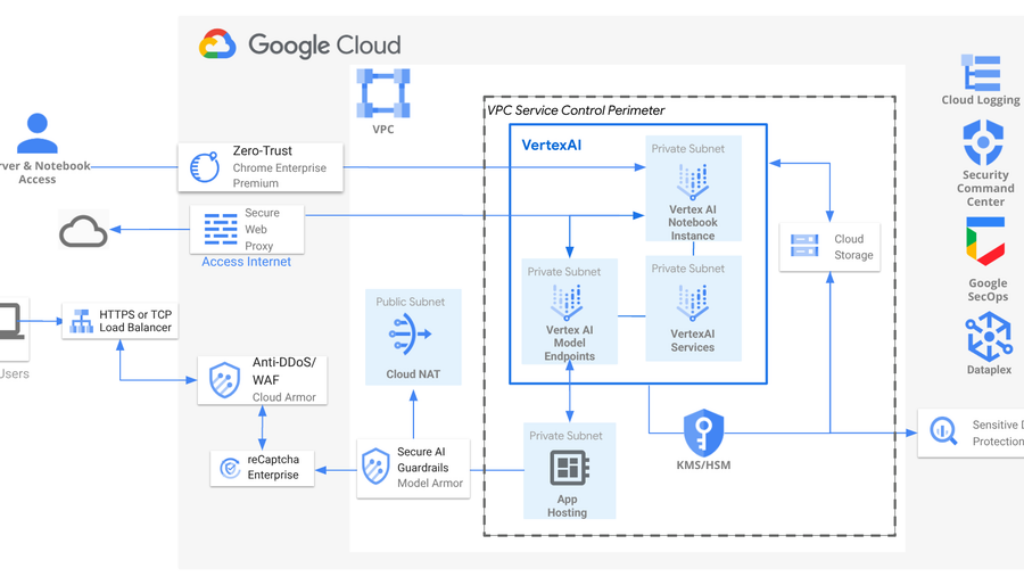

Secure AI/ML deployment reference architecture.

The architecture shown above reflects our recommendation to design AI application security with the four key cornerstones in mind.

Virtual Private Cloud (VPC) is the foundation of the platform. It isolates AI resources from the public internet, creating a private network for sensitive data, applications, and models on Google Cloud.

We recommend using Private Service Connect (PSC) as the private endpoint type for Vertex AI resources (such as Notebooks and Model Endpoints). This allows Vertex AI resources to be deployed into private VPC subnets, preventing direct internet access. It also allows applications deployed into private subnets within the VPC to send inference requests to the AI models privately.

VPC Service Controls perimeters and firewall rules are used in addition to Identity and Access Management (IAM) to authorize network communication and block unwanted connections. This enhances the security of cloud resources and data for AI processing. Access levels can be defined based on IP addresses, device policies, user identity, and geographical location to control access to protected services and projects.
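
To make the access-level idea concrete, here is a minimal conceptual sketch in Python. It is not the Access Context Manager API: the `AccessLevel` and `Request` classes and the `satisfies` function are hypothetical names invented for illustration, and device policy checks are omitted. It only shows how conditions on IP range, location, and identity might combine with a logical AND.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class AccessLevel:
    """Hypothetical, simplified stand-in for an access level definition."""
    ip_ranges: tuple = ()   # allowed CIDR blocks, e.g. corporate egress ranges
    regions: tuple = ()     # allowed two-letter country codes
    members: tuple = ()     # allowed identities, e.g. "user:alice@example.com"


@dataclass(frozen=True)
class Request:
    """Hypothetical description of an incoming request."""
    source_ip: str
    region: str
    member: str


def satisfies(level: AccessLevel, request: Request) -> bool:
    """Return True only if every configured condition matches.

    An empty condition is treated as "not configured" and is skipped.
    """
    if level.ip_ranges and not any(
        ip_address(request.source_ip) in ip_network(cidr)
        for cidr in level.ip_ranges
    ):
        return False
    if level.regions and request.region not in level.regions:
        return False
    if level.members and request.member not in level.members:
        return False
    return True


corp = AccessLevel(
    ip_ranges=("203.0.113.0/24",),
    regions=("US",),
    members=("user:alice@example.com",),
)
print(satisfies(corp, Request("203.0.113.7", "US", "user:alice@example.com")))
print(satisfies(corp, Request("198.51.100.1", "US", "user:alice@example.com")))
```

In a real deployment these conditions are declared in the access policy rather than evaluated in application code; the sketch only illustrates the matching logic.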

If private resources such as notebooks need to reach the internet, we recommend configuring Cloud NAT, which lets instances in private subnets make outbound connections (such as for software updates) without exposing them to direct inbound connections.

The reference architecture uses a Cloud Load Balancer (LB) as the network entry point for AI applications. The LB distributes traffic securely across multiple instances, ensuring high availability and scalability. Integrated with Cloud Armor, it protects against distributed denial-of-service (DDoS) and web attacks.

reCAPTCHA Enterprise can prevent fraud and abuse, whether perpetrated by bots or humans. The LB’s inherent scalability can effectively mitigate DDoS attacks, as we saw when Google Cloud stopped one of the largest DDoS attacks ever seen.

Model Armor also enhances the security and safety of AI applications by screening foundation model prompts and responses for security and safety risks. For example, it can filter dangerous content, harassment, and hate speech. Model Armor can also identify malicious URLs in prompts and responses, as well as prompt injection and jailbreak attacks.

In this design, Chrome Enterprise Premium is used to implement a Zero Trust model, removing implicit trust by authenticating and authorizing every user and device for remote access to AI applications on Google Cloud. Chrome Enterprise Premium enforces inspection and verification of all incoming traffic, while a Secure Web Proxy manages secure egress HTTP/S traffic.

Sensitive Data Protection can secure AI data on Google Cloud by discovering, classifying, and protecting sensitive information and maintaining data integrity. Cloud Key Management Service (Cloud KMS) provides centralized encryption key management for Vertex AI model artifacts and sensitive data.
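
As a rough illustration of what de-identification means in practice, the sketch below replaces email addresses with keyed pseudonyms before text reaches a model. It is a toy stand-in written with the Python standard library, not the Sensitive Data Protection API: the regular expression, the `pseudonymize_emails` function, and the token format are all assumptions made for this example, and a real detector covers far more data types.

```python
import hashlib
import hmac
import re

# Simplified email pattern, assumed for illustration; it will miss edge cases.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize_emails(text: str, secret: bytes) -> str:
    """Replace each email address with a stable, keyed pseudonym.

    The same address (case-insensitively) always maps to the same token
    under the same secret, so records can still be joined after masking.
    """
    def _token(match: re.Match) -> str:
        normalized = match.group(0).lower().encode("utf-8")
        digest = hmac.new(secret, normalized, hashlib.sha256).hexdigest()
        return f"[EMAIL_{digest[:12]}]"

    return EMAIL_RE.sub(_token, text)


# The secret would normally come from a managed key store, not source code.
masked = pseudonymize_emails(
    "Contact Alice@example.com or alice@example.com.", b"demo-secret"
)
print(masked)
```

Using a keyed hash rather than a plain hash means that someone without the secret cannot confirm a guessed address by hashing it themselves.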

In addition to this reference architecture, it is important to implement appropriate IAM roles when using Vertex AI. This enforces resource control and access for the different stages of the machine learning (ML) workflow. For example, roles must be defined for data scientists, model trainers, model deployers, and so on.
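
One way to keep role assignments reviewable is to generate IAM policy bindings from a small persona table. The sketch below assumes a mapping from personas to the predefined Vertex AI roles `roles/aiplatform.user`, `roles/aiplatform.viewer`, and `roles/aiplatform.admin`; the persona names, the mapping itself, and the `build_bindings` helper are illustrative assumptions, and your own least-privilege review may call for custom roles instead.

```python
import json

# Assumed persona-to-role mapping, for illustration only.
PERSONA_ROLES = {
    "data_scientist": "roles/aiplatform.user",
    "auditor": "roles/aiplatform.viewer",
    "platform_admin": "roles/aiplatform.admin",
}


def build_bindings(assignments: dict) -> list:
    """Group members by role into IAM-policy-style bindings.

    `assignments` maps a member string (e.g. "user:a@example.com") to a
    persona name. An unknown persona raises KeyError on purpose, so typos
    fail loudly instead of silently granting nothing.
    """
    by_role = {}
    for member, persona in assignments.items():
        role = PERSONA_ROLES[persona]
        by_role.setdefault(role, []).append(member)
    return [
        {"role": role, "members": sorted(members)}
        for role, members in sorted(by_role.items())
    ]


bindings = build_bindings({
    "user:a@example.com": "data_scientist",
    "group:ml-team@example.com": "data_scientist",
    "user:c@example.com": "auditor",
})
print(json.dumps({"bindings": bindings}, indent=2))
```

Granting roles to groups rather than individual users, as in the second assignment, usually keeps the resulting policy shorter and easier to audit.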

Finally, it is important to conduct regular security assessments and penetration testing to identify and address potential vulnerabilities in your Vertex AI deployments. Tools including Security Command Center, Google Security Operations, Dataplex, and Cloud Logging can help maintain a strong security posture for AI/ML deployments on Google Cloud.

Securing the machine learning workflow on Vertex AI

Building upon the general AI/ML security architecture we’ve discussed, Vertex AI-based ML workflows present specific security challenges at each stage. Address them with the following recommendations:

- Development and data ingestion: Manage access with IAM roles, isolate environments in Vertex AI Notebooks, and secure data ingestion by authenticating pipelines and sanitizing inputs to prevent injection attacks.

- Code and pipeline security: Host code in Cloud Source Repositories, use IAM for access control, and implement branch protection policies. Secure CI/CD pipelines with Cloud Build, using IAM to control build execution and artifact access. Use trusted image sources, and scan container images for vulnerabilities.

- Training and model protection: Protect model training environments by using private endpoints, controlling access to training data, and monitoring for suspicious activity. Manage pipeline components with Container Registry, prioritizing private registry access control and container image vulnerability scanning.

- Deployment and serving: Secure model endpoints with strong authentication and authorization. Implement rate limiting to prevent abuse. Use Vertex AI Prediction for serving, apply IAM policies for model access control, and sanitize inputs to prevent prompt injection.

- Monitoring and governance: Continuous monitoring is key. Use Vertex AI Model Monitoring to set up alerts, detect anomalies, and implement data privacy safeguards.

Secure MLOps reference architecture.

By focusing on these key areas within the Vertex AI MLOps workflow, from secure development and code management to robust model protection and ongoing monitoring, organizations can significantly enhance the security of their AI applications.
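
The rate limiting recommended for the deployment and serving stage can be sketched with a token bucket, one common algorithm for this purpose. The article does not prescribe an algorithm, so this choice is an assumption, as are the `TokenBucket` class name and its parameters. In production, rate limiting is usually enforced at the load balancer or API gateway rather than in application code.

```python
import time


class TokenBucket:
    """Minimal token-bucket limiter (illustrative sketch, not thread-safe).

    Tokens refill continuously at `rate_per_sec` up to `capacity`; each
    request spends `cost` tokens and is rejected when too few remain.
    """

    def __init__(self, rate_per_sec, capacity, clock=time.monotonic):
        self.rate = float(rate_per_sec)
        self.capacity = float(capacity)
        self._clock = clock              # injectable so tests can fake time
        self._tokens = float(capacity)   # start with a full bucket
        self._last = clock()

    def allow(self, cost=1.0):
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Refill for the time that has passed, never beyond capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False


# Hypothetical usage: allow bursts of 5 requests, 2 requests/second sustained.
limiter = TokenBucket(rate_per_sec=2, capacity=5)
decisions = [limiter.allow() for _ in range(6)]
print(decisions)
```

A caller would typically keep one bucket per client identity and return an HTTP 429 response when `allow()` is false.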

Confidential AI on Vertex AI

For highly sensitive customer data on Vertex AI, we recommend using Confidential Computing. It encrypts VM memory, generates ephemeral hardware-based keys unique to each VM that are unextractable, even by Google, and encrypts data in transit between CPUs/GPUs. This Trusted Execution Environment restricts data access to authorized workloads only.

It ensures data confidentiality, enforces code integrity through attestation, and removes the operator and the workload owner from the trust boundary.

Get started today

We encourage organizations to prioritize AI security on Google Cloud by applying the following key actions:

- Ensure Google Cloud best practices have been adopted for data governance, security, infrastructure, and RAI.

- Implement security controls such as VPC Service Controls, encryption with CMEK, and access control with IAM.

- Use the RAI Toolkit to ensure a responsible approach to AI.

- Use Google Safeguards to protect AI models.

- Emphasize the importance of data privacy and secure practices for data management.

- Apply security best practices across the MLOps workflow.

- Stay informed: Stay up to date with the latest resources, including the Secure AI Framework (SAIF).

By implementing these strategies, organizations can realize the benefits of AI while effectively mitigating risks, supporting a secure and trustworthy AI ecosystem on Google Cloud. Reach out to Google’s accredited partners to help you implement these practices for your business.
