GCP – Intelligent code conversion: Databricks Spark SQL to BigQuery SQL via Gemini

As data platforms evolve and businesses diversify their cloud ecosystems, the need to migrate SQL workloads between engines is becoming increasingly common. Recently, I had the opportunity to work on translating a set of Databricks SQL queries to BigQuery SQL — a task that is deceptively complex due to differences in syntax, functions, and execution behavior.

To streamline the process, we turned to Google Gemini, a powerful AI assistant, to help bridge the gap between the two SQL dialects. In this blog post, I’ll walk you through the process, challenges we faced, how Gemini helped, and key takeaways from the experience.

The translation tightrope: Why it’s tricky

To boost operational efficiency and cut costs, we migrate analytics workloads from Databricks SQL (on Delta Lake tables) to Google BigQuery. This required rewriting numerous queries, from simple aggregations to intricate CTEs and window functions.

Databricks, with its powerful Spark SQL capabilities, and BigQuery, a serverless and highly scalable data warehouse, are both titans in the data world. However, their SQL dialects, while sharing common ANSI SQL foundations, have distinct variations. Translating between the two manually was possible, but would have been time-consuming and error-prone. This is where Google Gemini played a crucial role.

Below are some of the data type mappings between Databricks and Bigquery :

|

Category |

Databricks SQL Data Type |

BigQuery SQL Data Type |

Description |

|

Integer Types |

TINYINT |

INT64 |

8-bit integer |

|

SMALLINT |

INT64 |

16-bit integer |

|

|

INT or INTEGER |

INT64 |

32-bit integer |

|

|

BIGINT |

INT64 |

64-bit integer |

|

|

Floating-Point Types |

FLOAT or REAL |

FLOAT64 |

64-bit floating point |

|

DOUBLE |

FLOAT64 |

64-bit floating point (equivalent to BigQuery’s FLOAT64) |

|

|

Decimal/Exact Types |

DECIMAL or NUMERIC |

NUMERIC or BIGNUMERIC |

Fixed-point decimal with user-defined precision and scale. BigQuery has an extended BIGNUMERIC for larger precision. |

|

Boolean Types |

BOOLEAN |

BOOL |

True or False |

|

String Types |

STRING or VARCHAR |

STRING |

Variable-length string |

|

CHAR |

Not Supported |

Fixed-length string is not directly supported in BigQuery; use STRING instead. |

|

|

Date and Time Types |

DATE |

DATE |

Calendar date (year, month, day) |

|

TIMESTAMP |

TIMESTAMP |

Timestamp with time zone information |

|

|

DATETIME |

DATETIME |

Timestamp without time zone |

Syntax difference in Databricks and BigQuery

First_Value :

Databricks

- code_block

- <ListValue: [StructValue([(‘code’, ‘first_value(expr[, ignoreNull]) [FILTER ( WHERE cond ) ]’), (‘language’, ”), (‘caption’, <wagtail.rich_text.RichText object at 0x3e3191b802e0>)])]>

BigQuery

- code_block

- <ListValue: [StructValue([(‘code’, ‘FIRST_VALUE (value_expression [{RESPECT | IGNORE} NULLS])rnOVER over_clausernrnover_clause:rn { named_window | ( [ window_specification ] ) }rnrnwindow_specification:rn [ named_window ]rn [ PARTITION BY partition_expression [, …] ]rn ORDER BY expression [ { ASC | DESC } ] [, …]rn [ window_frame_clause ]’), (‘language’, ”), (‘caption’, <wagtail.rich_text.RichText object at 0x3e3191b80c40>)])]>

In particular, working with H3 geospatial functions can often present unique translation hurdles. Our resources provide clear mappings, like these:

|

Databricks Function |

BigQuery Equivalent Function |

Description |

|

h3_boundaryasgeojson |

ST_ASGEOJSON(jslibs.h3.ST_H3_ |

Returns the polygonal boundary of the input H3 cell in GeoJSON format. |

|

h3_boundaryaswkb |

ST_ASBINARY(jslibs.h3.ST_H3_ |

Returns the polygonal boundary of the input H3 cell in WKB format. |

|

h3_boundaryaswkt |

ST_ASTEXT(jslibs.h3.ST_H3_ |

Returns the polygonal boundary of the input H3 cell in WKT format. |

Providing precise details for complex functions like these is crucial. In fact, we’ve found that by detailing these H3 translations, even advanced AI models like Gemini can generate more accurate and reliable BigQuery SQL from your original Databricks Spark SQL, ensuring your geospatial analyses remain intact.

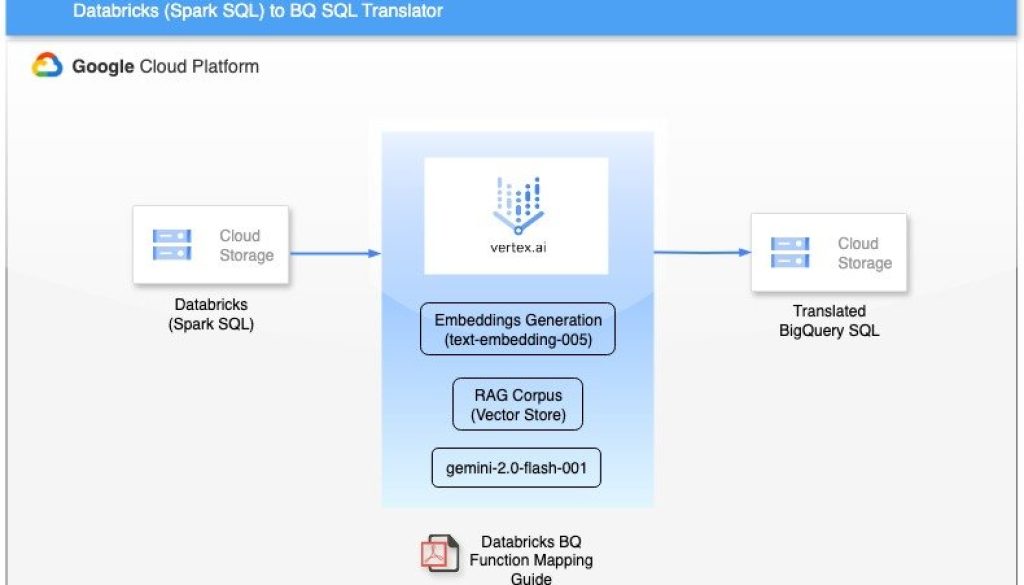

Architecture overview

Before diving into the translation logic, let me show you how the pieces fit together.

Pipeline components

Source SQL Storage:

-

All original Databricks SQL files were stored in Google Cloud Storage .

Function mapping guide:

-

A curated guide that maps Databricks-specific SQL functions (e.g., First_value, UCase,etc) to their BigQuery equivalents (FIRST_VALUE, UPPER, TIMESTAMP etc.)

-

This guide included examples and syntax rules, which were used as input context for Gemini.

Few-shot examples:

-

I selected a set of hand-translated queries to serve as high-quality training prompts to improve Gemini’s consistency.

Retrieval-Augmented Generation (RAG) layer:

-

Before querying Gemini, I leveraged the Vertex AI RAG Engine to retrieve relevant function mappings and example translations. This ensured Gemini had grounded knowledge, improving the accuracy of the output. The RAG-enriched prompt was then sent to Gemini for translation, and the returned SQL was optionally post-processed to fix edge cases.

This ensured Gemini had grounded knowledge, improving the accuracy of the output.

Gemini API integration:

-

The RAG-enriched prompt was sent to Gemini for translation.

-

Returned SQL was optionally post-processed to fix edge cases.

Validation layer:

-

Translated SQL queries were validated by executing them in a BigQuery dry run mode to detect syntax issues.

Architecture diagram

Lessons learned

-

RAG + Gemini = Smart SQL translation: Grounding Gemini with real-world examples and mapping logic made it significantly more accurate.

-

A comprehensive function mapping guide is essential: Invest time in building a robust function mapping resource.

-

Thorough validation is the key: Use BigQuery’s dry run and information schema to ensure translated queries are safe and optimized.

Ready to streamline your SQL migrations?

Stop wrestling with SQL syntax and start leveraging the power of your data, wherever it resides. With the Gemini model, we can streamline your Databricks Spark SQL to BigQuery SQL translation process, making it faster, more reliable, and far less painful.

Dive in and accelerate your journey to cross-platform data success. Click on this link to get more details, and take it forward!

Read More for the details.