GCP – How Baseten achieves 225% better cost-performance for AI inference (and you can too)

Baseten is one of a growing number of AI infrastructure providers, helping other startups run their models and experiments at speed and scale. Given the importance of those two factors to its customers, Baseten has just passed a significant milestone.

By leveraging the latest Google Cloud A4 virtual machines (VMs) based on NVIDIA Blackwell, together with Google Cloud’s Dynamic Workload Scheduler (DWS), Baseten has achieved 225% better cost-performance for high-throughput inference and 25% better cost-performance for latency-sensitive inference.

Why it matters: This breakthrough in performance and efficiency enables companies to move powerful agentic AI and reasoning models out of the lab and into production affordably. For technical leaders, it provides a blueprint for building next-generation AI products, such as real-time voice AI, search, and agentic workflows, at a scale and cost-efficiency that was previously unattainable.

The big picture: Inference is the cornerstone of enterprise AI. As models for multi-step reasoning and decision-making demand exponentially greater compute, serving them efficiently has become the primary bottleneck. Enter Baseten, a six-year-old Series C company that partners with Google Cloud and NVIDIA to provide enterprises with a scalable inference platform for their proprietary models as well as open models like Gemma, DeepSeek, and Llama, with an emphasis on performance and cost efficiency. Its success hinges on a dual strategy: maximizing the potential of cutting-edge hardware and orchestrating it with a highly optimized, open software stack.

We wanted to share more about how Baseten architected its stack — and what this new level of cost-efficiency can unlock for your inference applications.


Hardware optimization with the latest NVIDIA GPUs

Baseten delivers production-grade inference by leveraging a wide range of NVIDIA GPUs on Google Cloud, from NVIDIA T4s through the recent A4 VMs (NVIDIA HGX B200). This access to the latest hardware is critical for achieving new levels of performance.

- With A4 VMs, Baseten now serves three of the most popular open-source models (DeepSeek V3, DeepSeek R1, and Llama 4 Maverick) directly on its Model APIs, with over 225% better cost-performance for high-throughput inference and 25% better cost-performance for latency-sensitive inference.

- In addition to its production-ready Model APIs, Baseten offers NVIDIA B200-powered dedicated deployments for customers who want to run their own custom AI models with the same reliability and efficiency.
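To make the percentages above concrete, here is a minimal sketch of the arithmetic. The article does not define "cost-performance", so this assumes it means tokens served per dollar, and that "X% better" means the new value is (1 + X/100) times the baseline. The function names are illustrative, not part of any Baseten or Google Cloud API.

```python
def cost_performance(tokens_per_second: float, dollars_per_hour: float) -> float:
    """Tokens served per dollar (an ASSUMED definition of cost-performance)."""
    return tokens_per_second * 3600 / dollars_per_hour


def improvement_to_multiplier(percent_better: float) -> float:
    """Convert 'X% better' into a multiplier over the baseline (assumed reading)."""
    return 1 + percent_better / 100


def relative_cost_per_token(percent_better: float) -> float:
    """Cost per token relative to the baseline, for the same workload."""
    return 1 / improvement_to_multiplier(percent_better)


# Under these assumptions, 225% better means 3.25x the tokens per dollar,
# so each token costs roughly 31% of what it did at baseline.
high_throughput = improvement_to_multiplier(225)   # 3.25
latency_sensitive = improvement_to_multiplier(25)  # 1.25
```

Read this way, the high-throughput figure cuts cost per token by a bit more than two thirds, while the latency-sensitive figure cuts it by one fifth.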

Advanced software for peak performance

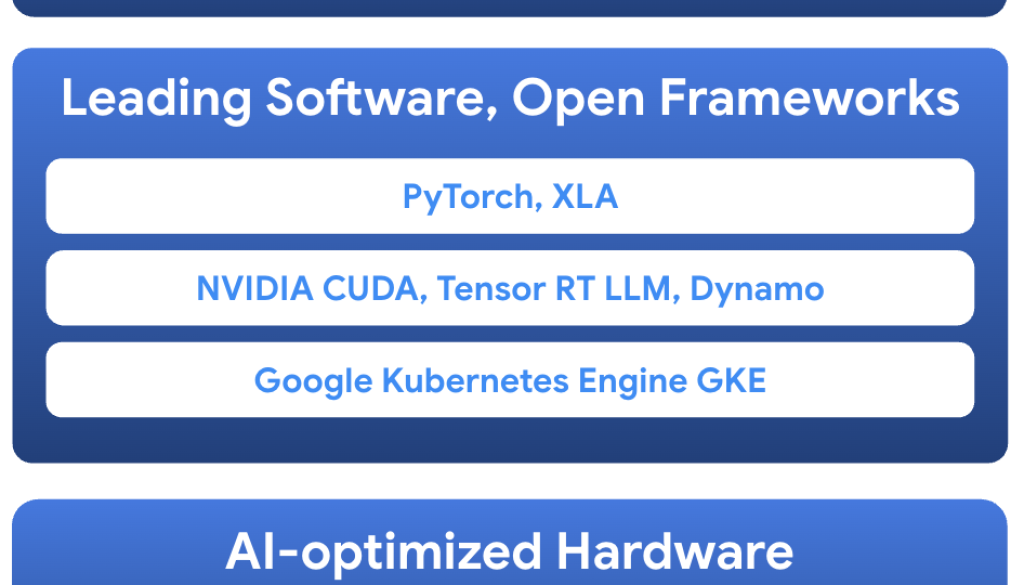

Baseten’s approach is rooted in coupling the latest accelerated hardware with leading open-source software to extract the most value possible from every chip. This integration is made possible by Google Cloud’s AI Hypercomputer, which offers a broad suite of advanced inference frameworks: NVIDIA’s open-source software stack (NVIDIA Dynamo and TensorRT-LLM) as well as SGLang and vLLM.

- Using TensorRT-LLM, Baseten optimizes and compiles custom LLMs for one of its largest AI customers, Writer, boosting throughput for Writer’s Palmyra LLMs by more than 60%. The flexibility of TensorRT-LLM also enabled Baseten to develop a custom model builder that speeds up model compilation.

- To serve reasoning models like DeepSeek R1 and Llama 4 on NVIDIA Blackwell GPUs, Baseten uses NVIDIA Dynamo. The combination of NVIDIA’s HGX B200 and Dynamo dramatically lowered latency and increased throughput, propelling Baseten to the top GPU performance spot on OpenRouter’s LLM ranking leaderboard.

- The team leverages techniques such as kernel fusion, memory hierarchy optimization, and custom attention kernels to increase tokens per second, reduce time to first token, and support longer context windows and larger batch sizes, all while maintaining low latency and high throughput.
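The two metrics named above, time to first token and tokens per second, can be computed from per-token arrival timestamps. The following is a minimal sketch under assumed definitions (the article does not say how Baseten measures them); `RequestTrace` and its fields are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass
class RequestTrace:
    """Timestamps (in seconds) for one streamed request. Hypothetical structure."""
    request_sent: float
    token_times: list[float]  # arrival time of each generated token


def time_to_first_token(trace: RequestTrace) -> float:
    """Delay between sending the request and receiving the first token."""
    return trace.token_times[0] - trace.request_sent


def tokens_per_second(trace: RequestTrace) -> float:
    """Decode throughput: tokens after the first, divided by the time they took."""
    n = len(trace.token_times)
    if n < 2:
        return 0.0  # not enough tokens to measure a rate
    return (n - 1) / (trace.token_times[-1] - trace.token_times[0])


# Illustrative timestamps only, not measured data.
trace = RequestTrace(request_sent=0.0, token_times=[0.25, 0.5, 0.75, 1.0, 1.25])
ttft = time_to_first_token(trace)  # 0.25 seconds
rate = tokens_per_second(trace)    # 4.0 tokens per second
```

Separating the two matters because they respond to different optimizations: time to first token is dominated by prompt processing, while tokens per second reflects the steady-state decode loop.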

Building a backbone for high availability and redundancy

For mission-critical AI services, resilience is non-negotiable. Baseten runs globally across multiple clouds and regions, which requires an infrastructure that can handle ad hoc demand and outages. Flexible consumption models, such as the Dynamic Workload Scheduler within the AI Hypercomputer, help Baseten manage capacity much as it would with on-demand instances, with additional price benefits. This allows Baseten to scale up on Google Cloud when other clouds experience outages.

“Baseten runs globally across multi-clouds and Dynamic Workload Scheduler has saved us more than once when we encounter a failure,” said Colin McGrath, head of infrastructure at Baseten. “Our automated system moves affected workloads to other resources, including Google Cloud Dynamic Workload Scheduler, and within minutes, everyone is up and running again. It is impressive: by the time we’re paged and check in, everything is back and healthy. This is amazing and would not be possible without DWS. It has been the backbone for us to run our business.”
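The failover behavior described in the quote can be sketched as a simple reassignment loop. This is an illustration only: it does not reflect Baseten's actual system and calls no real Dynamic Workload Scheduler API. The `Pool` class, the pool names, and the GPU counts are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    """A capacity pool in some cloud or region. Hypothetical structure."""
    name: str
    healthy: bool
    free_gpus: int


def reassign(
    gpus_needed: dict[str, int],
    placement: dict[str, str],
    pools: list[Pool],
) -> dict[str, str]:
    """Move workloads off unhealthy pools onto the first healthy pool with room."""
    by_name = {p.name: p for p in pools}
    new_placement = dict(placement)
    for workload, pool_name in placement.items():
        if by_name[pool_name].healthy:
            continue  # workload is unaffected by the outage
        for candidate in pools:
            if candidate.healthy and candidate.free_gpus >= gpus_needed[workload]:
                candidate.free_gpus -= gpus_needed[workload]
                new_placement[workload] = candidate.name
                break
        # If no pool has room, the workload keeps its old placement
        # and would need manual attention.
    return new_placement


# Hypothetical scenario: "cloud-a" fails and its workload moves to "gcp-dws".
pools = [Pool("cloud-a", healthy=False, free_gpus=0), Pool("gcp-dws", healthy=True, free_gpus=16)]
result = reassign({"llm-api": 8}, {"llm-api": "cloud-a"}, pools)
```

A production system would also need health checks, capacity requests that can take time to fulfill, and traffic rerouting, none of which this sketch attempts.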

Baseten’s scalable inference platform architecture

Unlocking new AI applications for end-users

Baseten’s collaboration with Google Cloud and NVIDIA demonstrates how combining cutting-edge hardware with flexible, scalable cloud infrastructure, delivered through Google Cloud’s AI Hypercomputer, can solve the most pressing challenges in AI inference.

This unique combination enables end-users across industries to bring new applications to market, such as powering agentic workflows in financial services, generating real-time audio and video content in media, and accelerating document processing in healthcare. And it’s all happening at a scale and cost that was previously unattainable.

You can easily get started with Baseten’s platform through the Google Cloud Marketplace, or read more about their technical architecture in their own post.
