From legacy to cloud: How Deutsche Telekom went from PySpark to BigQuery DataFrames

In today’s hyper-competitive telecommunications landscape, understanding and maximizing the Customer Lifetime Value (CLV) metric isn’t just a nice-to-have; it’s a strategic imperative. For Deutsche Telekom, accurate CLV calculations are the bedrock of informed decisions, driving crucial initiatives in customer acquisition, retention, and targeted marketing campaigns. The ability to predict and influence long-term customer relationships directly translates to sustained profitability and a competitive edge.

Initially, Deutsche Telekom’s Data Science team processed data within an on-premises data lake environment, leveraging Jupyter notebooks and PySpark. However, this reliance on legacy on-prem data lake systems was creating significant bottlenecks. These systems, designed for a different era of data volume and complexity, struggled to handle the massive datasets required for sophisticated CLV modeling. The result? Extended processing times, limited agility in data science experiments, and a growing gap between potential insights and actionable results.

This challenge demanded a transformative solution, leading Deutsche Telekom to embrace the power of modern cloud infrastructure, specifically Google Cloud’s BigQuery, to unlock the full potential of their data and accelerate their journey towards data-driven innovation. The core of this transformation was the migration of critical data science workloads, beginning with the CLV calculations, to BigQuery.

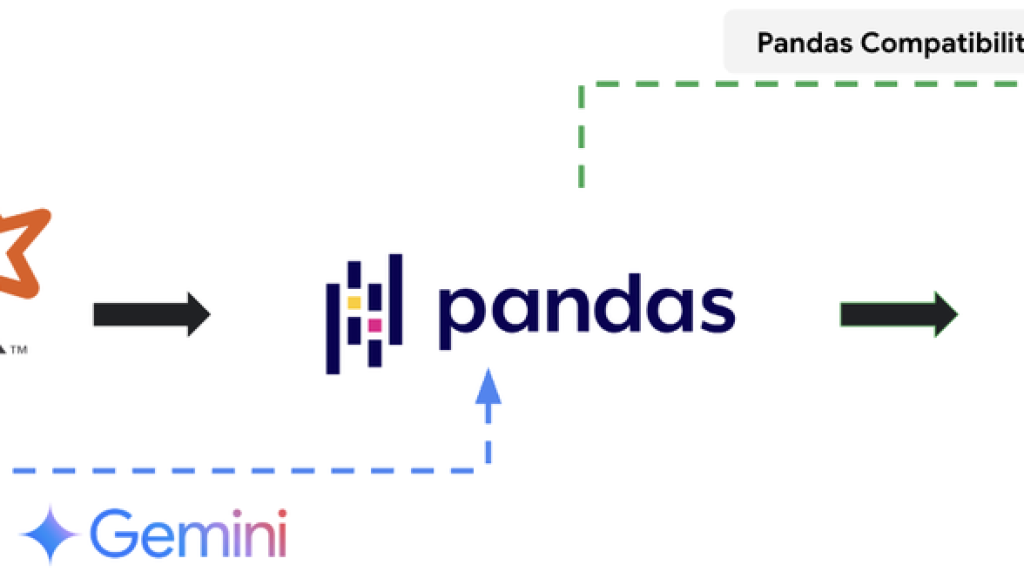

For distributed Python data processing, Deutsche Telekom decided to move away from PySpark-based code and adopt BigQuery DataFrames, also known as BigFrames. BigFrames is an open-source Python library offered by Google that scales Python data processing by transpiling common Python data science APIs to BigQuery SQL. You can read more in the official introduction to BigFrames and in the project's public git repository.

This decision was driven by three factors:

- Keep it simple: By moving all the data processing to BigQuery, the company would be standardizing on a single data processing technology. This helps in administration and standardization across the organization.

- Bet on universal skills: Python and pandas are universal data science skills, whereas Spark requires additional learning. BigFrames offers a pandas-like API and pushes the processing down to BigQuery, which reduces the upskilling required to work on data science tasks.

- Focus on business logic: Data science teams can focus on core business logic and less on the infrastructure required to make that logic work.

In other words, this move was not just a technical upgrade — it was a strategic shift towards a more agile, efficient, and insight-driven future. By leveraging BigQuery’s ability to process massive datasets quickly and efficiently, along with the tight integration of BigQuery DataFrames and its compatibility with familiar pandas and scikit-learn APIs, Deutsche Telekom aimed to eliminate the bottlenecks that had hindered their data science initiatives. This solution, centered around BigQuery and BigQuery DataFrames, provided the foundation for faster insights, improved decision-making, and ultimately, enhanced customer experiences.
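To make the "pandas-like" point concrete, here is a minimal sketch of business logic written once against the shared pandas-style API. It is an illustration under stated assumptions, not Deutsche Telekom's code: the column names `customer_id` and `revenue`, the helper names, and the table ID in the comment are all hypothetical, and summing revenue per customer is only a stand-in for a real CLV model. The local check runs on plain pandas; the BigQuery path needs the `bigframes` package and Google Cloud credentials, so it is defined but not called.

```python
import pandas as pd


def revenue_per_customer(df):
    """Sum revenue per customer using only pandas-style calls.

    Runs on a pandas DataFrame. The same groupby/sum calls are also
    available on a BigQuery DataFrame, where they are compiled to
    BigQuery SQL instead of being executed locally.
    """
    return df.groupby("customer_id")["revenue"].sum()


def load_from_bigquery(table_id):
    """Read a BigQuery table as a BigQuery DataFrame.

    Requires the bigframes package and GCP credentials; the import is
    done lazily so the local demo below runs without either.
    """
    import bigframes.pandas as bpd

    return bpd.read_gbq(table_id)


# Local check with plain pandas and made-up rows (hypothetical data).
local = pd.DataFrame(
    {
        "customer_id": ["a", "b", "a", "c"],
        "revenue": [10.0, 5.0, 2.5, 7.0],
    }
)
totals = revenue_per_customer(local)
print(totals.to_dict())

# On BigQuery, the same function would be applied to a BigQuery DataFrame,
# for example (hypothetical table ID):
#   remote = load_from_bigquery("my-project.my_dataset.billing")
#   totals = revenue_per_customer(remote).to_pandas()
```

Keeping the logic in a function that only touches the common API surface is one way to let the same code be validated locally on a small sample before it is pointed at full-size BigQuery tables.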

The migration journey

To realize these benefits, Deutsche Telekom meticulously planned a two-phase technical migration strategy, designed to minimize disruption and maximize the speed of achieving tangible business results.

The actual effort required to execute the two phases described below was one person-week. Code conversion with Gemini was 95% accurate and worked as expected. In the first phase, most of the time was spent on manual data validation. In addition, around 70% of the pandas code functioned as BigQuery DataFrames code without modification. Some adjustments were required for data types and scalar functions.

Phase 1: Accelerated transition with AI-powered code conversion

The initial phase focused on rapidly converting existing code to a format compatible with Google Cloud’s environment. This was significantly accelerated by leveraging advanced AI tools like Gemini, which streamlined the code conversion process. This allowed the team to focus on validating results and ensuring business continuity, rather than getting bogged down in lengthy technical rewrites.

Phase 2: Optimizing for cloud scalability and performance

The second phase involved adapting the data processing to fully leverage BigQuery. This step was crucial for eliminating the performance bottlenecks they had experienced with the legacy systems. By aligning the data processing with BigQuery’s capabilities, Deutsche Telekom was able to unlock significant improvements in processing speed and scalability, allowing for faster and more insightful data analysis.

Key business benefits of the technical approach:

Deutsche Telekom’s technical migration strategy was not just about moving data; it was about strategically enabling faster, more scalable, and more reliable data-driven decisions. Among the benefits that they saw from this approach were:

- Faster time to insight: The accelerated code conversion, powered by AI, significantly reduced the time required to migrate and validate the data, enabling quicker access to critical business insights.

- Improved scalability and performance: The transition to BigQuery’s cloud-native architecture eliminated performance bottlenecks and provided the scalability needed to handle growing data volumes.

- Reduced operational risk: The structured, two-phase approach minimized disruption and ensured a smooth transition, reducing operational risk.

- Leveraging existing expertise: The use of familiar tools and technologies, combined with AI-powered assistance, allowed the team to leverage their existing expertise, minimizing the learning curve.

Of course, a project of this scale presented its own set of unique challenges, but each one was addressed with solutions that further strengthened Deutsche Telekom’s data capabilities and delivered increased business value.

Challenge 1: Ensuring data accuracy at scale

Initially, the test data didn’t fully represent the complexities of their real-world data, potentially impacting the accuracy of critical calculations like CLV.

Solution: During the test phase, they relaxed the filters on the data sources so that the test data was large enough to be representative. They applied the same changes to both the old and new versions of the code so that the outputs could be compared reliably.
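The post does not describe how the outputs were compared, so the following is only a sketch of one common approach: join the legacy and migrated results on a key, then report rows that exist on one side only and rows whose values differ beyond a tolerance. The function name, the `customer_id` and `clv` columns, the tolerance, and the sample numbers are all assumptions made for illustration.

```python
import pandas as pd


def compare_outputs(legacy, migrated, key="customer_id", value="clv", abs_tol=1e-6):
    """Compare two result tables and return (missing_rows, mismatched_rows).

    missing_rows: keys present in only one of the two outputs.
    mismatched_rows: keys present in both whose values differ by more
    than abs_tol.
    """
    merged = legacy.merge(
        migrated,
        on=key,
        how="outer",
        suffixes=("_legacy", "_migrated"),
        indicator=True,  # adds a "_merge" column: left_only / right_only / both
    )
    missing = merged[merged["_merge"] != "both"]
    both = merged[merged["_merge"] == "both"]
    diff = (both[f"{value}_legacy"] - both[f"{value}_migrated"]).abs()
    mismatched = both[diff > abs_tol]
    return missing[[key, "_merge"]], mismatched


# Made-up sample outputs from the two pipelines (hypothetical values).
legacy_out = pd.DataFrame({"customer_id": [1, 2, 3], "clv": [100.0, 250.0, 80.0]})
migrated_out = pd.DataFrame({"customer_id": [1, 2, 4], "clv": [100.0, 251.0, 60.0]})

missing, mismatched = compare_outputs(legacy_out, migrated_out)
print(missing)
print(mismatched)
```

Reporting missing keys separately from value mismatches helps distinguish filter or join differences between the two pipelines from numeric differences such as data type or rounding changes.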

Challenge 2: Maintaining robust security and compliance

Balancing the need for data access with stringent security and compliance requirements was an important consideration. The BigQuery DataFrames documentation notes that some tasks, such as Remote Functions, need admin-level IAM privileges, which may not be possible to grant in enterprise environments.

Solution: Deutsche Telekom developed customized IAM roles that met its security standards while enabling data access for authorized users. This helped ensure data security and compliance while supporting business agility.

By addressing these challenges strategically, Deutsche Telekom not only completed a successful migration but also delivered tangible business benefits. The company now has a more agile, scalable, and secure data platform, enabling it to make faster, more informed decisions and ultimately enhance its customer experience. This project demonstrates the power of cloud transformation in driving business value.

Deutsche Telekom’s successful migration to BigQuery was a strategic transformation, not just a technical one. By overcoming the limitations of their legacy systems and embracing cloud-based data processing, they’ve established a robust foundation for future innovation. This project underscores the power of strategic partnerships and collaborative problem-solving, showcasing how Google Cloud’s cutting-edge technologies and expert consulting can empower businesses to thrive in the data-driven future.

Ready to unlock the full potential of your data?

Whether you’re facing similar challenges with legacy systems or seeking to accelerate your data science initiatives, Google Cloud’s data platform can provide the solutions you need. Explore the capabilities of BigQuery and BigQuery DataFrames, and discover how our expert consultants can guide you through a seamless cloud migration.

Contact Google Cloud Consulting today to discuss your specific needs and embark on your own journey towards data-driven innovation.

A special thanks to Googler Rohit Naidu, Strategic Cloud Engineer, for his contributions to this post.
