GCP – Designing a multi-tenant GKE platform for Yahoo Mail’s migration journey

Yahoo is in the midst of a multi-year journey to migrate its renowned Yahoo Mail application onto Google Cloud. With more than 100 services and middleware components in the application, Yahoo Mail is primarily taking a lift-and-shift approach for its on-premises infrastructure, and strategically transforming and replatforming key components and middleware to leverage cloud-native capabilities.

To ensure a successful migration, Google and the Yahoo Mail team collaborated extensively, collecting information about the current architecture and making decisions regarding project boundaries, network architecture, and how to configure Google Kubernetes Engine (GKE) clusters. These decisions were critical due to the global nature of the application, which needs to be highly available and redundant to provide uninterrupted service to users worldwide.

As Yahoo Mail progresses towards migrating the first wave of workloads onto the production environment, we are highlighting key elements of the design, in particular its multi-tenant GKE platform, which was instrumental in establishing a robust foundation for the migration. With multi-tenancy, the Yahoo Mail application can operate efficiently on Google Cloud, helping to meet the diverse requirements of its various application tenants.

Design process

Google’s professional services organization (PSO) began the design process by conducting a detailed analysis of current system usage and capacity requirements. This involved running benchmarks, collecting baseline data on existing workloads, and estimating the number of nodes required based on the machine types that best suit the performance and resource demands of those workloads. Simultaneously, we discussed the optimal number of GKE clusters and cluster types, and how best to position and organize the workloads across the clusters. Another aspect of the design process was defining the number of environments (on top of the production environment). We sought to strike a balance between operational complexity, the proliferation of dependencies on on-prem services during rollouts, and the need to mitigate potential risks from application defects and bugs.
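
The node estimate described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the workload names, replica counts, machine shape, the 85% allocatable fraction, and the 20% headroom are hypothetical and are not Yahoo Mail's actual figures. It sizes on aggregate requests only and ignores bin-packing effects, so treat the result as a rough lower bound.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class MachineType:
    name: str
    vcpus: float
    memory_gib: float


@dataclass(frozen=True)
class Workload:
    name: str
    replicas: int
    cpu_per_replica: float         # vCPUs requested per replica
    memory_gib_per_replica: float  # GiB requested per replica


def estimate_nodes(workloads, machine, allocatable_fraction=0.85, headroom=0.2):
    """Estimate how many nodes of one machine type fit the aggregate requests.

    allocatable_fraction approximates the share of a node left after system
    reservations; headroom adds spare capacity for growth and failover.
    Both defaults are illustrative assumptions, not measured values.
    """
    total_cpu = sum(w.replicas * w.cpu_per_replica for w in workloads)
    total_mem = sum(w.replicas * w.memory_gib_per_replica for w in workloads)
    usable_cpu = machine.vcpus * allocatable_fraction
    usable_mem = machine.memory_gib * allocatable_fraction
    nodes_for_cpu = total_cpu * (1 + headroom) / usable_cpu
    nodes_for_mem = total_mem * (1 + headroom) / usable_mem
    # The scarcer resource determines the node count.
    return math.ceil(max(nodes_for_cpu, nodes_for_mem))


# Hypothetical baseline data, standing in for benchmark results.
example_workloads = [
    Workload("imap-proxy", replicas=40, cpu_per_replica=2, memory_gib_per_replica=4),
    Workload("smtp-relay", replicas=20, cpu_per_replica=1, memory_gib_per_replica=2),
]
example_machine = MachineType("example-16vcpu-64gib", vcpus=16, memory_gib=64)

if __name__ == "__main__":
    print(estimate_nodes(example_workloads, example_machine))
```

Repeating the calculation for several candidate machine types shows whether a workload mix is CPU-bound or memory-bound, which in turn informs the choice of machine family.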

Before making decisions about GKE clusters, however, we needed to determine the number of projects and VPCs. These decisions were influenced by various factors, including the customer’s workload requirements and scalability objectives, and Google Cloud’s service and quota limitations. At the same time, we wanted to minimize operational overhead. In principle, the analysis around the number of GKE clusters, VPCs, and so on was fairly straightforward: document the pros and cons of each approach. Nevertheless, we followed an extensive and meticulous process because of the significant and far-reaching impact these decisions would have on the overall architecture.

We used multiple criteria to determine the number of GKE clusters we needed and how to organize workloads across them. The main characteristics we considered were the resource consumption requirements of the various workloads, inter-service connectivity patterns, and the balance between operational efficiency and minimizing the blast radius of an outage.

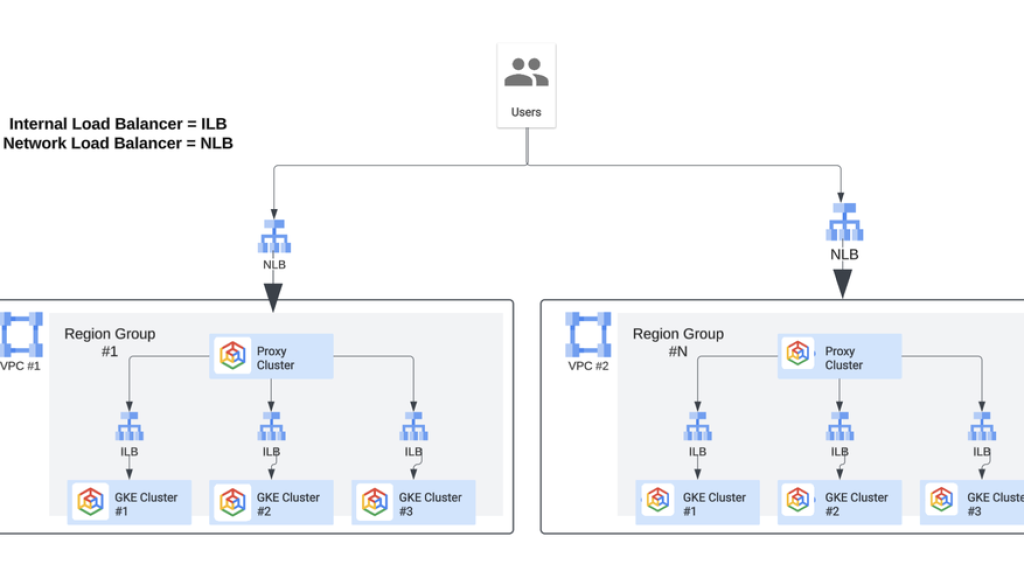

Architecture diagram

Below is a simplified view of the current architecture. The core architecture consists of four GKE clusters per region group (for example, the us-east regions, which share one VPC) and per environment. The architecture spans multiple regions to provide fault tolerance and high availability at the service layer. There are four region groups, and thus four VPCs. External user traffic is routed to the closest region via a geolocation policy configured in Cloud DNS public zones. Internally, traffic is routed across region groups via a proxy application, while traffic between GKE clusters is routed via internal load balancers (ILBs), each of which has a private DNS record.

Multi-tenant GKE platform characteristics

As outlined in Google’s public documentation, there are multiple considerations that need to be addressed when running and operating a GKE cluster. The platform team worked diligently to address the following considerations and satisfy the requirements of the various tenant teams:

- Workload placement: The platform team assigns labels to node pools to support workload affinity, and tenants use a combination of taints and tolerations to ensure workloads are scheduled on the right node pools. This is necessary because each node pool has distinct firewall requirements based on the type of traffic it handles (HTTPS, POPS, etc.). Additionally, Resource Manager tags are used to govern firewall rules and to associate each node pool with the applicable firewall rule.

- Access control: Kubernetes Role-based Access Control (RBAC) is the primary mode of governing and restricting user access to cluster resources. Each tenant has one or more namespaces within their cluster and the cluster is bootstrapped with standard policies during the tenant onboarding process.

- Network policies: All clusters are provisioned with GKE Dataplane V2, and Yahoo Mail uses the standard Kubernetes NetworkPolicy to control traffic flow. Under the hood, this uses Cilium, an open-source solution for providing, securing, and observing network connectivity between workloads.

- Resource quotas: To optimize resource utilization and prevent overconsumption, the platform team enforces resource quotas for CPU and memory within each namespace.

- Scaling: The platform team determines scaling based on each tenant’s quota requests during onboarding. Node auto-provisioning could not be used because of feature limitations around secure tags for firewall rules and around specifying machine types and shapes, so we worked with the engineering team to develop feature requests that address this gap.
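
To make the tenant-facing controls above more concrete, here is a minimal sketch of what a tenant bootstrap might generate: a namespace, a CPU/memory ResourceQuota, a default-deny ingress NetworkPolicy, an RBAC RoleBinding, and the scheduling fields that pin pods to a labeled, tainted node pool. The tenant name, group name, the `example.com/pool` label and taint key, and the quota values are all hypothetical; the source does not describe Yahoo Mail's actual policies. The manifests are plain dictionaries that can be serialized to JSON, which `kubectl apply -f` accepts.

```python
import json

POOL_KEY = "example.com/pool"  # hypothetical node-pool label and taint key


def tenant_manifests(tenant, cpu, memory):
    """Build baseline per-namespace objects for one tenant (illustrative only)."""
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": tenant},
    }
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{tenant}-quota", "namespace": tenant},
        # Caps the sum of CPU/memory requests and limits in the namespace.
        "spec": {"hard": {
            "requests.cpu": cpu, "requests.memory": memory,
            "limits.cpu": cpu, "limits.memory": memory,
        }},
    }
    default_deny = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": tenant},
        # An empty podSelector selects every pod in the namespace; with no
        # ingress rules listed, all inbound traffic is denied by default.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }
    role_binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{tenant}-edit", "namespace": tenant},
        # Hypothetical group; binds the built-in "edit" ClusterRole
        # within this namespace only.
        "subjects": [{"kind": "Group", "name": f"{tenant}-devs",
                      "apiGroup": "rbac.authorization.k8s.io"}],
        "roleRef": {"kind": "ClusterRole", "name": "edit",
                    "apiGroup": "rbac.authorization.k8s.io"},
    }
    return [namespace, quota, default_deny, role_binding]


def placement(pool):
    """Pod spec fields that target a labeled node pool and tolerate its taint."""
    return {
        "nodeSelector": {POOL_KEY: pool},
        "tolerations": [{"key": POOL_KEY, "operator": "Equal",
                         "value": pool, "effect": "NoSchedule"}],
    }


if __name__ == "__main__":
    for manifest in tenant_manifests("mail-frontend", cpu="8", memory="16Gi"):
        print(json.dumps(manifest, indent=2))
    print(json.dumps(placement("https"), indent=2))
```

The node selector steers a tenant's pods toward the intended pool, while the matching taint on that pool keeps other tenants' pods away from it. Both halves are needed for the pool-specific firewall rules to apply to the right traffic.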

Challenges

Through the course of this migration, both Yahoo Mail and Google faced technical constraints and challenges. Below, we outline some of the key challenges faced and the approaches taken to address them:

- Connecting to the control plane: As with most enterprise customers, Yahoo Mail provisioned private GKE clusters and needed connectivity between the control plane and its CI/CD tool (Screwdriver) from outside the VPC network. The platform team initially deployed bastion hosts to proxy connections to the control plane, but this approach faced scalability challenges. Google worked with the customer to test two alternatives, Connect gateway and the DNS-based endpoint, that obviate the need for a bastion host.

- End-to-end mTLS: One of Yahoo Mail’s key security tenets was end-to-end mTLS, which the overall architecture and the underlying Google Cloud services had to accommodate. This posed a significant challenge because one of the key load balancing products (Application Load Balancer) did not offer end-to-end mTLS at the time. We explored alternatives such as implementing a bespoke proxy application and using Layer 4 load balancers throughout the stack. Professional services also worked with Google Cloud engineering to define the requirements for mTLS support in Application Load Balancer.

- Integration with Athenz: Yahoo Mail uses an internal identity and access management tool, Athenz, with which all Google Cloud services needed to integrate in order to perform workload identity federation. Within the Kubernetes context, this meant that users still needed to be authenticated via Athenz, with workload identity federation acting as a mediator. Because workload identity federation was also a fairly new feature, we needed to collaborate closely with Google Cloud engineering to implement it successfully in the Yahoo Mail environment.

- Kubernetes externalTrafficPolicy: One feature that Yahoo Mail had been most vocal about and excited for was weighted routing for load balancers, which would allow incoming traffic to be routed optimally to the backends. While this was supported for managed instance groups, there was no native integration with GKE at the time. In its absence, the platform team explored and experimented with the externalTrafficPolicy modes, Local and Cluster, to determine their performance impact and limitations.

- Capacity planning: Last but not least, Google Professional Services performed capacity planning, a cornerstone of a successful cloud migration. Here, it entailed collaborating across multiple application teams to establish a baseline for capacity needs, making key assumptions, and estimating the resources required to meet both current and future demands. Capacity planning is a highly iterative activity that needs to evolve as workloads and requirements change. Thus, conducting regular reviews and maintaining clear communication with Google was paramount to ensure that the cloud provider could adapt to the customer’s needs.
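
For readers unfamiliar with the externalTrafficPolicy setting mentioned above, the following sketch shows where it lives in a Service manifest. The service name, selector, and port are hypothetical, and the sketch makes no claim about which mode Yahoo Mail ultimately chose. In Kubernetes, `Local` delivers traffic only to nodes that run a ready pod for the service and preserves the client source IP, while `Cluster` (the default) lets any node accept the traffic and forward it, which can add a hop and obscure the source IP.

```python
import json

VALID_POLICIES = ("Local", "Cluster")


def load_balancer_service(name, port, external_traffic_policy="Cluster"):
    """Build a LoadBalancer Service manifest with the chosen traffic policy."""
    if external_traffic_policy not in VALID_POLICIES:
        raise ValueError(
            f"externalTrafficPolicy must be one of {VALID_POLICIES}, "
            f"got {external_traffic_policy!r}"
        )
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            "externalTrafficPolicy": external_traffic_policy,
            "selector": {"app": name},  # hypothetical pod label
            "ports": [{"protocol": "TCP", "port": port, "targetPort": port}],
        },
    }


if __name__ == "__main__":
    # Emit both variants so they can be deployed side by side and compared.
    for policy in VALID_POLICIES:
        svc = load_balancer_service(f"mail-edge-{policy.lower()}", 443, policy)
        print(json.dumps(svc, indent=2))
```

Generating both variants from one function keeps everything else identical, so any measured difference in latency or load distribution can be attributed to the policy itself.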

Yahoo Mail’s next milestone

Migrating an application as big as the Yahoo Mail application is a huge endeavor. With its two-pronged approach — lift and shift for most services, and strategic rearchitecting for some — Yahoo is well on its way to setting its mail system up for the next generation of customers. While a small portion of the Yahoo Mail system is in production, the majority of its services are expected to be onboarded over the next year. For more information on how Google PSO can assist with other similar services, please refer to this page.

We would like to express our gratitude to the Yahoo Mail Compute Infra team for their cooperation in sharing details and collaborating with us on this blog post.
