GCP – BigQuery under the hood: The power of the Column Metadata index aka CMETA

While petabyte-scale data warehouses are becoming more common, getting the performance you need without escalating costs and effort remain key challenges, even in a modern cloud data warehouse. While many data warehouse platform providers continue to work on these challenges, BigQuery has already moved past petabyte-scale data warehouses to petabyte-scale tables. In fact, some BigQuery users have single tables in excess of 200 petabytes and over 70 trillion rows.

At this scale, even metadata is big data that requires an (almost) infinitely scalable design and high performance. In 2021, we presented the Column Metadata (CMETA) index in a 2021 VLDB paper, which, as the name implies, acts like an index for metadata. Compared to existing techniques, CMETA proved to be superior, meeting both our scalability and performance requirements. Further, BigQuery’s implementation thereof requires no user effort to maintain, and in addition to transparently improving query performance, CMETA may also reduce overall slot usage.

In this blog, we take a look at how CMETA works, the impact it can have on your workloads, and how to maximize its benefits. Let’s jump in.

How BigQuery stores data

All data in BigQuery tables is stored as data blocks that are organized in a columnar format. Data blocks also store metadata about all rows within the block. This includes min and max values for each column in the block and any other necessary properties that may be used for query optimization. This metadata allows BigQuery to perform fine-grained dynamic pruning to improve both query performance and resource efficiency.

This approach is well-known and commonly applied in the data management industry. However, as noted above, BigQuery operates on a vast scale, routinely handling tables that have over a hundred petabytes of data spread across billions of blocks in storage. Metadata for these tables frequently reach terabyte scale — larger than many organizations’ entire data warehouses!

- aside_block

- <ListValue: [StructValue([(‘title’, ‘$300 in free credit to try Google Cloud data analytics’), (‘body’, <wagtail.rich_text.RichText object at 0x3ed55a935040>), (‘btn_text’, ”), (‘href’, ”), (‘image’, None)])]>

Enter the Column Metadata index

To optimize queries, especially when large tables are involved, BigQuery now leverages CMETA. This system table is automatically created and managed by BigQuery to maintain a snapshot of metadata for all data blocks of user tables that may benefit from the index. This provides additional data to BigQuery’s planner, allowing it to apply additional fine-grained pruning of data blocks, reducing both resource consumption (slots usage and/or bytes scanned) and query execution time.

CMETA relies on a few key techniques.

Index generation

CMETA is automatically generated and refreshed in the background at no additional cost and does not impact user workloads. Creation and updates to the index occur automatically whenever BigQuery determines the table will benefit from the index based on size and/or volume of change to the data in an existing table. BigQuery ensures the index remains up-to-date with block statistics and column-level attributes with no need for any user action. Using efficient storage and horizontally scalable techniques, BigQuery can maintain these indexes at scale, even for some of our performance sensitive users with tables over 200 petabytes in size.

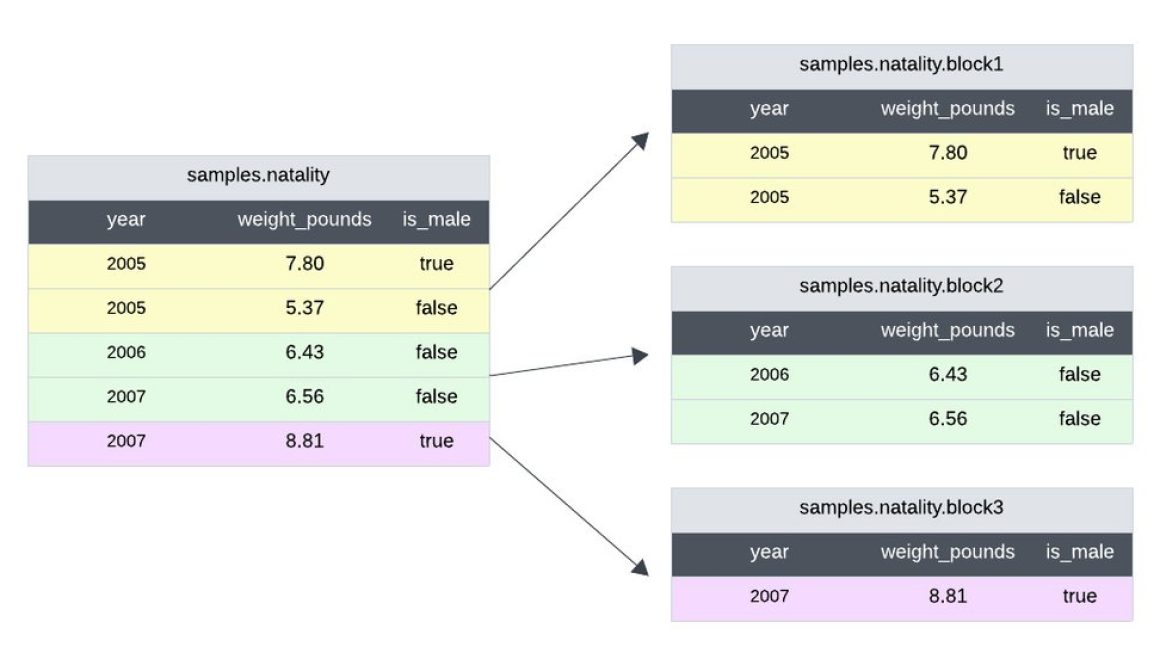

Figure 1

Query serving

To illustrate how the index serves queries in practice, let’s use the `natality` table from BigQuery’s public dataset. Imagine this table’s data is stored in three blocks (see Figure 1), committed at times 110, 120, and 130. Our column metadata index, with a snapshot taken at time 125, includes block- and column-level statistics for blocks 1 and 2.

- code_block

- <ListValue: [StructValue([(‘code’, ‘SELECT * FROM samples.natality WHERE weight_pounds >= 7 and is_male = false’), (‘language’, ”), (‘caption’, <wagtail.rich_text.RichText object at 0x3ed558489970>)])]>

Considering the query above, BigQuery first scans the index to identify relevant blocks. Since the maximum value of `weight_pounds` in block 2 is 6.56 and the query filters on ‘weight_pounds’ >= 7, we know we can safely skip that block without even inspecting it. The original query then runs only against block 1 and any newer block(s) that haven’t been indexed yet — in this case, block 3. The results are combined and returned to the user.

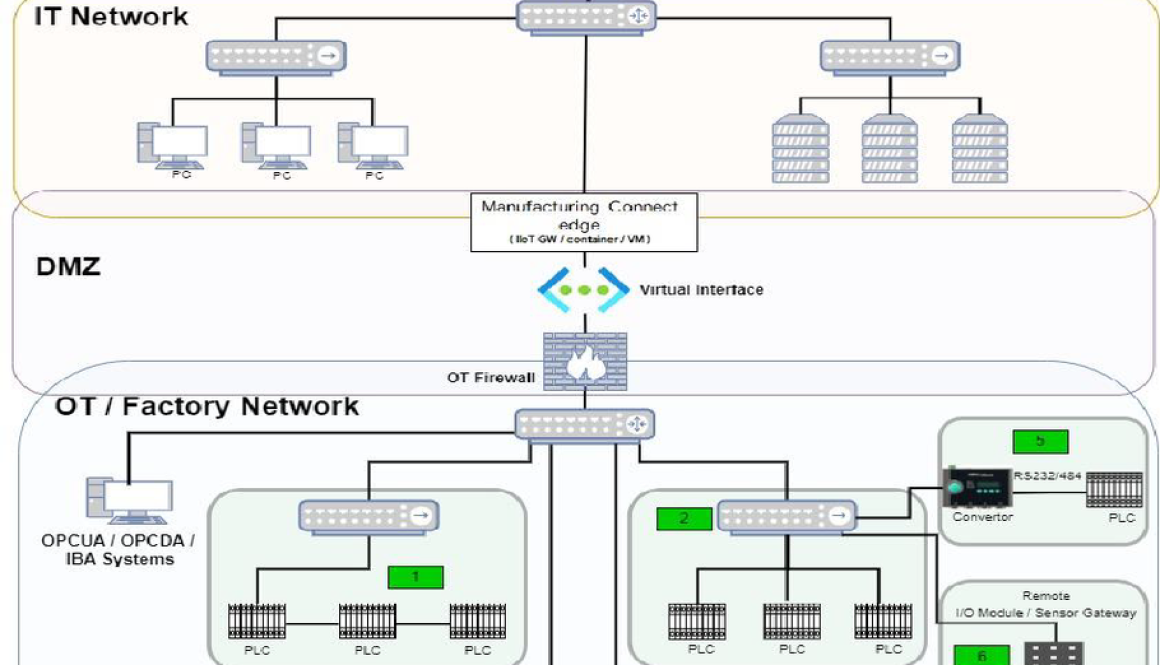

Figure 2

With rich column-level attributes in the index, BigQuery can prune efficiently at the early stage of query processing. Without the index, pruning occurs at later stages when BigQuery opens the data blocks, which involves more computing resources. For large tables, skipping data blocks with this technique significantly benefits selective queries, enabling BigQuery to support much larger tables. Consider the above example but with a table that has billions of blocks. Imagine the time and slot usage savings from pruning unnecessary blocks without even needing to access the block’s header.

BigQuery’s CMETA index is unique in a few ways:

-

Zero maintenance cost or effort: The CMETA index is a fully automated background operation

-

Applicable to all data tables: CMETA works transparently to improve performance regardless of whether the table size is measured in gigabytes or petabytes

-

Integrated with other Google Cloud services: Works with BigQuery tables and BigLake External Tables

-

Safe: Always returns correct results regardless of whether CMETA is available or up-to-date

Measuring CMETA’s impact

Early adopters of CMETA have reported up to 60x improvement in query performance and up to 10x reduction in slot usage for some queries. The benefits are particularly pronounced for queries with more selective filters, especially for filters on clustering columns, as CMETA minimizes the amount of data processed by query workers.

Figure 3

Maximizing CMETA’s benefits

BigQuery currently automatically manages CMETA at no additional cost to users and allocates resources to create or refresh the index in a round robin way. If your tables grow or change very rapidly and you have strict performance requirements, you may choose to use your own resource pool (slots) for CMETA maintenance operations to maximize CMETA’s throughput. This will provide the most consistent experience in query performance improvement via CMETA. To do this, simply create a reservation assignment and allocate slots for background jobs, and CMETA maintenance jobs will automatically use it. More details are available in the documentation.

More to come

While this first iteration of CMETA is now generally available, we’re already working on future iterations to further improve BigQuery’s autonomous query processing capabilities, without any extra effort or cost on your part. Stay tuned for more to come.

Read More for the details.