The retail media landscape has reached an inflection point. What started as a way for retailers to monetize their digital real estate has become the fastest-growing segment of digital advertising, with projections showing 21.9% growth in 2025 and a three-year compound annual growth rate of 19.7% through 2027, according to Dentsu’s Global Ad Spend Forecasts Report, December 2024. Yet, many retailers find themselves caught in a frustrating paradox: they possess rich first-party data but lack the infrastructure to monetize it effectively at scale.

Legacy advertising stacks, fragmented infrastructure, and limited machine learning capabilities prevent them from competing with established leaders like Amazon and Walmart. To build profitable, performant on-site advertising businesses, retailers need real-time personalization and measurable ROI – capabilities that require sophisticated AI infrastructure most don’t have in-house.

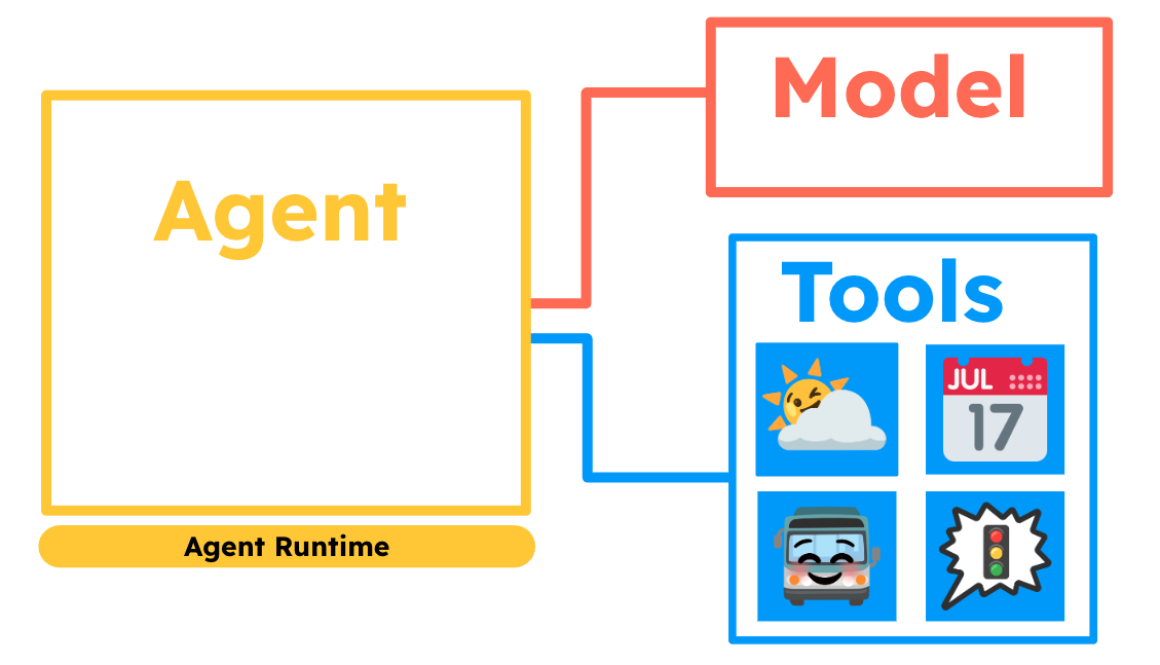

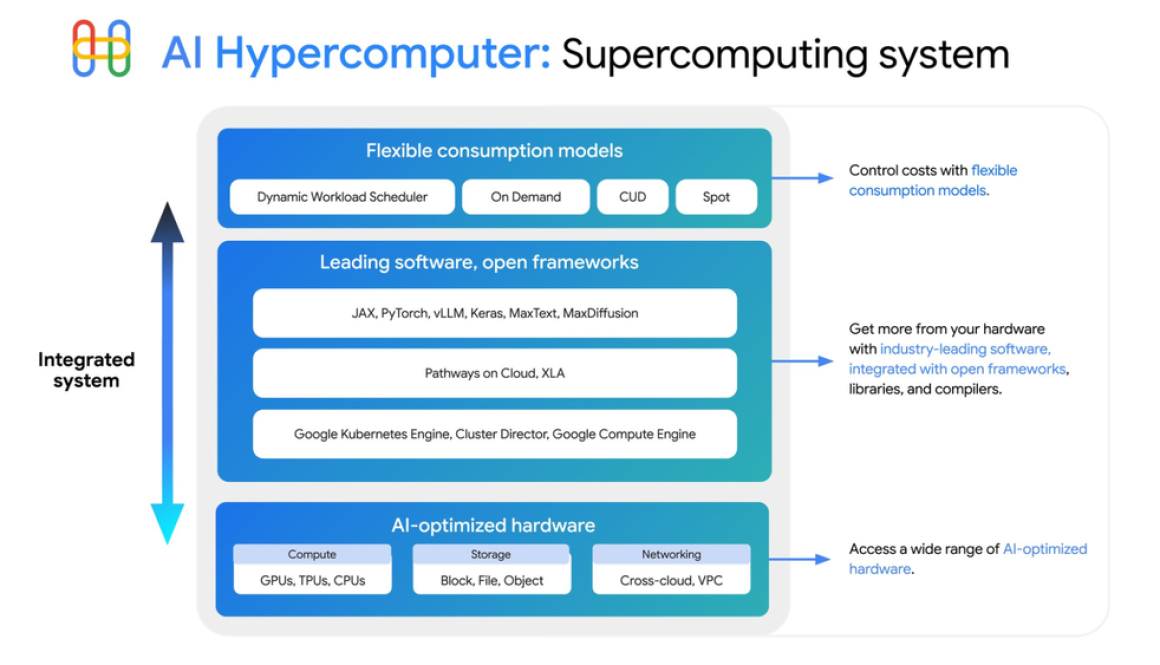

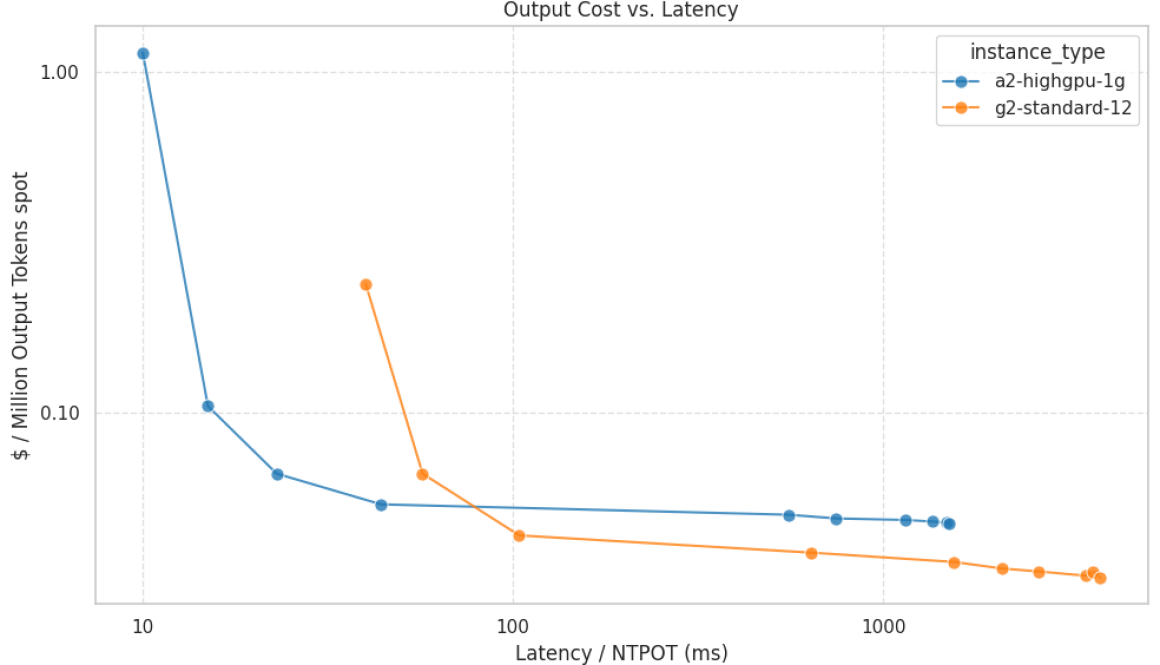

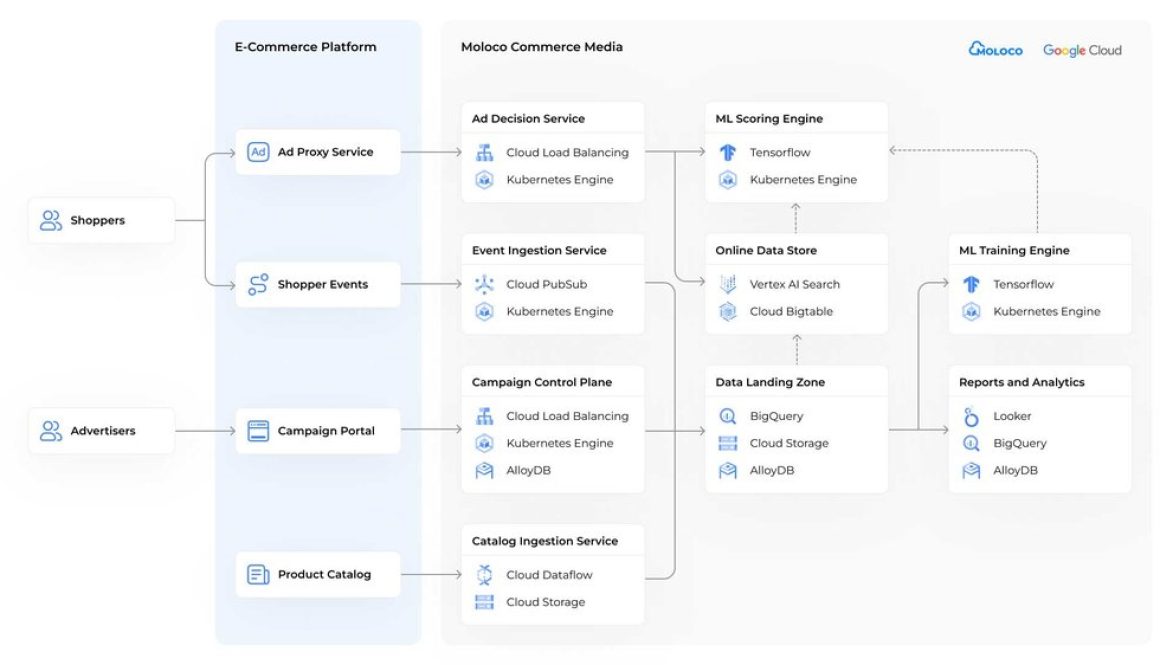

This is where the partnership between Moloco and Google Cloud delivers significant value. Moloco is an AI-native retail media platform, built from the ground up to deliver one-to-one ad personalization in real-time. Leveraging Google Cloud’s advanced infrastructure, the platform uses TPUs (Tensor Processing Units) and GPUs (Graphics Processing Units) for training and scoring, while Vector Search operates efficiently on CPUs (Central Processing Units). This enables outcomes-based bidding at scale, without requiring retailers to build in-house AI expertise.

The results from internal benchmarks and select production deployments demonstrate clear value: a ~10x increase in capacity, up to ~25% lower p95 latency, and a 4% ad revenue uplift. This blog explores how the joint architecture is reshaping retail media and delivering real-world business impact.

High expectations, limited infrastructure

Retailers face mounting pressure from multiple directions. Declining margins drive the need for new revenue streams, while complex ad tech environments make monetizing first-party data more challenging. Many hit effectiveness ceilings as their advertising spend scales, unable to maintain performance without sophisticated personalization.

Meanwhile, advertisers demand closed-loop measurement and proven ROI – capabilities that require advanced infrastructure most retailers simply don’t possess.

“The sheer scale of modern retail catalogs and the expectation of instantaneous, relevant results have pushed traditional search systems to their limits,” said Farhad Kasad, an engineering manager for Vertex AI at Google Cloud. “To solve this, retailers need to think in terms of semantic understanding. Vertex AI Vector Search provides the foundational infrastructure to handle billions of items and queries with ultra-low latency, enabling the move from simple keyword matching to truly understanding the context behind a search and delivering hyper-personalized results.”

Closing this infrastructure gap enables retailers to build sustainable, competitive retail media businesses.

Moloco Commerce Media meets Vertex AI Vector Search

Moloco offers an end-to-end, AI-native retail media platform specifically designed to address this challenge. Rather than retrofitting existing systems with AI capabilities, Moloco built its platform from the ground up to use artificial intelligence for every aspect of ad delivery and optimization.

The platform’s integration with Google Cloud’s Vertex AI Vector Search creates a powerful foundation for semantic ad retrieval and matching. This combination supports hyper-personalized ads and product recommendations by understanding the contextual meaning behind user queries and behaviors, not just matching keywords.

The architecture simplifies what was previously a complex, resource-intensive process. Rather than building and maintaining their own vector databases, retailers can rely on enterprise-grade, fully managed services that scale automatically with their business needs, and focus on their core business.

How vector search improves ad performance

Vector Search represents a major shift from traditional keyword-based matching to semantic understanding. Instead of relying solely on exact text matches, Vector Search converts products, user behaviors, and search queries into mathematical representations (vectors) that capture meaning and context.

This approach enables several breakthrough capabilities for retail media:

Real-time relevance at scale: Vector Search can process millions of product-ad combinations in milliseconds, identifying the most contextually relevant matches based on user behavior, product attributes, and advertiser goals.

Semantic understanding: The system understands that a user searching for “running gear” might be interested in advertisements for athletic shoes, moisture-wicking shirts, or fitness trackers – even if those exact terms don’t appear in the query.

Hybrid search architecture: By combining dense vector representations (semantic meaning) with sparse keyword matching (exact terms), the platform delivers both contextual relevance and precision matching.

Production-grade performance: Using Google’s ScaNN algorithm, developed by Google Research and used in popular Google services, retailers gain access to battle-tested infrastructure without the complexity of building it themselves.

Fully managed infrastructure: The managed service reduces operational overhead while providing enterprise-grade security, reliability, and scalability that grows with the success of the retail media program.
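To make the dense-versus-sparse distinction concrete, here is a minimal sketch of hybrid scoring in plain Python. Everything in it is a hypothetical illustration, not Moloco's or Vertex AI's implementation: the four-item catalog, the hand-made three-dimensional embeddings, the keyword-overlap score, the `hybrid_search` helper, and the `alpha` weight that blends the two signals. It also scores every item exhaustively; ScaNN replaces that brute-force scan with approximate nearest-neighbor search over partitioned, quantized vectors so retrieval stays fast at catalog scale.

```python
import math

# Hypothetical toy catalog: ad ID -> (embedding, title). The 3-dim embeddings are
# hand-made (roughly: running, apparel, electronics); real embeddings are learned
# and have hundreds of dimensions.
CATALOG = {
    "trail-running-shoes": ([0.9, 0.3, 0.0], "trail running shoes"),
    "moisture-wicking-shirt": ([0.7, 0.8, 0.0], "moisture wicking athletic shirt"),
    "fitness-tracker": ([0.6, 0.0, 0.8], "gps fitness tracker watch"),
    "office-chair": ([0.0, 0.1, 0.2], "ergonomic office chair"),
}


def cosine(a, b):
    """Dense (semantic) score: cosine similarity of two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def keyword_overlap(query, title):
    """Sparse (exact-term) score: fraction of query terms that appear in the title."""
    q_terms = query.lower().split()
    t_terms = set(title.lower().split())
    if not q_terms:
        return 0.0
    return sum(term in t_terms for term in q_terms) / len(q_terms)


def hybrid_search(query_text, query_vec, k=3, alpha=0.7):
    """Exhaustively rank ads by alpha * dense + (1 - alpha) * sparse; return top-k IDs."""
    scored = [
        (alpha * cosine(query_vec, vec) + (1 - alpha) * keyword_overlap(query_text, title), ad_id)
        for ad_id, (vec, title) in CATALOG.items()
    ]
    scored.sort(reverse=True)
    return [ad_id for _, ad_id in scored[:k]]


# Hypothetical query embedding for "running gear". Only the shoes share a keyword,
# but the shirt and tracker still outrank the chair on semantic similarity.
print(hybrid_search("running gear", [0.9, 0.4, 0.1]))
# → ['trail-running-shoes', 'moisture-wicking-shirt', 'fitness-tracker']
```

In this toy setup, raising `alpha` leans on semantic similarity, while lowering it rewards exact term matches, which is what you want for queries such as brand names or model numbers.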

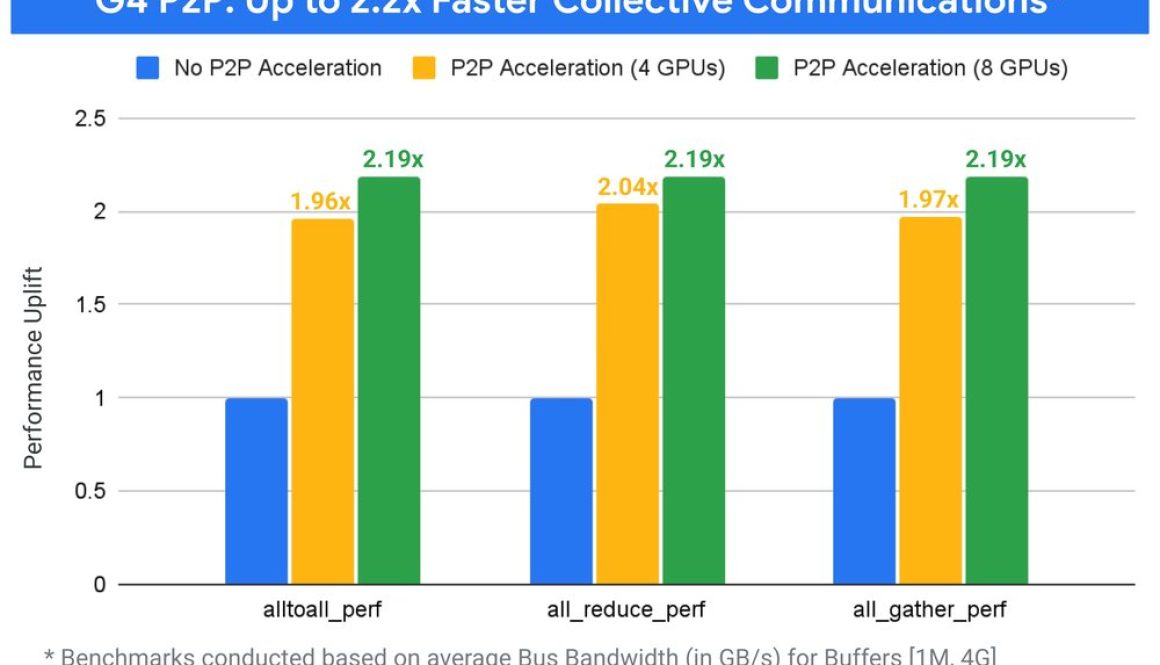

Imsung Choi, Staff Software Engineer at Moloco, explained: “By migrating to Google Cloud Platform’s Vertex AI Vector Search, we increased capacity by ~10x. Some customers even saw up to ~25% lower p95 latency.”

The operational benefits proved equally significant. As Mingtian Ni, Senior Staff Software Engineer at Moloco, noted: “The managed nature of Google Cloud Platform’s Vector Search simplified our system architecture significantly. We no longer need to manage infrastructure or optimize indexes manually; operations are more stable and scalable.”

Better together with Google Cloud

Moloco’s success stems from deep integration with Google Cloud’s AI infrastructure – specifically Vertex AI Vector Search and ScaNN. By combining Moloco’s deep learning expertise with Google’s scalable, production-grade machine learning tools, retailers can launch performant, AI-native ad businesses faster than ever.

Google Cloud provides the foundation: fast, reliable vector retrieval, managed index updates, and enterprise security. Moloco builds the application layer that transforms these capabilities into measurable business outcomes: increased monetization, reduced latency, and future-proof personalization.

Together, Moloco and Google Cloud help retailers transform first-party data into meaningful, real-time value without requiring substantial internal AI investments or custom infrastructure development. Retailers maintain full control of their first-party data: Moloco’s platform processes it strictly according to their instructions and enforces tenant isolation to keep each retailer’s data secure and separate.
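As a rough illustration of what tenant isolation can mean at the retrieval layer, the sketch below restricts candidates to a single tenant before any scoring happens, so one retailer's query never returns another retailer's items. The `Item` type, the tenant IDs, and the `search_for_tenant` helper are hypothetical, and this is not a description of how Moloco enforces isolation. Managed vector databases typically express the same idea as a metadata or namespace filter on the query; Vertex AI Vector Search, for example, supports namespace-based filtering of matches.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    item_id: str
    tenant_id: str              # hypothetical: the retailer that owns this item
    embedding: tuple            # toy embedding vector


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def search_for_tenant(index, tenant_id, query_vec, k=2):
    """Hypothetical helper: keep only the caller's items, then rank by dot product."""
    candidates = [item for item in index if item.tenant_id == tenant_id]
    candidates.sort(key=lambda item: dot(query_vec, item.embedding), reverse=True)
    return [item.item_id for item in candidates[:k]]


INDEX = [
    Item("a-shoes", "retailer-a", (0.9, 0.1)),
    Item("a-socks", "retailer-a", (0.6, 0.2)),
    Item("b-shoes", "retailer-b", (1.0, 0.0)),  # best raw match, but owned by another tenant
]

print(search_for_tenant(INDEX, "retailer-a", (1.0, 0.0)))
# → ['a-shoes', 'a-socks']
```

Filtering before ranking, rather than trimming a global top-k afterward, also guarantees the tenant still receives up to k results even when other tenants' items would otherwise crowd the top of the list.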

Moloco’s Vector Search migration and impact

In internal benchmarks and select production deployments, migration to Vertex AI Vector Search delivered measurable improvements across key performance metrics:

- ~10x increase in capacity, ensuring even retailers with extensive product catalogs can deliver personalized advertising experiences without performance degradation.

- Up to ~25% lower p95 latency in ad serving, critical for maintaining user engagement and ad effectiveness in real-time bidding scenarios.

- 4.0% monetization uplift in ad revenue across several rollouts.

Results vary by catalog size and traffic, but these gains show how technical improvements can translate directly into business value.
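For readers less familiar with the metric: p95 latency is the response time that 95% of requests meet or beat, so it tracks the slow tail that an average can hide. The sketch below computes it with the nearest-rank method and expresses a before/after change as a relative reduction. The latency samples and the `p95` and `pct_reduction` helpers are illustrative assumptions, not Moloco measurements or tooling, and production monitoring systems may use interpolated percentile definitions that give slightly different numbers.

```python
def p95(samples_ms):
    """Nearest-rank 95th percentile: the smallest sample with >= 95% of samples at or below it."""
    ordered = sorted(samples_ms)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) using exact integer math
    return ordered[rank - 1]


def pct_reduction(before, after):
    """Relative reduction from `before` to `after`, in percent."""
    return (before - after) * 100 / before


# Hypothetical latencies (ms) for 20 requests before and after a change; not real data.
before = [10] * 18 + [50, 90]
after = [9] * 18 + [40, 70]

print(p95(before), p95(after), pct_reduction(p95(before), p95(after)))
# → 50 40 20.0
```

Note that the typical request barely changes here (10 ms to 9 ms), while the tail improves by 20%, which is why p95 is the more telling number for real-time ad serving.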

Business impact for retail leaders

For CROs and CMOs, this infrastructure delivers the revenue growth and advertiser ROI that budget approvals depend on. Improved ad relevance drives higher click-through and conversion rates, making advertising inventory more valuable to brand partners. As a result, Moloco helps retailers grow ad revenue faster than traditional solutions, with a 4% uplift across several rollouts.

Heads of retail media gain the measurable performance metrics and scalable infrastructure needed to compete with established players. Platform reliability and speed become competitive advantages rather than operational headaches.

CEOs and CFOs see long-term value from platform monetization that doesn’t require ongoing technical investment. The managed infrastructure scales with business growth while providing predictable operational costs.

Technical impact for engineering and data teams

Platform architects benefit from scalable, low-latency infrastructure that avoids custom data pipelines that are difficult to maintain. The managed service reduces technical debt while supporting business growth.

Data scientists gain access to semantically rich, machine learning-ready vector search that improves ad relevance and personalization accuracy without requiring vector database expertise.

Machine learning engineers can use pre-tuned infrastructure like ScaNN to reduce time-to-value on retrieval models, focusing on business logic rather than infrastructure optimization.

DevOps and site reliability engineers appreciate fully managed services that reduce operational overhead while ensuring high availability for revenue-critical systems.

Technical leads value easy integration with existing Google Cloud Platform tools like BigQuery, Vertex AI, and Dataflow for unified data workflows.

Security and compliance teams get end-to-end policy controls and observability across data pipelines and ad targeting logic, essential for handling sensitive customer data.

Industry trends making this a must-have

Several converging trends highlight the strategic value of AI-native retail media infrastructure:

The decline of third-party cookies increases the strategic value of first-party data, but only for retailers who can activate it effectively for advertising personalization and targeting.

Generative AI adoption accelerates the need for vector-powered search and recommendation systems. As customers become accustomed to AI-powered experiences in other contexts, they expect similar sophistication in their shopping experiences.

Rising demand for personalization, shoppable content, and ad relevance creates pressure for real-time, context-aware systems that can adapt quickly to changing user behavior and inventory conditions.

ROI-driven advertising budgets put performance pressure on every aspect of the ad stack. Advertisers demand clear attribution and measurable results, pushing retailers to invest in infrastructure that provides closed-loop measurement and optimization capabilities.

The future of retail media is AI-native

AI-native advertising technology has evolved from competitive advantage to strategic necessity. Retailers that rely on legacy systems risk falling behind competitors who can deliver superior personalization, measurement, and scale.

The partnership between Moloco and Google Cloud demonstrates how specialized AI expertise can combine with cloud-native infrastructure to deliver capabilities that would be prohibitively expensive and complex for most retailers to build independently. The managed service model ensures that retailers can access cutting-edge AI capabilities while focusing their internal resources on customer experience and business growth.

Moloco represents the next generation of Retail Media Network (RMN) solutions, expanding what is possible for retailers with AI-powered technology. Google Cloud is proud to partner with Moloco as it scales a differentiated, AI-native retail media solution that delivers real business results. As the retail media landscape continues to evolve, partnerships like this will define which retailers can successfully monetize their first-party data at scale.

Get started

Moloco’s integration with Vertex AI Vector Search is available through the Google Cloud Marketplace. Explore the capabilities of Google Cloud’s Vertex AI Vector Search.

Visit the Google Cloud Marketplace to learn more about Moloco’s AI-native retail media platform, or go directly to the Moloco Commerce Media SaaS Solution.

Book a demo to see how Moloco can help scale your retail media business with AI-powered personalization and performance optimization.
