The demand for AI inference infrastructure is accelerating, with market spend expected to soon surpass investment in training the models themselves. This growth is driven by the demand for richer experiences, particularly through support for larger context windows and the rise of agentic AI. As organizations aim to improve user experience while optimizing costs, efficient management of inference resources is paramount.

According to an experimental study of large model inferencing, external key-value caches (KV Caches, also called “attention caches”) on high-performance storage such as Google Cloud Managed Lustre can reduce total cost of ownership (TCO) by up to 35%, allowing organizations to serve the same workload with 43% fewer GPUs by offloading prefill compute to I/O. In this blog, we explore the core challenges of managing long-context AI inference and detail how Google Cloud Managed Lustre provides the high-performance external storage solution required to achieve these significant cost and efficiency benefits.

About KV Cache

A KV Cache is a critical optimization for running Transformer-based large language models (LLMs) efficiently during the inference phase.

The key innovation of the Transformer was the complete elimination of sequential processing (recurrence). It achieved this by introducing the self-attention mechanism, which lets every element in a sequence compare itself to, and assess the relevance of, every other element in a single, global evaluation. Within this self-attention mechanism, the model computes Key (K) and Value (V) vectors for all preceding tokens in the sequence. To generate the next token during the inference phase, the model needs the K and V vectors of all the previous tokens.

This is where the KV Cache comes into play. The KV Cache stores these K and V vectors after the initial context processing (known as the “prefill” stage), thereby avoiding the redundant, costly re-computation of the context sequence when generating subsequent tokens. By eliminating this re-computation, the KV Cache vastly speeds up the overall inference process. While smaller caches can fit in high-bandwidth memory (HBM) or host DRAM — up to a few TBs of memory may be available in a single multi-accelerator server — managing a KV Cache for contexts across multiple concurrent users that exceed the memory capacity often requires external or hierarchical storage solutions.
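The saving can be illustrated with a toy, single-head attention layer. The sketch below is a minimal illustration, not a production implementation: the dimension `D`, the random weight matrices, and the function names are all assumptions made for the example. It decodes the same token sequence twice, once recomputing K and V for the whole prefix at every step and once appending to a cache, and counts how many K/V projections each approach performs.

```python
import math
import random

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def attend(q, keys, values):
    """Scaled dot-product attention of one query over all keys and values."""
    scale = math.sqrt(len(q))
    scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
    top = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [sum(w * v[i] for w, v in zip(weights, values)) / total
            for i in range(len(values[0]))]

random.seed(0)
D = 4  # toy embedding size (illustrative assumption)

def rand_matrix():
    return [[random.gauss(0, 1) for _ in range(D)] for _ in range(D)]

W_Q, W_K, W_V = rand_matrix(), rand_matrix(), rand_matrix()
tokens = [[random.gauss(0, 1) for _ in range(D)] for _ in range(6)]

def decode_without_cache(tokens):
    """Recompute K and V for the entire prefix at every step."""
    outputs, kv_projections = [], 0
    for t in range(1, len(tokens) + 1):
        prefix = tokens[:t]
        keys = [matvec(W_K, x) for x in prefix]
        values = [matvec(W_V, x) for x in prefix]
        kv_projections += len(prefix)
        outputs.append(attend(matvec(W_Q, prefix[-1]), keys, values))
    return outputs, kv_projections

def decode_with_cache(tokens):
    """Compute K and V once per token and keep them in a cache."""
    keys, values = [], []  # this pair of lists is the KV Cache
    outputs, kv_projections = [], 0
    for x in tokens:
        keys.append(matvec(W_K, x))
        values.append(matvec(W_V, x))
        kv_projections += 1
        outputs.append(attend(matvec(W_Q, x), keys, values))
    return outputs, kv_projections

uncached_out, uncached_work = decode_without_cache(tokens)
cached_out, cached_work = decode_with_cache(tokens)
print(uncached_work, cached_work)  # 21 projections versus 6
```

Both paths produce the same attention outputs; only the amount of K/V work differs, and the gap grows quadratically with sequence length when nothing is cached.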

These large contexts can make the “prefill” computation — the calculation that an AI model performs when processing a large context window — very expensive:

- For a large context of 100K or more tokens, the prefill computation may cause the time to first token (TTFT) to increase to tens of seconds.

- Prefill computation requires a high number of floating-point operations (FLOPs). KV Cache reuse saves these costs and makes additional resources available on the accelerator.
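To get a feel for the scale of the prefill cost, here is a rough back-of-the-envelope sketch. It relies on the common rule of thumb that a forward pass costs about 2 FLOPs per active model parameter per token, and it ignores the attention term that grows with context length, so it understates long-context cost. The function name, the 70-billion-parameter model, and the `cached_fraction` parameter are hypothetical values chosen for illustration, not figures from the study.

```python
def prefill_flops(active_params: float, context_tokens: int,
                  cached_fraction: float = 0.0) -> float:
    """Estimate prefill FLOPs with the ~2 FLOPs/parameter/token rule of thumb.

    cached_fraction is the share of context tokens whose K and V vectors
    are reused from a KV Cache and therefore need no prefill compute.
    """
    if not 0.0 <= cached_fraction <= 1.0:
        raise ValueError("cached_fraction must be between 0 and 1")
    tokens_to_compute = context_tokens * (1.0 - cached_fraction)
    return 2.0 * active_params * tokens_to_compute

# Hypothetical dense model with 70 billion parameters and a 100K-token context.
full = prefill_flops(70e9, 100_000)
reused = prefill_flops(70e9, 100_000, cached_fraction=0.75)
print(f"{full:.2e} FLOPs without reuse, {reused:.2e} FLOPs with 75% reuse")
```

Under these assumptions, reusing three quarters of the context removes three quarters of the estimated prefill FLOPs, which is the capacity that KV Cache reuse frees up on the accelerator.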

The growth of agentic AI is likely to make the challenge of managing a long context even greater. Unlike a simple chatbot, agentic AI is built for action. It moves beyond conversation to solve problems proactively, completing tasks on your behalf. To do this, it actively gathers context from a wide range of digital sources. For example, agentic AI may check live flight data, pull a customer’s history from a database, research topics on the web, or keep organized notes in its own files. It thereby builds a rich understanding of its environment, but this often increases context lengths and the associated KV Cache size.

The key to managing performance costs at scale is to ensure that the accelerator is utilized as fully as possible. High-performance, scale-out storage provides the higher throughput per accelerator that this requires, and therefore translates into lower resource requirements.

External KV Cache on Google Cloud Managed Lustre

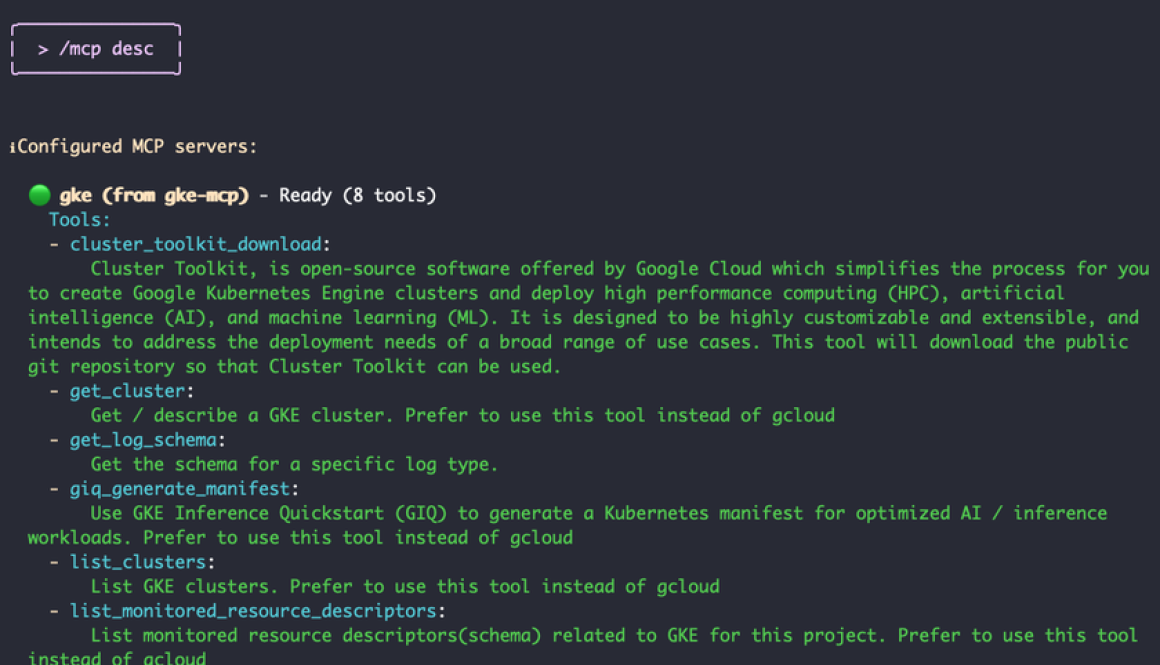

We believe that Google Cloud Managed Lustre should be your primary storage solution for external KV Cache. On GPUs, Lustre is assisted by locally attached SSDs. And on TPUs, where local SSDs are not available, Lustre’s role is even more central.

A recent LMCache blog post by Google’s Danna Wang, “LMCache on Google Kubernetes Engine: Boosting LLM Inference Performance with KV Cache on Tiered Storage,” demonstrates the foundational value of host-level offloading. Our Managed Lustre strategy is the next evolution of this host-offloading concept. While Local SSDs and CPU RAM are effective node-local tiers, they are fixed in size and cannot be shared. Managed Lustre provides a parallel file system to act as the massive, high-throughput external storage, making it a great solution for large-scale, multi-node, and multi-tenant AI inference workloads where the cache exceeds the capacity of the host machine.
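The tiering idea can be sketched as a small lookup path: check a size-limited node-local tier first, then fall back to a shared file-system tier that any node can read. The code below is a hypothetical illustration only. It is not the LMCache API, the class and method names are invented for the example, and a plain directory stands in for a Managed Lustre mount.

```python
import hashlib
from collections import OrderedDict
from pathlib import Path

class TieredKVCache:
    """Node-local memory tier in front of a shared file-system tier (sketch)."""

    def __init__(self, shared_dir, host_capacity):
        self.shared_dir = Path(shared_dir)  # stand-in for a shared mount
        self.shared_dir.mkdir(parents=True, exist_ok=True)
        self.host_capacity = host_capacity  # max entries held in host memory
        self.host = OrderedDict()           # key -> bytes, in LRU order

    @staticmethod
    def key_for(token_ids):
        """Derive a cache key from the token prefix."""
        joined = ",".join(map(str, token_ids)).encode()
        return hashlib.sha256(joined).hexdigest()

    def put(self, token_ids, kv_bytes):
        key = self.key_for(token_ids)
        (self.shared_dir / key).write_bytes(kv_bytes)  # write through to shared tier
        self._admit(key, kv_bytes)

    def get(self, token_ids):
        """Return (data, tier), where tier is 'host', 'shared', or 'miss'."""
        key = self.key_for(token_ids)
        if key in self.host:
            self.host.move_to_end(key)
            return self.host[key], "host"
        path = self.shared_dir / key
        if path.exists():
            data = path.read_bytes()
            self._admit(key, data)  # promote into the node-local tier
            return data, "shared"
        return None, "miss"  # caller must run prefill

    def _admit(self, key, data):
        self.host[key] = data
        self.host.move_to_end(key)
        while len(self.host) > self.host_capacity:
            self.host.popitem(last=False)  # evict the least recently used entry
```

Because every entry is written through to the shared directory, a second `TieredKVCache` instance pointed at the same path (for example, on another node) can serve a hit for a prefix it never computed, which is the property that fixed-size node-local tiers lack.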

Here’s an example of how the performance gains of Managed Lustre can reduce your TCO:

-

In an experiment with a 50K token context and a high cache hit rate (about 75%), using Managed Lustre improved total inference throughput by 75% and reduced mean time to first token by 44% compared to using KV Cache in host memory alone (further detail below).

-

TCO analysis yielded a 35% savings from using an external attention/KV Cache for a workload processing 1 million Tokens per Second (TPS) and leveraging A3-Ultra VMs and Managed Lustre, when compared to a workload leveraging no external storage.

-

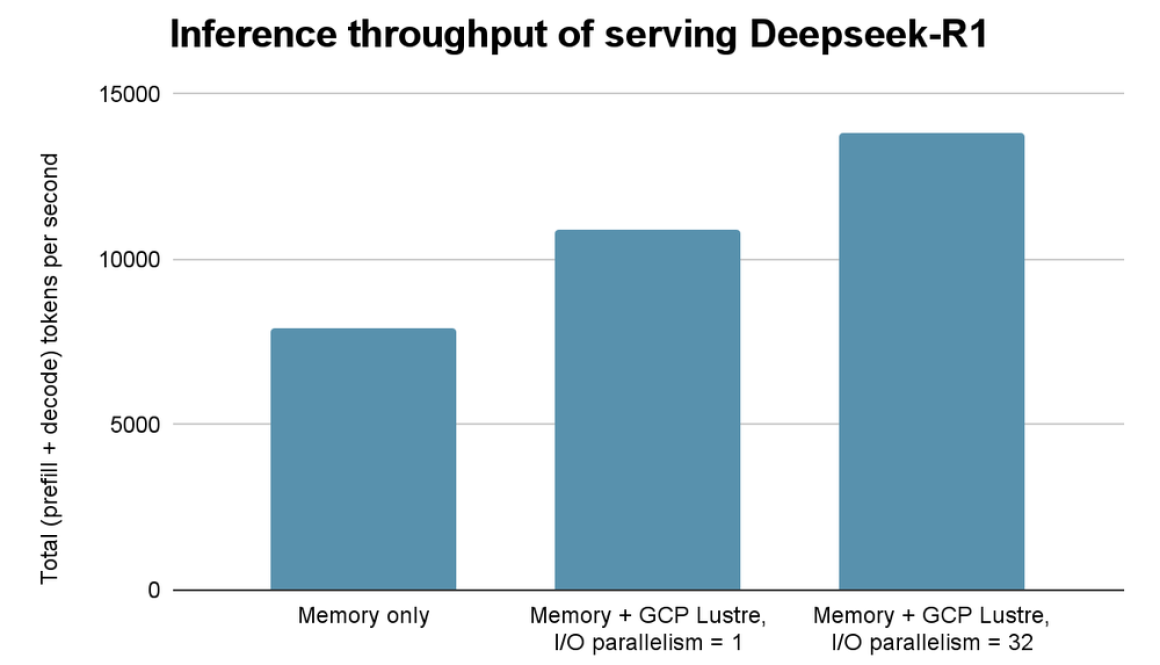

Our experiment demonstrated that with configuration tuning and an improvement in KV Cache software to adopt more I/O parallelism, Managed Lustre can substantially improve inference performance.

Total Cost of Ownership: Analysis

When evaluating a KV Cache solution, it’s critical to consider the TCO, which includes not just compute and storage costs but also operational expenses and potential savings. Our analysis shows that a high-performance, storage-backed KV Cache, like one built on Managed Lustre, provides a compelling TCO advantage compared to purely memory-based solutions.

Cost savings

After taking incremental storage costs into account, we project that the TCO for a file-system-backed KV Cache solution processing 1 million TPS is 35% lower than that of a memory-only solution. This makes it a more scalable and economically viable option for large-scale AI inference deployments.

The primary TCO benefit comes from a more efficient utilization of expensive compute resources. By offloading KV Cache to a high-performance storage solution, you can achieve a higher inference throughput per accelerator. This means that fewer accelerators are needed for the same workload: You can handle a specific number of queries per second with 43% fewer accelerators, resulting in direct cost savings.
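The relationship between throughput per accelerator and accelerator count is simple arithmetic. The sketch below assumes that the number of accelerators needed scales inversely with per-accelerator throughput; the post does not state that the 43% figure was derived this way, so treat it as a consistency check rather than the study's method. The function name is made up for the example.

```python
def accelerator_reduction(throughput_gain: float) -> float:
    """Fraction of accelerators saved for a fixed workload.

    throughput_gain is the relative improvement in throughput per
    accelerator, for example 0.75 for a 75% improvement.
    """
    return 1.0 - 1.0 / (1.0 + throughput_gain)

# The 75% throughput improvement reported earlier in this post.
saved = accelerator_reduction(0.75)
print(f"{saved:.1%} fewer accelerators")  # 42.9%, which rounds to 43%
```

Under that assumption, a 75% throughput improvement corresponds to roughly 43% fewer accelerators, which lines up with the figure quoted above.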

TCO model assumptions

The TCO calculation includes several key components:

- Storage costs (list price): These are the costs of Managed Lustre. Testing used the 1000 MB/s per TiB Performance Tier. The TCO model includes sufficient Lustre capacity (73 A3-Ultra machines, with 18 TiB Lustre capacity per machine) to hit the 1 million TPS target rate.

- Compute costs (list price): A3-Ultra VMs, each with 8x H200 GPUs and 8x 141 GB of HBM (spot prices will be lower).
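The sizing implied by these assumptions can be worked out directly. The short sketch below only multiplies the figures listed above; the variable names are illustrative, and the aggregate throughput is the nominal provisioned rate of the tier, not a measured result.

```python
MACHINES = 73                  # A3-Ultra machines in the TCO model
LUSTRE_TIB_PER_MACHINE = 18    # Managed Lustre capacity per machine
TIER_MBPS_PER_TIB = 1000       # 1000 MB/s per TiB performance tier
GPUS_PER_MACHINE = 8           # H200 GPUs per A3-Ultra machine
TARGET_TPS = 1_000_000         # target tokens per second

total_lustre_tib = MACHINES * LUSTRE_TIB_PER_MACHINE
nominal_mbps_per_machine = LUSTRE_TIB_PER_MACHINE * TIER_MBPS_PER_TIB
nominal_mbps_total = total_lustre_tib * TIER_MBPS_PER_TIB
total_gpus = MACHINES * GPUS_PER_MACHINE
tps_per_machine = TARGET_TPS / MACHINES
tps_per_gpu = TARGET_TPS / total_gpus

print(f"Lustre capacity: {total_lustre_tib} TiB")
print(f"Nominal Lustre throughput: {nominal_mbps_total} MB/s "
      f"({nominal_mbps_per_machine} MB/s per machine)")
print(f"GPUs: {total_gpus}")
print(f"Target rate: {tps_per_machine:.0f} TPS per machine, "
      f"{tps_per_gpu:.0f} TPS per GPU")
```

This works out to 1,314 TiB of Lustre capacity and 584 GPUs, or roughly 13,700 TPS per machine to reach the 1 million TPS target.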

Performance benchmarks

Our experiments demonstrated Google Cloud Managed Lustre’s ability to deliver the high-performance I/O that a state-of-the-art LLM requires. These experiments serve DeepSeek-R1 on a Google Cloud A3-Ultra machine (8x H200 GPUs; 8x 141 GB of HBM). The experiments ran a synthetic serving workload with a 50K token context and a high cache-hit rate (about 75%), with a total KV Cache size of about 3.4 TiB. The memory-only baseline uses 1 TiB of host memory for KV Cache. We experimented with two variants of the Managed Lustre configuration, one with high and one with low I/O parallelism. For high I/O parallelism, we utilized 32 I/O worker threads to read KV Cache data from Lustre in parallel.
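As a rough illustration of what reading with 32 I/O worker threads can look like, the sketch below fans chunk reads out over a thread pool. It is a generic pattern under stated assumptions, not the KV Cache software used in the experiment: the one-file-per-chunk layout and the function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

IO_WORKERS = 32  # matches the high-parallelism setting described above

def read_chunk(path):
    """Read one KV Cache chunk file (hypothetical one-file-per-chunk layout)."""
    return Path(path).read_bytes()

def load_kv_chunks(paths, workers=IO_WORKERS):
    """Read chunk files concurrently; results keep the order of `paths`."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(read_chunk, paths))
```

Threads suit this pattern in Python because blocking file reads release the interpreter lock, so many requests can be in flight against a parallel file system at once. Issuing the same reads one at a time would leave most of the available storage bandwidth idle.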

for the details.