The past decade of cloud native infrastructure has been defined by relentless change — from containerization and microservices to the rise of generative AI. Through every shift, Kubernetes has been the constant, delivering stability and a uniform, scalable operational model for both applications and infrastructure.

As Google Kubernetes Engine (GKE) celebrates its 10th anniversary, its symbiotic relationship with Kubernetes has never been more important. With the increasing demand for Kubernetes to handle AI at its highest scale, Google continues to invest in strengthening Kubernetes’ core capabilities, elevating all workloads — AI and non-AI alike. At KubeCon North America this year, we’re announcing major advancements that reflect our holistic three-pronged approach:

- Elevate core Kubernetes OSS for next-gen workloads – This includes proactively supporting the agentic wave with our new Kubernetes-native AgentSandbox APIs for security, governance, and isolation. We also recently added several capabilities to power inference workloads, such as the Inference Gateway API and Inference Perf. In addition, capabilities such as the Buffers API and HPA help address provisioning latency from different angles for all workloads.

- Provide GKE as the reference implementation for managed Kubernetes excellence – We continuously bring new features and best practices directly to GKE, translating our Kubernetes expertise into a fully managed, production-ready platform that integrates powerful Google Cloud services and provides unmatched scale and security. We are excited to announce the new GKE Agent Sandbox, and we recently announced GKE custom compute classes, GKE Inference Gateway, and GKE Inference Quickstart. To meet the demand for massive computation, we are also pushing the limits of scale, with support for 130k-node clusters. This year, we’re also thrilled to announce our participation in the new CNCF Kubernetes AI Conformance program, which simplifies AI/ML on Kubernetes with a standard for cluster interoperability and portability. GKE is already certified as an AI-conformant platform.

- Drive frameworks and reduce operational friction – We actively collaborate with the open-source community and partners to enhance support for new frameworks, including Slurm and Ray on Kubernetes. We recently announced optimized open-source Ray for GKE with RayTurbo in collaboration with Anyscale. More recently, we became a founding contributor to llm-d, an open-source project in collaboration with partners to create a distributed, Kubernetes-native control plane for high-performance LLM inference at scale.

Now let’s take a deeper look at the advancements.

Supporting the agentic wave

The Agentic AI wave is upon us. According to PwC, 79% of senior IT leaders are already adopting AI agents, and 88% plan to increase IT budgets in the next 12 months due to agentic AI.

Kubernetes already provides a robust foundation for deploying and managing agents at scale, yet the non-deterministic nature of agentic AI workloads introduces infrastructure challenges. Agents are increasingly capable of writing code, controlling computer interfaces and calling a myriad of tools, raising the stakes for isolation, efficiency, and governance.

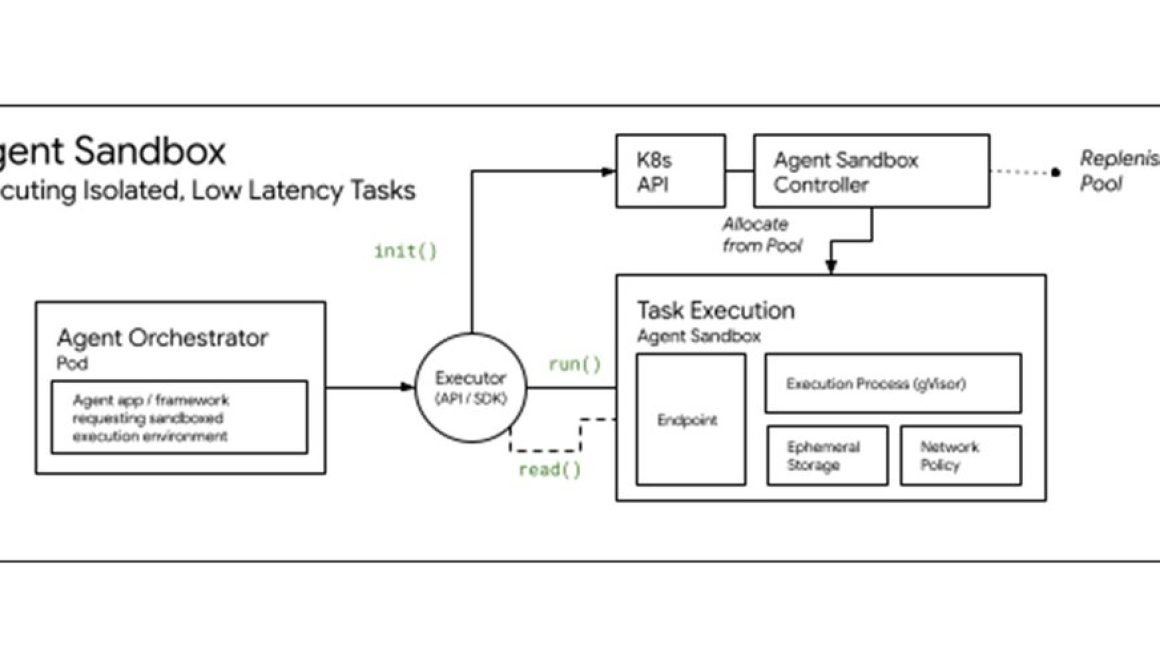

We’re addressing these challenges by evolving Kubernetes’ foundational primitives while providing high performance and compute efficiency for agents running on GKE. Today, we announced Agent Sandbox, a new set of capabilities for Kubernetes-native agent code execution and computer use environments, available in preview. Designed as open source from the get-go, Agent Sandbox relies on gVisor to isolate agent environments, so you can confidently execute LLM-generated code and interact with your AI agents.

For an even more secure and efficient managed experience, the new GKE Agent Sandbox enhances this foundation with built-in capabilities such as integrated sandbox snapshots and container-optimized compute. Agent Sandbox delivers sub-second latency for fully isolated agent workloads, up to a 90% improvement over cold starts. For more details, see the detailed announcement on supercharging agents on GKE.
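As a rough illustration of the gVisor isolation described above, the sketch below builds a Pod manifest that requests a sandboxed runtime through the standard Kubernetes `runtimeClassName` field. This is the generic RuntimeClass mechanism (GKE Sandbox exposes it under the name `gvisor`), not the Agent Sandbox API itself, whose resource types are not shown in this post. The function name, pod name, image, and resource limits are placeholders assumed for the example.

```python
import json


def sandboxed_pod(name: str, image: str, command: list[str]) -> dict:
    """Return a Pod manifest (as a dict) that requests the gVisor runtime."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # RuntimeClass name used by GKE Sandbox; other clusters may
            # register gVisor under a different name (assumption to verify).
            "runtimeClassName": "gvisor",
            # Untrusted, LLM-generated code should not get API credentials.
            "automountServiceAccountToken": False,
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "runner",
                    "image": image,
                    "command": command,
                    # Placeholder limits; size these for the real workload.
                    "resources": {"limits": {"cpu": "1", "memory": "512Mi"}},
                }
            ],
        },
    }


if __name__ == "__main__":
    pod = sandboxed_pod(
        "agent-run-1", "python:3.12-slim", ["python", "-c", "print('hello')"]
    )
    # kubectl accepts JSON manifests, e.g. `kubectl apply -f pod.json`.
    print(json.dumps(pod, indent=2))
```

A manifest like this only takes effect on nodes where a gVisor RuntimeClass is installed; on a cluster without one, the pod would be rejected rather than run unsandboxed.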

Unmatched scale for the AI gigawatt era

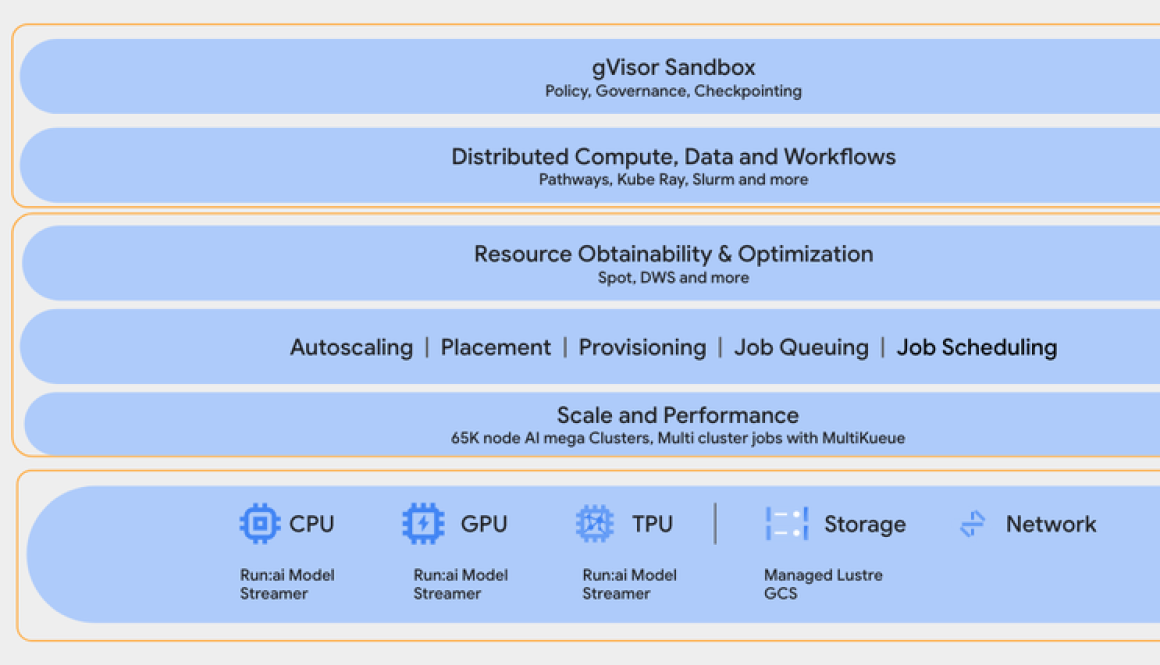

In this ‘Gigawatt AI era,’ foundational model creators are driving demand for unprecedented computational power. Based on internal testing of our experimental-mode stack, we are excited to share that we used GKE to create the largest known Kubernetes cluster, with 130,000 nodes.

At Google Cloud, we’re also focusing on single-cluster scalability for tightly coupled jobs, developing multi-cluster orchestration capabilities for job sharding (e.g., MultiKueue), and designing new approaches for dynamic capacity reallocation, all while extending open-source Kubernetes APIs to simplify AI platform development and scaling. We are investing heavily in the open-source ecosystem of tools behind AI at scale (e.g., Kueue, JobSet, etcd), while making GKE-specific integrations with our data centers to offer the best performance and reliability (e.g., running the GKE control plane on Spanner). Finally, we’re excited to open-source our Multi-Tier Checkpointing (MTC) solution, designed to improve the efficiency of large-scale AI training jobs by reducing the time lost to hardware failures and slow recovery from saved checkpoints.

Better compute for every workload

Our decade-long commitment to Kubernetes is rooted in making it more accessible and efficient for every workload. However, through the years, one key challenge has remained: when using autoscaling, provisioning new nodes took several minutes — not fast enough for high-volume, fast-scale applications. This year, we addressed this friction head-on, with a variety of enhancements in support of our mission: to provide near-real-time scalable compute capacity precisely when you need it, all while optimizing price and performance.

Autopilot for everyone

We introduced the container-optimized compute platform, a completely reimagined autoscaling stack for GKE Autopilot. As the recommended mode of operation, Autopilot fully automates your node infrastructure management and scaling, with dramatic performance and cost implications. As Jia Li, co-founder at LiveX AI, shared, “LiveX AI achieves over 50% lower TCO, 25% faster time-to-market, and 66% lower operational cost with GKE Autopilot.” And with the recent GA of Autopilot compute classes for Standard clusters, we made this hands-off experience accessible to more developers, allowing you to adopt Autopilot on a per-workload basis.

Tackling provisioning latency from every angle

We introduced faster concurrent node pool auto-provisioning, making operations asynchronous and highly parallelized. This simple change dramatically accelerates cluster scaling for heterogeneous workloads, improving deployment latency many times over in our benchmarks. Then, for demanding scale-up needs, the new GKE Buffers API (OSS) allows you to request a buffer of pre-provisioned, ready-to-use nodes, making compute capacity available almost instantaneously. And once the node is ready, the new version of GKE container image streaming gets your applications running faster by allowing them to start before the entire container image is downloaded, a critical boost for large AI/ML and data-processing workloads.

Non-disruptive autoscaling to improve resource utilization

The quest for speed extends to workload-level scaling.

- The HPA Performance Profile is now enabled by default on new GKE Standard clusters. This brings massive scaling improvements, including support for up to 5,000 HPA objects and parallel processing, for faster, more consistent horizontal scaling.

- We’re tackling disruptions in vertical scaling with the preview of VPA with in-place pod resize, which allows GKE to automatically resize CPU and memory requests for your containers, often without needing to recreate the pod.
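For readers less familiar with how horizontal scaling arrives at a replica count, the core calculation documented for the Kubernetes HorizontalPodAutoscaler can be sketched in a few lines. The function below is an illustration only: the 0.1 tolerance matches the documented default, while the function name, the min/max bounds, and the sample numbers are assumptions for the example. It does not model the HPA Performance Profile or any GKE-specific behavior.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    tolerance: float = 0.1,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Approximate the documented HPA rule:

    desired = ceil(current_replicas * current_metric / target_metric)
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: skip scaling to avoid flapping.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the bounds configured on the autoscaler.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU against a 60% target.
    print(desired_replicas(4, 90, 60))  # 6
    # 63% against a 60% target is within tolerance, so no change.
    print(desired_replicas(4, 63, 60))  # 4
```

Vertical scaling works on a different axis: instead of changing the replica count, it adjusts the CPU and memory requests of each container.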

Dynamic hardware efficiency

Finally, our commitment to dynamic efficiency extends to hardware utilization. GKE users now have access to:

-

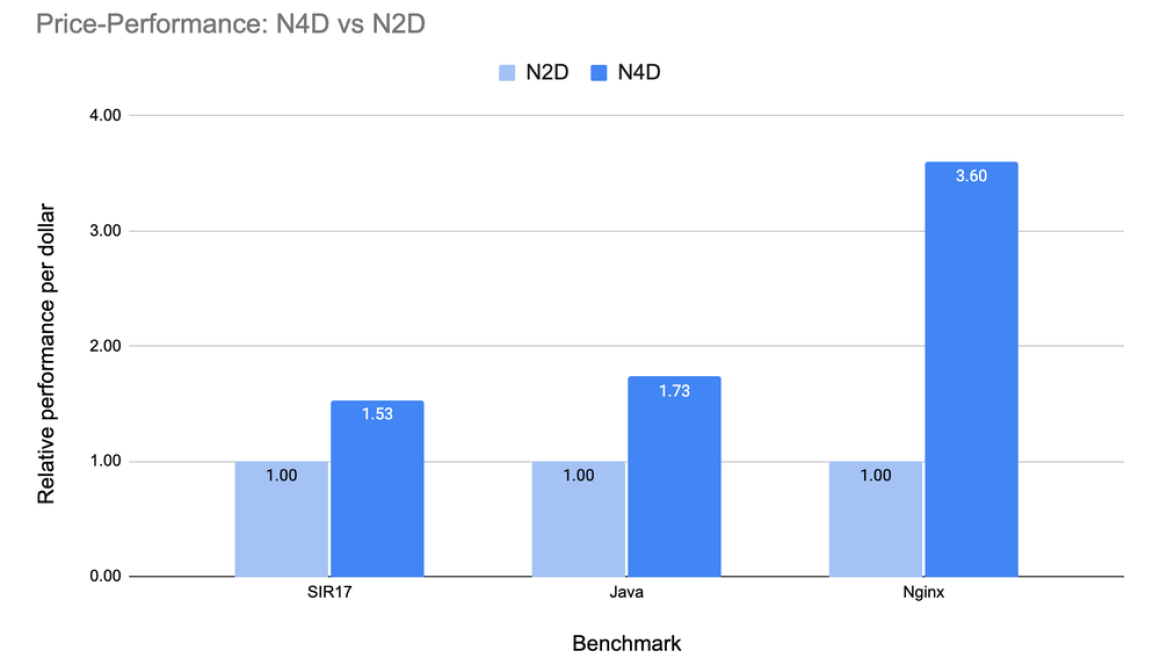

New N4A VMs based on Google Axion Processors (now in preview) and N4D VMs based on 5th Gen AMD EPYC Processors (now GA). Both support Custom Machine Types (CMT), letting you create right-sized nodes that are matched to your workloads.

-

New GKE custom compute classes, allowing you to define a prioritized list of VM instance types, so your workloads automatically use the newest, most price-performant options with no manual intervention.
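The prioritized-list idea behind custom compute classes can be pictured as a simple first-match fallback. The sketch below is a conceptual model only, not the GKE implementation or its resource schema: the `Priority` type, the `pick_capacity` function, and the availability set are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Priority:
    """One entry in a prioritized list of node options (hypothetical type)."""

    machine_family: str
    spot: bool = False


def pick_capacity(
    priorities: list[Priority], available: set[tuple[str, bool]]
) -> Optional[Priority]:
    """Return the highest-priority option that currently has capacity."""
    for option in priorities:
        if (option.machine_family, option.spot) in available:
            return option
    # Nothing in the list is obtainable right now.
    return None


if __name__ == "__main__":
    # Preference order: newest families first, then an older Spot fallback.
    priorities = [Priority("n4a"), Priority("n4d"), Priority("n2", spot=True)]
    # Pretend N4A capacity is exhausted in this zone (made-up state).
    available = {("n4d", False), ("n2", True)}
    print(pick_capacity(priorities, available))
    # Priority(machine_family='n4d', spot=False)
```

The design point is that the preference order is declared once, so workloads pick up a newer or cheaper option as soon as it becomes available, without edits to each workload.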

A platform to power AI Inference

The true challenge of generative AI inference: how do you serve billions of tokens reliably, at lightning speed, and without bankrupting the organization?

Unlike serving web applications, serving LLMs is both stateful and computationally intensive. To address this, we have driven extensive open-source investments in Kubernetes, including the Gateway API Inference Extension for LLM-aware routing; the inference performance project, which provides a benchmarking standard for detailed model performance insights on accelerators and for HPA scaling metrics and thresholds; and Dynamic Resource Allocation (developed in collaboration with Intel and others), which streamlines and automates the allocation and scheduling of GPUs, TPUs, and other devices to pods and workloads within Kubernetes. We also formed the llm-d project with Red Hat and IBM to create a Kubernetes-native distributed inference stack that optimizes for the “time to reach SOTA architectures.”

On the GKE side, we recently announced the general availability of GKE Inference Gateway, a Kubernetes-native solution for serving AI workloads. It is available with two workload-specific optimizations:

- LLM-aware routing for applications like multi-turn chat, which routes requests to the same accelerators to use cached context, avoiding latency spikes

- Disaggregated serving, which separates the “prefill” (prompt processing) and “decode” (token generation) stages onto separate, optimized machine pools

As a result, GKE Inference Gateway now achieves up to 96% lower Time-to-First-Token (TTFT) latency and up to 25% lower token costs at peak throughput when compared to other managed Kubernetes services.
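To make the cached-context idea behind LLM-aware routing concrete, here is a deliberately simplified sketch of prefix affinity: requests that share the same leading text (for example, the system prompt and earlier turns of one conversation) are sent to the replica that served that prefix before, and unseen prefixes go to the least-loaded replica. This is not how GKE Inference Gateway is implemented; the class name, the 256-character prefix window, and the request counter used as a load signal are all assumptions for illustration.

```python
import hashlib


def prefix_key(prompt: str, prefix_chars: int = 256) -> str:
    """Hash the leading characters of a prompt (window size is an assumption)."""
    return hashlib.sha256(prompt[:prefix_chars].encode("utf-8")).hexdigest()


class PrefixAffinityRouter:
    """Toy router: sticky by prompt prefix, least-loaded for new prefixes."""

    def __init__(self, replicas: list[str]) -> None:
        if not replicas:
            raise ValueError("at least one replica is required")
        self.replicas = list(replicas)
        # Requests routed so far; a stand-in for a real load signal.
        self.load = {replica: 0 for replica in self.replicas}
        # Which replica is assumed to hold cached context for a prefix.
        self.owner: dict[str, str] = {}

    def route(self, prompt: str) -> str:
        key = prefix_key(prompt)
        replica = self.owner.get(key)
        if replica is None:
            # Unseen prefix: pick the least-loaded replica and remember it.
            replica = min(self.replicas, key=lambda r: self.load[r])
            self.owner[key] = replica
        self.load[replica] += 1
        return replica


if __name__ == "__main__":
    router = PrefixAffinityRouter(["pod-a", "pod-b"])
    history = "system: you are a helpful assistant. " * 10  # longer than 256 chars
    first = router.route(history + "user: hello")
    second = router.route(history + "user: hello\nassistant: hi\nuser: next question")
    print(first == second)  # True: both turns share the same leading text
```

A production router would also weigh signals such as queue depth and cache utilization, and would need eviction for the prefix table; those are omitted here to keep the affinity idea visible.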

Startup latency for AI inference servers is a consistent challenge, with large models taking tens of minutes to start. Today, we’re introducing GKE Pod Snapshots, which drastically improves startup latency by enabling CPU and GPU workloads to be restored from a memory snapshot. GKE Pod Snapshots reduces AI inference startup time by as much as 80%, loading 70B-parameter models in just 80 seconds and 8B-parameter models in just 16 seconds.

No discussion of inference is complete without talking about the complexity, cost, and difficulty of deploying production-grade AI infrastructure. GKE Inference Quickstart provides a continuous, automated benchmarking system kept up to date with the latest accelerators in Google Cloud, the latest open models, and inference software. You can use these benchmarked profiles to save significant time when qualifying, configuring, and deploying, as well as when monitoring inference-specific performance metrics and dynamically fine-tuning your deployment. You can find this data in this Colab notebook.

Here’s to the next decade of Kubernetes and GKE

As GKE celebrates a decade of foundational work, we at Google are proud to help lead the future, and we know it can only be built together. Kubernetes would not be where it is today without the efforts of its contributor community. That includes everyone from members writing foundational new features to those doing the essential, daily work — the “chopping wood and carrying water” — that keeps the project thriving.

We invite you to explore new capabilities, learn more about exciting announcements such as Ironwood TPUs, attend our deep-dive sessions, and join us in shaping the future of open-source infrastructure.
