
Modern genomics has made remarkable progress in automating the early stages of analysis. Sequencing technology continues to improve in both cost and throughput, and variant calling pipelines reliably identify millions of genetic differences relative to a reference genome. But interpreting those variants still runs into a bottleneck: iterative data exploration.

When Exploration Becomes the Bottleneck

While the initial processing is increasingly streamlined, variant interpretation remains time-consuming. The iteration cycle looks like this:

A researcher notices several variants in BRCA1 (a cancer-related gene) and wants to compare their frequencies across European vs. Asian populations. That analytical question triggers a context switch from analysis mode to coding mode. Now they're writing a script to parse the genomic data file, query gnomAD (a population frequency database) for each variant, aggregate results by ancestry, and generate a comparison plot. By the time the plot appears, the back-and-forth between interpretation and implementation has already broken their flow of analytical thinking.

The cycle repeats with each new question. Want to filter for variants that are both rare in the population and predicted to damage protein function in genes affecting the heart? That requires scripting dataframes with multiple conditional filters across different annotation sources—another context switch that breaks analytical flow.

This pattern, where the time between asking a question and seeing results limits hypothesis testing, constrains the iterative exploration that drives genomic discovery. It’s a bottleneck that tools like DeepVariant have highlighted rather than solved—we’ve automated finding variants but not understanding them.

What if you could just ask?

Conversation as the Interface

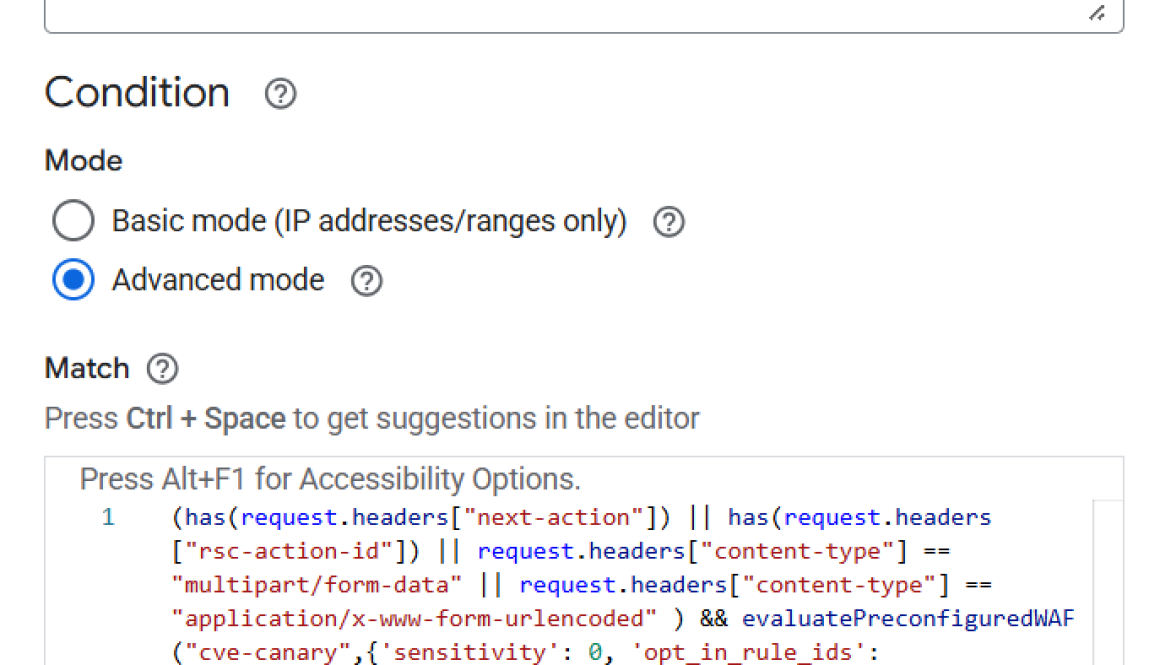

Working with the Google Research Genomics team, we explored whether multi-agent systems could transform this final mile. We saw potential in combining Google’s Agent Development Kit (ADK) with genomic workflows to eliminate the constant context-switching between analysis and coding.

Here's how it works in practice. A researcher analyzing the HG002 genome (7.8 million variants) asks:

“Show me pathogenic variants in cardiovascular genes”

Five seconds later, a filtered list appears, with population frequencies from gnomAD already integrated.

The researcher notices APOB in the output and immediately follows up:

“Compare APOB across ethnic populations”

Three seconds later, a heatmap visualization materializes showing allele frequencies across 8 ancestries.

“What’s the clinical significance?”

Two seconds later, a comprehensive breakdown appears covering APOB’s role in lipid metabolism, associated conditions such as Familial Hypobetalipoproteinemia and Familial Hypercholesterolemia, and relevant clinical context from configured knowledge sources (in our implementation, NCBI Gene and PubMed).

No scripting. No context switching. Just questions and instant answers.

This is the result of combining Google’s ADK, Gemini, and Cloud infrastructure to rethink how we interact with complex scientific data.

Proving It Works: The APOB Spike Test

To validate accuracy, we designed a controlled experiment that would satisfy the clinical-grade standards the Google Genomics team maintains across all their tools. We recognized that conversational convenience couldn’t compromise precision—the system must detect single-variant changes with minimal or no false positives. In clinical genomics, a single pathogenic variant can determine whether a patient needs immediate medical intervention, making false positives (which could trigger unnecessary treatments or family screening) and false negatives (which could miss actionable conditions) equally problematic.

Starting with HG002 (a Genome in a Bottle consortium reference sample), we deliberately inserted a known pathogenic variant at a low variant allele fraction (VAF) and verified that the system could detect it among 7.8 million background variants, like finding a specific grain of sand on a beach.

| Genome | Total variants found | Details | Expected result |
|---|---|---|---|
| HG002_v1.0 (original reference, 2020) | 7,882,234 | NovaSeq PCR-free 30x coverage, DeepVariant v1.0 | No APOB variants |
| HG002_v1.0_pathogenic (deliberately spiked) | 7,882,235 (+1) | Artificially inserted 2:21006087:C>T in the APOB gene, associated with Familial Hypercholesterolemia | Detect the single spike |
| HG002_v1.9 (newer caller, 2025) | 8,861,146 (~979K more) | Same sample/coverage, DeepVariant v1.9 (improved algorithm) | No APOB variants |

The Test

The validation required running the standard genomic analysis pipeline—VEP annotation (predicting variant effects), ClinVar integration (checking known pathogenic variants), gnomAD population frequency queries (retrieving ancestry-specific allele frequencies), and clinical report generation. While the pipeline itself runs automatically, exploring and querying results traditionally requires writing scripts for each question. With our conversational system, we simply asked: “Are there any APOB results?”

The Results

The spike-in test validated three critical capabilities. First, it demonstrated single-variant precision. Starting with HG002’s 7,882,234 variants (the original reference), the system correctly reported no APOB findings. When we inserted a single pathogenic variant (2:21006087:C>T), the system detected it immediately—finding our needle in a 7.8-million-variant haystack. Beyond detection, it correctly pulled the variant’s ClinVar pathogenicity classification (Pathogenic/Likely_pathogenic for Familial Hypercholesterolemia) and accurate population frequencies from gnomAD.
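As an independent cross-check on a spike-in like this (not the system's own detection path, which the post doesn't show), a plain set difference between the baseline and spiked VCFs should surface exactly one new variant. The sketch below assumes uncompressed, tab-separated VCF text and strips `chr` prefixes so keys match the `2:21006087:C>T` notation used in the table; the function names are hypothetical.

```python
from typing import Iterable, Set


def variant_keys(vcf_lines: Iterable[str]) -> Set[str]:
    """Return a 'CHROM:POS:REF>ALT' key for every ALT allele in the VCF data lines."""
    keys: Set[str] = set()
    for line in vcf_lines:
        if not line.strip() or line.startswith("#"):
            continue  # skip meta-information, header and blank lines
        fields = line.rstrip("\n").split("\t")
        chrom, pos, ref, alts = fields[0], fields[1], fields[3], fields[4]
        chrom = chrom.removeprefix("chr")  # normalize "chr2" to "2"
        for alt in alts.split(","):  # multi-allelic sites list ALTs comma-separated
            keys.add(f"{chrom}:{pos}:{ref}>{alt}")
    return keys


def spiked_variants(baseline_lines: Iterable[str], spiked_lines: Iterable[str]) -> Set[str]:
    """Variants present in the spiked VCF but absent from the baseline."""
    return variant_keys(spiked_lines) - variant_keys(baseline_lines)


# Toy example: a one-variant baseline plus the APOB spike from the table
baseline = [
    "##fileformat=VCFv4.2",
    "#CHROM\tPOS\tID\tREF\tALT",
    "chr1\t12345\t.\tA\tG",
]
spiked = baseline + ["chr2\t21006087\t.\tC\tT"]
print(spiked_variants(baseline, spiked))  # {'2:21006087:C>T'}
```

On real genomes, the same diff over millions of lines is a single linear pass per file, which makes it a cheap sanity check to run alongside any conversational query.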

The test also explored caller independence. The system handled two versions of DeepVariant (v1.0 and v1.9) without modification. Version 1.0 identified 7,882,234 variants, while version 1.9's improved sensitivity found 8,861,146, nearly a million more. In both cases, the system correctly reported no APOB findings, demonstrating compatibility across versions of the same caller. While this demonstrates robustness within the DeepVariant family, testing with different variant callers (e.g., GATK, FreeBayes) would be needed to establish broader caller independence.

Finally, processing 8.8 million variants from v1.9 with the same accuracy as 7.8 million validated that the architecture scales. No false positives appeared in either search space in this validation test, and the conversational layer performed consistently regardless of the underlying data volume, though broader testing across variant types and query patterns would further validate these performance characteristics.

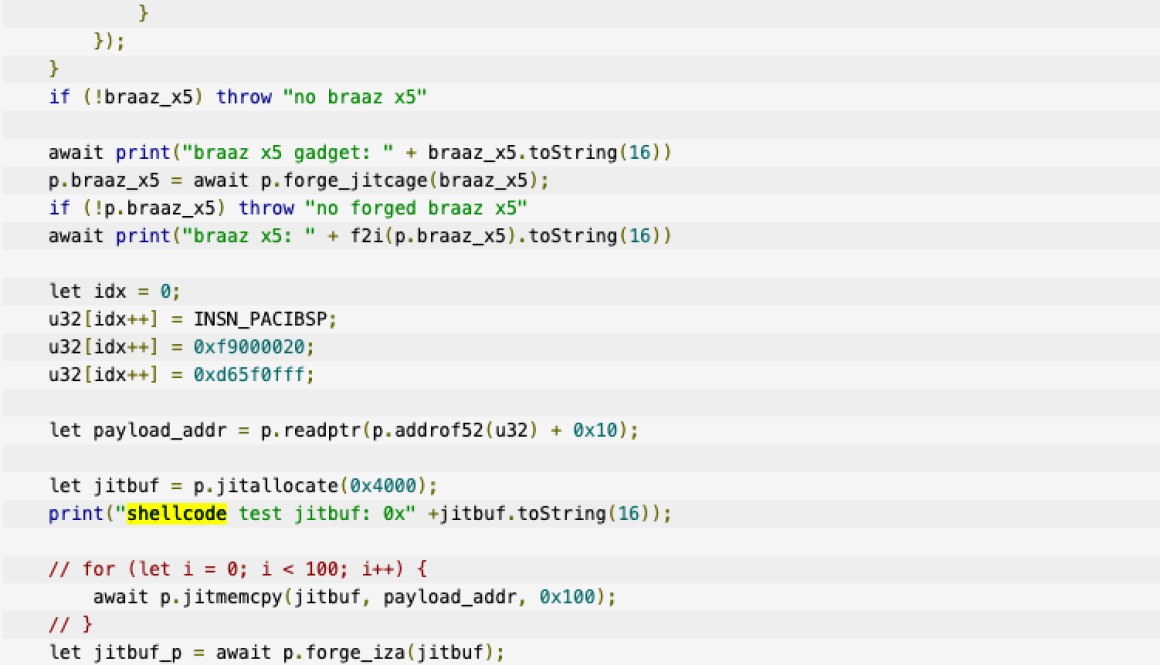

The spike test revealed something else interesting about how we handle scale. Remember those 7.8 million variants? In a hypothetical clinical deployment, when analyzing patient data, the focus would typically be on ACMG secondary findings—medically actionable genes that require immediate attention.

Based on what you’re looking for, the system adapts its approach:

```python
if analysis_mode == "clinical":
    # ACMG SF v3.3: 84 genes only
    variants_to_annotate = filter_variants_to_acmg_genes(variants)
else:
    # All 7.8M variants
    variants_to_annotate = variants
```

Instead of searching the entire beach for our specific grain of sand, clinical mode searches just the lifeguard zones—the 84 genes where findings change medical care. Research mode (analyzing all variants genome-wide) still searches everywhere. Both detected our APOB spike perfectly, but clinical mode did it by examining only variants in ACMG genes—typically less than 0.01% of the total. That’s not just an optimization; it’s how clinical genomics is practiced—prioritizing genes with established clinical actionability for patient care.
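The snippet above calls `filter_variants_to_acmg_genes` without showing it. One possible shape, sketched here under stated assumptions, filters by genomic interval, since the filter runs before VEP has attached gene symbols. The intervals are rough, illustrative placeholders for two of the 84 ACMG SF v3.3 genes, not authoritative coordinates; a real deployment would load the full gene list and derive intervals from a gene annotation source. The `Variant` type and the `select_variants_to_annotate` wrapper are hypothetical.

```python
from typing import Dict, Iterable, List, NamedTuple, Tuple


class Variant(NamedTuple):
    chrom: str  # e.g. "2" (no "chr" prefix in this sketch)
    pos: int    # 1-based position, as in VCF
    ref: str
    alt: str


# Hypothetical gene intervals (chrom, start, end), 1-based inclusive.
# Illustrative placeholders only, NOT authoritative gene bounds.
ACMG_GENE_INTERVALS: Dict[str, Tuple[str, int, int]] = {
    "APOB": ("2", 21_000_000, 21_050_000),
    "LDLR": ("19", 11_080_000, 11_140_000),
}


def filter_variants_to_acmg_genes(
    variants: Iterable[Variant],
    intervals: Dict[str, Tuple[str, int, int]] = ACMG_GENE_INTERVALS,
) -> List[Variant]:
    """Keep only variants that fall inside any ACMG gene interval."""
    # Group intervals by chromosome so each variant is checked only
    # against genes on its own chromosome.
    by_chrom: Dict[str, List[Tuple[int, int]]] = {}
    for chrom, start, end in intervals.values():
        by_chrom.setdefault(chrom, []).append((start, end))
    return [
        v for v in variants
        if any(start <= v.pos <= end for start, end in by_chrom.get(v.chrom, []))
    ]


def select_variants_to_annotate(variants: Iterable[Variant], analysis_mode: str) -> List[Variant]:
    if analysis_mode == "clinical":
        return filter_variants_to_acmg_genes(variants)
    return list(variants)  # research mode: every variant


variants = [Variant("2", 21006087, "C", "T"), Variant("1", 1_000_000, "A", "G")]
print(select_variants_to_annotate(variants, "clinical"))
# [Variant(chrom='2', pos=21006087, ref='C', alt='T')]
```

For 84 genes, a per-chromosome linear scan is adequate; a sorted interval list with binary search would only matter for much larger panels.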

The Architecture: Two-Phase Design

The system follows a pattern common to many scientific domains: separate expensive upfront computation from interactive exploration. You do heavy lifting once (minutes to hours), then enable rapid iteration (seconds per query).

Think of analyzing a whole genome, processing mass spectrometry data, running climate simulations, or reconstructing medical images. The computational work happens first, then researchers want to explore results interactively. But traditional workflows often require scripting for each new exploration step.

Our approach applies multi-agent conversational AI to this pattern in genomics:

Phase 1: Computational Analysis (Runs Once)

The system performs standard tertiary genomic analysis—VEP annotation, ClinVar lookups, and gnomAD frequency queries. What’s different is that agents orchestrate and track these workflows:

VEP Annotation (~60 minutes)

- All 7.8M variants annotated with gene symbols and functional predictions
- Runs on Google Kubernetes Engine (GKE) with parallelization across available cores
- Uses Persistent Disk for VEP cache (reduces time from 6+ hours to ~1 hour)
- Triggered by InitiationPipeline agent, managed via Cloud Tasks

Knowledge Integration (~5-8 minutes)

- ClinVar: Pathogenicity classifications from local cache
- gnomAD: Population frequencies via BigQuery across 8 ancestries
- Clinical Assessment: Gemini generates actionable summaries from patterns
- Orchestrated by ReportPipeline agent upon VEP completion

The system stores all results (annotated variants, clinical findings) in Cloud Storage through ADK's GcsArtifactService, so they can be queried instantly during Phase 2. This provides automatic versioning and persistence across pod restarts.

Phase 2: Interactive Exploration (Real-Time)

Queries leverage both ADK artifacts and real-time data retrieval. Gene-specific questions load stored annotations from the GcsArtifactService (instant), while population frequency comparisons may trigger live BigQuery queries against gnomAD—but BigQuery’s performance makes these feel instantaneous (sub-5 seconds). Visualizations are generated on demand by the QueryAgent.

Think of Phase 1 as indexing a massive book. It takes time upfront, but once complete, you can look up anything instantly. This separation enables conversational speed without sacrificing computational depth.
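The indexing analogy can be made concrete with a small sketch: Phase 1 pays for a single linear pass that groups annotated records by gene, and each Phase 2 question then becomes an average constant-time dictionary lookup instead of a rescan of millions of variants. The record fields (`gene`, `variant`) and function names are assumptions for illustration; in the described system, the annotated records live in GcsArtifactService artifacts.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Mapping


def build_gene_index(annotated: Iterable[Mapping]) -> Dict[str, List[Mapping]]:
    """Phase 1 (run once): group annotated variant records by gene symbol."""
    index: Dict[str, List[Mapping]] = defaultdict(list)
    for record in annotated:
        index[record["gene"].upper()].append(record)
    return dict(index)


def query_gene(index: Dict[str, List[Mapping]], gene: str) -> List[Mapping]:
    """Phase 2 (per question): average O(1) lookup, no rescan of all variants."""
    return index.get(gene.upper(), [])


# Toy records for illustration only
annotated = [
    {"gene": "APOB", "variant": "2:21006087:C>T"},
    {"gene": "GENE_X", "variant": "1:100:A>G"},
]
index = build_gene_index(annotated)
print(len(query_gene(index, "apob")))  # 1
```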

The Google AI Stack That Powered It

Building a production-grade conversational genomics system required orchestrating multiple Google Cloud services. Here’s what each piece contributes and why it mattered:

Google ADK

ADK enabled us to structure the system as coordinated specialists rather than a monolithic pipeline. The key was using different agent types for different problems.

```python
from google.adk.agents import LlmAgent, SequentialAgent

# intake_agent, vep_start_agent, completion_pipeline, report_pipeline and
# query_agent are defined elsewhere in the application.

# Critical workflows use sequential execution for reliability.
# Sub-agents must exist before the coordinator that references them.
initiation_pipeline = SequentialAgent(
    name="InitiationPipeline",
    sub_agents=[intake_agent, vep_start_agent],
)

# Root coordinator uses an LLM for flexible routing
coordinator = LlmAgent(
    name="GenomicCoordinator",
    model="gemini-2.5-flash",
    description="Routes variant analysis requests based on user intent",
    sub_agents=[
        initiation_pipeline,
        completion_pipeline,
        report_pipeline,
        query_agent,
    ],
)
```

This hybrid approach matters because LLM agents excel at understanding ambiguous user intent and making routing decisions, while sequential agents excel at deterministic workflows requiring exact execution order. Use LLMs for flexibility, use sequential workflows for reliability.

When a user says “analyze this VCF for clinical findings,” the LLM coordinator infers that “clinical” implies ACMG secondary findings analysis and routes the request to InitiationPipeline, which executes a deterministic sequence: validate VCF → parse variants → determine analysis mode → create background job. There is no guesswork in the critical path.
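The deterministic sequence can be sketched in plain Python to show why it is predictable: each step runs in a fixed order and fails loudly on bad input, with no model output deciding what happens next. This is not ADK code. Every function name here is hypothetical, the VCF checks are deliberately minimal, the keyword-based mode check stands in for the LLM coordinator's intent handling, and `create_background_job` stands in for enqueueing a Cloud Tasks job.

```python
import uuid
from typing import Dict, List, Tuple

Variant = Tuple[str, int, str, str]  # (chrom, pos, ref, alt)


def validate_vcf(lines: List[str]) -> None:
    """Minimal structural checks; a real validator would check much more."""
    if not lines or not lines[0].startswith("##fileformat=VCF"):
        raise ValueError("first line must be a ##fileformat=VCF declaration")
    if not any(line.startswith("#CHROM") for line in lines):
        raise ValueError("missing #CHROM header line")


def parse_variants(lines: List[str]) -> List[Variant]:
    variants: List[Variant] = []
    for line in lines:
        if line.startswith("#") or not line.strip():
            continue  # header or blank line
        fields = line.rstrip("\n").split("\t")
        variants.append((fields[0], int(fields[1]), fields[3], fields[4]))
    return variants


def determine_analysis_mode(user_request: str) -> str:
    # Keyword stand-in; in the described system the LLM coordinator interprets intent.
    return "clinical" if "clinical" in user_request.lower() else "research"


def create_background_job(variants: List[Variant], mode: str) -> Dict:
    # Stand-in for enqueueing a Cloud Tasks job and recording it in Firestore.
    return {"task_id": str(uuid.uuid4()), "mode": mode,
            "n_variants": len(variants), "status": "queued"}


def run_initiation_pipeline(lines: List[str], user_request: str) -> Dict:
    """Fixed order: validate -> parse -> determine mode -> create job."""
    validate_vcf(lines)
    variants = parse_variants(lines)
    mode = determine_analysis_mode(user_request)
    return create_background_job(variants, mode)
```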

Google Kubernetes Engine (GKE)

Variant annotation is computationally expensive. VEP (Variant Effect Predictor) processes 7.8 million variants against reference databases. We deployed on n2-highmem-32 nodes (32 vCPU, 256 GB RAM) to parallelize aggressively:

```yaml
resources:
  requests:
    cpu: "30"
    memory: "120Gi"
  limits:
    cpu: "32"
    memory: "240Gi"
volumeMounts:
  - name: vep-cache
    mountPath: /mnt/cache
    readOnly: true
```

By mounting a 100GB Persistent Disk with pre-downloaded VEP reference data, we eliminated network bottlenecks during annotation. VEP time dropped from 6+ hours to ~1 hour. GKE’s ability to mount persistent volumes to pods made this straightforward.
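For reference, a VEP invocation that reads only the locally mounted cache might be assembled as below. The flags shown (`--cache`, `--offline`, `--dir_cache`, `--fork`, `--vcf`, `--symbol`, `--assembly`) are standard VEP command-line options, but the post doesn't give the exact flag set the team used; the function names and the default of 30 forks (chosen to match the 30-CPU request above) are assumptions.

```python
import subprocess
from typing import List


def build_vep_command(input_vcf: str, output_vcf: str, forks: int,
                      cache_dir: str = "/mnt/cache",
                      assembly: str = "GRCh38") -> List[str]:
    """Assemble a VEP command line that uses only the local cache."""
    return [
        "vep",
        "--input_file", input_vcf,
        "--output_file", output_vcf,
        "--vcf",                   # write annotated output as VCF
        "--cache", "--offline",    # local cache only, no remote database lookups
        "--dir_cache", cache_dir,  # the read-only Persistent Disk mount
        "--assembly", assembly,
        "--fork", str(forks),      # number of parallel worker processes
        "--symbol",                # include gene symbols in annotations
    ]


def run_vep(input_vcf: str, output_vcf: str, forks: int = 30) -> None:
    # check=True raises CalledProcessError if VEP exits non-zero
    subprocess.run(build_vep_command(input_vcf, output_vcf, forks), check=True)
```

Passing `forks` explicitly, rather than deriving it from `os.cpu_count()`, avoids oversubscribing the pod: inside a container that call typically reports the node's cores, not the pod's CPU limit.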

BigQuery

gnomAD (Genome Aggregation Database) provides population allele frequencies. Google hosts gnomAD v2 and v3 as public BigQuery datasets.

For every pathogenic variant, we query gnomAD v3 first and fall back to v2 if the variant isn't found:

```sql
-- Query v3 first (GRCh38)
SELECT allele_freq, popmax, AF_afr, AF_amr, AF_asj, AF_eas, AF_fin, AF_nfe, AF_sas, AF_oth
FROM `bigquery-public-data.gnomad.v3_genomes`
WHERE chrom = '{chrom}' AND pos = {pos} AND ref = '{ref}' AND alt = '{alt}'

-- If not found, query v2 (GRCh37)
SELECT allele_freq, popmax, AF_afr, AF_amr, AF_eas, AF_fin, AF_nfe, AF_asj, AF_oth
FROM `bigquery-public-data.gnomad.v2_1_1_genomes`
WHERE chrom = '{chrom}' AND pos = {pos} AND ref = '{ref}' AND alt = '{alt}'
```
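The v3-then-v2 fallback can be wrapped in a small helper. This sketch makes two changes worth calling out: it passes coordinates as BigQuery query parameters instead of formatting them into the SQL string, and it injects the query runner so the fallback logic can be tested without a BigQuery connection. Table and column names are copied from the queries above and should be checked against the current public dataset schema. Because v2 uses GRCh37 coordinates, a GRCh38 position would need liftover before the fallback query; this sketch, like the original queries, sends the same coordinates to both tables. Function names are hypothetical.

```python
from typing import Any, Callable, Dict, List, Optional

# (version, table, columns) copied from the SQL above
GNOMAD_SOURCES = [
    ("v3", "bigquery-public-data.gnomad.v3_genomes",
     ["allele_freq", "popmax", "AF_afr", "AF_amr", "AF_asj", "AF_eas",
      "AF_fin", "AF_nfe", "AF_sas", "AF_oth"]),
    ("v2", "bigquery-public-data.gnomad.v2_1_1_genomes",
     ["allele_freq", "popmax", "AF_afr", "AF_amr", "AF_eas", "AF_fin",
      "AF_nfe", "AF_asj", "AF_oth"]),
]

RunQuery = Callable[[str, Dict[str, Any]], List[Dict[str, Any]]]


def lookup_gnomad(chrom: str, pos: int, ref: str, alt: str,
                  run_query: RunQuery) -> Optional[Dict[str, Any]]:
    """Try each gnomAD release in order; return the first hit tagged with its version."""
    params = {"chrom": chrom, "pos": pos, "ref": ref, "alt": alt}
    for version, table, columns in GNOMAD_SOURCES:
        # Table names cannot be query parameters, so they come only from the
        # fixed list above; variant values go through @parameters.
        sql = (f"SELECT {', '.join(columns)} FROM `{table}` "
               "WHERE chrom = @chrom AND pos = @pos AND ref = @ref AND alt = @alt")
        rows = run_query(sql, params)
        if rows:
            return {"gnomad_version": version, **rows[0]}
    return None


def make_bigquery_runner() -> RunQuery:
    """Adapter for the google-cloud-bigquery client, imported lazily."""
    from google.cloud import bigquery

    client = bigquery.Client()

    def run_query(sql: str, params: Dict[str, Any]) -> List[Dict[str, Any]]:
        job_config = bigquery.QueryJobConfig(query_parameters=[
            bigquery.ScalarQueryParameter(
                name, "INT64" if isinstance(value, int) else "STRING", value)
            for name, value in params.items()
        ])
        rows = client.query(sql, job_config=job_config).result()
        return [dict(row.items()) for row in rows]

    return run_query
```

In production the call would be `lookup_gnomad("2", 21006087, "C", "T", make_bigquery_runner())`; in tests, any function with the same signature can stand in for BigQuery.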

Cloud Storage and Firestore: State Management

The system uses a three-layer state management architecture, combining ADK’s native services with external storage:

Vertex AI Session Service (ADK Native – Conversational State)

ADK’s VertexAiSessionService manages conversational context—user preferences, analysis mode selection (clinical vs research), conversation history. This persists across pod restarts and enables the coordinator agent to route requests intelligently based on session state.

```python
from google.adk.sessions import VertexAiSessionService

# AGENT_ENGINE_ID identifies the Vertex AI Agent Engine resource backing the sessions
session_service = VertexAiSessionService(
    agent_engine_id=AGENT_ENGINE_ID
)
```

GCS Artifact Service (ADK Native – Heavy Data Storage)

ADK’s GcsArtifactService handles large genomic data artifacts—parsed variants, VEP annotations, final reports. These binary objects can be hundreds of megabytes and need persistent, versioned storage:

```python
import pickle

from google.adk.artifacts import GcsArtifactService
from google.genai import types

artifact_service = GcsArtifactService(bucket_name=BUCKET_NAME)

# Save VEP annotations; artifacts are stored as google.genai types.Part objects
await artifact_service.save_artifact(
    app_name=APP_NAME,
    user_id=user_id,
    session_id=session_id,
    filename=f"annotated_variants_{task_id}.pkl",
    artifact=types.Part.from_bytes(
        data=pickle.dumps(annotations),
        mime_type="application/octet-stream",
    ),
)

# Later queries load instantly; load_artifact returns a Part (or None)
part = await artifact_service.load_artifact(..., filename=...)
annotations = pickle.loads(part.inline_data.data)
```

Firestore (External – Async Job Status)

Firestore tracks background job status independently from ADK’s session management. This separation matters because Cloud Tasks workers need to query and update job progress without loading full session context:

```python
from datetime import datetime, timezone

from google.cloud import firestore

firestore_client = firestore.Client()

# Worker updates VEP status
firestore_client.collection('tasks').document(task_id).update({
    'status': 'vep_complete',
    'completed_at': datetime.now(timezone.utc),
})

# CompletionPipeline polls for completion
task_doc = firestore_client.collection('tasks').document(task_id).get()
if task_doc.exists and task_doc.get('status') == 'vep_complete':
    trigger_report_generation()  # application-specific hook
```

This architecture separates concerns: conversational state lives in ADK sessions, heavy data in ADK artifacts, and async orchestration state in Firestore. Each layer optimizes for its specific use case—lightweight session state, large binary persistence, and independent job tracking respectively.

Returning to the gnomAD lookups: the system uses a dual-reference strategy because some variants are present in gnomAD v3 (GRCh38) but not in v2 (GRCh37), or vice versa. It tries v3 first and falls back to v2, maximizing coverage regardless of reference genome mismatches. BigQuery's free tier (1 TB of queries per month) covered our usage.

Cloud Tasks

VEP annotation takes ~1 hour, and the main service can't block while it runs. Cloud Tasks manages these long-running background jobs:

```python
import json

from google.cloud import tasks_v2

tasks_client = tasks_v2.CloudTasksClient()
# PROJECT_ID, LOCATION and QUEUE_NAME are placeholders for the target queue
queue_path = tasks_client.queue_path(PROJECT_ID, LOCATION, QUEUE_NAME)

task = {
    'http_request': {
        'http_method': tasks_v2.HttpMethod.POST,
        'url': f'{worker_url}/worker/run-vep',
        'headers': {'Content-Type': 'application/json'},
        # The HTTP request body must be bytes
        'body': json.dumps({
            'task_id': task_id,
            'input_artifact': vcf_artifact_name,
        }).encode(),
    }
}

tasks_client.create_task(parent=queue_path, task=task)
```

The main service returns immediately: “VEP annotation started, check back in 60 minutes.” Cloud Tasks handles:

- Automatic retries on worker failures
- Rate limiting (prevents overwhelming workers)
- Status tracking via Firestore

When VEP completes, another Cloud Task triggers report generation. The coordinator agent monitors status and responds conversationally: “VEP finished! Generating clinical report now (~3 minutes)…”

Next Steps

While the current system integrates ClinVar for known pathogenic variants, the multi-agent architecture naturally extends to incorporate emerging tools. AlphaMissense, Google DeepMind’s tool for predicting missense variant pathogenicity, could provide insights for the millions of variants not yet clinically annotated. When users query novel variants, the system would seamlessly blend ClinVar’s validated annotations with AlphaMissense predictions, clearly distinguishing between established clinical knowledge and AI-driven insights.
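To illustrate how ClinVar and AlphaMissense results could be kept distinct, here is a hedged sketch of one possible blending rule: curated ClinVar assertions take precedence, and AlphaMissense scores are mapped to the classes published with the model (likely benign below 0.34, likely pathogenic above 0.564, ambiguous in between) and explicitly labeled as predictions. The function names and output fields are hypothetical; this extension is not part of the current system.

```python
from typing import Dict, Optional

# Published AlphaMissense class thresholds
AM_LIKELY_PATHOGENIC = 0.564
AM_LIKELY_BENIGN = 0.34


def alphamissense_class(score: float) -> str:
    if score > AM_LIKELY_PATHOGENIC:
        return "likely_pathogenic"
    if score < AM_LIKELY_BENIGN:
        return "likely_benign"
    return "ambiguous"


def blended_interpretation(clinvar_significance: Optional[str],
                           am_score: Optional[float]) -> Dict[str, str]:
    """Prefer curated ClinVar assertions; fall back to a clearly labeled prediction."""
    if clinvar_significance is not None:
        return {"classification": clinvar_significance,
                "source": "ClinVar",
                "evidence": "established clinical assertion"}
    if am_score is not None:
        return {"classification": alphamissense_class(am_score),
                "source": "AlphaMissense",
                "evidence": "AI prediction, not clinically validated"}
    return {"classification": "unknown", "source": "none",
            "evidence": "no annotation available"}
```

Keeping `source` and `evidence` as separate fields lets the QueryAgent phrase answers differently for curated and predicted classifications instead of merging them into one label.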

Looking further ahead, AlphaGenome initiatives could extend the system beyond point mutations to structural variants and regulatory elements. Because agents orchestrate tools rather than hardcode logic, adding new prediction services or annotation tools requires no architectural changes—just expanded capabilities for the existing agents to leverage.

Conclusion

The bottleneck in many scientific domains has shifted from data generation to data interpretation. In genomics, sequencing costs have plummeted and variant calling is increasingly automated, but exploratory analysis often requires scripting for each new question.

By rethinking analysis as conversation rather than scripting, we eliminate this barrier. Researchers can explore data at the speed of thought, generating insights in seconds that previously required minutes to hours of coding.

Most importantly, the patterns we discovered generalize beyond genomics. The combination of Google ADK for multi-agent orchestration and native state management, Gemini for natural language understanding, GKE for high-performance compute, BigQuery for knowledge integration, and Cloud Tasks for async orchestration provides a blueprint for transforming any compute-intensive scientific workflow into a conversational experience.

The future of scientific computing isn’t just faster—it’s conversational.
