At Back Market, we’re fighting climate change one electronic device at a time.

Our digital marketplace connects more than 1,800 sellers of professionally refurbished electronic devices — from smartphones and computers to home appliances and gaming consoles — with buyers looking for a good deal. We’re proud to be able to say that since 2014, we’ve averted the emission of 2 million tons of CO2 by helping more than 17 million customers purchase refurbished electronics that work just as well as new ones.

But our carbon-conscious business model poses an interesting IT challenge: Behind every website is an IT infrastructure of data centers that emit CO2. To address this, our IT team began to rethink its vendor-agnostic IT strategy. Being able to cherry-pick technologies from any provider was appealing initially, but the approach didn’t scale as the company grew.

Our tech stack was built on Amazon Web Services (AWS) and included Snowflake and Databricks. This created a fragmented data landscape where information was scattered across different platforms. Complex workflows wrote data to various destinations, but BigQuery was the primary one, used by all our teams. This meant we were maintaining expensive, performance-draining pipelines for destinations that provided no real value. As a result of juggling disparate technologies, our small engineering team was amassing more and more technical debt.

We had recently migrated all our data to BigQuery, which functions as our company’s enterprise data platform. So when we made the decision to migrate to a managed services model, Google Cloud was the natural choice. Our B Corp commitment also made Google Cloud’s carbon footprint transparency and renewable energy options particularly appealing.

Our long-term relationship with Google Cloud also played a role. This time, we were not only migrating our data platform, we were moving the company’s entire tech stack. The trust we’d established over the years made us comfortable with our choice to unify our data and IT operations on Google Cloud.

A double-run strategy for a super-smooth data migration

Working closely with our team at Google, we set out to build a proof of concept in just two weeks, choosing certain data center locations specifically because they run on renewable energy sources. The effort brought together a dedicated team of Google experts and Back Market specialists across infrastructure, database administration, data engineering, security, and backend development. The ambitious goal was to create a clone of our pre-production environment running entirely on Google Cloud within that window, and we achieved it.

The core of our migration strategy was to consolidate our diverse data storage systems by making BigQuery the single place for all our historical data, raw data, and data models. This would eliminate the complexity of managing Databricks and Snowflake as part of our previous AWS-based tech stack.

Together we decided on a live double-run migration approach. This involved keeping production running on Databricks while simultaneously writing data to clone tables in BigQuery. We continuously compared the resulting data imports between the old Databricks flows and the new BigQuery setup. Once we were confident that the outputs were identical, we switched off the old pipelines.

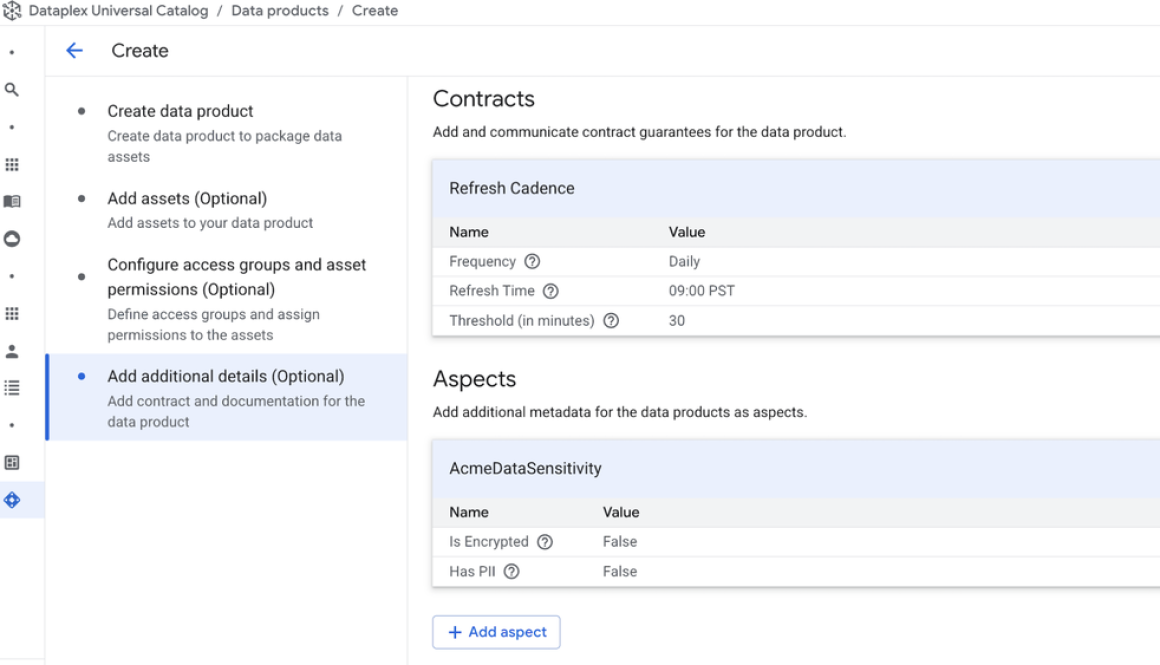
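
The comparison step of a double run can be sketched in a few lines. This is a minimal illustration rather than our production tooling: it assumes rows from both systems have already been fetched as Python dictionaries, and the names `row_fingerprint` and `compare_outputs` are hypothetical. Each row is hashed independently of column order, and the two outputs are compared as multisets so that duplicates and missing rows both show up.

```python
import hashlib
import json
from collections import Counter


def row_fingerprint(row: dict) -> str:
    """Hash a row so that column order does not affect the result."""
    canonical = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def compare_outputs(legacy_rows, clone_rows) -> dict:
    """Compare two table outputs as multisets of row fingerprints.

    legacy_rows: rows produced by the old pipeline (assumed input shape)
    clone_rows:  rows produced by the new pipeline into the clone table
    """
    legacy = Counter(row_fingerprint(r) for r in legacy_rows)
    clone = Counter(row_fingerprint(r) for r in clone_rows)
    return {
        # Rows the old pipeline produced that the new one did not.
        "missing_in_clone": sum((legacy - clone).values()),
        # Rows the new pipeline produced that the old one did not.
        "unexpected_in_clone": sum((clone - legacy).values()),
        "identical": legacy == clone,
    }
```

In a setup like this, the old pipeline would only be switched off once `identical` stays true across several consecutive runs.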

BigQuery now houses all data relating to our business operations: sales, pricing, ecommerce, ordering and delivery, supply chains, and products. In addition, marketers can use third-party data collected from marketing channels and automation platforms to assess the performance of marketing campaigns. For analytics and data engineering teams, the data in BigQuery helps build out and track KPIs — including, importantly, the overall amount of CO2 emitted by IT operations.

Because the user interface for accessing BigQuery data remained the same, the switchover didn’t disrupt the workflow of business-side users. The migration could not have gone more smoothly: We got the most critical components of our stack up and running exclusively on Google Cloud in just six months.

Accelerated dataflows, improved productivity, and lower costs

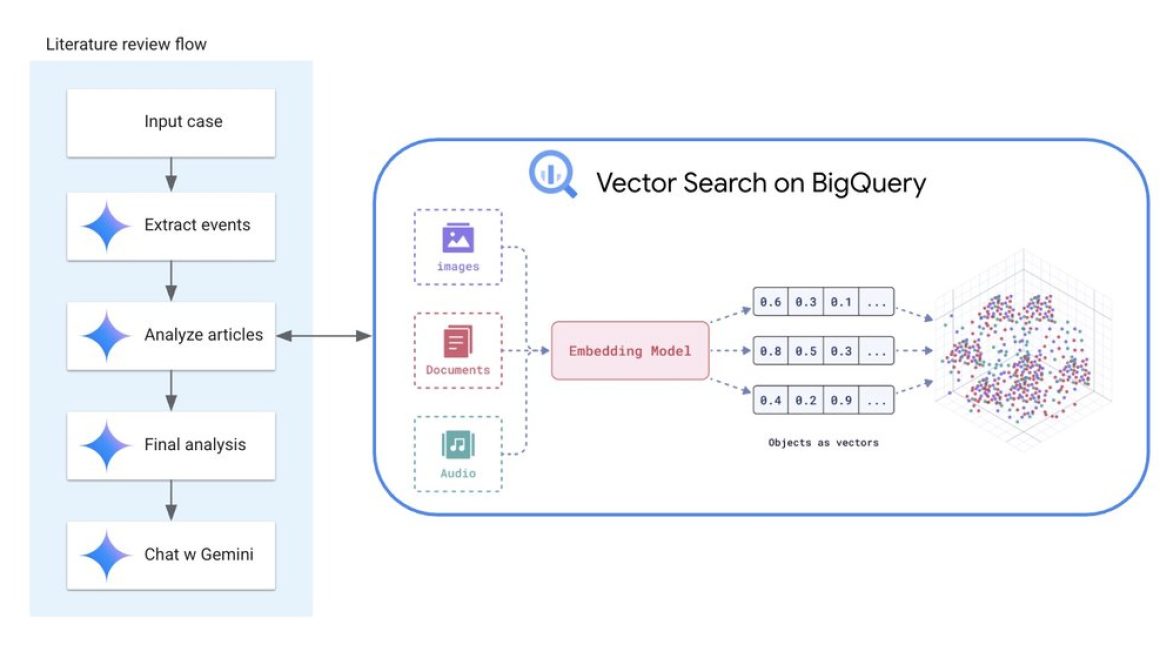

Among the most critical components of our migration was transitioning our change data capture (CDC) pipeline from AWS Database Migration Service to Google Cloud Datastream while maintaining our serverless architecture approach.

However, since Datastream produces numerous small files per minute, it can become costly to merge them one by one into BigQuery. To address this, we implemented hourly batching for cost optimization: Instead of processing each small file individually, we batch these files hourly before loading them into BigQuery.
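
The hourly batching idea can be illustrated with a small sketch. It assumes each change file is described by a path and a creation timestamp; the function names and the example file names are hypothetical, and the actual load into BigQuery is left out. Files are grouped by the hour in which they were written, and only hours that have fully elapsed are considered ready, so a batch is never loaded while files are still arriving for it.

```python
from collections import defaultdict
from datetime import datetime, timezone


def group_files_by_hour(files):
    """Group (path, created_at) pairs into batches keyed by the start of the hour."""
    batches = defaultdict(list)
    for path, created_at in files:
        hour_start = created_at.replace(minute=0, second=0, microsecond=0)
        batches[hour_start].append(path)
    # Sort hours and paths so the result is deterministic.
    return {hour: sorted(paths) for hour, paths in sorted(batches.items())}


def closed_batches(batches, now):
    """Keep only batches whose hour has fully elapsed at time `now`."""
    current_hour = now.replace(minute=0, second=0, microsecond=0)
    return {hour: paths for hour, paths in batches.items() if hour < current_hour}


if __name__ == "__main__":
    # Hypothetical file names and timestamps, for illustration only.
    example = [
        ("orders/0001.avro", datetime(2024, 1, 1, 10, 5, tzinfo=timezone.utc)),
        ("orders/0002.avro", datetime(2024, 1, 1, 10, 59, tzinfo=timezone.utc)),
        ("orders/0003.avro", datetime(2024, 1, 1, 11, 1, tzinfo=timezone.utc)),
    ]
    now = datetime(2024, 1, 1, 11, 30, tzinfo=timezone.utc)
    for hour, paths in closed_batches(group_files_by_hour(example), now).items():
        print(hour.isoformat(), paths)
```

Each ready batch then becomes one load and merge job instead of one job per file, which is where the cost saving comes from.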

The final data flow architecture works seamlessly. Transactional data flows from our AlloyDB databases through a proxy to Datastream, which writes the data to Cloud Storage. From there, the batching and transformation process is orchestrated using Cloud Composer via Kubernetes pods, which triggers hourly transformations of the data. After being transformed, the data is finally loaded into BigQuery.

Our decision to move to Google’s Data Cloud has had a measurable impact on performance, costs, and productivity. Simplifying the setup with Datastream alone lowered CDC costs by 90%, while Google Cloud’s streamlined, integrated data cloud has cut our data processing times in half. Instead of paying for machines that need to start up and then sit idle, we run queries directly, which eliminates idle time and the overhead of maintaining virtual machines.

Having fewer components in a unified ecosystem also translates into better observability of the entire tech stack, improved data governance, and easier-to-manage permissioning and access privileges. And a managed service with fewer components means fewer incidents, less maintenance, and diminished complexity. Onboarding is faster, knowledge-sharing is less complicated, and our team can focus on higher-value tasks like developing new products and features.

Today we rely on Google Cloud instead of building and maintaining infrastructure from scratch. Google Cloud has enabled our entire team to shift its mindset from focusing on internal technology to adding value to our own products and business.

Ready to get started?

Google Cloud offers a comprehensive set of AI-powered BigQuery Migration Services. Get started with a free assessment today.
