GCP – Announcing General Availability of Duet AI for Developers and Duet AI in Security Operations

Today, we’re announcing that Duet AI for Developers is now Generally Available (GA), along with new partner enhancements that will bring even greater capabilities to customers. All of our Duet AI services will incorporate Gemini, our newest and most capable model, over the next few weeks.

Google Cloud’s strategy is to meet developers where they are by embracing third-party tools that help them build applications quickly, accelerate time to value, and remove friction throughout the software development and delivery process. We are committed to extending these capabilities through our partners, and thanks to Cloud Code, you can already use Duet AI with many popular IDEs, such as VS Code and JetBrains IDEs like IntelliJ, PyCharm, GoLand, and WebStorm.

Let’s explore how Duet AI for Developers will empower organizations through our growing, open ecosystem.

Duet AI for Developers is already trained on an extensive collection of publicly available information and code from open-source and third-party platforms. Today, we’re announcing our largest set of partner enhancements with Duet AI yet. More than 25 companies will add support for developers on Google Cloud, enabling us to train and optimize Duet AI for their platforms, so that developers can be more productive and agile. These new capabilities will begin launching in Q1 of 2024.

Our partners will support developers leveraging Google Cloud in two ways:

First, our code-assist partners will help Duet AI provide technology-aware coding assistance for popular products in the developer ecosystem. For example, a developer writing code using MongoDB will be able to ask Duet AI for Developers, “Filter customer orders over $50 in the past 30 days by geography, and then calculate total revenue by location,” and Duet AI for Developers will then use information from MongoDB’s products to suggest code to complete the task, so developers can build even faster.

Second, our knowledge-base partners will allow Duet AI to provide easy access to documentation and knowledge sources for popular developer products. With knowledge-base partners, Duet AI can easily retrieve partner-specific information, like how to best resolve common production issues and vulnerabilities, or how to automate software tests for quality assurance. This means developers can stay focused on coding, and easily retrieve information from documentation, knowledge base articles, security policies, and more.
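To make the MongoDB request quoted above more concrete, it could map to an aggregation pipeline along the lines of the sketch below. This is an illustration only, not Duet AI output: the field names (`total`, `created_at`, `shipping.country`) and the helper `build_revenue_pipeline` are hypothetical, and the pipeline is built as plain Python dictionaries so it can be inspected without a database connection.

```python
from datetime import datetime, timedelta, timezone


def build_revenue_pipeline(now, min_total=50, days=30):
    """Build a MongoDB aggregation pipeline as plain Python data.

    Assumed (hypothetical) order schema:
      total            -- order value in dollars
      created_at       -- order timestamp
      shipping.country -- geography used for grouping
    """
    cutoff = now - timedelta(days=days)
    return [
        # Keep orders above the threshold placed within the time window.
        {"$match": {"total": {"$gt": min_total}, "created_at": {"$gte": cutoff}}},
        # Sum revenue and count orders per location.
        {"$group": {
            "_id": "$shipping.country",
            "total_revenue": {"$sum": "$total"},
            "order_count": {"$sum": 1},
        }},
        # Highest-revenue locations first.
        {"$sort": {"total_revenue": -1}},
    ]


pipeline = build_revenue_pipeline(datetime(2023, 12, 13, tzinfo=timezone.utc))
```

With PyMongo, a pipeline like this would typically be passed to `collection.aggregate(pipeline)`; the schema would need to be adjusted to match the actual collection.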

Read on to learn more about how our partners are supporting developers with Duet AI.

Partners adding support for coding with Duet AI for Developers are:

- Confluent, the widely adopted cloud-native data streaming platform, will help enhance Duet AI by providing knowledge base articles containing best practices on Confluent products and a rich set of coding examples. Through conversational interactions, Duet AI users will be able to receive guidance on topics like setting up auto-balancing for clusters and connecting apps to data systems, which can streamline their development processes.
- Elastic, a leading search analytics platform, will provide Duet AI with information about its widely-adopted vector search engine and other documentation, including code samples. This will enable developers to get answers about how to best query, test, and sample data in Elastic, without leaving their development workflows.
- Grafana Labs, which offers an open-source observability platform, will provide Duet AI with product documentation that helps developers more quickly build Grafana dashboards to visualize, query, and help understand their data. Users will also be able to ask questions about how to leverage Grafana Labs’ observability tools to troubleshoot issues and increase application reliability.
- HashiCorp, a leading provider of cloud infrastructure automation software, will help train Duet AI with product information on the popular HashiCorp Terraform to provide assistance writing configurations and automating infrastructure. Users will be able to ask Duet AI for source documents, educational materials, and configuration samples written in HashiCorp Configuration Language (HCL), allowing users to be more productive and efficient.
- MongoDB, a leading developer data platform company, will enhance Duet AI by providing product documentation, best practices about building applications, and code samples to help jumpstart the development process. Developers will be able to quickly retrieve information about common architecture patterns and solutions, generate code, and efficiently troubleshoot issues through Duet AI’s intuitive chat interface.
- Neo4j, a leading graph database and analytics provider, will enhance Duet AI with information about its products and how to best use its Cypher query language, so that developers can receive better guidance and coding assistance for Neo4j’s products. This assistance can help developers uncover hidden relationships and patterns in their data, and learn how to create knowledge graphs for greater LLM accuracy.
- Pinecone, a leading vector database providing long-term memory for AI, will supply product documents, use cases, learning materials, and code samples to Duet AI. This memory will enable developers to easily get answers to questions about how to optimally build and scale their applications using Pinecone.
- Redis, which delivers an enterprise-grade data platform, will add its knowledge base, product documentation, and code samples to Duet AI to improve how developers manage and scale their databases. Through natural language chat, developers will be able to quickly understand best practices and generate code, increasing their proficiency in building applications with Redis.
- SingleStore, a cloud-first database system, will enhance Duet AI by providing product documentation, reference architectures about SingleStoreDB Cloud, and code samples to help developers more effectively build and manage data-intensive applications. Users will be able to ask Duet AI questions about creating applications, generating code, and optimizing use of SingleStore to enhance their data applications and workloads.

Partners adding new knowledge base information for Duet AI for Developers are:

- Atlassian, a leading provider of team collaboration and productivity software, will help train Duet AI by providing its developer documentation, UI library, and community articles to assist DevOps, IT teams, and business users in how they collaborate and deliver experiences with Atlassian. Users will be able to ask questions that help them more efficiently develop, deploy, and manage applications with the Atlassian Open DevOps platform.
- Cohesity, an AI-powered data security and management provider, will support training Duet AI by supplying knowledge base resources, product documentation, and best practice content for its products. Customers will be able to use Duet AI to ask questions, like how to set up Cohesity’s DataProtect product in their environment and how to maintain and operate their disaster recovery solutions.
- CrowdStrike, a global cybersecurity leader, is committed to supporting Duet AI by providing the resources necessary to help all levels of security practitioners become faster and more efficient users of the AI-native CrowdStrike Falcon platform. Duet AI will deliver useful information on how to best deploy and manage CrowdStrike so users can stay focused on protecting their organizations.
- Datadog, an observability and security platform for cloud applications, will help train Duet AI on how to use its products and best practices to help users quickly access the information needed to effectively maintain full monitoring coverage with Datadog. Duet AI will enable developers to use natural language to ask questions, like how to resolve common production issues and vulnerabilities, and to get guidance on how to secure their applications and infrastructure.
- Dynatrace, a leader in AI-driven observability and application security, will provide Duet AI with its extensive knowledge base and product documentation for training. Developers will be able to ask Duet AI questions about the Dynatrace platform, like how to implement observability and security best practices, and how to set up auto-remediation for production issues.
- Egnyte, a cloud-based content security collaboration platform, will provide Duet AI with information to assist developers in generating deployment scripts faster and monitoring complex deployment processes more effectively. Users will be able to quickly get answers that can support faster and cleaner software deployments.
- Exabeam, a global cybersecurity leader that delivers AI-driven security operations, will support training Duet AI by contributing key information about how to use its cloud-scale security log management and SIEM, powerful behavioral analytics, and automated threat detection, investigation, and response (TDIR) products. This will enable security practitioners to ask Duet AI questions that guide them on how to optimally utilize the Exabeam platform to help them detect threats and defend against cyberattacks.
- Jasper, an enterprise-grade AI copilot for marketing teams, will enhance Duet AI by contributing its API documentation, knowledge base resources, best practices, and FAQs. Customers will be able to use natural language to rapidly get advice on how to use Jasper to create content campaigns and integrate Jasper’s APIs into their code bases.
- JetBrains, a leading provider of development tools, will support training Duet AI on its public knowledge base and product documentation, encompassing IntelliJ IDEA, Kotlin, Qodana, and other plugins and solutions. This will enable developers to quickly get information to support writing high quality code faster, debugging issues, and automating tests for quality assurance.
- Labelbox, a leading data-centric AI platform for building intelligent applications, will help train Duet AI by providing its latest product documentation, knowledge base articles, and API call examples for the Labelbox SDK. Developers will be able to use Duet AI’s natural language interface to quickly learn how to start using Labelbox products, and discover best practices on how to create applications with advanced LLMs that can unlock more value from their unstructured data.
- LangChain helps developers build context-aware reasoning applications through its popular developer framework. With Duet AI, developers will be able to use natural language to request information and best practices about using LangChain, like requesting code samples that enable them to build applications more efficiently.
- NetApp, the intelligent data infrastructure company, will support Duet AI by providing product documentation, solution guides, and API code samples. Enterprises will be able to use Duet AI’s natural language interface to learn how to configure and optimize their NetApp enterprise storage in Google Cloud and on-premises.
- Okta, a leading identity and access management provider, will support Duet AI with information from its knowledge base, sample code, and best practices for utilizing its tools. Duet AI users will be able to retrieve guidance on how to optimally use Okta’s products directly within their IDE, which can accelerate application development and improve time-to-value from software deployments.
- Snorkel, a data-centric AI platform, will support Duet AI with product documentation and best practices to help developers get information to more effectively build production-quality AI and optimize large language models. Duet AI will be able to provide best-practice guidance on how to address common challenges to accelerate the AI development process in Snorkel.
- Snyk, a leading developer security platform, will help train Duet AI by providing public information on its security scanning products and vulnerability database to help improve how developers find and fix common vulnerabilities. Users will be able to request best practices on how to bring Snyk into existing development environments and workflows, which can help them set up and operationalize Snyk’s tools faster.
- Symantec will support Duet AI with product documentation and knowledge base articles for its leading hybrid cybersecurity solutions. This will enable Duet AI to provide users with responses to questions about how to deploy and manage Symantec offerings, including best practices and more.
- Sysdig, a security monitoring and protection platform, will help train Duet AI by supplying its extensive product knowledge base, FAQs, and best practices. With this support, security practitioners will have specialized information that enhances their understanding of Sysdig’s tools so they can better safeguard their infrastructure and organizations.
- Thales, a worldwide leader in data protection, will support Duet AI by providing technical documentation, knowledge base articles, and user guides to give developers quick access to information about its technology. Duet AI will provide developers, IT administrators, and security teams with insights that help increase productivity using Thales’ key management, encryption, and confidential computing tools.
- Weights & Biases, an AI developer platform, will provide its knowledge database, code examples, and best practices to improve how developers build and manage their end-to-end workflows. Customers will be able to leverage Duet AI to learn how to use Weights & Biases’ products and implement API calls into their ML projects.

These enhancements will be available at no additional cost to Duet AI for Developers users as they launch throughout 2024. And, starting today through January 12, 2024, customers can use Duet AI for Developers at no cost. Simply go to the Duet AI for Developers web page and follow the instructions.

Customers can also work with our ecosystem of professional services partners, who play a critical role in helping businesses implement gen AI, including Accenture, Capgemini, Cognizant, Deloitte, HCLTech, Infosys, Kyndryl, ManTech, PwC, Quantiphi, Slalom, TCS, and Wipro. Collectively, these partners have committed to train more than 150,000 experts to help bring Google Cloud gen AI, including Duet AI for Developers, to customers.

To learn more about Duet AI, visit the product page at cloud.google.com/duet-ai.

Throughout 2023, we have introduced incredible new AI innovations to our customers and the broader developer and user community, including: AI Hypercomputer to train and serve generative AI models; Generative AI support in Vertex AI, our Enterprise AI platform; Duet AI in Google Workspace; and Duet AI for Google Cloud. We have shipped a number of new capabilities in our AI-optimized infrastructure with notable advances in GPUs, TPUs, ML software and compilers, workload management and others; many innovations in Vertex AI; and an entire new suite of capabilities with Duet AI agents in Google Workspace and Google Cloud Platform.

Already, we have seen tremendous developer and user growth. For example, between Q2 and Q3 this year, the number of active gen AI projects on Vertex AI grew by more than 7X. Leading brands like Forbes, Formula E, and Spotify are using Vertex AI to build their own agents, and Anthropic, AI21 Labs, and Cohere are training their models. The breadth and creativity of applications that customers are developing is breathtaking. Fox Sports is creating more engaging content. Priceline is building a digital travel concierge. Six Flags is building a digital concierge. And Estée Lauder is building a digital brand manager.

Today, we are introducing a number of important new capabilities across our AI stack in support of Gemini, our most capable and general model yet. It was built from the ground up to be multimodal, which means it can generalize and seamlessly understand, operate across, and combine different types of information, including text, code, audio, image, and video, in the same way humans see, hear, read, listen, and talk about many different types of information simultaneously.

Starting today, Gemini is part of a vertically integrated and vertically optimized AI technology stack that consists of several important pieces — all of which have been engineered to work together:

- Super-scalable AI infrastructure: Google Cloud offers leading AI-optimized infrastructure for companies, the same used by Google, to train and serve models. We offer this infrastructure to you in our cloud regions as a service, to run in your data centers with Google Distributed Cloud, and on the edge. Our entire AI infrastructure stack was built with systems-level codesign to boost efficiency and productivity across AI training, tuning, and serving.
- World-class models: We continue to deliver a range of AI models with different skills. In late 2022, we launched our Pathways Language Model (PaLM), quickly followed by PaLM 2, and we are now delivering Gemini Pro. We have also introduced domain-specific models like Med-PaLM and Sec-PaLM.
- Vertex AI, our leading enterprise AI platform for developers: To help developers build agents and integrate gen AI into their applications, we have rapidly enhanced Vertex AI, our AI development platform. Vertex AI helps customers discover, customize, augment, deploy, and manage agents built using the Gemini API, as well as a curated list of more than 130 open-source and third-party AI models that meet Google’s strict enterprise safety and quality standards. Vertex AI leverages Google Cloud’s built-in data governance and privacy controls, and also provides tooling to help developers use models responsibly and safely. Vertex AI also provides Search and Conversation, tools that use a low code approach to developing sophisticated search, and conversational agents that can work across many channels.
- Duet AI, assistive AI agents for Workspace and Google Cloud: Duet AI is our AI-powered collaborator that provides users with assistance when they use Google Workspace and Google Cloud. Duet AI in Google Workspace, for example, helps users write, create images, analyze spreadsheets, draft and summarize emails and chat messages, and summarize meetings. Duet AI in Google Cloud, for example, helps users code, deploy, scale, and monitor applications, as well as identify and accelerate resolution of cybersecurity threats.

We are excited to make announcements across each of these areas:

As gen AI models have grown in size and complexity, so have their training, tuning, and inference requirements. As a result, the demand for high-performance, highly-scalable, and cost-efficient AI infrastructure for training and serving models is increasing exponentially.

This is true not just for our customers, but for Google as well. TPUs have long been the basis for training and serving AI-powered products like YouTube, Gmail, Google Maps, Google Play, and Android. In fact, Gemini was trained on, and is served using, TPUs.

Last week, we announced Cloud TPU v5p, our most powerful, scalable, and flexible AI accelerator to date. TPU v5p is 4X more scalable than TPU v4 in terms of total available FLOPs per pod. Earlier this year, we announced the general availability of Cloud TPU v5e. With 2.7X inference-performance-per-dollar improvements in an industry benchmark over the previous generation TPU v4, it is our most cost-efficient TPU to date.
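As a rough reading of the 2.7X figure, the sketch below converts a performance-per-dollar gain into the relative cost of running the same amount of inference work. It assumes, purely for illustration, that a workload behaves like the benchmark cited above; real savings depend on the model and serving setup, and the function name is hypothetical.

```python
def relative_cost_for_same_work(perf_per_dollar_gain):
    """Cost of a fixed amount of work relative to the previous generation."""
    return 1.0 / perf_per_dollar_gain


gain = 2.7  # inference performance per dollar, TPU v5e vs. TPU v4 (from the text)
relative_cost = relative_cost_for_same_work(gain)
savings_pct = (1 - relative_cost) * 100

print(f"Same work costs about {relative_cost:.2f}x as much "
      f"(roughly {savings_pct:.0f}% less).")
```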

We also announced our AI Hypercomputer, a groundbreaking supercomputer architecture that employs an integrated system of performance-optimized hardware, open software, leading ML frameworks, and flexible consumption models. AI Hypercomputer has a wide range of accelerator options, including multiple classes of 5th generation TPUs and NVIDIA GPUs.

Gemini is also our most flexible model yet — able to efficiently run on everything from data centers to mobile devices. Gemini Ultra is our largest and most capable model for highly complex tasks, while Gemini Pro is our best model for scaling across a wide range of tasks, and Gemini Nano is our most efficient model for on-device tasks. Its state-of-the-art capabilities will significantly enhance the way developers and enterprise customers build and scale with AI.

Today, we also introduced an upgraded version of our image model, Imagen 2, our most advanced text-to-image technology. This latest version delivers improved photorealism, text rendering, and logo generation capabilities so you can easily create images with text overlays and generate logos.

In addition, building on our efforts around domain-specific models with Med-PaLM, we are excited to announce MedLM, our family of foundation models fine-tuned for healthcare industry use cases. MedLM is available to allowlist customers in Vertex AI, bringing customers the power of Google’s foundation models tuned with medical expertise.

Today, we are announcing that Gemini Pro is now available in preview on Vertex AI. It empowers developers to build new and differentiated agents that can process information across text, code, images, and video at this time. Vertex AI helps you deploy and manage agents to production, automatically evaluate the quality and trustworthiness of agent responses, as well as monitor and manage them.

Vertex AI gives you comprehensive support for Gemini, with the ability to discover, customize, augment, manage, and deploy agents built against the Gemini API, including:

- Multiple ways to customize agents built with Gemini using your own data, including prompt engineering, adapter based fine tuning such as Low-Rank Adaptation (LoRA), reinforcement learning from human feedback (RLHF), and distillation.
- Augmentation tools that enable agents to use embeddings to retrieve, understand, and act on real-world information with configurable retrieval augmented generation (RAG) building blocks. Vertex AI also offers extensions to take actions on behalf of users in third-party applications.
- Grounding to improve quality of responses from Gemini and other AI models by comparing results against high-quality web and enterprise data sources.
- A broad set of controls that help you to be safe and responsible when using gen AI models, including Gemini.
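The embedding-based retrieval step behind retrieval augmented generation can be sketched in a few lines. The example below is a toy illustration of the general technique, not Vertex AI's implementation: the three-dimensional "embeddings" are hand-written stand-ins for what an embedding model would produce, and the helper names (`retrieve`, `build_prompt`) are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_vec, corpus, k=2):
    """Return the k passages whose embeddings are closest to the query."""
    ranked = sorted(
        corpus,
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


def build_prompt(question, passages):
    """Place the retrieved passages in front of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Toy embeddings; a real system would obtain these from an embedding model.
corpus = [
    ("Refunds are issued within 5 business days.", [0.9, 0.1, 0.0]),
    ("Our office is closed on public holidays.", [0.1, 0.9, 0.0]),
    ("Refund requests need an order number.", [0.8, 0.2, 0.1]),
]
query_vec = [1.0, 0.0, 0.0]  # stands in for the embedded question

passages = retrieve(query_vec, corpus, k=2)
prompt = build_prompt("How long do refunds take?", passages)
```

The resulting `prompt` would then be sent to a model, which answers from the retrieved passages rather than from its training data alone.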

In addition to Gemini support in Vertex AI, today we’re also announcing:

- Automatic Side by Side (Auto SxS), an automated tool to compare models. Auto SxS is faster and more cost-efficient than manual model evaluation, as well as customizable across various task specifications to handle new generative AI use cases.
- The addition of Mistral, ImageBind, and DITO into Vertex AI’s Model Garden, continuing our commitment to an open model ecosystem.
- We will soon be bringing Gemini Pro into Vertex AI Search and Conversation to help you create engaging, production-grade applications quickly.
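The text does not describe how Auto SxS scores models, so the sketch below only illustrates the general idea of side-by-side evaluation: each prompt gets a preference between two models' responses, and the preferences are summarized as win rates. The judgments and the function name are hypothetical.

```python
from collections import Counter


def summarize_side_by_side(judgments):
    """Turn per-prompt preferences ("A", "B", or "tie") into rates."""
    if not judgments:
        raise ValueError("at least one judgment is required")
    counts = Counter(judgments)
    total = len(judgments)
    return {label: counts[label] / total for label in ("A", "B", "tie")}


# Hypothetical preferences, one per evaluation prompt.
judgments = ["A", "B", "A", "tie", "A", "B", "A", "A"]
summary = summarize_side_by_side(judgments)
```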

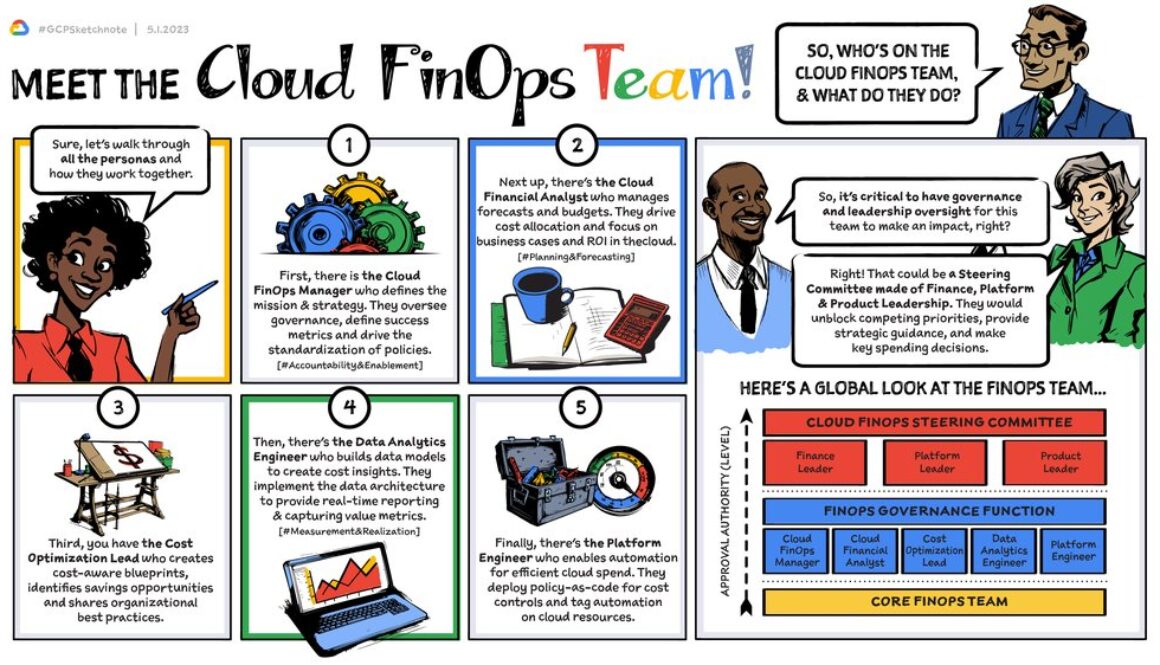

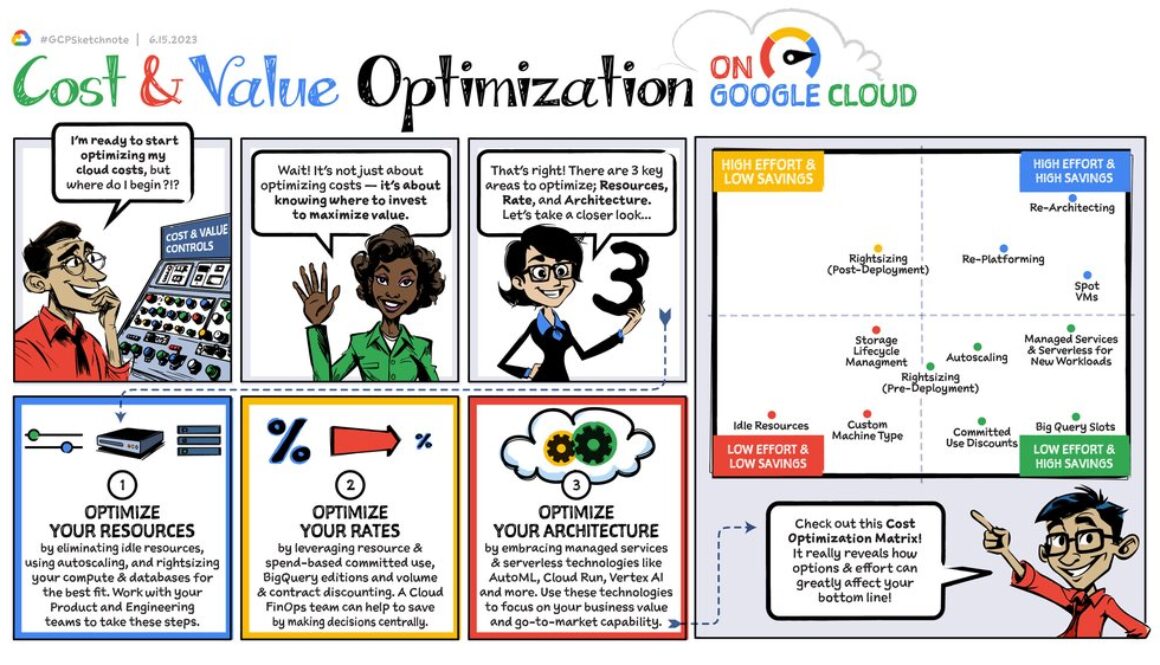

With Duet AI, we are committed to helping our customers boost productivity, gain competitive advantages, and ultimately improve their bottom line. Today, Duet AI for Developers and Duet AI in Security Operations are generally available, and we will be incorporating Gemini across our Duet AI portfolio over the next few weeks.

Duet AI for Developers helps users code faster with AI code completion, code generation, and chat in multiple integrated development environments (IDEs). It streamlines repetitive developer tasks and processes with shortcuts for common tasks, including unit test generation and code explanation; speeds troubleshooting and issue remediation; and helps reduce context-switching. Duet AI also expedites skills-based learning by giving users the ability to ask questions using natural language chat.

Today, we’re also announcing that more than 25 code-assist and knowledge-base partners will contribute datasets specific to their platforms, so users of Duet AI for Developers can receive AI assistance based on partners’ coding and data models, product documentation, best practices, and other useful enterprise resources.

Duet AI in Security Operations, Google Cloud’s unified security operations platform, can enable defenders to more effectively protect their organizations from cyberattacks. Security teams can elevate their skills and help accelerate threat detection, investigation, and response using the power of gen AI. With Duet AI in Security Operations, we are offering AI assistance first in Chronicle, where users can search vast amounts of data in seconds with custom queries generated from natural language, reduce time-consuming manual reviews, quickly surface critical context by leveraging automatic summaries of case data and alerts, and improve response time using recommendations for next steps to support incident remediation.

Google owns the entire Duet AI technology stack, from the infrastructure and foundation models, to the top-level integration and user experience. We’re proud that our engineers and researchers uniquely collaborate to bring our latest AI technology breakthroughs to customers with a consistent, unified product experience. Early next year, we plan to expand Duet AI across our portfolio, including Duet AI in BigQuery, Looker, our database products, Apigee, and more.

In addition to these new capabilities across our vertically integrated AI technology stack, we have competitive pricing that makes Gemini accessible to more organizations, and are expanding our indemnification to help protect you from copyright concerns.

The release of Gemini, combined with our portfolio of super-scalable AI infrastructure, Vertex AI, and Duet AI offers a comprehensive and powerful cloud for developers and customers. With these innovations, Google Cloud is propelling the next generation of AI-powered agents across every industry, empowering organizations to build, use, and successfully adopt gen AI to fuel their digital transformations.

Improving healthcare and medicine is among the most promising use cases for artificial intelligence, and we’ve made big strides since our initial research into medically-tuned large language models (LLMs) with Med-PaLM in 2022 and 2023. We’ve been testing Med-PaLM 2 with healthcare organizations, and our Google Research team continues to make significant progress, including exploring multimodal capabilities.

Now we’re introducing MedLM — a family of foundation models fine-tuned for healthcare industry use cases. MedLM is now available to Google Cloud customers in the United States through an allowlisted general availability in the Vertex AI platform. MedLM is also currently available in preview in certain other markets worldwide.

Currently, there are two models under MedLM, built on Med-PaLM 2, to offer flexibility to healthcare organizations and their different needs. Healthcare organizations are exploring the use of AI for a range of applications, from basic tasks to complex workflows. Through piloting our tools with different organizations, we’ve learned the most effective model for a given task varies depending on the use case. For example, summarizing conversations might be best handled by one model, and searching through medications might be better handled by another. The first MedLM model is larger, designed for complex tasks. The second is a medium model, able to be fine-tuned and best for scaling across tasks. The development of these models has been informed by specific healthcare and life sciences customer needs, such as answering a healthcare provider’s medical questions and drafting summaries. In the coming months, we’re planning to bring Gemini-based models into the MedLM suite to offer even more capabilities.

Many of the companies we’ve been testing MedLM with are now moving it into production in their solutions, or broadening their testing. Here are some examples.

For the past several months, HCA Healthcare has been piloting a solution to help physicians with their medical notes in four emergency department hospital sites. Physicians use an Augmedix app on a hands-free device to create accurate and timely medical notes from clinician-patient conversations in accordance with the Health Insurance Portability and Accountability Act (HIPAA). Augmedix’s platform uses natural language processing, along with Google Cloud’s MedLM on Vertex AI, to instantly convert data into drafts of medical notes, which physicians then review and finalize before they’re transferred in real time to the hospital’s electronic health record (EHR).

With MedLM, the automated performance of Augmedix’s ambient documentation products will increase, and the quality and summarization will continue to improve over time. These products can save time, reduce burnout, increase clinician efficiency, and improve overall patient care. The addition of MedLM also makes it easier to affordably scale Augmedix’s products across health systems and support an expanding list of medical subspecialties such as primary care, emergency department, oncology, and orthopedics.

Drug research and development is slow, inefficient, and extremely expensive. BenchSci is bringing AI to scientific discovery to help expedite drug development, and it is integrating MedLM into its ASCEND platform to further improve the speed and quality of pre-clinical research and development.

BenchSci’s ASCEND platform is an AI-powered evidence engine that produces a high-fidelity knowledge graph of more than 100 million experiments, decoded from hundreds of different data sources. This allows scientists to understand complex connections in biological research, presenting a thorough grasp of empirically validated and ontologically derived relationships, including biomarkers, detailed biological pathways, and interconnections among diseases. The integration of MedLM works to further enhance the accuracy, precision and reliability, and together with ASCEND’s proprietary technology and data sets, it aims to significantly boost the identification, classification, ranking, and discovery of novel biomarkers — clearing the path to successful scientific discovery.

To spur enterprise adoption and value, we’ve also teamed up with Accenture to help healthcare organizations use generative AI to improve patient access, experience, and outcomes. Accenture brings its deep healthcare industry experience, solutions, and the data and AI skills needed to help healthcare organizations make the most of Google’s technology with human ingenuity.

Aimed at healthcare process improvement, Accenture’s Solutions.AI for Processing for Health interprets structured and unstructured data from multiple sources to automate manual processes that were previously time-consuming and prone to error, like reading clinical documents, enrollment, claims processing, and more. This helps clinicians make faster, more informed decisions and frees up more time and resources for patient care. Using Google Cloud’s Claims Acceleration Suite, MedLM, and Accenture’s Solutions.AI for Processing, new insights can be uncovered, ultimately leading to better patient outcomes.

Healthcare organizations are often bogged down with administrative tasks and processes related to documentation, care navigation, and member engagement. Deloitte and Google Cloud are working together with our mutual customers to explore how generative AI can help improve the member experience and reduce friction in finding care through an interactive chatbot, which helps health plan members better understand the provider options covered by their insurance plans.

Deloitte, Google Cloud, and healthcare leaders are piloting MedLM’s capabilities to make it easier for care teams to discover information from provider directories and benefits documents. These inputs will then help contact center agents better identify best-fit providers for members based on plan, condition, medication and even prior appointment history, getting people faster access to the precise care they need.

As we bring the transformative potential of generative AI to healthcare, we’re focused on enabling professionals with a safe and responsible use of this technology. That’s why we’re working in close collaboration with practitioners, researchers, health and life science organizations, and the individuals at the forefront of healthcare every day. We’re excited by the progress we’ve seen in just one year — from building the first LLM to obtain a passing score (>60%) on U.S. medical-licensing-exam-style questions (published in Nature), to advancing it to obtain an expert level score (86.5%), to applying it in real-world scenarios through a measured approach. As we reflect on 2023 — and close out the year by expanding MedLM access to more healthcare organizations — we’re excited for the progress and potential ahead and our continuing work on pushing forward breakthrough research in health and life sciences.


Today, we’re sharing a significant upgrade to Google Cloud’s image-generation capabilities with Imagen 2, our most advanced text-to-image technology, which is now generally available for Vertex AI customers on the allowlist (i.e., approved for access).

Imagen 2 on Vertex AI allows our customers to customize and deploy Imagen 2 with intuitive tooling, fully-managed infrastructure, and built-in privacy and safety features. Developed using Google DeepMind technology, Imagen 2 delivers significantly improved image quality and a host of features that enable developers to create images for their specific use case, including:

- Generating high-quality, photorealistic, high-resolution, aesthetically pleasing images from natural language prompts
- Text rendering in multiple languages to create images with accurate text overlays
- Logo generation to create company or product logos and overlay them in images
- Visual question answering for generating captions from images, and for getting informative text responses to questions about image details

Importantly, Vertex AI’s indemnification commitment now covers Imagen on Vertex AI, which includes Imagen 2 and future generally available upgrades of the model powering the service. We employ an industry-first, two-pronged copyright indemnification approach that can give customers peace of mind when using our generative AI products.

Imagen 2 on Vertex AI boasts a variety of image generation features to help organizations create images that match their specific brand requirements with the same enterprise-grade reliability and governance customers are used to with Imagen.

New features now available in Imagen 2 include:

High-quality images: Imagen 2 can achieve accurate, high-quality photorealistic outputs with improved image+text understanding and a variety of novel training and modeling techniques.

Text rendering support: Text-to-image technologies often have difficulty correctly rendering text. If a model is prompted to generate a picture of an object with a specific word or phrase, for example, it can be challenging to ensure the correct phrase is part of the output image. Imagen 2 helps solve for this, which can give organizations a deeper level of control for branding and messaging.

Logo generation: Imagen 2 can generate a wide variety of creative and realistic logos (including emblems, lettermarks, and abstract logos) for businesses, brands, and products. It can also overlay these logos onto products, clothing, business cards, and other surfaces.

Captions and question-answer: Imagen 2’s enhanced image understanding capabilities enable customers to create descriptive, long-form captions and get detailed answers to questions about elements within the image.

Multi-language prompts: Beyond English, Imagen 2 is launching with support for six additional languages (Chinese, Hindi, Japanese, Korean, Portuguese, Spanish) in preview, with many others planned for release in early 2024. This feature includes the capability to translate between prompt and output, e.g., prompting in Spanish but specifying that the output should be in Portuguese.

Safety: Imagen 2 includes built-in safety precautions to help ensure that generated images align with Google’s Responsible AI principles. For example, Imagen 2 is integrated with our experimental digital watermarking service, powered by Google DeepMind’s SynthID, which enables allowlisted customers to generate invisible watermarks and verify images generated by Imagen. Imagen 2 also includes comprehensive safety filters to help prevent generation of potentially harmful content.

We look forward to organizations using these new features to build on the results they’ve already achieved with Imagen.

Snap is using Imagen to help Snapchat+ subscribers express their inner creativity. With the new AI Camera Mode, they can tap a button, type in a prompt or choose a pre-selected one, and generate a scene to share with family and friends or post to their story.

“Imagen is the most scalable text-to-image model with the safety and image quality we need,” said Josh Siegel, Senior Director of Product at Snap. “One of the major benefits of Imagen for Snap is that we can focus on what we do best, which is the product design and the way that it looks and makes you feel. We know that when we work on new products like AI Camera Mode, we can really rely on the brand safety, scalability, and reliability that comes with Google Cloud.”

Shutterstock has also emerged as a leading innovator bringing AI to creative production — including being the first to launch an ethically-sourced AI image generator, now enhanced with Imagen on Vertex AI. With the Shutterstock AI image generator, users can turn simple text prompts into unique, striking visuals, allowing them to create at the speed of their imaginations. The Shutterstock website includes a searchable collection of more than 16,000 Imagen pictures, all available for licensing.

“We exist to empower the world to tell their stories by bridging the gap between idea and execution. Variety is critical for the creative process, which is why we continue to integrate the latest and greatest technology into our image generator and editing features—as long as it is built on responsibly sourced data,” said Chris Loy, Director of AI Services, Shutterstock. “The Imagen model on Vertex AI is an important addition to our AI image generator, and we’re excited to see how it enables greater creative capabilities for our users as the model continues to evolve.”

Canva is also using Imagen on Vertex AI to bring ideas to life, with millions of images generated with the model to date. Users can access Imagen as an app directly within Canva and with a simple text prompt, generate a captivating image that fits their design needs.

“Partnering with Google Cloud, we’re continuing to use generative AI to innovate the design process and augment imagination,” said Danny Wu, Head of AI at Canva. “With Imagen, our 170M+ monthly users can benefit from the image quality improvements to uplevel their content creation at scale. The new model and features will further empower our community to turn their ideas into real images with as little friction as possible.”

To get started with Imagen 2 on Vertex AI, see our documentation or reach out to your Google Cloud account representative to join the Trusted Tester Program.


A week ago, Google announced Gemini, our most capable and flexible AI model yet. It comes in three sizes: Ultra, Pro, and Nano. Today, we are excited to announce that Gemini Pro is now publicly available on Vertex AI, Google Cloud’s end-to-end AI platform that includes intuitive tooling, fully-managed infrastructure, and built-in privacy and safety features. With Gemini Pro, developers can now build “agents” that can process and act on information.

Vertex AI makes it possible to customize and deploy Gemini, empowering developers to build new and differentiated applications that can process information across text, code, images, and video at this time. With Vertex AI, developers can:

- Discover and use Gemini Pro, or select from a curated list of more than 130 models from Google, open-source, and third-parties that meet Google’s strict enterprise safety and quality standards. Developers can access models as easy-to-use APIs to quickly build them into applications.
- Customize model behavior with specific domain or company expertise, using tuning tools to augment training knowledge and even adjust model weights when required. Vertex AI provides a variety of tuning techniques including prompt design, adapter-based tuning such as Low Rank Adaptation (LoRA), and distillation. We also provide the ability to improve a model by capturing user feedback through our support for reinforcement learning from human feedback (RLHF).
- Augment models with tools to help adapt Gemini Pro to specific contexts or use cases. Vertex AI Extensions and connectors let developers link Gemini Pro to external APIs for transactions and other actions, retrieve data from outside sources, or call functions in codebases. Vertex AI also gives organizations the ability to ground foundation model outputs in their own data sources, helping to improve the accuracy and relevance of a model’s answers. We offer the ability for enterprises to use grounding against their structured and unstructured data, and grounding with Google Search technology.
- Manage and scale models in production with purpose-built tools to help ensure that once applications are built, they can be easily deployed and maintained. To this end, we’re introducing a new way to evaluate models called Automatic Side by Side (Auto SxS), an on-demand, automated tool to compare models. Auto SxS is faster and more cost-efficient than manual model evaluation, as well as customizable across various task specifications to handle new generative AI use cases.
- Build search and conversational agents in a low code / no code environment. With Vertex AI, developers across all machine learning skill levels will be able to use Gemini Pro to create engaging, production-grade AI agents in hours and days instead of weeks and months. Coming soon, Gemini Pro will be an option to power search summarization and answer generation features in Vertex AI, enhancing the quality, accuracy, and grounding abilities of search applications. Gemini Pro will also be available in preview as a foundation model for conversational voice and chat agents, providing dynamic interactions with users that support advanced reasoning.
- Deliver innovation responsibly by using Vertex AI’s safety filters, content moderation APIs, and other responsible AI tooling to help developers ensure their models don’t output inappropriate content.
- Help protect data with Google Cloud’s built-in data governance and privacy controls. Customers remain in control of their data, and Google never uses customer data to train our models. Vertex AI provides a variety of mechanisms to keep customers in sole control of their data including Customer Managed Encryption Keys and VPC Service Controls.
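To make the adapter-based tuning mentioned in the list above more concrete, here is a minimal sketch of the arithmetic behind Low Rank Adaptation, written in plain Python. It is not Vertex AI code: the helper names (`matmul`, `lora_effective_weight`, `trainable_params`) and the matrix sizes are assumptions chosen for illustration. The idea is that the pretrained weight matrix W stays frozen while two much smaller matrices, B and A, are trained, and the model uses W + BA.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w, b, a, scale=1.0):
    """Return W + scale * (B @ A). W is frozen; only B and A would be trained."""
    delta = matmul(b, a)
    return [[wv + scale * dv for wv, dv in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


def trainable_params(d, k, r):
    """Compare full fine-tuning (d*k values) with a rank-r adapter (r*(d+k) values)."""
    return d * k, r * (d + k)


# Toy 2x2 weight matrix with a rank-1 adapter (illustrative numbers only).
w = [[1, 2], [3, 4]]          # frozen pretrained weights, shape d x k
b = [[1], [2]]                # adapter matrix B, shape d x r
a = [[10, 20]]                # adapter matrix A, shape r x k
adapted = lora_effective_weight(w, b, a)

# With B set to zero the adapter is a no-op, a common starting point for training.
untouched = lora_effective_weight(w, [[0], [0]], a)

full, adapter = trainable_params(1024, 1024, 8)
```

For a 1024 x 1024 layer, a rank-8 adapter trains 16,384 values instead of 1,048,576, which is why adapter-based tuning is attractive when adjusting a large model for a specific domain.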

Google’s comprehensive approach to AI is designed to help keep our customers safe and protected. We employ an industry-first, two-pronged copyright indemnity approach to help give Cloud customers peace of mind when using our generative AI products. Today we are extending our generated output indemnity to now also include model outputs from PaLM 2 and Vertex AI Imagen, in addition to an indemnity on claims related to our use of training data. Indemnification coverage is planned for the Gemini API when it becomes generally available.

The Gemini API is now available. Gemini Pro is also available on Google AI Studio, a web-based tool that helps quickly develop prompts. We will be making Gemini Ultra available to select customers, developers, partners and safety and responsibility experts for early experimentation and feedback before rolling it out to developers and enterprise customers early next year.

Join us today at our Applied AI Summit to learn more.


AppLovin makes technologies that help businesses of every size connect to their ideal customers. The company provides end-to-end software leveraging AI solutions for businesses to reach, monetize and grow their global audiences. AppLovin has been exploring and harnessing AI for product and service enhancements across its ad platform to improve the user experience in operations such as monetization and to support effective, data-driven marketing decisions.

AppLovin achieved increased automation in critical operations — ad targeting, bid setting, learning phases, and more — that were traditionally reliant on manual adjustments by their team or partners. However, AppLovin encountered a notable challenge: implementing AI, from managing training workloads to processing billions of automated recommendations daily, requires a robust cloud infrastructure. To overcome this challenge, AppLovin upgraded the infrastructure supporting its AI advertising algorithm using the latest, top-of-the-line hardware, including Google Cloud G2 VMs. One of AppLovin’s goals was to modernize its AdTech platform by leveraging Google’s state-of-the-art cloud technology and infrastructure; they were able to achieve this vision and accelerate their AI development timeline.

In 2022, AppLovin was in the middle of a large-scale migration to Google Kubernetes Engine (GKE) to ease the strain on their legacy infrastructure, reduce latency and improve their ability to scale effortlessly. AppLovin began testing G2, the industry’s first cloud VM powered by NVIDIA L4 Tensor Core GPUs, at the beginning of 2023. G2 was specifically built to handle large inference AI workloads like those AppLovin was dealing with, making it an ideal solution.

Leveraging G2 VMs and GKE together, they were able to:

- Improve automation and consistency across their application stack
- Reduce training time by leveraging GPU hardware
- Achieve the agility and flexibility to launch in new regions at will

By switching from NVIDIA T4 GPUs to G2 VMs, powered by NVIDIA L4 GPUs, AppLovin experienced up to a 4x improvement in performance. Additionally, their switch from NVIDIA A10G GPUs to G2 VMs lowered production infrastructure costs by as much as 40%.

“With Google Cloud’s leading infrastructure and GPUs, AppLovin is able to achieve nearly 2x improved price/performance compared with industry alternatives and support the company’s AI techniques developments,” said Omer Hasan, Vice President of Operations, AppLovin.

And, as they’ve moved their advertisers seamlessly to new systems, they’ve been able to pass the benefits of these changes right along to their customers.

Peak Games, for example, describes “substantial growth in ROAS campaigns with AppLovin… with the recent AI advancements.” And DealDash has stated that “[AppLovin’s] AI-powered campaigns and unparalleled support have helped us achieve remarkable efficiency.”

AppLovin may not be a startup anymore (the company went public in April 2021, and its customers have used its tools to achieve more than 16 billion installs on more than two billion mobile devices in 130 countries), but its desire to retain a disruptive edge is abundantly clear.

At Next ’23, Basil Shikin, Chief Technology Officer at AppLovin, shared that he believes “Google was a force multiplier in creating our culture of innovation at AppLovin.” He also stated that “with the help of Google Cloud’s engine, AppLovin will be able to remain on the forefront of that evolution.”

Omer Hasan, Vice President of Operations at AppLovin, has even directly credited the role of Google’s ongoing innovation in upgrading their core tools: “Because of Google Cloud’s deep commitment to AI technologies, the advancements in our platform, AXON 2.0, were made possible.”

He goes on to say that “we saw really incredible improvements on our team’s agility, our ability to respond to the environment, and updating our training models as fast as possible.”

AppLovin has achieved impressive growth since its establishment in 2012, with annual ad spend exceeding $3.5 billion and over 2.5 million requests per second. This growth has been fueled by the company’s core values of “Move Fast” and “Never Stop Learning,” putting it at the forefront of the AI revolution in adtech.

AppLovin continues to leverage AI techniques in its industry-leading products. Scalability, efficiency, and value are essential to the business as they continue to add developers to their customer base, and as their existing customers are emboldened to amp up their advertising efforts.

“We love to iterate, and we love to change. Moving all of our machine learning/AI away from static deployments into an autoscale GKE environment, coupled with the introduction of GPUs, allowed for rapid scaling,” said Omer Hasan, AppLovin Vice President of Operations. “With that elastic infrastructure in place, it became a lot easier to deploy quickly, reliably, and consistently.”

In their relentless pursuit of growth and innovation, AppLovin is harnessing the power of Google Cloud’s security solutions. With a foundation built on Google infrastructure, integrating GKE, Vertex AI, and BigQuery, they’re forging ahead securely, achieving unprecedented speed, scale, and endless possibilities.

The recent implementation of NVIDIA L4 GPUs is a testament to their commitment to future-proofing, demonstrating that AppLovin is only just getting started when it comes to scaling and reaching new heights of success.


We are in the midst of a new era of research and innovation across disciplines and industries. Researchers increasingly rely on computational methods to drive their discoveries, and AI-infused tools supercharge the need for powerful computational resources. Not only is the speed of discovery accelerating, the workflows used to make these discoveries are also rapidly advancing. These increasing and changing demands put tremendous pressure on those responsible for providing research groups with the needed high performance computing (HPC) resources. Helping the teams that provide research computing resources to scientists is where Google Cloud steps in.

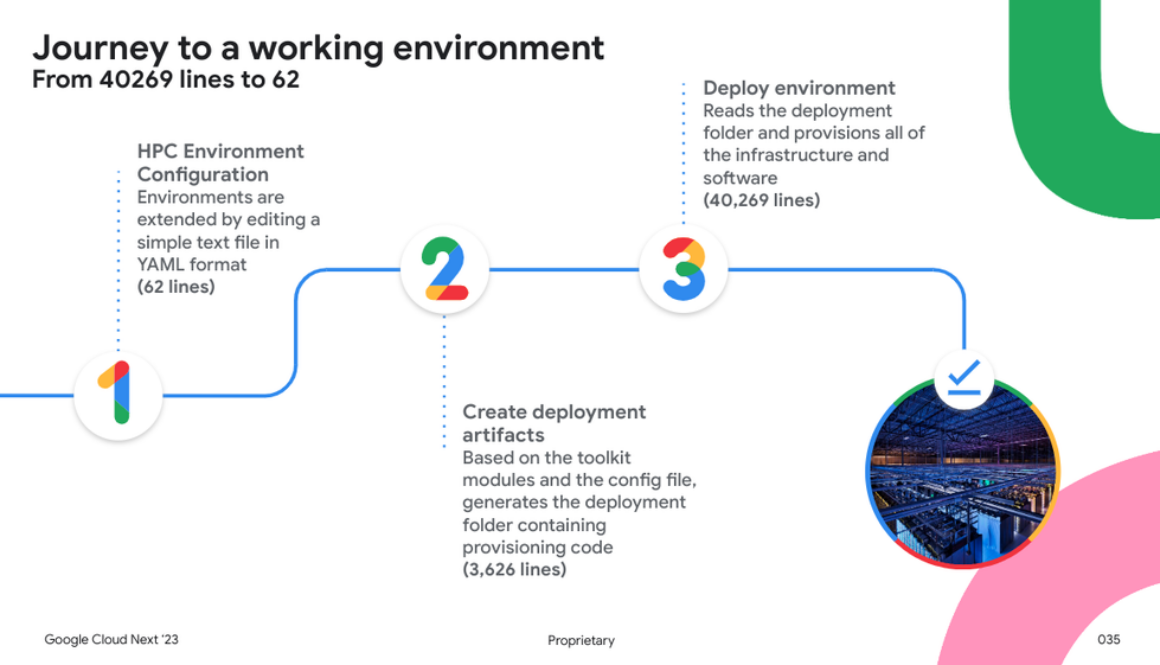

The cloud is made to readily meet changing computational requirements. At Google Cloud, we apply this flexibility to HPC demands such as those posed by research. To make the provisioning, modifying, and decommissioning of those HPC environments in the cloud more accessible, we have developed the Google Cloud HPC Toolkit, an open-source software product for defining HPC environments in Google Cloud.

The Google Cloud HPC Toolkit encapsulates HPC best practices and cleanly exposes relevant configuration parameters in a compact infrastructure-as-code configuration file. At the same time, the user can inspect the complete set of commands in the configuration file used to create the infrastructure and thereby control the process and customize components as needed. This approach caters to research groups and IT staff from research computing facilities alike, helping them build HPC environments that meet dynamic computational science and AI/ML needs.

Cloud HPC Toolkit can dramatically simplify an HPC deployment, using fewer than 100 lines of YAML in an HPC blueprint to create an HPC environment that would otherwise require 40,000+ lines of code.

Stanford Doerr School of Sustainability

Stanford was among the earliest adopters of Google’s Cloud HPC Toolkit. They started using it to connect Google Cloud and on-premises resources as soon as it became available.

As the school began adopting cloud computing, they wanted to ensure researchers could maintain the same experience they had on-premises. To do this, they paired a custom startup script with Chrome Remote Desktop to give researchers the same interface they’re familiar with, keeping the process streamlined no matter where research is being done. The researcher logs in to interactive nodes in Chrome Remote Desktop and is greeted with an experience similar to accessing on-premises clusters. Their use of the Toolkit has continued to grow: the team has developed its own modules to enable quick, secure use of Vertex AI instances for code development for an ever-growing user base.

“Stanford Doerr School of Sustainability is expanding computing capabilities for its researchers through Google Cloud. HPC Toolkit enables us to quickly, securely and consistently deploy HPC systems to run experiments at scale, with blueprints that can be deployed and redeployed with varying parameters, giving us the agility we need to meet the burgeoning needs of our scientists. With the Toolkit, we can stand up clusters with different partitions depending on our users’ needs, so that they can take advantage of the latest hardware like NVIDIA GPUs when needed and leverage Google Cloud’s workload-optimized VMs to reach price-performance targets. Dynamic cluster sizes, the ability to use spot VMs when appropriate in cluster partitions, and the ability to quickly get researchers up and running in environments they are used to have all been enhanced by the Toolkit.”

– Robert Clapp, Senior Research Engineer, Stanford Doerr School of Sustainability

University of California, Riverside

The University of California, Riverside (UCR), has been working to adopt Google Cloud for its infrastructure needs. Ensuring that researchers retain access to high-performance computing was an important consideration with the move.

UCR’s HPC admin team works with a number of different research groups, each of which has its own specifications for what its clusters need to support. To support these groups, the HPC team needed a common set of parameters that could be customized quickly. They also needed to ensure the security and reliability of those clusters. To do this, they created a set of Cloud HPC Toolkit blueprints that allow their users to customize specific components while maintaining a defined set of standards. This standardization also lets them share blueprints with other researchers, so that other labs can more easily reproduce their work and validate findings. They followed up by employing auto-scaling measures to ensure their researchers weren’t limited by storage or compute capacity.
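The pattern described above, a shared template whose standards are fixed while a few parameters stay open to each lab, can be sketched in a few lines of Python. This is an illustration only: the dictionary layout, the field names, and the `render_blueprint` helper are hypothetical and are not the Cloud HPC Toolkit’s actual blueprint schema, which is written in YAML.

```python
import copy

# Hypothetical site-wide template. Field names are illustrative assumptions,
# not the Cloud HPC Toolkit schema.
BASE_BLUEPRINT = {
    "blueprint_name": "lab-cluster",
    "vars": {
        "region": "us-central1",
        "machine_type": "c2-standard-60",
        "max_nodes": 20,
        "use_spot": False,
    },
    # Settings the HPC team wants to keep identical for every lab.
    "standards": {"enable_os_login": True, "allow_public_ips": False},
}

# The only settings a research group is allowed to change.
CUSTOMIZABLE = {"region", "machine_type", "max_nodes", "use_spot"}


def render_blueprint(lab_name, overrides):
    """Return a per-lab copy of the template with the allowed overrides applied."""
    locked = set(overrides) - CUSTOMIZABLE
    if locked:
        raise ValueError(f"not customizable: {sorted(locked)}")
    blueprint = copy.deepcopy(BASE_BLUEPRINT)  # never mutate the shared template
    blueprint["blueprint_name"] = f"{lab_name}-cluster"
    blueprint["vars"].update(overrides)
    return blueprint


# A GPU-oriented lab changes the machine type and opts in to spot VMs.
gpu_lab = render_blueprint("genomics", {"machine_type": "a2-highgpu-1g", "use_spot": True})
```

Because every lab starts from the same template, two groups that share their override dictionaries can reproduce each other’s environment, while the security-related standards cannot drift.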

“Through the scale and innovation of Google Cloud and the HPC Toolkit, we’ve revolutionized research at UCR. We achieve goals once deemed impossible by our researchers, in extraordinary timeframes. The HPC Toolkit simplifies the design and administration of HPC clusters, making them standardized and extensible. This enables UCR to offer lab-based HPC clusters that are tailored for specific research and workflow needs. Whether it’s AI/ML HPC GPU Clusters, Large Scale Distributed Clusters, High Memory HPC Clusters, or HPC Visualization Clusters, the HPC Toolkit streamlines the process, making it as easy as configuring a YAML file.”

– Chuck Forsyth, Director Research Computing, UCR

Start your own journey

Stanford and UCR are just two organizations leveraging the power of HPC on Google Cloud to drive innovation forward. Whether you are a research group taking care of your own computing resources or a professional working in a university research computing facility, the Cloud HPC Toolkit provides a powerful approach to manage your infrastructure in the cloud. The Toolkit is open source with a community of users and Google Cloud partners that share ready-made blueprints to help you get started. Visit the Google Cloud HPC Toolkit and the Google Cloud HPC sites to learn more and get started with HPC on Google Cloud today.

What’s more, if you work with a public sector entity, we can help keep costs predictable through our Public Sector Subscription Agreement (PSSA), which reduces the risk of unforeseen expenses by maintaining a fixed monthly price for defined environments, without the variability of month-to-month consumption spikes. The PSSA helps meet the needs of researchers with predetermined budgets and grants that can’t be exceeded.

Connect with your Google Cloud team today to learn more about our Public Sector Subscription Agreement (PSSA), HPC solutions for verticals and HPC tooling aimed at administrative tasks. We’re here to help you get your researchers and their HPC workloads up and running on Google Cloud.


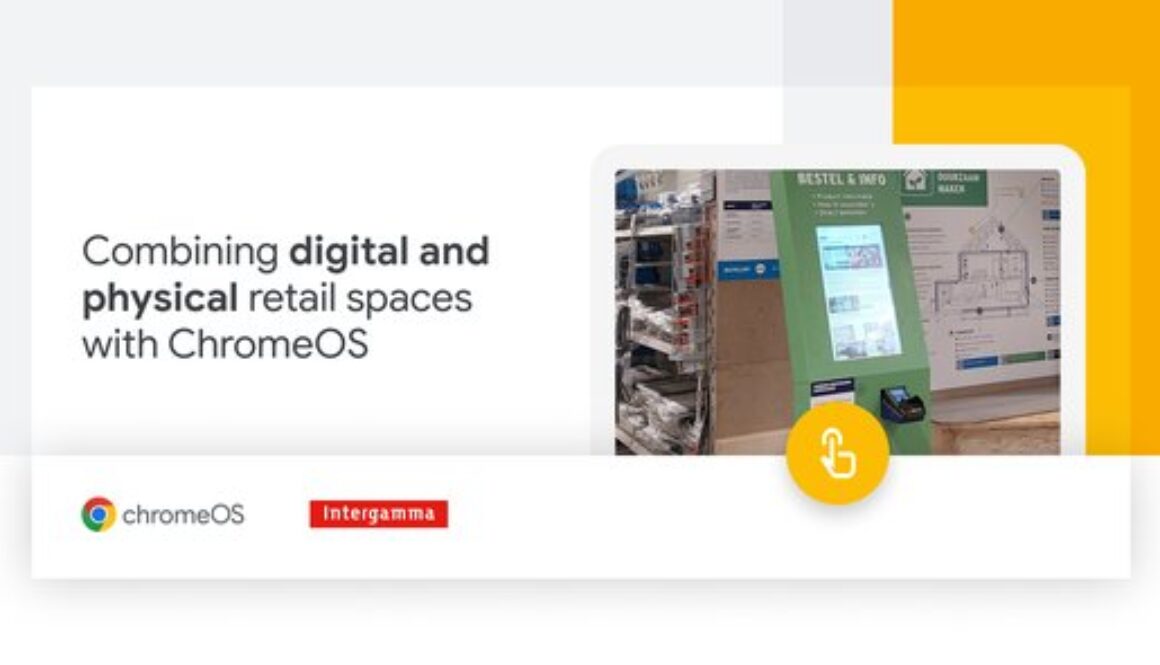

Editor’s note: Today’s post is by Gerrit Klaas van der Vaart, Information Manager for Checkout, Loyalty, and Retention for Intergamma, based in Leusden, Netherlands. Intergamma is the largest DIY franchisee organization in the Benelux region, with almost 400 stores in the Netherlands and Belgium. The company’s stores are using ChromeOS devices to power kiosks where shoppers can customize and order home-decor products.

In our company-owned Gamma and Karwei hardware stores across the Netherlands and Belgium, our 1,500 ChromeOS powered kiosks combine the best of online and in-store shopping. For some products, like made-to-measure curtains, customers need more assistance after they have looked at in-store samples. With an employee at their side, customers get help with measuring, choosing product options, and checking out. Shopping is a breeze – customers can choose and buy products in just a few minutes.

Our 10,000 employees get easy-to-use, trouble-free devices. And now that we have seen the value of ChromeOS powered kiosks in stores to both customers and employees, we plan to roll out fixed and mobile ChromeOS devices across all 400 stores.

Kiosks powering the in-store experience

We’ve always had kiosks in our stores, but we previously ran into technical limitations with payments and printing. Employees were placing the old kiosks in storage since they didn’t want to frustrate customers with technology that didn’t work properly. We were heading toward an end-of-life deadline on the devices, which was a great time to make a change.

We chose Acer Chromeboxes to power the large 26-inch screens in stores for customers to view and choose products. The ChromeOS powered kiosks give customers a visually engaging experience with the ability to choose products and see items in real-time, before making a purchase.

Managing our ChromeOS devices

ChromeOS devices give us more options to keep our kiosks trouble-free for customers and employees. All of our stores have basically the same IT setup. Of course, the number of checkouts can differ and the number of kiosks can differ, but the underlying technical specifications are all the same.

With the Google Admin console, we can set policies for each store which helps employees easily log on to only authorized applications.

An easier and more engaging shopping experience

The ChromeOS devices in our stores are faster and more stable than the HP Windows devices we used to have. We hardly ever have to troubleshoot issues. With more uptime and easier management, we’re confident that both employees and customers are having better experiences.


Editor’s note: Today we hear from Space and Time, whose decentralized data warehouse and novel zero-knowledge (ZK) proof for SQL operations were recently integrated into BigQuery. Read on to learn more about how Space and Time is working to improve blockchain-based smart contracts for the Web3 space, and how to use their technology within the Google Cloud data ecosystem.

Blockchain transactions, which are executed by smart contracts, are cryptographically secured and can be independently verified by any participant in the network. As such, blockchain has vast implications for industries like financial services, supply chain, and healthcare, where the integrity, traceability, and transparency of data are critical. In fact, we believe a blockchain-based Web3 has the potential to revolutionize how these sectors manage data, conduct transactions, and interact with consumers.

However, smart contracts are limited in the types of data they can access, the amount of storage they can leverage, and the compute functions they can perform. Without the help of external solutions, smart contracts can only execute very basic if/then logic against certain data points that exist natively onchain. In order to power enterprise use cases, such as dynamic energy pricing or automated banking compliance, smart contracts need a way to access offchain data and compute.

The data that drives smart contracts often exists offchain in SQL databases and data warehouses, where it’s analyzed and aggregated before being sent to a contract onchain. This allows the smart contract to operate more efficiently and at scale, but poses a dire problem: without a way to verify this data or the processing of it, smart contracts are vulnerable to manipulation, which can result in a contract executing incorrectly, causing value loss onchain.

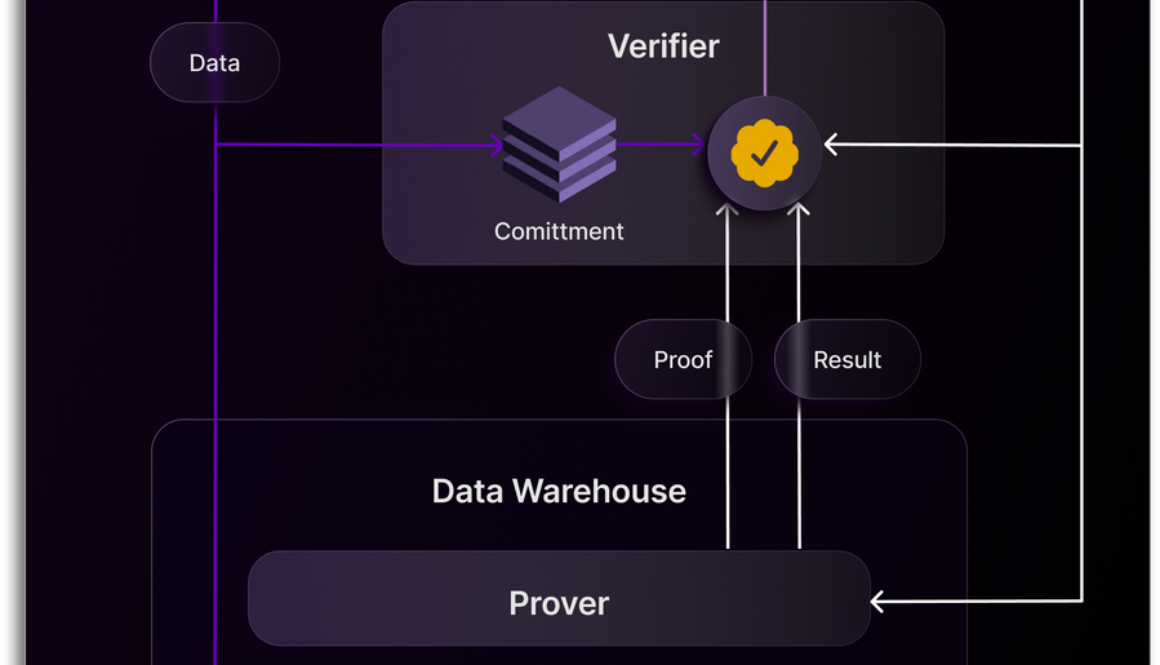

Enter Proof of SQL, a zero-knowledge (ZK) proof that enables tamperproof data processing at scale and provides cryptographic proof that query results have been processed as expected against untampered data. Proof of SQL combines ZK technology with traditional database processing to advance Web3 use cases, including:

- Verifiable asset exchanges
- Data-driven decentralized finance (DeFi)
- Complex earning schemes for Web3 play-to-earn games
- Dynamic tokenized real-world assets (RWAs)

Proof of SQL lets developers connect ZK-verified query processing over data in Google Cloud to their smart contracts. This enables smart contracts to execute based on more complex, data-driven logic without the costly risk of tampering.

As a ZK-proof attached to SQL databases, Proof of SQL cryptographically proves to a client that both query execution and underlying tables have not been tampered with. With Proof of SQL, the root of trust is established by creating virtual ‘tamperproof tables’ inside the target attached database. As data gets added to these tables by clients, special hashes (or ‘commitments’) are updated. Later, when validating a query and associated ZK-proof, these commitments are used to confirm its validity. By enabling ZK proof for SQL operations with BigQuery, customers can cryptographically ensure that query results were computed accurately and on untampered data.

Figure 1: Proof of SQL architecture

Proof of SQL is composed of two interoperating components: the Prover, which generates the proof-of-query execution, and the Verifier, which validates the generated proof. When a client ingests data into the database, that data is routed to the Verifier. The Verifier creates a commitment to the data, stores it for later use, then routes the data to the database.

When the client sends a query request, that request is routed to the Prover. The Prover parses the query, computes the correct result, and produces a proof of query execution. It then sends both the query result and the proof back to the Verifier.

Once the Verifier has this proof, it uses the commitment to check the proof against the result and verify that the Prover has produced the correct result for the query request. This query result is then routed back to the client along with a ‘success’ flag. If the proof does not pass, the Verifier sends a failure message instead.
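The message flow between the client, the Verifier, the Prover, and the database can be sketched as a toy model. The code below is not Space and Time’s implementation and is not zero-knowledge: as a loudly labeled stand-in, the commitment is a SHA-256 hash chain and the “proof” is simply the list of rows the Prover read, which the Verifier re-hashes and re-queries. A real ZK proof would let the Verifier check the result without receiving the data. All class and function names here are hypothetical.

```python
import hashlib
import json


def extend(commitment: bytes, row: dict) -> bytes:
    """Fold one row into the running commitment (a plain hash chain, not a ZK commitment)."""
    encoded = json.dumps(row, sort_keys=True).encode()
    return hashlib.sha256(commitment + encoded).digest()


class Database:
    def __init__(self):
        self.rows = []


class Verifier:
    def __init__(self, db):
        self.db = db
        self.commitment = b""

    def ingest(self, row):
        """Commit to the incoming row, then route it on to the database."""
        self.commitment = extend(self.commitment, row)
        self.db.rows.append(row)

    def verify(self, query, result, proof):
        """Check the toy proof against the stored commitment and the claimed result."""
        recomputed = b""
        for row in proof:
            recomputed = extend(recomputed, row)
        ok = recomputed == self.commitment and query(proof) == result
        return {"success": ok, "result": result if ok else None}


class Prover:
    def __init__(self, db):
        self.db = db

    def execute(self, query):
        """Run the query and return (result, toy proof)."""
        rows = list(self.db.rows)
        return query(rows), rows


db = Database()
verifier, prover = Verifier(db), Prover(db)
for row in [{"id": 1, "amount": 40}, {"id": 2, "amount": 75}, {"id": 3, "amount": 120}]:
    verifier.ingest(row)


def total_over_50(rows):
    return sum(r["amount"] for r in rows if r["amount"] > 50)


result, proof = prover.execute(total_over_50)
honest = verifier.verify(total_over_50, result, proof)

# Tampering with stored data after ingestion breaks the commitment check.
db.rows[0]["amount"] = 999
bad_result, bad_proof = prover.execute(total_over_50)
tampered = verifier.verify(total_over_50, bad_result, bad_proof)
```

In this sketch the honest query comes back with a success flag, while the query over tampered data is rejected because the recomputed hash chain no longer matches the commitment recorded at ingestion time.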

Figure 2: Proof of SQL on BigQuery

To enable Proof of SQL to work with BigQuery, a Space and Time Prover node is connected adjacent to the BigQuery engine. When a request for a tamperproof SQL query reaches BigQuery and is directed to the Prover, the result, accompanied by its proof of correctness, is generated. This proof-result pair can either be verified by the Space and Time Gateway, or through a client-side library. The latter shifts the root of trust to the user, which some clients prefer, while others choose to delegate verification to the Space and Time Gateway, which carries out this role on their behalf.

To enable Space and Time’s Proof of SQL service to ZK-prove that queries against BigQuery data were executed accurately and that the underlying data hasn’t been tampered with, a client must simply:

Allow the Space and Time Gateway to be positioned in front of BigQuery as a proxy or load server: BigQuery users have the flexibility to label certain tables as ‘tamperproof’. For tables that require tamperproof queries, the client loads the data through the Space and Time Gateway. During this process, the Gateway creates cryptographic commitments on the data, which are used later for proof verification.

Provide the Prover node access to BigQuery storage (local or external): Once a table has been designated as tamperproof and the data is loaded via the Gateway, the next step is granting the Prover node access either to BigQuery storage directly, or to external storage venues like Google Cloud Storage. Regardless of where the tamperproof table is defined, a user has to authorize the Prover to read from it.

Load data and route queries executed against BigQuery through the Gateway (only if those queries need to be ZK-verified): For query operations that don’t require Proof of SQL, the BigQuery user experience is no different. For tamperproof queries, requests must be routed through the Space and Time Gateway, in order to ensure that the Proof of SQL proof can be validated against the commitment that was created during loading.
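
The routing rule in these steps can be sketched as a small decision function. This is only an illustration under stated assumptions: the `Router` class, its method names, and the `"gateway"` and `"bigquery"` destination strings are hypothetical and do not correspond to any Space and Time or BigQuery API.

```python
from dataclasses import dataclass, field


@dataclass
class Router:
    """Decides whether traffic goes through the Gateway or straight to BigQuery."""

    tamperproof_tables: set = field(default_factory=set)

    def label_tamperproof(self, table):
        # Step 1: the user labels a table as tamperproof.
        self.tamperproof_tables.add(table)

    def route_load(self, table):
        # Tamperproof tables are loaded through the Gateway so that
        # commitments can be created during loading.
        return "gateway" if table in self.tamperproof_tables else "bigquery"

    def route_query(self, table, needs_proof):
        # Ordinary queries are unaffected; only ZK-verified ones are proxied.
        if not needs_proof:
            return "bigquery"
        if table not in self.tamperproof_tables:
            raise ValueError(f"{table!r} has no commitment; load it through the gateway first")
        return "gateway"


router = Router()
router.label_tamperproof("orders")
load_target = router.route_load("orders")
plain_query_target = router.route_query("orders", needs_proof=False)
proven_query_target = router.route_query("orders", needs_proof=True)
```

A proof request against a table that was never loaded through the Gateway raises an error here, since there would be no commitment to validate the proof against.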

With these steps complete, customers can get up and running with ZK-proven queries quickly and efficiently.

The ability to ZK-verify queries will enable Google Cloud developers and enterprises to build a wealth of new Web3 use cases powered by verifiable data processing, including verifiable exchanges, data-driven DeFi, complex earning schemes for play-to-earn Web3 games, and dynamic tokenized RWAs.

Proof of SQL can be leveraged to ensure the integrity of metadata related to asset transactions on exchanges. In the case of Golteum, a Web3 trading platform for both digital and real-world assets that recently announced its participation in the Google for Startups Cloud Program, Proof of SQL is able to verify the authenticity and chain of custody of the precious metals being traded, thereby enhancing trust in and security of the platform’s operations, which is paramount for a platform that operates on transparent trading and asset-backed tokens. Proof of SQL allows Golteum to post verifiable cryptographic proofs of its SQL operations, ensuring that the data related to the custody and origin of precious metals traded on Golteum is accurate and unaltered.

Decentralized finance, or DeFi, continues to rapidly evolve. Even so, traditional financial systems still far outpace their DeFi counterparts in complexity and efficiency. With Proof of SQL on BigQuery, a more nuanced approach to DeFi becomes feasible. For example, Proof of SQL can be used to create verifiable dynamic credit scores based on a borrower’s onchain and offchain loan history. This allows for more tailored lending rates, aligning them with a borrower’s credibility. Additionally, BigQuery’s robust data-handling capabilities, combined with Proof of SQL, can facilitate the verifiable analysis of repayment behaviors, enabling lending protocols to set dynamic loan liquidation preferences. This not only enhances the accuracy and fairness of lending rates but also potentially increases the risk-adjusted returns for lending protocols.

Enabling Proof of SQL on BigQuery allows game developers to transform reward systems in play-to-earn Web3 games. By allowing offchain game telemetry to be processed and analyzed in BigQuery, Proof of SQL ensures that onchain reward systems are not based on simple metrics but are instead able to include detailed player analytics. This enables game developers to create more intricate reward logic, such as assessing team cooperation or strategy implementation. For example, in a multiplayer online battle arena game, rewards can be based on a combination of achievements, player behavior, and in-game strategy, all verified by Proof of SQL. This deeper level of engagement, underpinned by BigQuery’s data processing power and the verifiability of Proof of SQL, significantly enhances the gaming experience and broadens the scope of the in-game economy.

Tokenization of real-world assets (RWAs) such as real estate, event tickets, or collectibles benefits immensely from Proof of SQL on BigQuery. For assets with dynamic metadata, BigQuery provides an efficient platform for storing and processing fluctuating data, while Proof of SQL ensures that these updates are tamperproof and verifiable. For instance, in the tokenization of event tickets, BigQuery can store and dynamically update ticket prices based on availability, with Proof of SQL guaranteeing the integrity of the underlying data. When a transaction occurs, the smart contract queries the real-time data, executing transactions based on the most current information. This system not only enhances efficiency but also responds to the growing demand for transparency, particularly in industries like event ticketing, paving the way for a more user-friendly experience.

Space and Time focuses on ‘verifiable compute,’ combining a decentralized data warehouse and a full stack of ZK developer tools to empower Web3 developers to build decentralized applications at scale. Recently they announced a collaboration with Google Cloud. The work centers around enabling Proof of SQL – Space and Time’s novel ZK-proof for SQL operations – to work with BigQuery, and making Space and Time available to deploy from the Google Cloud Marketplace.


In 2006, Mike Wiacek stepped into Google’s Mountain View offices, beginning an almost 14-year journey. He founded Google’s Threat Analysis Group and co-founded Chronicle, an Alphabet cybersecurity venture now a part of Google Cloud. Today, Mike summarizes how his experiences as a Googler shaped the way he built Stairwell, his new company.

While cybersecurity may seem to be about erecting digital barriers, at its core cybersecurity is a data analysis challenge. At Stairwell, we comprehend the complexity of this challenge and have designed an adaptive, resilient system to counter adaptive threats.

We collect and store all executable files across an organization’s systems, forever. These files are continuously rescanned and analyzed in light of existing and emerging threat intelligence. This strategy enables real-time monitoring, retrospective threat assessments, bespoke detection engineering workflows, and novel security hunting capabilities.

A year after our general availability launch, Stairwell is tracking more than 8.2 billion file sightings across our customer environments.

In Stairwell’s early days, we chose open-source PostgreSQL for its reliability and ease of use. It was an effective choice for the short term, allowing us to focus on achieving product-market fit by learning from customer feedback.

However, we needed a more robust solution as we scaled up to meet our customer needs. Our database’s limitations started to emerge, which influenced our product development and vision in undeniable ways.

By last year, these limitations had become evident and we made the strategic decision to migrate our key-value data storage to Bigtable, Google’s scalable, high-performance NoSQL database known for its versatility in both batch and real-time workloads. The shift marked a transformative moment for Stairwell’s scaling capabilities.

Scaling challenges with traditional databases can hinder an ambitious engineering team, which is where a platform like Bigtable comes in. Bigtable acts as a catalyst, inspiring teams to aim higher. Within months, we were handling tables so large they would be unimaginable with our previous database. Engineering ambitions can rise to the occasion when given the right infrastructure.

While it is technically possible to use any database as a key-value store, Bigtable’s data model and performance are what made it the clear winner for us. Bigtable’s lexicographically sorted key model is pivotal for our specific use cases. We’ve optimized key schemas and column family qualifiers to reduce both the number of requests and the data volume per read/write operation.

Paired with reader expressions, Bigtable serves as both an indexed data store and a distributed hash table for us. By collocating related data, we gain the efficiency of precise key lookups along with the speed of scanning adjacent rows in a single query. Additionally, we use Bigtable’s garbage collection to auto-prune outdated records, maintaining a lean storage footprint. In sum, a well-designed data model with Bigtable is not just beneficial — it’s empowering.
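
To illustrate why a lexicographically sorted key space gives both precise lookups and cheap scans of adjacent rows, here is a minimal in-memory sketch. It does not use the Bigtable client library, and the key schema (`<file hash>#<environment>#<date>`) is a hypothetical example, not Stairwell's actual schema.

```python
import bisect


class SortedTable:
    """Toy key-value table that keeps row keys in lexicographic order."""

    def __init__(self):
        self._keys = []   # sorted list of row keys
        self._rows = {}   # row key -> value

    def put(self, key, value):
        if key not in self._rows:
            bisect.insort(self._keys, key)
        self._rows[key] = value

    def get(self, key):
        # Precise key lookup, as in a hash table.
        return self._rows.get(key)

    def scan_prefix(self, prefix):
        # Jump to the first key >= prefix, then read adjacent rows
        # until the prefix no longer matches.
        start = bisect.bisect_left(self._keys, prefix)
        matches = []
        for key in self._keys[start:]:
            if not key.startswith(prefix):
                break
            matches.append((key, self._rows[key]))
        return matches


table = SortedTable()
table.put("sha256:aaa#env1#2024-01-02", "sighting-1")
table.put("sha256:bbb#env1#2024-01-03", "sighting-2")
table.put("sha256:aaa#env2#2024-01-05", "sighting-3")

exact = table.get("sha256:bbb#env1#2024-01-03")
related = table.scan_prefix("sha256:aaa#")
```

Because every row for the same file hash shares a key prefix, the rows sit next to each other in sorted order, so one short scan returns all of them without touching unrelated rows.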

Our largest table is awe-inspiring: more than 328 million rows with column counts ranging from a solitary 1 to an overwhelming 10,000. This single table manages to house hundreds of billions of data points, while maintaining an average read latency of just 1.9 milliseconds and maxing out at 4 milliseconds. This is borderline magical!

Bigtable isn’t just a gigantic storage vault for us; it’s a high-performance analytics engine capable of executing both batch and streaming queries on a grand scale. We frequently run large Dataflow jobs that populate hundreds of millions of rows, while simultaneously supporting live user-facing queries that return results in single digit milliseconds. In one standout instance, Bigtable effortlessly served more than 22 million rows per second during an intense Dataflow job. This isn’t merely fast — it’s a game-changing level of data processing capability.

Many NoSQL databases boast about their scalability, but there’s often a hidden limitation: the actual speed at which they can scale. Bigtable leverages Google’s Colossus file system, allowing it to add new nodes in real-time, instantly bringing them online to handle incoming data requests, all without the necessity for data rebalancing and resharding. Unlike other NoSQL systems, Bigtable doesn’t suffer from throughput dilution upon downscaling, either.

Bigtable’s autoscaler automates scaling, and adjusts the node count in response to changes in query load, thus ensuring a consistent performance level. This efficiency translates directly into cost-effectiveness: We pay only for what we use, and we experience zero downtime during any of these operations.
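
The idea of adjusting node count to query load can be sketched with a simple proportional rule. This is an assumption made for illustration, not Bigtable's actual autoscaling algorithm: the function name, the parameters, and the rule of scaling toward a target CPU percentage within minimum and maximum bounds are all hypothetical.

```python
def target_node_count(current_nodes, cpu_percent, target_percent, min_nodes, max_nodes):
    """Return the node count that would bring CPU load back to the target.

    Uses integer ceiling division so the result never undershoots the
    capacity needed, then clamps it to the configured bounds.
    """
    if target_percent <= 0:
        raise ValueError("target_percent must be positive")
    desired = -(-(current_nodes * cpu_percent) // target_percent)  # ceiling division
    return max(min_nodes, min(max_nodes, desired))


scale_up = target_node_count(10, cpu_percent=90, target_percent=60, min_nodes=3, max_nodes=30)
scale_down = target_node_count(10, cpu_percent=20, target_percent=60, min_nodes=3, max_nodes=30)
capped = target_node_count(10, cpu_percent=100, target_percent=60, min_nodes=3, max_nodes=12)
```

Under these assumptions, a cluster of 10 nodes at 90% CPU with a 60% target grows to 15 nodes, the same cluster at 20% CPU shrinks to 4, and the upper bound caps growth at 12. Paying only for what is used follows from the scale-down case.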

Bigtable serves as the backbone for MalEval, our machine learning-based malware detection system. This system utilizes a neural network trained on extensive file metadata, extracted from thousands of file features via our in-house scanning tools.

Bigtable supports our training pipeline, which sifts through gigabytes of data across millions of rows to gather training samples, and our real-time malware scanner. The scanner ingests fresh metadata to feed into the trained model, allowing us to infer the likelihood of a file being malicious. Thanks to Bigtable’s efficiency, we can meet stringent latency requirements while processing millions of customer files daily.

Our entire backend at Stairwell is coded in Go, a language that we have found exceptionally fit for purpose. One remarkable feature of Bigtable that has made our lives easier is the `bttest` package in the Google Cloud client libraries for Go. This package provides an in-memory Bigtable server, ideal for both local development and for use within our unit tests. This is in stark contrast to other databases, where setting up similar environments for unit tests can be a resource-intensive task.

Migrating to Bigtable helped us envision a future and function for Stairwell that would have been inconceivable otherwise, but Bigtable isn’t just solving our current data needs. Bigtable is a forward-looking solution that equips us for the future of cybersecurity. It allows us to focus on what really matters: customer security. Bigtable’s scalability ensures we’re not just reacting to today’s security landscape but preparing for the unpredictable challenges of tomorrow.

Stairwell is one of many organizations using Bigtable to level up their goals. Check out the following posts to learn more about other organizations taking a similar approach in retail, FinTech, data quality and social media.

How Discord & Tamr built ML-driven applications with Bigtable
Credit Karma serves 63 billion personalized recommendations a day with Bigtable
Home Depot delivers personalized experiences with Bigtable

Get started with a Bigtable free trial today.


Food and water security are among the most pressing problems facing the world today. According to the United Nations’ Food and Agriculture Organization (FAO), the world will see a 60% increase in demand for food by 2050. And according to the World Health Organization, an additional 122 million people have been experiencing hunger since 2019 due to multiple ongoing crises. On the 8th day of COP 28, the focus was on how to build resilience into food, agriculture and water systems.

Addressing the food and water security challenge under changing climatic conditions is critical, but it’s also complex given the scale and magnitude of the problem. The primary obstacle has been access to data, and therefore to insights into both the impact of climate on agriculture and the impact of agriculture on climate.

At Google, our mission is to organize the world’s information and make it universally accessible and useful, so that decision makers can ask questions of increasingly large and complex datasets. At Google Cloud, we are partnering with organizations across the agricultural ecosystem to help solve the data and insight accessibility problem. Central to that endeavor is our planetary-scale remote sensing platform, Google Earth Engine. This pioneering analytics platform has 50 years of analysis-ready global satellite data, and the massive computational power to run machine learning (ML) models over the entire terrestrial surface of the globe, bridging the data and insight gap.

Earth Engine is already helping some of the largest organizations around the world, across many industries, including agriculture. For example, remote sensing startup Regrow, based in Australia, is focused on helping their customers transition to regenerative agricultural practices. Using Earth Engine and advanced machine learning models, they’re able to monitor 1.2B acres of land globally. By tracking, modeling, and monitoring agricultural practices, Regrow helps customers promote carbon sequestration in soil, reduce greenhouse gas emissions through low-till and no-till agriculture, and increase biodiversity and water usage. Earth Engine is also central to Unilever’s mission of sustainable sourcing, as part of their target of no deforestation in their palm oil supply chains. Leveraging Earth Engine and our partner solution TraceMark, which is built on Earth Engine, Unilever is able to trace its palm oil purchases back to the plantations, ensuring that they don’t source palm oil from deforested land.