GCP – Generating images with Imagen 2 on Vertex AI

Imagen 2 on Vertex AI brings Google’s state-of-the-art generative AI capabilities to application developers. With it, developers can build next-generation AI products that turn their users’ imagination into high-quality visual assets in seconds.

With Imagen, you can:

Generate novel images using only a text prompt (text-to-image generation).

Edit an entire uploaded or generated image with a text prompt.

Edit only parts of an uploaded or generated image using a mask area you define.

Upscale existing, generated, or edited images.

Fine-tune a model with a specific subject (for example, a specific handbag or shoe) for image generation.

Get text descriptions of images with visual captioning.

Get answers to a question about an image with Visual Question Answering (VQA).

In this blog post, we show you how to use the Vertex AI SDK to access Imagen 2’s image generation feature. The post is written as a code-along tutorial, so you can run each snippet as you read.

Environment setup

Before you get started, make sure you meet the following prerequisites:

Have an existing Google Cloud project, or create a new one.

Set up a billing account for the Google Cloud project.

Enable the Vertex AI API on the Google Cloud project.

To use Imagen 2 through the SDK, run the following command in your preferred Python environment to install the Vertex AI SDK:

    pip install --upgrade google-cloud-aiplatform

Restart the runtime after installation so that the newly installed packages are picked up. If you encounter any issues, check the installation guide for troubleshooting. Once the packages are installed properly, use the following code to initialize the Vertex AI SDK with your own PROJECT_ID and LOCATION values:

    import vertexai

    PROJECT_ID = "PROJECT_ID"  # @param {type:"string"}
    LOCATION = "LOCATION"  # @param {type:"string"}

    vertexai.init(project=PROJECT_ID, location=LOCATION)
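Hard-coded values are convenient in a notebook, but a script may prefer to read them from the environment. The following is a minimal sketch of that idea, not part of the SDK: the variable names VERTEX_PROJECT_ID and VERTEX_LOCATION are hypothetical, and the us-central1 fallback is an assumption chosen only for illustration.

```python
import os


def load_vertex_config(env=None):
    """Return (project_id, location) read from environment variables.

    VERTEX_PROJECT_ID and VERTEX_LOCATION are hypothetical names chosen
    for this sketch; the Vertex AI SDK does not define them.
    """
    env = os.environ if env is None else env

    project_id = env.get("VERTEX_PROJECT_ID", "").strip()
    if not project_id:
        raise ValueError("Set VERTEX_PROJECT_ID to your Google Cloud project ID")

    # Assumed fallback region, used only when no location is provided.
    location = env.get("VERTEX_LOCATION", "us-central1").strip()
    return project_id, location
```

The returned pair can then be passed on as vertexai.init(project=project_id, location=location).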

Next, initialize the image generation model using the following code:

    from vertexai.preview.vision_models import ImageGenerationModel

    model = ImageGenerationModel.from_pretrained("imagegeneration@005")

Now you are ready to start generating images with Imagen 2.

Image generation

Imagen 2 can generate images from text descriptions. It is powered by a massive dataset of text and images, and is able to understand human language to create images that are both accurate and creative.

The generate_images function generates images from a text prompt:

    images = model.generate_images(
        prompt="A fantasy art of a beautiful ice princess",
    )

The images variable now holds the generated results. Because `generate_images` returns a list-like collection of images, you need to index into it to get an individual image. To view the first generated image, execute the following code:

    images[0].show()

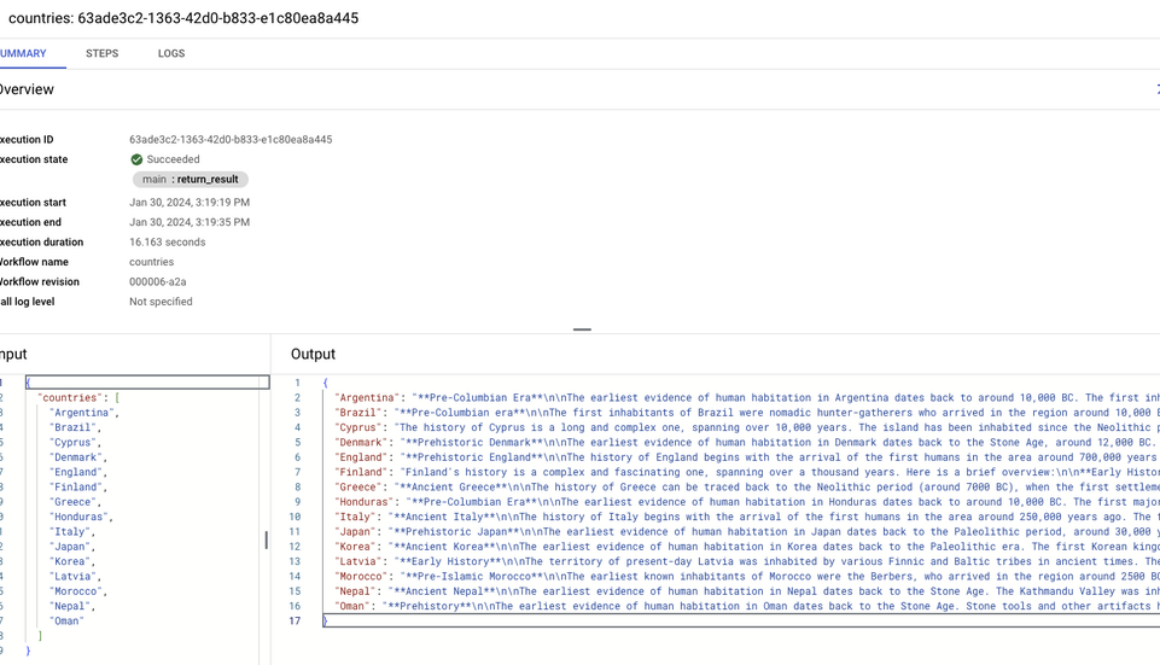

You should see a generated image which is similar to the image shown below:

Image generated using Imagen 2 on Vertex AI from the prompt: A fantasy art of a beautiful ice princess

If you prefer to save the generated images, you can execute the following code:

    images[0].save(
        location="./generated-image.png",
        include_generation_parameters=True,
    )
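When a request returns more than one image, you may want to save all of them rather than only the first. The sketch below is one way to do that, assuming the response can be iterated and that each item exposes the save method used above. The helper name save_all and the file naming scheme are hypothetical.

```python
from pathlib import Path


def save_all(images, out_dir, stem="generated-image"):
    """Save every image in `images` and return the paths that were written.

    `images` is any iterable of objects exposing
    save(location=..., include_generation_parameters=...).
    """
    out_path = Path(out_dir)
    out_path.mkdir(parents=True, exist_ok=True)

    saved = []
    for index, image in enumerate(images):
        # One numbered file per image, for example generated-image-0.png.
        target = out_path / f"{stem}-{index}.png"
        image.save(location=str(target), include_generation_parameters=True)
        saved.append(target)
    return saved
```

For example, save_all(images, "./output") would write one numbered PNG file per returned image into the output directory.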

Optional parameters

The generate_images function also accepts a few parameters which can be used to influence the generated images. The following section will explore those parameters individually.

The negative_prompt parameter can be used to omit features and objects when generating the images. For example, if you are generating a studio photo of a pizza and want to omit pepperoni and vegetables, add “pepperoni” and “vegetables” to the negative prompt.

    images = model.generate_images(
        prompt="A studio photo of a delicious pizza",
        negative_prompt="pepperoni, vegetables",
    )

Image generated using Imagen 2 on Vertex AI from the prompt: A studio photo of a delicious pizza
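If the terms to exclude come from a list (for example, user selections in an application), they need to be joined into a single string before being passed as negative_prompt. The helper below is a hypothetical convenience, not an SDK function. It follows the comma-separated form used in the example above and drops blank or repeated terms.

```python
def build_negative_prompt(terms):
    """Join terms into one comma-separated string, dropping blanks and duplicates."""
    seen = set()
    cleaned = []
    for term in terms:
        normalized = term.strip()
        key = normalized.lower()  # compare case-insensitively
        if normalized and key not in seen:
            seen.add(key)
            cleaned.append(normalized)
    return ", ".join(cleaned)
```

The result can be passed directly as the negative_prompt argument of generate_images.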

The number_of_images parameter determines how many images will be generated. It defaults to one, but you can set it to create up to four images. However, the total number of output images may not always be the same as what is set in the parameter because images that violate the usage guidelines will be filtered.

    images = model.generate_images(
        prompt="A studio photo of a delicious pizza",
        negative_prompt="pepperoni, vegetables",
        number_of_images=4,
    )
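Because filtering can reduce the number of images you get back, it can be useful to compare the requested count with the returned count. The following sketch assumes only that the response can be iterated. The function name count_filtered is hypothetical, and the 1 to 4 range check mirrors the limit described above.

```python
def count_filtered(response, requested):
    """Return how many of the requested images are missing from `response`."""
    if not 1 <= requested <= 4:
        raise ValueError("number_of_images must be between 1 and 4")

    # Count by iterating, so this works for any iterable response.
    returned = sum(1 for _ in response)
    return max(requested - returned, 0)
```

A non-zero result from count_filtered(images, 4) suggests that some images were removed by the usage-guideline filter.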

The seed parameter fixes the randomness of the image generation process, which makes the generated images deterministic. If you want the model to generate the same set of images every time, pass the same seed number with each request.

For example, if you used a seed number to generate four images, using the same seed number with the exact same parameters will generate the same set of images, although the ordering of the images might not be the same. Run the following code multiple times and observe the generated images:

    images = model.generate_images(
        prompt="A studio photo of a delicious pizza",
        negative_prompt="pepperoni, vegetables",
        number_of_images=4,
        seed=1,
    )
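Instead of comparing the results by eye, you could save the images from two runs and compare the files. The sketch below is one possible check, under the assumption that identical images are saved as byte-identical files. It hashes each file and compares the two runs as unordered collections, since the ordering of the images may differ. The function name same_image_set is hypothetical.

```python
import hashlib
from collections import Counter
from pathlib import Path


def same_image_set(paths_a, paths_b):
    """Return True if both runs contain byte-identical files, ignoring order."""

    def digests(paths):
        # A Counter keeps duplicates, so two copies of one image still count twice.
        return Counter(
            hashlib.sha256(Path(path).read_bytes()).hexdigest() for path in paths
        )

    return digests(paths_a) == digests(paths_b)
```

A False result does not necessarily mean the seed was ignored. It may also mean that the saved files differ in metadata or that a different number of images passed the filter.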

By setting the language parameter, you can use non-English image prompts to generate images. English (en) is the default value. The following values can be used to set the text prompt language:

Chinese (simplified) (zh/zh-CN)

Chinese (traditional) (zh-TW)

Hindi (hi)

Japanese (ja)

Korean (ko)

Portuguese (pt)

Spanish (es)

To demonstrate that, try executing the code below with a text prompt written in Korean: “A modern house on a cliff with reflective water, natural lighting, professional photo.”

    images = model.generate_images(
        prompt="물에 반사된 절벽 위의 현대적인 집, 자연 채광, 전문적인 사진",
        language="ko",  # ko = Korean
    )

Image generated using Imagen 2 on Vertex AI from the prompt: 물 반사, 자연 채광, 전문적인 사진, 절벽 위의 현대적인 집 (in English: water reflection, natural lighting, professional photo, a modern house on a cliff)
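If the language code comes from user input, you might validate it before sending a request. The following sketch hard-codes the values listed in this post. The names SUPPORTED_LANGUAGES and check_language are hypothetical, and the list may not match what the service currently accepts.

```python
# Language codes taken from the list in this post; not queried from the service.
SUPPORTED_LANGUAGES = {
    "en": "English",
    "zh": "Chinese (simplified)",
    "zh-CN": "Chinese (simplified)",
    "zh-TW": "Chinese (traditional)",
    "hi": "Hindi",
    "ja": "Japanese",
    "ko": "Korean",
    "pt": "Portuguese",
    "es": "Spanish",
}


def check_language(code="en"):
    """Return `code` unchanged if it is in the list above, else raise ValueError."""
    if code not in SUPPORTED_LANGUAGES:
        supported = ", ".join(sorted(SUPPORTED_LANGUAGES))
        raise ValueError(f"Unsupported language {code!r}; expected one of: {supported}")
    return code
```

The validated value can then be passed as the language argument, for example language=check_language("ko").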

Head over to the Imagen documentation and the API reference page to learn more about Imagen 2’s features. Additionally, the concepts presented in this blog post can be accessed in the form of a Jupyter Notebook in our Generative AI GitHub repository, providing an interactive environment for further exploration. To keep up with our latest AI news, be sure to check out the newest Gemini models available in Vertex AI and Gemma, Google’s new family of lightweight, state-of-the-art open models.
