GCP – Ensure website reliability with Synthetic Monitoring broken link checker

Post Content

Read More for the details.

Google Distributed Cloud Virtual for vSphere, or GDCV (vSphere), enables customers to run the same Kubernetes that powers Google Kubernetes Engine (GKE) in the cloud on their own hardware and in their own data centers, with GKE Enterprise tooling to manage clusters at enterprise scale. Enterprises rely on GDCV (vSphere) to support their primary business applications, providing a scalable, secure, and highly available architecture. Because it integrates with existing VMware vSphere infrastructure, GDCV makes it easy to deploy secure, consistent Kubernetes on-premises. GDCV (vSphere) uses that integration to meet high availability requirements, including HA admin and user clusters, auto-scaling, node repair, and now, VMware’s advanced storage framework.

Many VMware customers use aggregation features such as datastore clusters to automate their virtual disk deployment. By combining many datastores into one object, they let vSphere decide where to put each virtual disk, picking the best location for the given requirements.

Storage interactions between the Kubernetes cluster and vSphere are driven through the Container Storage Interface (CSI) driver module. VMware releases its own CSI driver, vSphere CSI. While this integration lets you get started very quickly, it also comes with limitations: even the VMware-delivered driver does not support datastore clusters. Instead, VMware relies on Storage Policy Based Management (SPBM) to let administrators declare datastore clusters for workloads and to provide the placement logic that ensures automatic storage placement. Until now, SPBM was not supported in GDCV. That made storage on these clusters harder and less intuitive for VM admins, who are used to the flexibility of SPBM for VMs.

With version 1.16, GDCV (vSphere) now supports SPBM, giving customers a consistent way to declare datastore clusters and deploy workloads. GDCV’s implementation of SPBM lets you maintain and manage vSphere storage without touching GDCV or Kubernetes. As a result, GDCV lifecycle management builds on a modern storage integration on top of vSphere, allowing for higher resilience and much shorter planned maintenance windows.

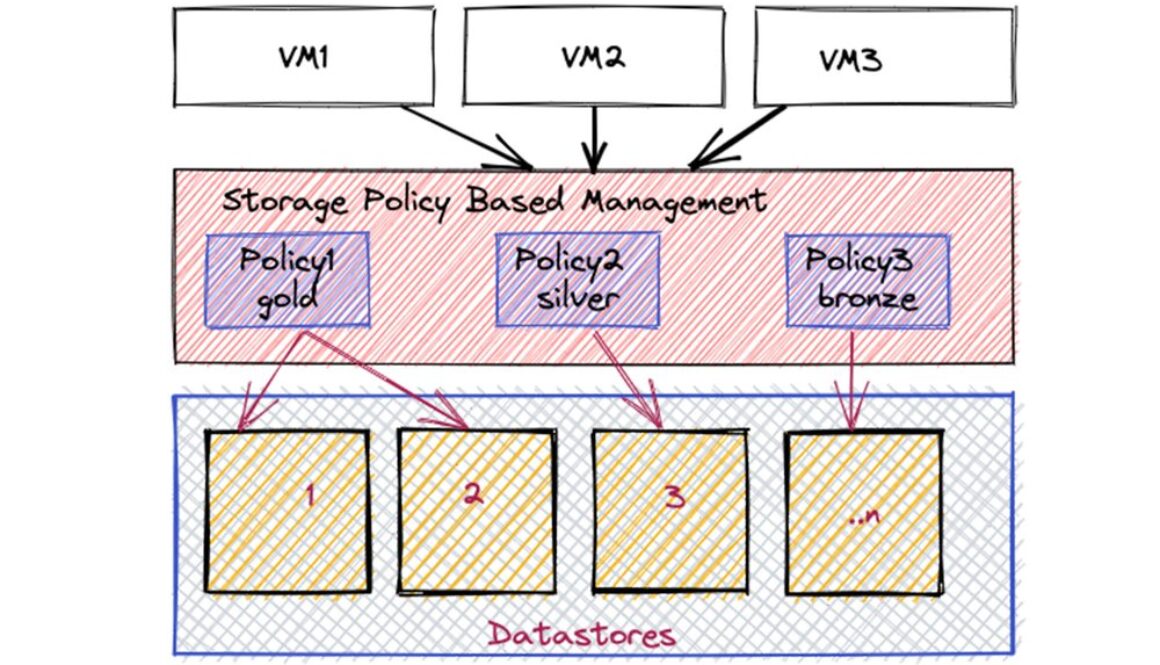

This new model of storage assignment comes from integrating GDCV with VMware’s SPBM, which in turn relies on more advanced use of the VMware CSI driver. SPBM is a storage framework that provides a single unified control plane across a broad range of data services and storage solutions. It helps align storage with the application demands of your virtual machines. Simply put, SPBM lets you create storage policies that map VMs or applications to the storage they require.

Thanks to the integration with VMware’s SPBM, creating clusters with GDCV is now, from a storage perspective, just a matter of referencing a particular storage policy in the cluster’s install configuration.

Storage policies can be used to combine multiple individual datastores and address them as if they were one, similar to datastore clusters. However, when queried, a policy currently returns a list of all compliant datastores without favoring one for the best placement. GDCV takes care of that for you: it analyzes all compliant datastores in a given policy and picks the optimal datastore for the disk placement. With SPBM in GDCV, this happens dynamically for every storage placement.
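The post does not spell out how GDCV ranks compliant datastores. The sketch below only illustrates the shape of the problem: the policy hands back a list, and something must pick one entry. It assumes a hypothetical heuristic that keeps datastores with enough free space for the disk and then prefers the one with the most free space. The `Datastore` type and `pick_datastore` function are illustrative names, not a GDCV or vSphere API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Datastore:
    name: str
    free_gib: float  # unallocated capacity reported for this datastore


def pick_datastore(compliant: list[Datastore], disk_gib: float) -> Datastore:
    """Choose a target for one virtual disk from a policy's compliant datastores.

    Hypothetical heuristic (not GDCV's documented algorithm): drop datastores
    that cannot fit the disk, then prefer the one with the most free space.
    """
    candidates = [ds for ds in compliant if ds.free_gib >= disk_gib]
    if not candidates:
        raise RuntimeError(f"no compliant datastore can fit a {disk_gib} GiB disk")
    return max(candidates, key=lambda ds: ds.free_gib)


# The policy query returns every compliant datastore; the caller must choose one.
compliant = [
    Datastore("ds-ssd-01", 120),
    Datastore("ds-ssd-02", 800),
    Datastore("ds-ssd-03", 40),
]
print(pick_datastore(compliant, 100).name)  # ds-ssd-02
```

Because the choice is made per placement, a datastore that fills up simply stops being selected. No configuration change is needed.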

The beauty of it is that, from an automation point of view, whenever something changes from a storage capacity or maintenance perspective, all changes are made on the storage side. Operations like adding storage capacity can now be done without changing GDCV’s configuration files.

This simplifies storage management within GDCV quite a bit.

With SPBM, a VMware administrator can build different storage policies based on the capabilities of the underlying storage array. They can map one or more datastores to one or more policies, and then assign each VM the policy that best describes its storage requirements. In practice, say we want Gold-level storage (e.g., SSD only for the production environment). We first create a policy defining all Gold-level storage and add all matching datastores to that policy. We then assign that policy to the VMs. The same goes for Bronze-level storage: create a Bronze storage policy with Bronze-level datastores (e.g., HDD only for the dev environment), and apply it to the relevant VMs.

To use the storage policy feature in GDCV, the VMware admin needs to set up at least one storage policy in VMware that is compatible with one or more datastores your GDCV cluster can access.

GDCV supports datastore tag-based placement. VMware admins can create specific categories and tags based on pretty much anything related to the available storage — think performance levels, cluster name, and disk types as just a few examples.
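Tag-based placement boils down to a membership test. The toy model below shows how a policy resolves to a set of datastores, and why adding capacity is just a tagging operation. It deliberately simplifies real SPBM rules, which can combine multiple categories and capabilities: here a policy requires a single tag, and every name is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Datastore:
    name: str
    tags: set[str] = field(default_factory=set)  # vSphere tags assigned by the admin


def compliant_datastores(required_tag: str, inventory: list[Datastore]) -> list[str]:
    """Simplified tag-based policy: a datastore complies if it carries the tag."""
    return [ds.name for ds in inventory if required_tag in ds.tags]


inventory = [
    Datastore("ssd-a", {"Gold"}),
    Datastore("ssd-b", {"Gold"}),
    Datastore("hdd-a", {"Bronze"}),
]
print(compliant_datastores("Gold", inventory))  # ['ssd-a', 'ssd-b']

# Adding capacity is a tagging operation on the vSphere side; nothing in the
# cluster configuration changes, yet the policy now resolves to one more datastore.
inventory.append(Datastore("ssd-c", {"Gold"}))
print(compliant_datastores("Gold", inventory))  # ['ssd-a', 'ssd-b', 'ssd-c']
```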

Let’s look at two key storage requirements in a cluster, VM disk placement and persistent volume claims (PVCs) for stateful applications, and how to use SPBM policies to manage them.

VM disk placement

Let’s go back to our Gold and Bronze examples above. We are going to define storage requirements for a GDCV User cluster. Specifically, we want to refer to two different storage policies within the User cluster configuration file:

- A cluster-wide storage policy: this will be the default policy for the cluster and, in our case, will be our “Bronze” policy.
- A storage policy for specific node pools within the user cluster: our “Gold” policy.

First, the “Gold” and “Bronze” tags are assigned to the different datastores available to the GDCV node VMs. In this case, “Gold” refers to SSD disks only; “Bronze” refers to HDD disks only.

To create and assign tags, follow the documentation. Note that tags can also be applied to datastore clusters, or to datastores within a datastore cluster; read more here.

Once the tags are created, the storage policy is defined as per the official documentation.

After creating a storage policy, the datastores compatible with the policy can be reviewed — see example below:

Now, let’s apply some storage policies to our user cluster configuration files.

Define cluster-wide policy (“Bronze” policy)

In this user cluster config file snippet, the storage policy name “Bronze” is set at the cluster level. This means all provisioned VMs in all node pools will use this storage policy to find compatible datastores and dynamically select one that has sufficient capacity.

Define node pool policy (“Gold”)

In this user cluster config file snippet, a storage policy (“Gold”) is set at the node-pool level. This policy will be used to provision VMs in that node pool, while all other storage provisioning will use the storage policy specified in the cluster section.
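The original config snippets are not reproduced here, so the following is only a sketch of the precedence rule the two paragraphs describe: a node-pool policy overrides the cluster-wide default. The config is modeled as a Python dict. The field names `vCenter.storagePolicyName` and `nodePools[].vsphere.storagePolicyName` are assumptions based on the GDCV user cluster config file and should be checked against the official reference. The pool names are hypothetical.

```python
# Hypothetical user cluster config, expressed as a Python dict. The field names
# (vCenter.storagePolicyName, nodePools[].vsphere.storagePolicyName) are assumptions
# modeled on the GDCV config file; check the official reference before relying on them.
user_cluster = {
    "vCenter": {"storagePolicyName": "Bronze"},  # cluster-wide default
    "nodePools": [
        {"name": "general-pool"},  # no override: inherits Bronze
        {"name": "prod-ssd-pool", "vsphere": {"storagePolicyName": "Gold"}},
    ],
}


def effective_storage_policy(cluster_cfg: dict, pool_name: str) -> str:
    """Return the policy used to place a node pool's VMs: the node-pool value if
    set, otherwise the cluster-level value."""
    cluster_policy = cluster_cfg["vCenter"]["storagePolicyName"]
    for pool in cluster_cfg.get("nodePools", []):
        if pool["name"] == pool_name:
            return pool.get("vsphere", {}).get("storagePolicyName", cluster_policy)
    raise KeyError(f"unknown node pool: {pool_name}")


for name in ("general-pool", "prod-ssd-pool"):
    print(name, "->", effective_storage_policy(user_cluster, name))
# general-pool -> Bronze
# prod-ssd-pool -> Gold
```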

Using storage policies like this abstracts the storage details away from the cluster admin. Also, if there is a storage problem, such as running low on capacity, more datastores can be tagged (typically by the VMware admin) so that they become available within the storage policy. The GDCV cluster admin does not need to do anything: the cluster seamlessly incorporates the extra capacity made available through the policy. This lessens the administrative load on the cluster admin and automates cluster storage management.

Persistent Volume Claims

A user cluster can have one or more StorageClass objects, one of which is designated as the default StorageClass. When you create the cluster by following the documented install guide, a default StorageClass is created for you.

You can create additional storage classes and use them instead of the default. The vSphere CSI driver lets you create storage classes that directly reference any existing storage policy within the vCenter Server on which the GDCV user cluster runs.

This means that volumes created by PVCs in the cluster will be distributed across the datastores that are compatible with the storage policy referenced by the storage class. These storage classes can map to VMFS, NFS, and vSAN storage policies within vSphere.

The file below configures a StorageClass that references a policy named “cluster-sp-fast”:
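As a hedged illustration of such a StorageClass, the sketch below builds the manifest as a Python dict and prints it as JSON, which `kubectl apply -f` accepts. The provisioner `csi.vsphere.vmware.com` and the `storagepolicyname` parameter belong to the vSphere CSI driver. The StorageClass name `sp-fast` is a hypothetical placeholder.

```python
import json


def storage_class_manifest(name: str, policy_name: str) -> dict:
    """Build a StorageClass that asks the vSphere CSI driver to place volumes
    on datastores compliant with the given vSphere storage policy."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "csi.vsphere.vmware.com",
        "parameters": {"storagepolicyname": policy_name},
    }


# "sp-fast" is a hypothetical StorageClass name; the policy name comes from the text.
sc = storage_class_manifest("sp-fast", "cluster-sp-fast")
print(json.dumps(sc, indent=2))  # save as JSON and apply with `kubectl apply -f`
```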

This storage class can then be referenced in a persistent volume claim. See below:
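A matching claim could look like the sketch below. It assumes a StorageClass named `sp-fast` (a hypothetical name) that points at the “cluster-sp-fast” policy. The claim name `app-data`, the `ReadWriteOnce` access mode, and the `10Gi` size are illustrative choices, not values from the post.

```python
import json


def pvc_manifest(name: str, storage_class: str, size: str) -> dict:
    """Build a PersistentVolumeClaim bound to a policy-backed StorageClass."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,  # hypothetical class backed by cluster-sp-fast
            "resources": {"requests": {"storage": size}},
        },
    }


pvc = pvc_manifest("app-data", "sp-fast", "10Gi")
print(json.dumps(pvc, indent=2))
```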

Volumes with this associated claim will be automatically placed on the optimal datastore included in the “cluster-sp-fast” vSphere storage policy.

In this post, we discussed the integration of GDCV with VMware’s SPBM framework. This integration is great news for GDCV admins: it automates storage management by moving away from hard links to specific datastores and toward dynamic storage assignment, managed from the VMware side. This means less overhead, less downtime for GDCV clusters, and more flexibility in storage management.

Learn more about the Google Distributed Cloud, a product family that allows you to unleash your data with the latest in AI from edge, private data center, air-gapped, and hybrid cloud deployments. Available for enterprise and public sector, you can now leverage Google’s best-in-class AI, security, and open-source with the independence and control that you need, everywhere your customers are.

- Learn about visual inspection application at the edge with Google Distributed Cloud Edge
- VMware’s SPBM documentation
- GDCV (vSphere) SPBM integration
- GDCV (vSphere) 1.16 documentation – Storage Policy Based Management

Live-streaming applications require uninterrupted streaming with minimal delays to deliver a quality experience to end users’ devices. Delays in rendering live streams can lead to poor video quality and video buffering, negatively impacting viewership. To ensure high live-streaming quality, you need a reliable content delivery network (CDN) infrastructure.

Media CDN is a content delivery network (CDN) platform designed for delivering streaming media with low latency across the globe. Notably, Media CDN uses YouTube’s infrastructure to bring video streams (both on-demand video and live) and large file downloads closer to users for fast and reliable delivery. As a CDN, it delivers content to users based on their location from a geographically distributed network of servers, helping to improve performance and reduce latency for users who are located far from the origin server.

In this blog, we look at how live-streaming providers can utilize Media CDN infrastructure to better serve video content, whether the live-streaming application is running within Google Cloud, on-premises, or in a different cloud provider altogether. Media CDN, when integrated with Google Cloud External Application Load Balancer as origin, can be utilized to render streams irrespective of the location of the live-streaming infrastructure. Further, it’s possible to configure Media CDN so that live streams can withstand most kinds of interruptions or outages, to ensure a quality viewing experience. Read on to learn more.

Live streaming is the process of streaming video or audio content in real time to broadcasters and playback devices. Live-streaming applications typically involve the following components:

- Encoder: Compresses the video into multiple resolutions/bitrates and sends the stream to a packager.
- Packager and origination service: Packages the transcoded content into different media formats and stores the video segments so they can be served via HTTP endpoints.
- CDN: Streams the video segments from the origination service to playback devices across the globe with minimal latency.

At a high level, Media CDN contains two important components:

Edge cache service: Provides a public endpoint and route configurations that direct traffic to a specific origin.

Edge cache origin: Configures a Cloud Storage bucket or a Google External Application Load Balancer as an origin.

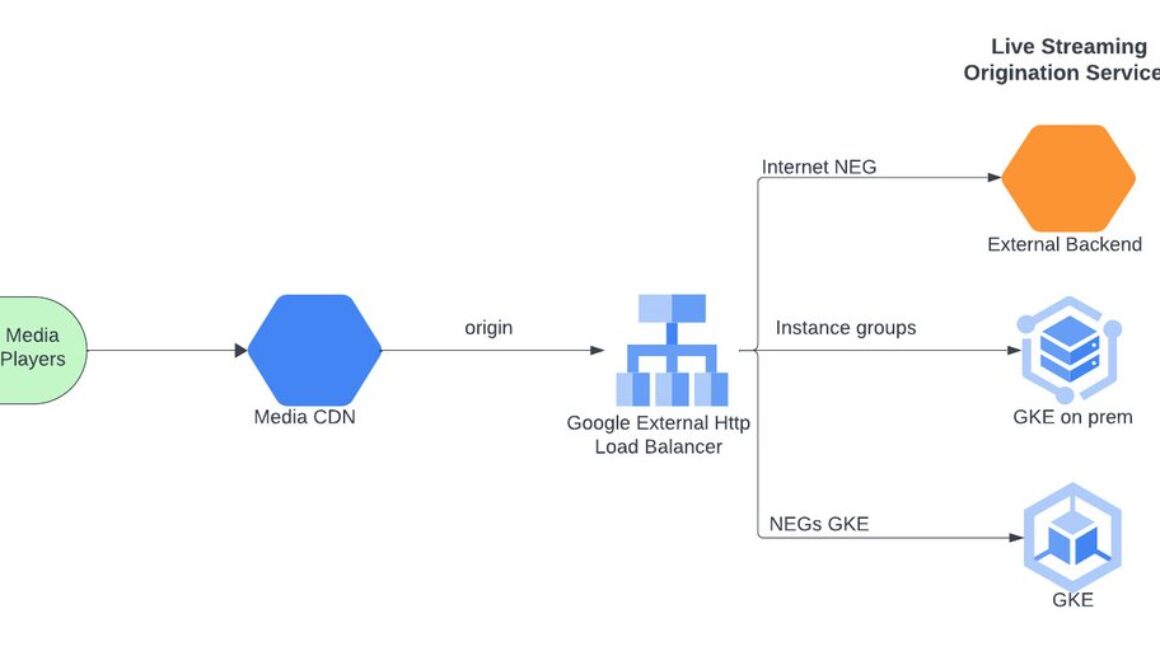

The above figure depicts an architecture where Media CDN can serve a live stream origination service running in Google Cloud, on-prem or on an external cloud infrastructure, by integrating with Application Load Balancer. Application Load Balancer enables connectivity to multiple backend services and provides advanced path- and host-based routing to connect to these backend services. This allows live stream providers to use Media CDN to cache their streams closer to the end users viewing the live channels.

The different types of backend services provided by Load Balancers to facilitate connectivity across infrastructure are:

- Internet/Hybrid NEG Backends: Connect to a live-streaming origination service running in a different cloud provider or on-premises.
- Managed Instance Groups: Connect to a live-streaming origination service running in Compute Engine across multiple regions.
- Zonal Network Endpoint Groups: Connect to a live-streaming origination service running in GKE.

Since any disruption to live stream traffic can affect viewership, it is essential to plan for disaster recovery to protect against zonal or regional failures. Media CDN provides primary/secondary origin failover to facilitate disaster recovery.

The above figure depicts Media CDN with an Application Load Balancer origin providing failover across regions. This is achieved by creating two EdgeCacheOrigin resources that point to the same Application Load Balancer but use different host header values. Each EdgeCacheOrigin sets the host header to its own specific value, and the Application Load Balancer performs host-based routing on that value to route the live stream requests.

When a playback device requests the stream from Media CDN, Media CDN calls the Application Load Balancer with the host header set to the primary origin value. The load balancer looks at the host header and forwards the traffic to the primary live stream origination service. When the primary origination service fails, the failover origin rewrites the host header to the failover value and sends the request to the Application Load Balancer. The load balancer matches the host and routes the request to a secondary live stream origination service in a different zone or region.

The below snippet depicts the URL host-rewrite configuration in the EdgeCacheOrigin:
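The snippet itself is not reproduced here. As a stand-in, the following Python sketch models the failover flow just described. Two origin definitions point at the same load balancer address but rewrite the host header differently, and a small function simulates trying the primary, then following its failover origin. The dict keys (`originAddress`, `failoverOrigin`, `originOverrideAction.urlRewrite.hostRewrite`) are modeled on the Media CDN EdgeCacheOrigin resource and should be verified against its API reference. All hostnames and backend names are hypothetical.

```python
# Field names are modeled on Media CDN's EdgeCacheOrigin resource (verify against
# the API reference); every hostname and backend name below is hypothetical.
PRIMARY = {
    "name": "live-primary",
    "originAddress": "lb.example.com",  # the shared Application Load Balancer
    "failoverOrigin": "live-secondary",
    "originOverrideAction": {"urlRewrite": {"hostRewrite": "primary.live.example.com"}},
}
SECONDARY = {
    "name": "live-secondary",
    "originAddress": "lb.example.com",  # same load balancer, different host header
    "originOverrideAction": {"urlRewrite": {"hostRewrite": "secondary.live.example.com"}},
}
ORIGINS = {o["name"]: o for o in (PRIMARY, SECONDARY)}

# Host rules on the load balancer: host header -> origination backend.
ALB_HOST_RULES = {
    "primary.live.example.com": "origination-us-east1",
    "secondary.live.example.com": "origination-us-west1",
}


def fetch(origin_name: str, healthy_backends: set[str]) -> str:
    """Simulate Media CDN trying an origin, then following its failoverOrigin chain."""
    name = origin_name
    while name is not None:
        origin = ORIGINS[name]
        host = origin["originOverrideAction"]["urlRewrite"]["hostRewrite"]
        backend = ALB_HOST_RULES[host]  # host-based routing on the load balancer
        if backend in healthy_backends:
            return backend
        name = origin.get("failoverOrigin")  # None ends the chain
    raise RuntimeError("all origins failed")


print(fetch("live-primary", {"origination-us-east1", "origination-us-west1"}))  # origination-us-east1
print(fetch("live-primary", {"origination-us-west1"}))  # origination-us-west1
```

Keeping a single load balancer and varying only the host header means the CDN side needs no knowledge of where each origination service runs. The load balancer's host rules carry that mapping.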

Media CDN is an important part of the live streaming ecosystem, helping to improve performance, reduce latency, and ensure quality for live streams. In this post, we looked at how live stream applications can utilize Google Media CDN across multiple environments and infrastructure. To learn more about Media CDN, please see:

- Deploy Streaming Service with Media CDN
- Media CDN Overview
- Media CDN with Application Load Balancer

Headline-grabbing security incidents in 2023 have shown that cybersecurity continues to be challenging for organizations around the world, even those with skilled practitioners and state-of-the-art tools. To help prepare you for 2024, we are offering the final installment of this year’s Google Cloud Security Talks on Dec. 19.

Join us as our experts show you how you can meaningfully strengthen your security posture and increase your resilience against new and emerging threats with modern approaches, security best practices, generative AI and AI security frameworks, and actionable threat intelligence by your side.

This series of digital sessions — created by security practitioners for security practitioners — will kick off with a deep dive by our Mandiant experts into the two main types of threats they saw emerge this year, how they impacted organizations, and why we think they will have lasting impact into 2024.

Here’s a peek at other sessions where you’ll walk away with a better understanding of threat actors, potential attack vectors and get fresh ideas for detecting, investigating, and responding to threats:

- How Google mitigated the largest DDoS attack in history: Learn how Google was able to defend against the largest DDoS attack to date and mitigate the attack using our global load-balancing and DDoS mitigation infrastructure. Plus, discover how you can protect against DDoS attacks going into the new year with security best practices.
- 2024 Cybersecurity Forecast: Get an inside look at the evolving cyber threat landscape, drawn from Mandiant incident response investigations and threat intelligence analysis of high-impact attacks and remediations around the globe.
- SAIF from Day One: Securing the world’s AI with SAIF: AI is advancing rapidly, and it is important that effective risk management strategies evolve along with it. To help achieve this evolution, Google introduced our Secure AI Framework (SAIF). Join us to learn why SAIF offers a practical approach to addressing the concerns that are top of mind for security and risk professionals like you, and how to implement it.
- Unlock productivity with Duet AI in Security Operations: AI is the hot topic of the year, but is there a practical application for security teams? Or is it just smoke and mirrors? Take a step back and look at the pervasive and fundamental security challenges teams face: the exponential growth in threats, the toil it takes for security teams to achieve desired outcomes, and the chronic shortage of security talent. Then ask whether AI can effectively move the needle for security teams, and the answer is a resounding yes. Join us to see how Duet AI in Security Operations can help teams simplify search, transform investigation, and accelerate response.
- Duet AI in Google Workspace: Keeping your data private in the era of gen AI. Join this session to get answers to your questions about Duet AI in Google Workspace, learn more about the built-in privacy and security controls in Duet AI, and understand how your organization can achieve digital sovereignty with Sovereign Controls.
- Meet the ghost of SecOps future. Today’s security operations center (SOC) has an increasingly difficult job protecting growing and expanding organizations. The landscape is changing and the SOC needs to change with the times or risk falling behind the evolution of business, IT, and threats. Join us as we show you a vision of what the SOC will look like in the near future and how to choose the best course of action today.
- Securing your gen AI innovations. This session will cover the essential Google Cloud security tools crucial for safeguarding your gen AI adoption approaches. The presenters will provide a comprehensive overview of the security challenges inherent in gen AI projects from machine learning models to data processing pipelines and offer practical insights into how to mitigate the most relevant risks.
- Real-world security for gen AI with Security Command Center. How can you protect your gen AI workloads with strong preventative guardrails, and get near real-time alerting of drift and workload violations? Get a sneak peek of new posture management capabilities coming to Security Command Center and learn how to protect AI applications and training data using opinionated, Google Cloud-recommended controls designed specifically for gen AI workloads.

This year’s final Google Cloud Security Talks is designed to give you the assurance you need heading into 2024 that detecting, investigating, and responding to threats at scale is achievable with modern security operations approaches and actionable threat intelligence. Make sure you join us, live or on-demand.

The UAE Consensus agreement that was signed earlier this week at the conclusion of COP28 was not what many participants had hoped for, but it is more than many of us expected. While it failed to definitively call for the phase-out of fossil fuels, it did land agreement on a range of critical issues — from scaling renewables, funding for loss and damage, and more. Still, the risk of catastrophic climate change is high, and the pressure to effect meaningful change continues to fall on individuals and the private sector.

Thankfully, as we wrote over the past two weeks, there’s a lot that businesses and individuals can do. Over the course of 14 blog posts, we detailed the numerous ways that Google, Google Cloud and our partners are curtailing our own carbon footprints, and outlined many ways our customers are doing the same, using a variety of techniques, tools and technologies. On the technology front, the rise of generative AI in particular is enabling new ways of surfacing climate-relevant information and driving climate-friendly actions.

To recap, here’s a summary of what we wrote about over the course of the conference:

- On Day 1, Adaire Fox-Martin and I, Justin Keeble, laid out our vision for improving access to climate data (e.g., participating in the Net Zero Data Public Utility), building a climate tech ecosystem, and unlocking the power of geospatial analysis.
- On Day 2, the first-ever COP28 Health Day, Googlers Phil Davis and Daniel Elkabetz highlighted how environmental data can raise awareness, help citizens make better decisions, and spur adoption of solutions for better climate health.
- On Day 3, as the COP28 community pondered finance and trade, Kevin Ichhpurani and Denise Pearl discussed how partners can adopt programs in the Google Cloud Ready – Sustainability catalog to help their customers identify and deploy better solutions.
- Likewise, EMEA Googlers Tara Brady and Jackie Pynadath highlighted how Google Cloud financial services customers are using generative AI to fund climate transition initiatives.
- For Day 5, Energy and Industry Day, Google Cloud Consulting leader Lee Moore and Poki Chui showed how businesses are decarbonizing their supply chains and helping consumers make enlightened choices.
- On Day 6, Urbanization and Transport Day, Umesh Vemuri and Jennifer Werthwein showed off examples of Google Cloud automotive customers using AI to, um, drive innovation in automobile design, supply chain, power and mobility.
- Denise Pearl then took the pen with our partner mCloud to discuss how we, in addition to shifting away from carbon-based energy, are actually using less energy to begin with.
- We then heard from the Google Cloud Office of the CTO, where Will Grannis and Jen Bennett relayed the lessons they’ve learned from talking to CTOs about sustainability.
- In honor of Day 9, Nature Day, Chris Lindsay and Charlotte Hutchinson talked about how geospatial analytics and solutions built on top of Google Earth Engine are helping partners preserve the natural world.
- Then, Yael Maguire and Eugene Yeh of our Geo Sustainability team looked at the new European Union Deforestation Regulation (EUDR), and how to comply with it using geospatial data and tools.
- In honor of Day 10, Food, Agriculture and Water Day, Karan Bajwa and Leah Kaplan provided a view from Google Cloud Asia of how Google Earth Engine and AI can support sustainable agriculture.
- Carrie Tharp and Prue Mackenzie from the Industries team examined how generative AI can encourage healthier food systems.
- Product management leads Gabe Monroy and Cynthia Wu described how Google Cloud tools like Carbon Footprint are helping companies decarbonize their cloud footprints.
- Finally, Michael Clark and Diane Chaleff shared techniques that software builders can use to bolster sustainable software design, and encourage end users to make better choices from their apps.

This is just a small sample of the many things that we are doing to address the climate crisis, both in our own operations, and through technologies that our customers can use to drive their own efforts. We hope that you take the time to read about what Google Cloud customers and partners are doing to accelerate action on climate, and that you will take inspiration in them for your own business transformation. You can also learn more about Google’s sustainability efforts, and keep up-to-date on Google Cloud’s sustainability news. See you next year for COP29.

In today’s competitive business landscape, cost is a crucial factor for success. Spanner is Google’s fully managed database service for both relational and non-relational workloads, offering strong consistency at a global level, high performance at virtually unlimited scale, and high availability with an up to five-nines SLA. Spanner is a versatile database that caters to a vast array of workloads, powering some of the world’s most popular products. And with Spanner’s recent price-performance improvements, which increase throughput and storage per node, it’s even more affordable.

In this blog post, we demonstrate how we benchmark a subset of these workloads, key-value workloads, using the industry-standard Yahoo! Cloud Serving Benchmark (YCSB). YCSB is an open-source benchmark suite for evaluating and comparing the performance of key-value workloads across different databases. While Spanner is often associated with relational workloads, key-value workloads are also common on Spanner among industry-leading enterprises.

The results show that our recent price-performance improvements provide at least a 50% increase in throughput per node, with no change in overall system costs. When running at the recommended CPU utilization in regional configurations, this throughput is delivered with single-digit millisecond latency.

With that out of the way, let’s dig deeper into the YCSB benchmarking setup that we used to derive the above results.

We used the YCSB benchmark on Spanner to run these benchmarks.

We want to mimic typical production workloads with our benchmark. To that end, we picked the following characteristics:

- Read staleness: The read workload comparison is based on consistent (strong) reads, not stale (snapshot) reads.
- Read/write ratio: The evaluation was done for a 100% read-only workload and an 80/20 read/write workload.
- Data distribution: We use the Zipfian distribution for our benchmarks, since it more closely represents real-world scenarios.
- Dataset size: A workload dataset size of 1TB was used for this benchmark. This ensures that most requests will not be served from memory.
- Instance configuration: We used a three-node Spanner instance in a regional instance configuration.
- CPU utilization: We present two sets of benchmarks here. The first set runs at the recommended 65% CPU utilization for latency-sensitive and failover-safe workloads; the second set runs at near 100% CPU utilization to maximize Spanner’s throughput. The performance numbers at 100% CPU utilization are also published in our public documentation.
- Client VMs: We used Google Compute Engine to host the client VMs, which are co-located in the same region as the Spanner instance.
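To make “Zipfian” concrete, the sketch below draws key ranks so that the rank-r key is requested with probability proportional to 1/(r+1)^θ. It uses θ = 0.99, the constant YCSB's Zipfian generator uses by default. This is a plain weighted-choice sampler for illustration, not YCSB's own generator. The key and draw counts are arbitrary.

```python
import random
from collections import Counter


def zipfian_keys(n_keys: int, n_draws: int, theta: float = 0.99, seed: int = 0) -> list[int]:
    """Draw key ranks in [0, n_keys) with P(rank r) proportional to 1 / (r + 1) ** theta.

    A plain weighted-choice sampler for illustration; YCSB uses its own generator.
    """
    weights = [1.0 / (rank + 1) ** theta for rank in range(n_keys)]
    rng = random.Random(seed)  # seeded for reproducibility
    return rng.choices(range(n_keys), weights=weights, k=n_draws)


draws = zipfian_keys(1_000, 100_000)
counts = Counter(draws)
top_10pct = sum(n for rank, n in counts.items() if rank < 100)
print("hottest key rank:", counts.most_common(1)[0][0])
print(f"share of requests hitting the top 10% of keys: {top_10pct / len(draws):.0%}")
```

The skew toward a small set of hot keys is what makes this distribution closer to real traffic than a uniform one.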

To simplify cost estimates, we introduce a normalized metric called “Dollars per kQPS-Hr.” Simply put, this is the hourly cost for sustaining 1k QPS for a given workload.

Spanner’s cost is determined by the amount of compute provisioned by the customer. For example, a single-node regional instance in us-east4 costs $0.99 per hour. For the results that we present here, we consider the cost of a Spanner node without any discounts for long-term committed usage, e.g., committed use discounts. Those discounts would only make price-performance better, due to reduced cost per node. For more details on Spanner pricing, please refer to the official pricing page.

For simplicity, we also exclude storage costs from our pricing calculations, since we are measuring the cost of each additional “thousand” QPS.
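The metric is simple arithmetic: the instance's hourly compute cost divided by its sustained throughput in thousands of QPS. This equals the per-node price divided by per-node kQPS. The sketch below uses the $0.99 per-node hourly us-east4 price quoted above as its default and, like the text, ignores storage and committed use discounts.

```python
def dollars_per_kqps_hr(total_qps: float, nodes: int, node_hourly_usd: float = 0.99) -> float:
    """Hourly compute cost of the instance divided by sustained throughput in kQPS.

    Storage and committed use discounts are excluded, as in the text; $0.99 is the
    per-node hourly price for a regional instance in us-east4 quoted above.
    """
    hourly_cost = nodes * node_hourly_usd
    return hourly_cost / (total_qps / 1_000)


# 100% reads at the recommended 65% CPU: 37,500 QPS on three nodes.
print(f"${dollars_per_kqps_hr(37_500, 3):.3f}")  # $0.079
# 80/20 read/write at the recommended 65% CPU: 15,600 QPS on three nodes.
print(f"${dollars_per_kqps_hr(15_600, 3):.3f}")  # $0.190
```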

The results below show the reduction in costs for different workloads due to the ~50% improvement in throughput per node.

Benchmark 1: 100% Reads (recommended 65% CPU utilization)

YCSB Configuration: We used the Workloadc.yaml configuration in the recommended_utilization_benchmarks folder to run this benchmark.

Deriving the metric for Spanner: A three-node Spanner instance with a preloaded 1TB of data produced a throughput of 37,500 QPS at the recommended 65% CPU utilization. This equates to 12,500 QPS per Spanner node.

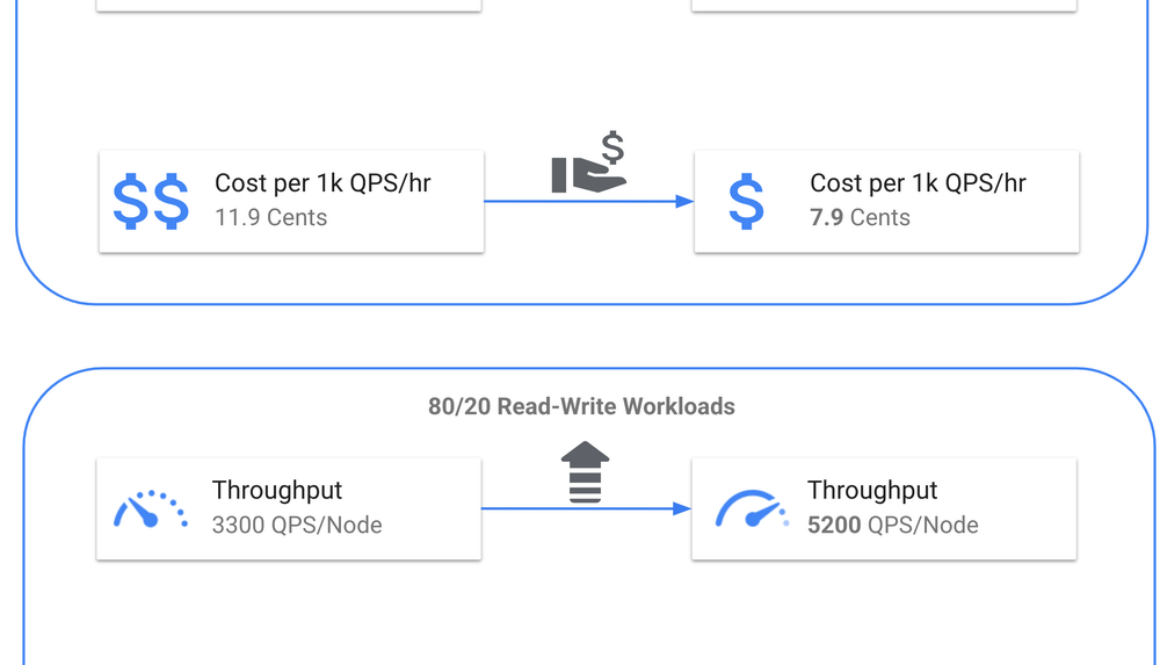

It now costs just 7.9 cents to sustain 1k read QPS for one hour in Spanner.

Benchmark 2: 80% Read-20% Write (recommended 65% CPU utilization)

YCSB Configuration: We used the ReadWrite_80_20.yaml configuration in the recommended_utilization_benchmarks folder to run this benchmark.

Deriving the metric for Spanner: A three-node Spanner instance with a preloaded 1TB of data produced a throughput of 15,600 QPS at the recommended 65% CPU utilization. This equates to 5,200 QPS per Spanner node.

It now costs just 19 cents to sustain 1k 80/20 Read-Write QPS for one hour in Spanner.

Benchmark 1: 100% Reads (near 100% CPU utilization)

YCSB Configuration: We used the Workloadc.yaml configuration in the throughput_benchmarks folder to run this benchmark.

Deriving the metric for Spanner: A three-node Spanner instance with a preloaded 1TB of data produced a throughput of 67,500 QPS at near 100% CPU utilization. This equates to 22,500 QPS per Spanner node.

It now costs just 4.4 cents to sustain 1k Read QPS for one hour in Spanner at ~100% CPU utilization.

Benchmark 2: 80% Read-20% Write (near 100% CPU utilization)

YCSB Configuration: We used the ReadWrite_80_20.yaml configuration in the throughput_benchmarks folder to run this benchmark.

Deriving the metric for Spanner: A three-node Spanner instance with a preloaded 1TB of data produced a throughput of 35,400 QPS at near 100% CPU utilization. This equates to 11,800 QPS per Spanner node.

It now costs just 8.3 cents to sustain 1k 80/20 Read-Write QPS for one hour in Spanner at ~100% CPU utilization.

Please note that benchmark results may vary slightly depending on regional configuration. For instance, in the recommended utilization benchmarks, CPU utilization may deviate slightly from the recommended 65% target due to the test’s fixed QPS. However, this does not impact latency.

The 100% CPU utilization benchmarks represent the approximate theoretical limits of Spanner. In practice, throughput can vary based on a number of factors, such as system tasks.

We recognize the importance of cost-efficiency and remain committed to performance improvements. We want customers to have access to a database that delivers strong performance, near-limitless scalability and high availability. Spanner’s recent performance improvements let customers realize cost savings through improved throughput. These improvements are available for all Spanner customers without needing to reprovision, incur downtime, or perform any user action.

Learn more about Spanner and how customers are using it today. Or try it yourself for free for 90 days, or for as little as $65 USD/month for a production-ready instance that grows with your business.

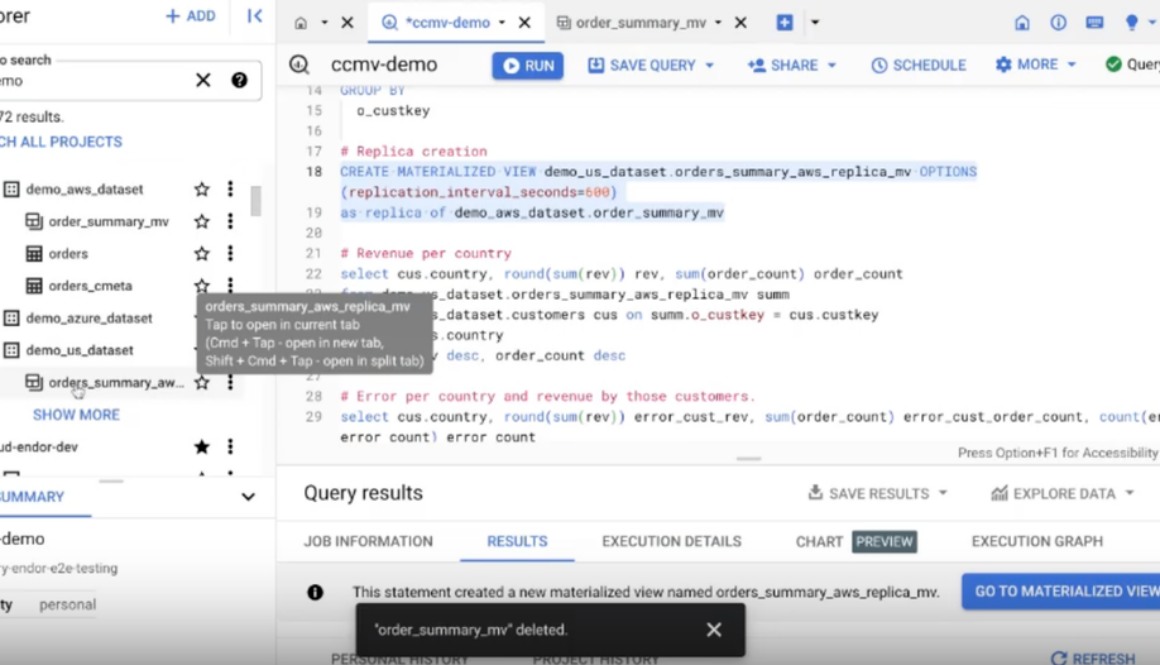

As more and more organizations embrace multi-cloud data architectures, a top request we constantly receive from customers is how to make cross-cloud analytics super simple and cost-effective in BigQuery. To help customers on their cross-cloud analytics journey, today we are thrilled to announce the public preview of BigQuery Omni cross-cloud materialized views (aka cross-cloud MVs). Cross-cloud MVs allow customers to very easily create a summary materialized view on GCP from base data assets available on another cloud. Cross-cloud MVs are automatically and incrementally maintained as base tables change, meaning only a minimal data transfer is necessary to keep the materialized view on GCP in sync. The result is an industry-first, cost-effective and scalable capability that empowers customers to perform frictionless, efficient, and economical cross-cloud analytics.
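The key property here is incremental maintenance: each refresh processes only the base-table rows that changed since the last one, so only that delta has to cross clouds. The toy Python class below illustrates the idea for a `SUM(amount) GROUP BY region` view over an append-only table. It is a conceptual sketch, not how BigQuery implements cross-cloud MVs. It does not handle updates or deletes, and the table contents are made up.

```python
from collections import defaultdict


class IncrementalSumView:
    """Toy view maintaining SUM(amount) GROUP BY region over an append-only table.

    Each refresh folds in only rows added since the previous refresh, which is the
    property that keeps cross-cloud transfer small. Updates and deletes are not
    handled in this sketch.
    """

    def __init__(self) -> None:
        self.totals = defaultdict(float)
        self.watermark = 0  # number of base-table rows already folded in
        self.rows_transferred = 0

    def refresh(self, base_table: list[tuple[str, float]]) -> None:
        delta = base_table[self.watermark:]  # only the new rows cross the cloud boundary
        for region, amount in delta:
            self.totals[region] += amount
        self.watermark = len(base_table)
        self.rows_transferred += len(delta)


base_table = [("us", 10.0), ("eu", 5.0), ("us", 2.5)]  # lives on the other cloud
mv = IncrementalSumView()
mv.refresh(base_table)            # initial build: 3 rows
base_table.append(("eu", 1.0))
mv.refresh(base_table)            # incremental refresh: 1 row
print(dict(mv.totals), mv.rows_transferred)  # {'us': 12.5, 'eu': 6.0} 4
```

Recomputing from scratch on each refresh would have moved 3 + 4 = 7 rows instead of 4. The gap widens as the base table grows.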

The demand for cross-cloud MVs has been growing, driven by customers wanting to do more with their data across cloud platforms while leaving large data assets intact in separate clouds. Today, analytics on data assets across clouds is cumbersome, as it usually involves copying or replicating large datasets across cloud providers. This process is not only burdensome to manage, but also incurs substantial data transfer costs. With cross-cloud MVs, customers are looking to optimize these processes, seeking both efficiency and cost-effectiveness in their data operations.

Some of the key customer use cases where cross-cloud MVs can greatly simplify workflows while reducing costs include:

Predictive analytics: Organizations are increasingly eager to harness Google Cloud’s cutting-edge AI/ML capabilities with Vertex AI integration. With the ability to effortlessly build ML models on GCP using cross-cloud MVs, leveraging Google’s large language foundation models such as PaLM 2 and Gemini, customers are excited to discover new ways of interacting with their data. To leverage the power of Vertex AI and Google Cloud’s large language models (LLMs), cross-cloud MVs seamlessly ingest and combine data across a customer’s multi-cloud environments.
Cross-cloud or cross-region data summarization for compliance: There’s an emerging set of privacy use cases where raw data cannot leave the source region because it must adhere to stringent data sovereignty regulations. However, there is a viable workaround for cross-regional or cross-cloud data sharing and collaboration: aggregating, summarizing, and rolling up the data. This processed data, which complies with privacy standards, can be replicated across regions for sharing and consumption by other teams and partner organizations, and kept up to date incrementally through cross-cloud MVs.
Marketing analytics: Organizations often find themselves combining data sources from various cloud platforms. A common scenario involves integrating CRM, user profile, or transaction data on one cloud with campaign management or ads-related data in Google Ads Data Hub. This integration is critical to ensure a privacy-safe method of segmenting customers, managing campaigns, and meeting other marketing analytics requirements. Some of the user profile and transaction data is available in another cloud, and often only a subset or summary of this data needs to be brought over through cross-cloud MVs to join with Ads or campaign data available on Google platforms. Customers also want to ensure these integrations meet their high standards of efficiency and provide governance controls over their data.
Near real-time business analytics: Real-time insights rely on powerful business intelligence (BI) dashboards and reporting tools. These analytical applications are crucial as they aggregate and integrate data from multiple sources. To reflect the most up-to-date business information, these dashboards require regular updates at intervals aligned with business needs, whether that’s hourly, daily, or weekly. Cross-cloud MVs enable consistent updates with the latest data regardless of where data assets live, ensuring that derived insights are relevant and timely. Combining these capabilities with GCP’s Looker platform and semantic models provides further value and up-to-date insights to end users.

BigQuery Omni’s cross-cloud MV solution has a unique set of features and benefits:

Ease of use: Cross-cloud MVs simplify combining and analyzing data even when the data assets live on different clouds. They minimize the complexity of running and managing analytics pipelines and avoid large-scale duplication of data, especially when dealing with frequently changing data.
Significant cost reduction: Cross-cloud MVs significantly reduce the egress costs of bringing data across clouds by transferring only the incremental data when needed.
Automatic refresh: Designed for convenience, cross-cloud MVs work out of the box, automatically refreshing and incrementally updating based on user specifications.
Unified governance: BigQuery Omni provides secure and governed access to materialized views in both clouds. This feature is crucial for both local analytics and cross-cloud analytics needs.
Single pane of glass: The solution provides seamless access through the familiar BigQuery user interface for defining, querying, and managing cross-cloud MVs.
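To make the incremental-maintenance idea concrete, here is a minimal Python sketch. It is not BigQuery's actual mechanism: it assumes an append-only base table and a simple per-key SUM, and the class `IncrementalSumView` and its fields are hypothetical names chosen for illustration. Each refresh reads only the rows added since the previous refresh, which is why far fewer rows cross the cloud boundary than with a full copy.

```python
from collections import defaultdict


class IncrementalSumView:
    """Hypothetical summary view (total amount per key) kept in sync from an append-only base table."""

    def __init__(self):
        self.totals = defaultdict(float)  # summary stored on the destination (e.g. Google Cloud) side
        self.watermark = 0                # count of base-table rows already folded into the summary
        self.rows_transferred = 0         # rows shipped across clouds over all refreshes

    def refresh(self, base_table):
        """Fold in only the rows appended since the previous refresh and return the summary."""
        delta = base_table[self.watermark:]
        for key, amount in delta:
            self.totals[key] += amount
        self.rows_transferred += len(delta)
        self.watermark = len(base_table)
        return dict(self.totals)


if __name__ == "__main__":
    base = [("us", 10.0), ("eu", 5.0)]   # hypothetical rows living on the other cloud
    view = IncrementalSumView()
    view.refresh(base)                   # first refresh transfers 2 rows
    base.append(("us", 2.5))             # the base table changes
    print(view.refresh(base))            # {'us': 12.5, 'eu': 5.0}
    print(view.rows_transferred)         # 3, versus 2 + 3 = 5 if each refresh copied the full table
```

A production materialized view also has to handle updates and deletes and follow a user-defined refresh schedule; this sketch deliberately leaves both out.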

Cross-cloud MVs offer significant benefits across a variety of industries and customer scenarios as illustrated below:

Healthcare: Data scientists in one department want to bring summaries of their data from AWS to Google Cloud (BigQuery) at regular intervals (daily or weekly) for aggregate analytics and model building.
Media and entertainment: A marketing analyst wants to join, de-duplicate, and segment AdsWhiz data from AWS with listener and audience data from Google Cloud on a weekly basis to expand audience reach.
Telecom: A data analyst wants to periodically centralize log-level data from AWS and streaming data from an ads server for revenue targeting.
Education: Data analysts need to join product instrumentation data on AWS with enterprise-level data on Google Cloud. As new products are added to their platform, they want to simplify their company’s ETL pipeline and address cost challenges by using cross-cloud MVs.
Retail: A marketing analyst needs to join their user profile data from Azure with campaign data in Ads Data Hub in a privacy-safe manner. With new retail users coming into the system daily, they rely on cross-cloud MVs for regular combined analysis, ensuring up-to-date data processing.

With cross-cloud MVs, we are empowering organizations to break down cloud silos and harness the power of their rich, changing data, in near real-time in Google Cloud. This breakthrough capability is not only shaping the future of cross-cloud analytics, but also multi-cloud architectures, enabling customers to achieve new levels of flexibility, cost-effectiveness, and actionable insights. With a powerful combo of cross-cloud analytics with BigQuery Omni and agile semantics with Looker, we are able to bring rich and actionable insights faster and more easily to data consumers.

Figure: Creating a cross-cloud MV in BigQuery using SQL

Figure: Performing effective, cost-efficient cross-cloud analytics with cross-cloud MVs

To learn more about cross-cloud MVs and how they can transform your organization’s cross-cloud analytics capabilities, watch the demo, explore the public documentation and try the product in action now.


Calling all cloud developers. This year there has been a lot of focus on generative AI, with plenty of excitement about the potential it brings to the future of cloud technology. Today we’re launching new training on Duet AI in Google Cloud to help you get the most out of the new product features, so that you can build customer experiences more quickly and securely.

Duet AI is an AI-powered collaborator that helps you build secure, scalable applications while providing expert guidance. It’s built on top of Google’s large language models (LLMs) and tuned by Google Cloud experts.

Find out how to incorporate Duet AI in Google Cloud into your day-to-day activities. The new Duet AI in Google Cloud Learning Path consists of a series of videos, quizzes, and labs (labs require credits) to test your skills. Each video and quiz can be completed at no cost. Labs cost 5 credits each in Google Cloud Skills Boost, and the learning path includes 8 labs (40 credits total). These resources could help you become more productive in Google Cloud and your IDE, and get more done, faster.

Duet AI is more than just a developer productivity tool; it’s also a learning companion for developers of all skill levels throughout the development lifecycle. Whether you’re just starting with cloud technologies or are a seasoned expert, Duet AI is here to guide and enhance your journey to mastery in software development and Google Cloud.

It offers personalized, expert-level advice in natural language on a wide range of topics, from crafting specific queries and scripts, to mastering common Google Cloud tasks, to best practices for application deployment, and cost optimization. Integrated within both the IDE and the Google Cloud console, Duet AI promotes a learning experience that can minimize the need for context-switching.

Here are some examples on how Duet AI can help:

Real-time code creation and editing assistance. Describe a task that you have in mind as a comment or function name, and Duet AI will generate suggested code that can be reviewed and modified.
Chat assistance in the IDE for troubleshooting.
Overcome challenges that many of us experience day-to-day, like friction when integrating new tools and services, grinding through repetitive tasks, and spending time understanding a new code base or project.
Use templates to develop a basic app. Then run, test, and deploy it to Google Cloud, all with Duet AI assistance.
Save time and increase code quality when writing tests.

Ready to get started using Duet AI? Go to the Duet AI in Google Cloud Learning Path, or dive directly into each of the role-based courses (1 to 2.5 hours each) within the learning path:

Duet AI for Application Developers
Duet AI for Cloud Architects
Duet AI for Data Scientists and Analysts
Duet AI for Network Engineers
Duet AI for Security Engineers
Duet AI for DevOps Engineers
Duet AI for end-to-end SDLC

Looking to build your cloud skills even more? Google Cloud Innovators Plus is a great way to access the Duet AI in Google Cloud learning path, and the entire catalog of Google Cloud Skills Boost on-demand training. Your membership gets you $1,500 in developer benefits for $299 a year, including Google Cloud Credits, a Google Cloud certification voucher, 1:1 access to experts, and more. Join here.


There’s more demand than ever for the digital products and services that people and businesses rely on every day. Greater digital demand in turn requires greater data center capacity, and here at Google we’re committed to finding sustainable ways to deliver that capacity.

Today, we’re sharing our new framework to more precisely evaluate the health of a local community’s watershed and establish a data-driven approach to advancing responsible water use in our data centers. Building on our climate-conscious approach to data center cooling, the framework is an important element of our commitment to water stewardship in the communities where we operate.

When we build a data center, we consider a variety of factors, including proximity to customers or users, the presence of a community that’s excited to work with us, and the availability of natural resources that align with our sustainability and climate goals. Water cooling is generally more energy-efficient than air cooling, but with every campus, we ask an important question: Is it environmentally responsible to use water to cool our data center?

To find the answer in the past, we used publicly available tools to gain high-level insights, or provide an “Earth View” of the water challenges facing large aquifers or river basins, such as the Columbia River in the Pacific Northwest, or the Rhine River in northern Europe. But when we wanted to get more of a local “street view” and dive deeper into the state of a specific water source — like the Dog River in Oregon, or the Eems Canal in Groningen, Netherlands — we struggled to find a tool that sufficiently captured the local water challenges to inform us about how to cool the data center in a climate-conscious way.

We recognize that addressing global water challenges requires local solutions, so we developed a data-driven water risk framework in collaboration with a team of industry-leading environmental scientists, hydrologists, and water sustainability experts.

Our framework provides an actionable and repeatable decision-making process for new data centers and helps us evaluate evolving water risks at existing sites, with the specificity we need to understand watershed health at a hyperlocal level. The evaluation results tell us if a watershed’s risk level is high enough that we should consider alternative solutions like reclaimed water or air-cooling technology, which uses minimal water but consumes more energy.

The framework has two main steps to assess the water risk level for a data center location:

Evaluate responsible use. We compare the current and future demand for water, from both the community and our potential data center, to the available supply, using data from the local utility and water district management plans. In this evaluation, we also compare recent water-level history against the levels expected of a healthy watershed, using streamflow and groundwater monitoring data, and consider whether the local water authority has rationed water use. Based on these indicators, our watershed health experts determine whether a water source is at high risk of scarcity or depletion. If it is, we will pursue alternative sources or cooling solutions at the data center campus.
Measure composite risk. We look at the feasibility of treating and delivering water to and from the data center, whether with existing infrastructure or by collaborating with a utility partner to build new solutions such as reclaimed wastewater or an industrial water solution. In addition, we assess community access to water, regulatory risk, local sentiment, and any climate trends that could affect the future water supply, such as reduced precipitation or increased drought.
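The responsible-use step can be sketched as a simple screening function. This is a hypothetical illustration only: the framework itself relies on watershed health experts rather than a formula, and the field names, units, and the 0.8 demand-to-supply threshold below are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class WatershedIndicators:
    """Hypothetical step-1 inputs; names and units are illustrative, not taken from the framework."""
    projected_demand: float     # current + future demand (community and data center), e.g. ML/year
    available_supply: float     # supply per utility and water district plans, same unit
    below_healthy_level: bool   # recent streamflow/groundwater history is below a healthy watershed's
    rationing_in_effect: bool   # the local water authority has rationed water use


def responsible_use_is_high_risk(ind: WatershedIndicators, max_demand_ratio: float = 0.8) -> bool:
    """Mechanical stand-in for expert judgment: any single indicator tripping flags high risk.

    max_demand_ratio is an invented threshold used only for illustration.
    """
    demand_ratio = ind.projected_demand / ind.available_supply
    return demand_ratio > max_demand_ratio or ind.below_healthy_level or ind.rationing_in_effect


def next_step(ind: WatershedIndicators) -> str:
    """Route a candidate site either to alternatives or on to the composite-risk step."""
    if responsible_use_is_high_risk(ind):
        return "pursue alternatives (e.g. reclaimed water or air cooling)"
    return "measure composite risk"


if __name__ == "__main__":
    site = WatershedIndicators(projected_demand=90.0, available_supply=100.0,
                               below_healthy_level=False, rationing_in_effect=False)
    print(next_step(site))  # demand ratio 0.9 exceeds 0.8, so alternatives are pursued
```

Treating any single indicator as sufficient keeps the sketch conservative; a real assessment would weigh the indicators together, as the expert review described above does.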

We designed this framework to take a comprehensive look at the water-related risks for each potential data center location. The results provide context for locally relevant watershed challenges and how our own investments in improved or expanded infrastructure or replenishment projects can help support local watershed health. Given the dynamic nature of water resources, we will repeat these assessments across our portfolio every three to five years to identify new and ongoing risks at existing sites that may require mitigation.

We have integrated the water risk framework into our planning and development processes for all new data center locations.

Notably, the responsible-use evaluation completed during the planning stage for our recently announced data center in Mesa, Arizona, found the local water source was at high risk of depletion and scarcity. Therefore, we opted to air-cool the data center, minimizing our impact on the local watershed. To further support water security in the area, we also donated to Salt River Project’s (SRP) effort focused on watershed restoration and wildfire risk. This collaboration with SRP was a follow-up to our 2021 investment in the Colorado River Indian Tribes system conservation and canal-lining projects to improve water conservation in the Southwest.

Collective effort and transparency are necessary to keep global watersheds thriving and healthy, as we work to deliver products and services that people and businesses use every day. We want to share what we’ve learned through our work with climate-conscious cooling and provide an example for how other industrial water users can make responsible water decisions for their own operations.

Our water risk framework is one of many pieces of responsible water use in action. Implementing this framework is another step on our water stewardship journey and complements our ambitious 24/7 carbon-free energy goal. Watershed health is both complex and dynamic, and as we make progress on our framework, we will continue to refine it, sharing lessons learned with others who aspire to practice responsible water use in their own organizations and communities. Check out our water risk framework white paper for more detail.


Ride hailing and cab services have been forever transformed by smartphone apps that digitally match drivers and riders for trips. While some of the biggest names in the ride-hailing industry are digital natives, cab services that have been around for decades are working to modernize and succeed in this competitive and tech-driven industry.

eCabs, a Malta-based digital ride-hailing company, started as a dial-a-cab provider in 2010, working to disrupt the industry with a high-quality, professional, and reliable 24/7 service.

As mobile apps began to be introduced into the industry globally, the company’s leaders began digitally transforming the company to create a tech stack of its own.

“We were keeping our eyes on the development of the international ride-hailing industry from inception,” says Matthew Bezzina, Chief Executive Officer at eCabs. “It was evident that embracing and investing in technology would be critical to our growth and success. We also knew that a powerful combination would come from coupling our hands-on experience of running a mobility operation with a forward-looking technology mindset.”

eCabs built its own tech, creating a platform that could be tailored for other operators and tweaked to accommodate any jurisdiction’s particular needs. The company worked with Google Cloud and partner TIM Enterprise to achieve its vision.

eCabs initially started with an IT environment built on bare metal architecture but quickly recognized the limitations of this approach.

“Having redundancies on bare metal did not match our ambitions, and we knew that a robust international technology service required a borderless solution that did not rely on the limited connectivity of a European island nation like Malta,” says Luca Di Michele, Chief Technology Officer at eCabs. “The requirement to migrate to the cloud with all the invaluable benefits that came with it was very clear.”

eCabs’ platform was first rolled out in its home country of Malta, which has the EU’s densest road network. This made it an ideal sandbox to test and refine its products. The company’s ambitions, however, always stretched beyond its home shores. Redundancy was vital to maximize uptime and reliability, while scalability was necessary to manage the massive spikes in demand common in the ride-hailing market.

“Migrating to Google Cloud was a natural progression for us because it offers greater flexibility, scalability, and reliability,” says Bezzina.

“This has enabled us to grow locally and meet the demands of our growing international business. It’s also improved our platform’s uptime and makes it easier to manage spikes in demand as the platform met its planned growth across different markets.”

eCabs uses Google Kubernetes Engine (GKE) to power its microservices architecture that includes unique environments for each of the other international tenants it serves.

GKE makes it easy to replicate environments, which has allowed eCabs to quickly get new customers up and running as its platform becomes more popular across Europe.

“When we have a new potential customer, we can easily build a test environment, deploy it, run the setups in accordance with the prospect’s preferences, and show them the value of the platform,” says Di Michele. “The ability to replicate environments at speed while providing redundancy and disaster recovery capabilities enables our growth and grants our tenants peace of mind.”

Google Cloud partner TIM Enterprise has helped eCabs since the outset of its migration, assisting with provisioning, setup, maintenance, and more.

This helped eCabs get through the more challenging elements of the switch from bare metal to GKE architecture, while opening the door to other data cloud solutions such as BigQuery.

With TIM Enterprise’s guidance, eCabs has begun to use BigQuery for all its data needs across regions, allowing it to offer customers insights on platform use for planning and performance review purposes. Given the importance of data analytics in ride-hailing businesses, this checked a critical box for eCabs while setting the stage for the introduction of machine learning and generative AI projects.

Now, TIM Enterprise and Google Cloud continue to share new opportunities and insights into eCabs’ use of cloud computing to unlock savings, improve scalability, and reduce the burden of infrastructure management.

More importantly, TIM Enterprise and Google Cloud have enabled eCabs to protect its platform against moments that would have previously presented significant challenges.

“The ease in which we can scale is crucial, because we have customers of all sizes across regions,” says Di Michele.

“If we can’t scale quickly, our customers could see downtime when their riders rely most on their services like during a major sporting event. Google Cloud and TIM Enterprise have helped to position us as an always-on option, which positively impacts our customers and our customers’ customers.”

Given that eCabs is a group that houses a tech company, a 24/7 ride-hailing business, and a full fleet, it has real-world experience with the issues legacy operators face and the pains of transitioning to a digital-first operation.

eCabs is enabling digital transformation among legacy cab companies and new entrants into the digital ride-hailing market in Europe and the wider region to compete against the biggest names in digital ride sharing with Google Cloud tools supporting its vision.

“Google Cloud has always been there to help,” says Bezzina. “Google Cloud and TIM Enterprise offer excellent support irrespective of the size of your business. This is a testament to the power of Google Cloud. We know they believe in us and take action to drive our success forward.”

Looking ahead, eCabs is continuing its strategy of international expansion as data plays an ever-greater role in the market.

“Eventually, ride hailing platforms like ours will be primarily used as data aggregators,” says Bezzina. “The data we gather and analyze now will eventually be used to inform decisions related to autonomous vehicles, like where fleets should be placed. Google Cloud and TIM Enterprise enable us to support current market opportunities and be prepared for tomorrow.”

Check out more stories on the Google Cloud blog to learn how other companies are using Google Cloud and partners like TIM Enterprise to power their operations.


A week ago, Google announced Gemini, our most capable and flexible AI model yet. It comes in three sizes: Ultra, Pro and Nano. Today, we are excited to announce that Gemini Pro is now publicly available on Vertex AI, Google Cloud’s end-to-end AI platform that includes intuitive tooling, fully-managed infrastructure, and built-in privacy and safety features. With Gemini Pro, now developers can build “agents” that can process and act on information.

Vertex AI makes it possible to customize and deploy Gemini, empowering developers to build new and differentiated applications that can currently process information across text, code, images, and video. With Vertex AI, developers can:

Discover and use Gemini Pro, or select from a curated list of more than 130 models from Google, open-source, and third-party providers that meet Google’s strict enterprise safety and quality standards. Developers can access models as easy-to-use APIs to quickly build them into applications.
Customize model behavior with specific domain or company expertise, using tuning tools to augment training knowledge and even adjust model weights when required. Vertex AI provides a variety of tuning techniques, including prompt design, adapter-based tuning such as Low-Rank Adaptation (LoRA), and distillation. We also provide the ability to improve a model by capturing user feedback through our support for reinforcement learning from human feedback (RLHF).
Augment models with tools to help adapt Gemini Pro to specific contexts or use cases. Vertex AI Extensions and connectors let developers link Gemini Pro to external APIs for transactions and other actions, retrieve data from outside sources, or call functions in codebases. Vertex AI also gives organizations the ability to ground foundation model outputs in their own data sources, helping to improve the accuracy and relevance of a model’s answers. Enterprises can use grounding against their structured and unstructured data, as well as grounding with Google Search technology.
Manage and scale models in production with purpose-built tools that help ensure applications, once built, can be easily deployed and maintained. To this end, we’re introducing a new way to evaluate models called Automatic Side by Side (Auto SxS), an on-demand, automated tool to compare models. Auto SxS is faster and more cost-efficient than manual model evaluation, and it can be customized across various task specifications to handle new generative AI use cases.
Build search and conversational agents in a low-code/no-code environment. With Vertex AI, developers across all machine learning skill levels will be able to use Gemini Pro to create engaging, production-grade AI agents in hours and days instead of weeks and months. Coming soon, Gemini Pro will be an option to power search summarization and answer generation features in Vertex AI, enhancing the quality, accuracy, and grounding abilities of search applications. Gemini Pro will also be available in preview as a foundation model for conversational voice and chat agents, providing dynamic interactions with users that support advanced reasoning.
Deliver innovation responsibly by using Vertex AI’s safety filters, content moderation APIs, and other responsible AI tooling to help ensure models don’t output inappropriate content.
Help protect data with Google Cloud’s built-in data governance and privacy controls. Customers remain in control of their data, and Google never uses customer data to train our models. Vertex AI provides a variety of mechanisms to keep customers in sole control of their data, including Customer Managed Encryption Keys and VPC Service Controls.
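Several of the tuning techniques listed above are standard methods. As a hedged illustration of one of them, the plain-Python sketch below shows the core idea of Low-Rank Adaptation (LoRA): the pretrained weight W stays frozen and only two small matrices B and A are trained, so the effective weight becomes W + BA. It says nothing about how Vertex AI implements tuning; the helper names and matrix sizes are arbitrary examples.

```python
def matmul(x, y):
    """Plain-Python product of an m×n and an n×p matrix given as lists of lists."""
    return [[sum(x[i][t] * y[t][j] for t in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def lora_merge(w, b, a):
    """Return W + B·A: frozen weight W (d×k) plus a rank-r update from trainable B (d×r) and A (r×k).

    The scaling factor used in the original LoRA formulation (alpha / r) is omitted for brevity.
    """
    delta = matmul(b, a)
    return [[w[i][j] + delta[i][j] for j in range(len(w[0]))] for i in range(len(w))]


def trainable_params(d, k, r):
    """Trainable weights for one d×k layer: full fine-tuning vs. a rank-r LoRA adapter."""
    return d * k, r * (d + k)


if __name__ == "__main__":
    w = [[1, 0], [0, 1]]        # frozen 2×2 weight
    b = [[1], [2]]              # trainable 2×1
    a = [[3, 4]]                # trainable 1×2 (rank r = 1)
    print(lora_merge(w, b, a))  # [[4, 4], [6, 9]]
    print(trainable_params(4096, 4096, 8))  # (16777216, 65536)
```

For a 4096×4096 layer with rank 8, the adapter trains 65,536 weights instead of 16,777,216, which is why adapter-based tuning is comparatively cheap.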

Google’s comprehensive approach to AI is designed to help keep our customers safe and protected. We employ an industry-first, two-pronged copyright indemnity approach to help give Cloud customers peace of mind when using our generative AI products. Today we are extending our generated output indemnity to now also include model outputs from PaLM 2 and Vertex AI Imagen, in addition to an indemnity on claims related to our use of training data. Indemnification coverage is planned for the Gemini API when it becomes generally available.

The Gemini API is now available. Gemini Pro is also available on Google AI Studio, a web-based tool that helps quickly develop prompts. We will be making Gemini Ultra available to select customers, developers, partners and safety and responsibility experts for early experimentation and feedback before rolling it out to developers and enterprise customers early next year.

Join us today at our Applied AI Summit to learn more.


Today, we’re sharing a significant upgrade to Google Cloud’s image-generation capabilities with Imagen 2, our most advanced text-to-image technology, which is now generally available for Vertex AI customers on the allowlist (i.e., approved for access).

Imagen 2 on Vertex AI allows our customers to customize and deploy Imagen 2 with intuitive tooling, fully-managed infrastructure, and built-in privacy and safety features. Developed using Google DeepMind technology, Imagen 2 delivers significantly improved image quality and a host of features that enable developers to create images for their specific use case, including:

Generating high-quality, photorealistic, high-resolution, aesthetically pleasing images from natural language prompts
Text rendering in multiple languages to create images with accurate text overlays
Logo generation to create company or product logos and overlay them in images
Visual question answering for generating captions from images, and for getting informative text responses to questions about image details

Importantly, Vertex AI’s indemnification commitment now covers Imagen on Vertex AI, which includes Imagen 2 and future generally available upgrades of the model powering the service. We employ an industry-first, two-pronged copyright indemnification approach that can give customers peace of mind when using our generative AI products.

Imagen 2 on Vertex AI boasts a variety of image generation features to help organizations create images that match their specific brand requirements with the same enterprise-grade reliability and governance customers are used to with Imagen.

New features now available in Imagen 2 include:

High-quality images: Imagen 2 can achieve accurate, high-quality photorealistic outputs with improved image+text understanding and a variety of novel training and modeling techniques.

Text rendering support: Text-to-image technologies often have difficulty correctly rendering text. If a model is prompted to generate a picture of an object with a specific word or phrase, for example, it can be challenging to ensure the correct phrase is part of the output image. Imagen 2 helps solve for this, which can give organizations a deeper level of control for branding and messaging.

Logo generation: Imagen 2 can generate a wide variety of creative and realistic logos — including emblems, lettermarks, and abstract logos — for business, brands, and products. It also has the ability to overlay these logos onto products, clothing, business cards, and other surfaces.

Captions and question-answer: Imagen 2’s enhanced image understanding capabilities enable customers to create descriptive, long-form captions and get detailed answers to questions about elements within the image.

Multi-language prompts: Beyond English, Imagen 2 is launching with support for six additional languages (Chinese, Hindi, Japanese, Korean, Portuguese, Spanish) in preview, with many others planned for release in early 2024. This feature includes the capability to translate between prompt and output, e.g., prompting in Spanish but specifying that the output should be in Portuguese.

Safety: Imagen 2 includes built-in safety precautions to help ensure that generated images align with Google’s Responsible AI principles. For example, Imagen 2 is integrated with our experimental digital watermarking service, powered by Google DeepMind’s SynthID, which enables allowlisted customers to generate invisible watermarks and verify images generated by Imagen. Imagen 2 also includes comprehensive safety filters to help prevent generation of potentially harmful content.

We look forward to organizations using these new features to build on the results they’ve already achieved with Imagen.

Snap is using Imagen to help Snapchat+ subscribers express their inner creativity. With the new AI Camera Mode, they can tap a button, type in a prompt or choose a pre-selected one, and generate a scene to share with family and friends or post to their story.

“Imagen is the most scalable text-to-image model with the safety and image quality we need,” said Josh Siegel, Senior Director of Product at Snap. “One of the major benefits of Imagen for Snap is that we can focus on what we do best, which is the product design and the way that it looks and makes you feel. We know that when we work on new products like AI Camera Mode, we can really rely on the brand safety, scalability, and reliability that comes with Google Cloud.”

Shutterstock has also emerged as a leading innovator bringing AI to creative production — including being the first to launch an ethically-sourced AI image generator, now enhanced with Imagen on Vertex AI. With the Shutterstock AI image generator, users can turn simple text prompts into unique, striking visuals, allowing them to create at the speed of their imaginations. The Shutterstock website includes a searchable collection of more than 16,000 Imagen pictures, all available for licensing.

“We exist to empower the world to tell their stories by bridging the gap between idea and execution. Variety is critical for the creative process, which is why we continue to integrate the latest and greatest technology into our image generator and editing features—as long as it is built on responsibly sourced data,” said Chris Loy, Director of AI Services, Shutterstock. “The Imagen model on Vertex AI is an important addition to our AI image generator, and we’re excited to see how it enables greater creative capabilities for our users as the model continues to evolve.”

Canva is also using Imagen on Vertex AI to bring ideas to life, with millions of images generated with the model to date. Users can access Imagen as an app directly within Canva and with a simple text prompt, generate a captivating image that fits their design needs.

“Partnering with Google Cloud, we’re continuing to use generative AI to innovate the design process and augment imagination,” said Danny Wu, Head of AI at Canva. “With Imagen, our 170M+ monthly users can benefit from the image quality improvements to uplevel their content creation at scale. The new model and features will further empower our community to turn their ideas into real images with as little friction as possible.”

To get started with Imagen 2 on Vertex AI, see our documentation or reach out to your Google Cloud account representative to join the Trusted Tester Program.


Improving healthcare and medicine are among the most promising use cases for artificial intelligence, and we’ve made big strides since our initial research into medically-tuned large language models (LLMs) with Med-PaLM in 2022 and 2023. We’ve been testing Med-PaLM 2 with healthcare organizations, and our Google Research team continues to make significant progress, including exploring multimodal capabilities.

Now we’re introducing MedLM — a family of foundation models fine-tuned for healthcare industry use cases. MedLM is now available to Google Cloud customers in the United States through an allowlisted general availability in the Vertex AI platform. MedLM is also currently available in preview in certain other markets worldwide.

Currently, there are two models under MedLM, built on Med-PaLM 2, to offer flexibility to healthcare organizations and their different needs. Healthcare organizations are exploring the use of AI for a range of applications, from basic tasks to complex workflows. Through piloting our tools with different organizations, we’ve learned the most effective model for a given task varies depending on the use case. For example, summarizing conversations might be best handled by one model, and searching through medications might be better handled by another. The first MedLM model is larger, designed for complex tasks. The second is a medium model, able to be fine-tuned and best for scaling across tasks. The development of these models has been informed by specific healthcare and life sciences customer needs, such as answering a healthcare provider’s medical questions and drafting summaries. In the coming months, we’re planning to bring Gemini-based models into the MedLM suite to offer even more capabilities.
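The point that "the most effective model for a given task varies depending on the use case" is, in practice, a routing decision. The sketch below shows one hypothetical way an application could map task types to the two MedLM models. The identifiers `medlm-large` and `medlm-medium`, the `TaskKind` categories, and the task-to-model assignments are all assumptions made for illustration. The post does not say which model suits which task, so the table only shows that different tasks can map to different models; the real choice would come from evaluating each task.

```python
from dataclasses import dataclass
from enum import Enum


class TaskKind(Enum):
    """Illustrative task categories (hypothetical, not an official taxonomy)."""
    COMPLEX_QA = "complex_qa"              # e.g. answering a provider's medical question
    SUMMARIZATION = "summarization"        # e.g. drafting a summary of a conversation
    MEDICATION_SEARCH = "medication_search"


# Hypothetical routing table. The model IDs are assumed names, and the
# assignments are arbitrary placeholders to be replaced by evaluation results.
ROUTES = {
    TaskKind.COMPLEX_QA: "medlm-large",         # larger model for complex tasks
    TaskKind.SUMMARIZATION: "medlm-medium",     # medium model for scaling across tasks
    TaskKind.MEDICATION_SEARCH: "medlm-large",
}


@dataclass
class Task:
    kind: TaskKind
    needs_fine_tuning: bool = False


def choose_model(task: Task) -> str:
    """Return the (assumed) model ID to use for a task.

    Tasks that need a fine-tuned model go to the medium model, because the
    post describes the medium model as the one that can be fine-tuned.
    Everything else is looked up in the routing table.
    """
    if task.needs_fine_tuning:
        return "medlm-medium"
    return ROUTES[task.kind]


if __name__ == "__main__":
    for kind in TaskKind:
        print(kind.value, "->", choose_model(Task(kind)))
```

Keeping the mapping in a single table, rather than scattering model names through the code, makes it easy to re-point a task at a different model as evaluations change or as new models join the family.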

Many of the companies we’ve been testing MedLM with are now moving it into production in their solutions, or broadening their testing. Here are some examples.

For the past several months, HCA Healthcare has been piloting a solution to help physicians with their medical notes in four emergency department hospital sites. Physicians use an Augmedix app on a hands-free device to create accurate and timely medical notes from clinician-patient conversations in accordance with the Health Insurance Portability and Accountability Act (HIPAA). Augmedix’s platform uses natural language processing, along with Google Cloud’s MedLM on Vertex AI, to instantly convert data into drafts of medical notes, which physicians then review and finalize before they’re transferred in real time to the hospital’s electronic health record (EHR).

With MedLM, the automated performance of Augmedix’s ambient documentation products will increase, and the quality of their summarization will continue to improve over time. These products can save time, reduce burnout, increase clinician efficiency, and improve overall patient care. The addition of MedLM also makes it easier to affordably scale Augmedix’s products across health systems and support an expanding list of medical subspecialties such as primary care, emergency department, oncology, and orthopedics.

Drug research and development is slow, inefficient, and extremely expensive. BenchSci is bringing AI to scientific discovery to help expedite drug development, and it is integrating MedLM into its ASCEND platform to further improve the speed and quality of pre-clinical research and development.

BenchSci’s ASCEND platform is an AI-powered evidence engine that produces a high-fidelity knowledge graph of more than 100 million experiments, decoded from hundreds of different data sources. This allows scientists to understand complex connections in biological research, giving them a thorough grasp of empirically validated and ontologically derived relationships, including biomarkers, detailed biological pathways, and interconnections among diseases. The integration of MedLM aims to further enhance the platform’s accuracy, precision, and reliability. Together with ASCEND’s proprietary technology and data sets, it aims to significantly boost the identification, classification, ranking, and discovery of novel biomarkers, clearing the path to successful scientific discovery.

To spur enterprise adoption and value, we’ve also teamed up with Accenture to help healthcare organizations use generative AI to improve patient access, experience, and outcomes. Accenture brings its deep healthcare industry experience, solutions, and the data and AI skills needed to help healthcare organizations make the most of Google’s technology with human ingenuity.

Aimed at healthcare process improvement, Accenture’s Solutions.AI for Processing for Health interprets structured and unstructured data from multiple sources to automate manual processes that were previously time-consuming and prone to error, like reading clinical documents, enrollment, claims processing, and more. This helps clinicians make faster, more informed decisions and frees up more time and resources for patient care. Using Google Cloud’s Claims Acceleration Suite, MedLM, and Accenture’s Solutions.AI for Processing, new insights can be uncovered, ultimately leading to better patient outcomes.

Healthcare organizations are often bogged down with administrative tasks and processes related to documentation, care navigation, and member engagement. Deloitte and Google Cloud are working together with our mutual customers to explore how generative AI can help improve the member experience and reduce friction in finding care through an interactive chatbot, which helps health plan members better understand the provider options covered by their insurance plans.

Deloitte, Google Cloud, and healthcare leaders are piloting MedLM’s capabilities to make it easier for care teams to discover information from provider directories and benefits documents. These inputs will then help contact center agents better identify best-fit providers for members based on plan, condition, medication and even prior appointment history, getting people faster access to the precise care they need.

As we bring the transformative potential of generative AI to healthcare, we’re focused on enabling professionals with a safe and responsible use of this technology. That’s why we’re working in close collaboration with practitioners, researchers, health and life science organizations, and the individuals at the forefront of healthcare every day. We’re excited by the progress we’ve seen in just one year — from building the first LLM to obtain a passing score (>60%) on U.S. medical-licensing-exam-style questions (published in Nature), to advancing it to obtain an expert level score (86.5%), to applying it in real-world scenarios through a measured approach. As we reflect on 2023 — and close out the year by expanding MedLM access to more healthcare organizations — we’re excited for the progress and potential ahead and our continuing work on pushing forward breakthrough research in health and life sciences.


Throughout 2023, we have introduced incredible new AI innovations to our customers and the broader developer and user community, including: AI Hypercomputer to train and serve generative AI models; Generative AI support in Vertex AI, our Enterprise AI platform; Duet AI in Google Workspace; and Duet AI for Google Cloud. We have shipped a number of new capabilities in our AI-optimized infrastructure with notable advances in GPUs, TPUs, ML software and compilers, workload management and others; many innovations in Vertex AI; and an entire new suite of capabilities with Duet AI agents in Google Workspace and Google Cloud Platform.

Already, we have seen tremendous developer and user growth. For example, between Q2 and Q3 this year, the number of active gen AI projects on Vertex AI grew by more than 7X. Leading brands like Forbes, Formula E, and Spotify are using Vertex AI to build their own agents, and Anthropic, AI21 Labs, and Cohere are training their models. The breadth and creativity of applications that customers are developing is breathtaking. Fox Sports is creating more engaging content. Priceline is building a digital travel concierge. Six Flags is building a digital concierge. And Estée Lauder is building a digital brand manager.

Today, we are introducing a number of important new capabilities across our AI stack in support of Gemini, our most capable and general model yet. It was built from the ground up to be multimodal, which means it can generalize and seamlessly understand, operate across, and combine different types of information, including text, code, audio, image, and video, in the same way humans see, hear, read, listen, and talk about many different types of information simultaneously.

Starting today, Gemini is part of a vertically integrated and vertically optimized AI technology stack that consists of several important pieces — all of which have been engineered to work together:

Super-scalable AI infrastructure: Google Cloud offers companies leading AI-optimized infrastructure, the same infrastructure Google uses to train and serve models. We offer this infrastructure as a service in our cloud regions, to run in your data centers with Google Distributed Cloud, and on the edge. Our entire AI infrastructure stack was built with systems-level codesign to boost efficiency and productivity across AI training, tuning, and serving.

World-class models: We continue to deliver a range of AI models with different skills. In late 2022, we launched our Pathways Language Model (PaLM), quickly followed by PaLM 2, and we are now delivering Gemini Pro. We have also introduced domain-specific models like Med-PaLM and Sec-PaLM.

Vertex AI, the leading enterprise AI platform for developers: To help developers build agents and integrate gen AI into their applications, we have rapidly enhanced Vertex AI, our AI development platform. Vertex AI helps customers discover, customize, augment, deploy, and manage agents built using the Gemini API, as well as a curated list of more than 130 open-source and third-party AI models that meet Google’s strict enterprise safety and quality standards. Vertex AI leverages Google Cloud’s built-in data governance and privacy controls, and also provides tooling to help developers use models responsibly and safely. Vertex AI also provides Search and Conversation, tools that use a low-code approach to developing sophisticated search and conversational agents that can work across many channels.

Duet AI, assistive AI agents for Workspace and Google Cloud: Duet AI is our AI-powered collaborator that provides users with assistance when they use Google Workspace and Google Cloud. Duet AI in Google Workspace, for example, helps users write, create images, analyze spreadsheets, draft and summarize emails and chat messages, and summarize meetings. Duet AI in Google Cloud, for example, helps users code, deploy, scale, and monitor applications, as well as identify and accelerate resolution of cybersecurity threats.

We are excited to make announcements across each of these areas:

As gen AI models have grown in size and complexity, so have their training, tuning, and inference requirements. As a result, the demand for high-performance, highly-scalable, and cost-efficient AI infrastructure for training and serving models is increasing exponentially.

This isn’t just true for our customers, but for Google as well. TPUs have long been the basis for training and serving AI-powered products like YouTube, Gmail, Google Maps, Google Play, and Android. In fact, Gemini was trained on, and is served using, TPUs.

Last week, we announced Cloud TPU v5p, our most powerful, scalable, and flexible AI accelerator to date. TPU v5p is 4X more scalable than TPU v4 in terms of total available FLOPs per pod. Earlier this year, we announced the general availability of Cloud TPU v5e. With 2.7X inference-performance-per-dollar improvements in an industry benchmark over the previous generation TPU v4, it is our most cost-efficient TPU to date.

We also announced our AI Hypercomputer, a groundbreaking supercomputer architecture that employs an integrated system of performance-optimized hardware, open software, leading ML frameworks, and flexible consumption models. AI Hypercomputer has a wide range of accelerator options, including multiple classes of 5th generation TPUs and NVIDIA GPUs.

Gemini is also our most flexible model yet — able to efficiently run on everything from data centers to mobile devices. Gemini Ultra is our largest and most capable model for highly complex tasks, while Gemini Pro is our best model for scaling across a wide range of tasks, and Gemini Nano is our most efficient model for on-device tasks. Its state-of-the-art capabilities will significantly enhance the way developers and enterprise customers build and scale with AI.

Today, we also introduced an upgraded version of our image model, Imagen 2, our most advanced text-to-image technology. This latest version delivers improved photorealism, text rendering, and logo generation capabilities so you can easily create images with text overlays and generate logos.

In addition, building on our efforts around domain-specific models with Med-PaLM, we are excited to announce MedLM, our family of foundation models fine-tuned for healthcare industry use cases. MedLM is available to allowlisted customers in Vertex AI, bringing customers the power of Google’s foundation models tuned with medical expertise.