GCP – Symphony handles millions of messages with Bigtable, saving 40% on database costs & increasing resiliency

Post Content

Read More for the details.

When meeting with Communications Service Providers (CSPs) around the world, there is one clear theme: artificial intelligence (AI) is changing the game, unlocking significant value across the CSP value chain, and changing how CSPs and their customers interact with technology.

MWC’24 is no exception, and we are thrilled to be back in Barcelona this week as the wider industry ecosystem comes together to reimagine the future of the industry, with AI-driven innovation at its core.

It’s been an exciting 12 months since last year’s event — we have been collaborating with CSP customers and partners around the world to digitally transform their business with data and AI. For example:

Accenture, the leading professional services firm, and Google Cloud committed to establishing a Joint Generative AI Center of Excellence, with access to over 130 models through Model Garden on Vertex AI, and the ability to complete rapid prototyping of industry use cases.

Bell Canada is leveraging conversational analytics and Contact Center AI (CCAI) to improve the customer experience. To continue driving innovation and customer experience improvements, Bell Canada will explore generative AI across personalization, marketing, and customer service.

BT Group announced a new strategic security partnership with us, targeting a range of joint innovation opportunities.

KDDI will launch an initiative providing services that enrich the lives of customers by enabling them to experience gen AI in their daily lives. KDDI will combine its metaverse and Web3 service αU (Alpha-U) with gen AI, including Google’s high-performance gen AI model Gemini Pro and Immersive Stream for XR.

Maxis has expanded its strategic collaboration with Google Cloud by integrating enterprise-grade gen AI into its internal workflows and customer service offerings. It is leveraging Duet AI for Developers for AI-powered coding assistance in natural language, and multimodal capabilities from the Gemini models on Vertex AI. This builds on Maxis’ existing use of Google Cloud’s unified data management and machine learning development platforms, empowering it to further streamline its operations, more quickly bring new digital services to market, and create more differentiated customer experiences.

Nokia launched its AVA Data Suite to run on Google Cloud and help CSPs standardize data and facilitate the development of AI and machine learning (ML) software applications, including secure autonomous operations and predictive network maintenance.

Smart Communications, Inc. (Smart), the mobile services arm of PLDT Group, has adopted Google Cloud’s Telecom Subscriber Insights, which will help inform the development of services that equip Filipinos to better understand, manage, and optimize their mobile data consumption, and also enable Smart to design more inclusive mobile services for subscribers by identifying both patterns and gaps in connectivity.

TM Forum and Google Cloud, along with Accenture, Deutsche Telekom, Reliance Jio, Orange, and Vodafone, came together to launch the TM Forum Innovation Hub, with a specific focus during the pilot phase on the potential of gen AI and large language models for CSPs, as well as on accelerating the Open Digital Architecture (ODA).

And to support our CSP customers as they build, deploy, and operate hybrid cloud-native networks:

We are announcing the general availability of Google Cloud’s Telecom Network Automation, which provides intent-driven automation for telecom networks based on the Nephio open-source project. The product is now available from the Google Cloud console.

Ericsson has expanded its successful collaboration with Google Cloud to develop an Ericsson Cloud RAN solution on Google Distributed Cloud that offers integrated automation and orchestration, and leverages AI/ML for additional benefits to CSPs.

“Orange sees GDC Edge with Dataproc and Data Analytics as a perfect solution to analyze customer and network data in a secure on-premise environment across our 26 countries. Google GDC Edge offers Orange an elegant extension from public cloud to on-premise of our tools, employee skills, and operating model.” (Steve Jarrett, Chief AI Officer, Orange)

Telefónica Germany is partnering with Google Cloud and Nokia to integrate enhanced energy efficiency into their networks.

Gen AI heralds the next pivotal moment in our industry’s AI journey — and will be front and center at MWC’24.

Advancing the innovation of the future AI-enabled telco is Gemini, Google’s largest and most capable AI model. Earlier this month we announced the next chapter of our Gemini era, introducing Gemini Advanced. We are also bringing Gemini’s capabilities to more products including Workspace and Google Cloud — helping companies to boost productivity, developers to code faster, and organizations to protect themselves from cyber attacks, along with other benefits.

And most recently we announced our next-generation model Gemini 1.5, which delivers dramatically enhanced performance with a breakthrough in long-context understanding across modalities, as well as Gemma, a family of lightweight, state-of-the-art open models built from the same research and technology used to create the Gemini models.

As CSPs harness the full potential of gen AI to drive value across their organizations, gen AI assistants will play critical roles, augmenting human capabilities with powerful multimodal data analysis, pattern recognition, and recommendations, all leveraging historical CSP knowledge and using industry best practices to streamline processes and transform customer and employee experiences. We see four key areas where gen AI agents have a critical role to play:

Automating network operations

Telecommunication providers are undergoing a profound transformation, migrating towards cloud-native architectures and intent-based networking automation. This shift lays the foundation for the next generation of networks, characterized by enhanced AI capabilities and increased autonomy. Together, these advancements promise a future where networks can self-optimize, self-heal, and proactively adapt to changing conditions, delivering new levels of reliability and efficiency for telecom providers.

In this new phase of AI, we see gen AI agents having a tremendous opportunity to transform network planning, engineering, deployment, and operations. For example, they could provide engineer assistance via an accessible knowledge base to accelerate learning, generate and optimize network intent and policies, troubleshoot and provide recommendations, and help to quickly triage faults to ensure high network uptime.

Accelerating field services

As networks have become distributed, telecom field services have evolved drastically to maintain network quality. Early services focused on reactive responses — technicians were dispatched only after a failure caused an outage. Later, preventative maintenance improved things somewhat, but relied on scheduled work and workforce expertise. Today, gen AI is helping to unlock significant value for CSPs to improve field operations, which in turn can help them lower costs and increase customer satisfaction.

Multimodal gen AI agents — with their ability to understand and reason about text, images, video, and code — are unleashing exciting possibilities for improving field operations. For example, gen AI agents can help CSPs unlock new value across workforce tasks, skillset gaps, technician assist (e.g. multimodal interactions for troubleshooting), and ticket annotation.

Improving customer care

The use of AI in contact centers has increased both customer and employee satisfaction, providing customers with fast, helpful answers from well-trained virtual agents, as well as from human agents who receive assistance from AI-powered tools. This is driving reductions in call resolution time and average handling time, ultimately resulting in lower call queues and improved net promoter score (NPS).

Gen AI has widened virtual agents’ scope, encompassing more of the customer user journey and driving not just operational efficiency, but also top-line revenue across sales (product recommendations), activation, and retention. Gen AI has also lowered the barrier to entry and time to value in the contact center in meaningful ways: more interactions can be automated in shorter periods of time, human agents can be trained and coached faster, and call insights can be observed and reacted to more effectively.

Transforming sales and marketing

Customer lifetime value starts by building customer experiences with highly personalized interactions that retain, upsell, and drive next best actions and new service offerings. This requires creating better campaigns with AI-driven segmentation, identifying customers with a high propensity for conversion, and generating targeted messages across channels. For example, integrated AI assistants can develop hyper-personalized experiences that allow the CSP to better engage with their customers, leveraging past buy propensities, customer experiences, issues, and call records.

Gen AI further enriches highly personalized outreach to customers through their smartphones. From offers to campaigns, CSPs can make that outreach more effective by generating rich content with text, images, and videos. For example, Google’s Imagen can create high-resolution images from users’ text prompts and experience patterns, in various styles aligned with the CSP’s brand guidelines. Better contextualization and content can be integrated to drive richer advertising, including Google Ads.

We look forward to seeing you on the ground in Barcelona! Be sure to join Google DeepMind CEO Demis Hassabis and renowned tech journalist Steven Levy on 26 February from 3:15-3:45pm on the MWC Main Stage for a fireside chat around how Artificial Intelligence can change the world, from tackling major scientific problems like energy, climate change and drug discovery, to transforming the way people create, communicate, and do business.

We’re also excited to bring a dynamic demo showcase to this year’s MWC, providing real-world examples of how Google Cloud and our gen AI portfolio are driving the next phase of cloud transformation and innovation for CSPs, including use cases across network transformation, AI-powered customer experiences, and monetization. Be sure to reach out to your dedicated Google Cloud representative to schedule a demo tour.

In addition, we’re proud to collaborate with our ecosystem of partners, who will showcase CSP-focused AI use cases, including gen AI, in their booths. This impressive lineup of Global System Integrators, ISV Partners, and Network Equipment Providers includes Accenture, Anritsu, Capgemini, Carto, Datatonic, Dell, Ericsson, Intel, Nokia, Optiva, Radcom, Subex, Unacast, Viavi Solutions, and more.

For more information on how Google Cloud is partnering with CSPs on their journey to the AI-enabled telco, click here.

Securing cloud credentials has emerged as a challenge on the scale of Moby Dick: It presents an enormous problem, and simple solutions remain elusive. Credential security problems are also widespread: More than 69% of cloud compromises were caused by credential issues, including weak passwords, no passwords, and exposed APIs, according to Google Cloud’s Q3 2023 Threat Horizons Report. However, unlike Moby Dick, this story may have a happy ending. Organizations can get started on reducing the risk they face from credential-related compromises by protecting service accounts.

Service accounts are essential tools in cloud management. They make API calls on behalf of applications, and they rely on IAM roles to make those calls. They also make an appealing target for attackers seeking initial access to a cloud environment.

“Nearly 65% of alerts across organizations were related to risky use of service accounts. These accounts have associated permissions where, if compromised, could lead to attackers gaining persistence and subsequently using this access for privilege escalation in cloud environments,” we wrote in the report.

This assessment follows a similar conclusion from our Q1 2023 Threat Horizons Report, where we detailed how attackers can abuse service account keys, and what organizations can do to stop them. That same report noted that 68% of service accounts had overly permissive roles, that service account keys are often found hardcoded in public repositories, and that in 42% of leaked key incidents, project owners did not take corrective action after Google attempted to contact them.

Here are some mitigation tips that can help you avoid creating service account keys at scale, monitor for key usage, and respond to alerts quickly. We strongly encourage you to assess all of the techniques highlighted in the Q1 2023 report.

This section assumes that you have the necessary permissions to manage Organization Policies, guardrails that set broad yet unbendable limits for cloud engineers before they start creating and working with resources. To learn more, see creating and managing organization policies.

One of the best practices for managing service account keys is to use Organization Policy constraints to prevent creating new service account keys, and allow exceptions only for projects that have demonstrated that they cannot use a more secure alternative.

The easiest way to enforce this Organization Policy constraint (iam.disableServiceAccountKeyCreation) is in your Google Cloud console.

Before proceeding, ensure that you are logged in to the correct Google account. If you want to enforce this constraint across the entire Organization, be sure to select the Organization in the Resource Manager explorer. Then click “Manage Policy” and enforce the policy.

Subsequently, any time a user attempts to create a service account key, the user will be presented with an error and the key will not be created.
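If you prefer to manage this constraint as code rather than through the console, the same setting can be expressed as a policy document. The sketch below assumes the Organization Policy v2 format (a `name` of the form `organizations/ORG_ID/policies/CONSTRAINT` and a `spec` with a single boolean rule); the organization ID is a placeholder and `build_key_creation_policy` is a hypothetical helper, not part of any Google library.

```python
import json


def build_key_creation_policy(org_id: str) -> dict:
    """Build an Org Policy (v2-style) document that enforces
    iam.disableServiceAccountKeyCreation for a whole organization."""
    if not org_id.isdigit():
        raise ValueError("org_id should be the numeric organization ID")
    return {
        "name": f"organizations/{org_id}/policies/iam.disableServiceAccountKeyCreation",
        "spec": {
            # One boolean rule that switches the constraint on. Projects that
            # need an exception would carry their own, separate policy.
            "rules": [{"enforce": True}],
        },
    }


if __name__ == "__main__":
    # Placeholder organization ID; replace with your own.
    print(json.dumps(build_key_creation_policy("123456789012"), indent=2))
```

Saved to a file, a document like this can then be applied with `gcloud org-policies set-policy FILE`; keeping it in version control also gives you a reviewable record of who changed the guardrail and when.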

Now that service account key creation is disabled, the next step is to ensure that no one has altered this policy and covertly created new service account keys.

You can find evidence of policy changes using the Google Cloud Operations Suite. These next two examples assume that all logs are stored in a centralized Google Cloud Project and that you have the required permissions to query logs and set alerts. To learn more, see:

Routing and Storage Overview

Collate and route organization-level logs to supported destinations

Build queries by using the Logging query language

Configure log-based alerts

Visit the Logs Explorer. Use the Refine scope button in the Action toolbar and select the Scope that represents the location of your Organization’s centralized logs.

Use the following query to find instances of the “iam.disableServiceAccountKeyCreation” Organization Constraint being changed:
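The original query is not reproduced here. As a stand-in, the sketch below assembles a Logging query language filter under stated assumptions: Org Policy changes land in Admin Activity audit logs, and they are recorded by either the Organization Policy API (`orgpolicy.googleapis.com`) or the older Resource Manager API (`cloudresourcemanager.googleapis.com`). Check those `serviceName` values against a real log entry in your environment before relying on the filter; `org_policy_change_query` is a hypothetical helper.

```python
def org_policy_change_query(constraint: str = "iam.disableServiceAccountKeyCreation") -> str:
    """Assemble a Logging query language filter for changes to one constraint."""
    clauses = [
        # Org Policy changes are administrative actions, so they are written
        # to the Admin Activity audit log.
        'logName:"cloudaudit.googleapis.com%2Factivity"',
        # Assumption: the change was made through one of these two APIs.
        'protoPayload.serviceName=("orgpolicy.googleapis.com" OR "cloudresourcemanager.googleapis.com")',
        # A bare quoted term is a global restriction: it matches the
        # constraint name in any field of the log entry.
        f'"{constraint}"',
    ]
    # Expressions on separate lines are implicitly combined with AND.
    return "\n".join(clauses)


if __name__ == "__main__":
    print(org_policy_change_query())
```

The printed filter can be pasted into the Logs Explorer query editor, and the same string can later serve as the filter of a log-based alert.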

Log entry of an Org Policy Change Affecting “iam.disableServiceAccountKeyCreation”

To create an alert based on this query, proceed to the “Create Alerts for Rapid Response” section.

Visit the Logs Explorer. Use the Refine scope button in the Action toolbar and select the Scope that represents the location of your Organization’s centralized logs.

Use the following query to find instances of a new service account key:
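Here too the original query is not preserved. A plausible equivalent filters IAM Admin Activity entries on the key-creation method. The method name `google.iam.admin.v1.CreateServiceAccountKey` is how IAM audit logs typically record this call, but treat it as an assumption and confirm it against an entry in your own logs. The sketch also includes a small hypothetical `is_key_creation` check for entries exported as JSON.

```python
CREATE_KEY_METHOD = "google.iam.admin.v1.CreateServiceAccountKey"


def sa_key_creation_query() -> str:
    """Logging query language filter for newly created service account keys."""
    return "\n".join([
        'logName:"cloudaudit.googleapis.com%2Factivity"',
        'protoPayload.serviceName="iam.googleapis.com"',
        f'protoPayload.methodName="{CREATE_KEY_METHOD}"',
    ])


def is_key_creation(entry: dict) -> bool:
    """Return True if an exported (JSON-decoded) log entry records key creation."""
    payload = entry.get("protoPayload") or {}
    return payload.get("methodName") == CREATE_KEY_METHOD


if __name__ == "__main__":
    print(sa_key_creation_query())
```

Because key creation should be impossible while the constraint is enforced, any match from this filter is worth treating as an incident rather than routine noise.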

Log entry demonstrating a new service account key was created

This log entry signifies that:

At some point, the Org Policy Constraint preventing service account key creation was disabled.

Someone created a new service account key.

The previous sections described how you can find instances of either new service account keys or Organization Policy Changes. To promote rapid and reactive response, create alerts so you may respond quickly to such events.

This section assumes you understand the following:

How Alerting Works

Create and Manage Notification Channels

Manage Incidents for Log-Based Alerts

Click “Create Alert”.

Name the alert and confirm that the logs to include in the alert match the query in the Logs Explorer. Click “Preview Logs” to confirm that the matching entries are the ones you expect. Set the notification frequency and autoclose duration.

Choose the notification channel. If you need to create a notification channel, follow these instructions.
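The console steps above map onto a Cloud Monitoring alert policy with a log-match condition. As a sketch, assuming the AlertPolicy JSON field names `conditionMatchedLog`, `alertStrategy.notificationRateLimit`, and `alertStrategy.autoClose`, the same settings look like this; the channel name is a placeholder and `build_log_alert_policy` is a hypothetical helper.

```python
import json


def build_log_alert_policy(name: str, log_filter: str, channel: str,
                           rate_limit_s: int = 300, autoclose_s: int = 1800) -> dict:
    """Sketch of a log-based alert policy in Cloud Monitoring AlertPolicy JSON form."""
    return {
        "displayName": name,
        "combiner": "OR",
        "conditions": [
            {
                "displayName": f"{name} (log match)",
                # The condition fires whenever a log entry matches this query.
                "conditionMatchedLog": {"filter": log_filter},
            }
        ],
        "alertStrategy": {
            # "Notification frequency" in the console; required for log-based alerts.
            "notificationRateLimit": {"period": f"{rate_limit_s}s"},
            # "Autoclose duration" in the console.
            "autoClose": f"{autoclose_s}s",
        },
        "notificationChannels": [channel],
    }


if __name__ == "__main__":
    policy = build_log_alert_policy(
        "Service account key created",
        'protoPayload.methodName="google.iam.admin.v1.CreateServiceAccountKey"',
        "projects/my-logging-project/notificationChannels/1234567890",  # placeholder
    )
    print(json.dumps(policy, indent=2))
```

Defining the alert this way makes it easy to create one policy per query (Org Policy changes and new keys) from the same template.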

Below is an example of an alert, sent via email, listing an incident triggered after I created a service account key.

Clicking “View Incident” directs me to the Alerting page:

Clicking on the incident directs me to a view listing:

the condition

the log query

the set of logs matching the query

the date and time the incident was triggered

Incident Details

In examining the risks of using service account keys, enforcing Organization Policies that limit service account key creation at scale, and using continuous monitoring to detect policy violations, we have shown that you can better manage one of the biggest potential risks in your environment.

We highly encourage everyone to read “Build a Collaborative Incident Management Process,” which can help your organization operationalize responses to this and other types of incidents. Besides, who needs the drama of chasing a great white whale? Ditching service account keys is a much simpler way to boost your cloud security.

Private Service Connect is a Cloud Networking offering that creates a private and secure connection from your VPC networks to a service producer, and is designed to help you consume services faster, protect your data, and simplify service management. However, like all complex networking setups, sometimes things don’t work as planned. In this post, you will find useful tips that can help you to tackle issues related to Private Service Connect, even before reaching out to Cloud Support.

Before we get into the troubleshooting bits, let’s briefly discuss the basics of Private Service Connect. Understanding your setup is key for isolating the problem.

Private Service Connect is similar to private services access, except that the service producer VPC network doesn’t connect to your (consumer) network using VPC network peering. A Private Service Connect service producer can be Google, a third-party, or even yourself.

When we talk about consumers and producers, it’s important to understand what type of Private Service Connect is configured on the consumer side and what kind of managed service it intends to connect with on the producer side. Consumers are the ones who want the services, while producers are the ones who provide them. The various types of Private Service Connect configurations are:

Private Service Connect endpoints are configured as forwarding rules that are allocated an IP address and mapped to a managed service by targeting a Google APIs bundle or a service attachment. These managed services can be diverse, ranging from global Google APIs to Google Managed Services, third-party services, and even in-house, intra-organization services.

When a consumer creates an endpoint that references a Google APIs bundle, the endpoint’s IP address is a global internal IP address – the consumer picks an internal IP address that’s outside all subnets of the consumer’s VPC network and connected networks.

When a consumer creates an endpoint that references a service attachment, the endpoint’s IP address is a regional internal IP address in the consumer’s VPC network – from a subnet in the same region as the service attachment.

Private Service Connect backends are configured with a special network endpoint group (NEG) of type Private Service Connect, which refers to a locational Google API or to a published service's service attachment. A service attachment is your link to a compatible producer load balancer.

Private Service Connect interfaces are a special type of network interface that allows service producers to initiate connections to service consumers.

Network Address Translation (NAT) is the underlying technology that powers Private Service Connect, implemented in Google Cloud’s software-defined networking stack, Andromeda.

Let’s break down how Private Service Connect works when accessing a published service based on an internal passthrough Network Load Balancer through an endpoint. In this scenario, you set up a Private Service Connect endpoint on the consumer side by configuring a forwarding rule that targets a service attachment. This endpoint has an IP address within your VPC network.

When a VM instance in the VPC network sends traffic to this endpoint, the host’s networking stack applies client-side load balancing to send the traffic to a destination host based on location, load, and health.

The packets are encapsulated and routed through Google Cloud’s network fabric.

At the destination host, the packet processor applies Source Network Address Translation (SNAT) using the configured NAT subnet, and Destination Network Address Translation (DNAT) using the producer IP address of the service.

The packet is delivered to the VM instance serving as the load balancer’s backend.

All of this is orchestrated by Andromeda’s control plane; with a few exceptions, there are no middle box or intermediaries involved in this process, enabling you to achieve line rate performance. For additional details, see Private Service Connect architecture and performance.
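To make the SNAT/DNAT step at the destination host concrete, here is a deliberately simplified toy model. It assumes one NAT address per consumer source address and leaves ports untouched, which real port allocation does not; it is meant only to show which header fields change, not how Andromeda implements translation. `Packet` and `ToyPscNat` are hypothetical names.

```python
import ipaddress
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


class ToyPscNat:
    """Toy model of destination-host translation for one service attachment.

    SNAT: consumer source address -> an address from the producer's NAT subnet.
    DNAT: endpoint address -> the producer load balancer's IP address.
    """

    def __init__(self, nat_subnet: str, producer_ip: str):
        # Unallocated NAT addresses; iter() also covers /31 and /32 networks.
        self._free = iter(ipaddress.ip_network(nat_subnet).hosts())
        self._state = {}  # consumer source IP -> allocated NAT IP
        self.producer_ip = producer_ip

    def translate(self, pkt: Packet) -> Packet:
        nat_ip = self._state.get(pkt.src_ip)
        if nat_ip is None:
            try:
                nat_ip = str(next(self._free))
            except StopIteration:
                # Loosely mirrors the symptom of NAT resource exhaustion.
                raise RuntimeError("NAT subnet exhausted: packet dropped") from None
            self._state[pkt.src_ip] = nat_ip
        return replace(pkt, src_ip=nat_ip, dst_ip=self.producer_ip)


if __name__ == "__main__":
    nat = ToyPscNat("192.168.0.0/30", producer_ip="10.1.0.10")
    print(nat.translate(Packet("10.0.0.5", 40000, "10.0.0.100", 80)))
```

The model also hints at why the producer never sees the consumer's real address, and why an undersized NAT subnet shows up as dropped packets rather than as a configuration error.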

With this background, you should already be able to identify the main components where issues could occur: the source host, the network fabric, the destination host, and the control plane.

The Google Cloud console provides you with the following tools to troubleshoot most of the Private Service Connect issues that you might encounter.

Connectivity Tests is a diagnostics tool that lets you check connectivity between network endpoints. It analyzes your configuration and, in some cases, performs live data-plane analysis between the endpoints.

Configuration Analysis supports Private Service Connect: Consumers can check connectivity from their source systems to PSC endpoints (or consumer load balancers using PSC NEG backends), while producers can verify that their service is operational for consumers.

Live Data Plane Analysis supports both Private Service Connect endpoints for published services and Google APIs: Verify reachability and latency between hosts by sending probe packets over the data plane. This feature provides baseline diagnostics of latency and packet loss. In cases where Live Data Plane Analysis is not available, consumers can coordinate with a service producer to collect simultaneous packet captures at the source and destination using tcpdump.

Cloud Logging is a fully managed service that allows you to store, search, analyze, monitor, and alert on logging data and events.

Audit logs allow you to monitor Private Service Connect activity.

Use them to track intentional or unintentional changes to Private Service Connect resources, find any errors or warnings and monitor changes in connection status for the endpoint.

These are mostly useful when troubleshooting issues during the setup or updates in the configuration.

In this example, you can track endpoint connection status changes (pscConnectionStatus) by examining audit logs for your GCE forwarding rule resource:
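The example query itself was not preserved. One hedged way to review status transitions offline is to export the forwarding rule's audit entries as JSON (for example with `gcloud logging read` and `--format=json`, filtered on `resource.type="gce_forwarding_rule"`) and scan them. Because the exact field path of `pscConnectionStatus` inside the entry is not stated here, the sketch searches the whole entry recursively; both function names are hypothetical.

```python
from typing import Any, Iterator


def find_key(obj: Any, key: str) -> Iterator[Any]:
    """Yield every value stored under `key` anywhere in a nested JSON object."""
    if isinstance(obj, dict):
        for k, v in obj.items():
            if k == key:
                yield v
            yield from find_key(v, key)
    elif isinstance(obj, list):
        for item in obj:
            yield from find_key(item, key)


def psc_status_changes(entries: list) -> list:
    """Return (timestamp, status) pairs each time pscConnectionStatus changes.

    Sorting on the timestamp string assumes RFC 3339 timestamps in the same
    time zone (for example, all ending in "Z").
    """
    changes = []
    last = None
    for entry in sorted(entries, key=lambda e: e.get("timestamp", "")):
        for status in find_key(entry, "pscConnectionStatus"):
            if status != last:
                changes.append((entry.get("timestamp"), status))
                last = status
    return changes
```

A transition such as PENDING to REJECTED, or ACCEPTED to CLOSED, is usually the quickest pointer to whether the producer's accept list or a consumer-side change is involved.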

Producers can enable VPC Flow Logs on the target load balancer subnet to monitor traffic entering their backend VM instances.

Keep in mind that VPC Flow Logs are sampled and may not capture short-lived connections. To get more detailed information, run a packet capture using tcpdump.

Another member of the observability stack, Cloud Monitoring can help you to gain visibility into the performance of Private Service Connect.

Producer metrics to monitor Published services.

Take a look at the utilization of service attachment resources like NAT ports, connected forwarding rules and connections by service attachment ID to correlate with connectivity and performance issues.

See if there are any dropped packets at the producer side (Preview feature).

The received packets dropped count is related to NAT resource exhaustion.

The sent packets dropped count indicates that a service backend is sending packets to a consumer after the NAT translation state has expired.

When this occurs, make sure you are following the NAT subnets recommendations. A packet capture could bring more insights on the nature of the dropped packets.

Using this MQL query, producers can monitor NAT subnet capacity for a specific service attachment:
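The MQL query was not preserved in this copy. As a complementary back-of-the-envelope check, the sketch compares a count of NAT addresses in use (which you would read from the service attachment metrics) with the size of the NAT subnet. It assumes 4 reserved addresses per subnet, as for ordinary Google Cloud subnets; whether that holds for your Private Service Connect NAT subnet is an assumption to verify. Both helpers are hypothetical.

```python
import ipaddress


def nat_subnet_capacity(cidr: str, reserved: int = 4) -> int:
    """Addresses available in a NAT subnet, minus an assumed reserved count."""
    return ipaddress.ip_network(cidr).num_addresses - reserved


def nat_utilization(used: int, cidr: str, reserved: int = 4) -> float:
    """Fraction of the NAT subnet in use (0.0 to 1.0)."""
    capacity = nat_subnet_capacity(cidr, reserved)
    if capacity <= 0:
        raise ValueError(f"{cidr} leaves no usable addresses")
    return used / capacity


if __name__ == "__main__":
    # Hypothetical numbers: 126 addresses in use in a /24 NAT subnet.
    print(f"{nat_utilization(126, '10.100.0.0/24'):.0%}")
```

If utilization trends toward 100%, adding another NAT subnet to the service attachment is generally preferable to waiting for received-packet drops to appear.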

For more information, see Monitor Private Service Connect connections.

TIP: Be proactive and set alerts to inform you when you are close to exhausting a known limit (including Private Service Connect quotas).

In this example, you can use this MQL query to track PSC Internal LB Forwarding Rules quota usage.
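The MQL query is not reproduced here, but the alerting logic behind such a query is simple: fire when usage stays above a fraction of the limit for a sustained period, so a single spike does not page anyone. The sketch below shows that logic on a list of usage samples; the 80% threshold and three-sample window are illustrative defaults, not recommendations from the source, and `quota_alert` is a hypothetical helper.

```python
def quota_alert(samples: list, limit: float, threshold: float = 0.8,
                consecutive: int = 3) -> bool:
    """Return True if usage stayed at or above threshold * limit for the
    last `consecutive` samples. `samples` is ordered oldest first."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    if len(samples) < consecutive:
        return False
    return all(s / limit >= threshold for s in samples[-consecutive:])


if __name__ == "__main__":
    # Hypothetical PSC forwarding rule counts against a quota of 50.
    print(quota_alert([10, 40, 41, 45], limit=50))
```

In Cloud Monitoring, the same idea corresponds to a threshold condition with a duration, which is what makes the alert proactive rather than reactive.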

Consult the Google Cloud documentation to learn about the limitations and supported configurations.

Follow the Private Service Connect guides.

Especially for new deployments, it is common to misconfigure a component or to find that it is not yet compatible or supported. Ensure that you have gone through the right configuration steps, and review the limitations and compatibility matrix.

Take a look at the VPC release notes.

See if there are any known issues related to Private Service Connect, and look for any new features that could have introduced unwanted behavior.

Selecting the right tool depends on the specific situation you encounter and where you are in the life cycle of your Private Service Connect journey. Before you start, gather the consumer and producer project details, and confirm that this is in fact a Private Service Connect issue and not a private services access problem.

Generally, you can face issues during setup or update of any related component or additional capability, or the issues could be present during runtime, when everything is configured but you run into connectivity or performance issues.

Make sure that you are following the configuration guide and you have an understanding of the scope and limitations.

Check for any error messages or warnings in the Logs Explorer.

Verify that the setup is compatible and supported as per the configuration guides.

See if any related quota, such as the Private Service Connect forwarding rules quota, has been exceeded.

Confirm whether an organization policy could be preventing the configuration of Private Service Connect components.

Isolate the issue to the consumer or the producer side of the connection.

If you are on the consumer side, check whether your endpoint or backend shows as accepted in the connection status on the Private Service Connect page. Otherwise, on the producer side, review the accept/reject connection lists and the connection reconciliation setup.

If your endpoint is unreachable, try connecting directly to the endpoint IP address to bypass DNS resolution, and run a Connectivity Test to validate routes and firewall rules from the source IP address to the PSC endpoint as the destination. On the service producer side, check whether the producer service is reachable within the producer VPC network, and from an IP address in the Private Service Connect NAT subnet.

If there is a performance issue such as network latency or packet drops, check whether Live Data Plane Analysis is available to establish a baseline and isolate an issue with the application or service. Also, check the Metrics Explorer for connection or port exhaustion and packet drops.
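Bypassing DNS can be as simple as opening a TCP connection straight to the endpoint's IP address from a consumer VM. The sketch below does that with the standard library and refuses hostnames, so a DNS problem cannot masquerade as a connectivity problem; the function name is hypothetical, and the endpoint IP and port you pass in are your own.

```python
import ipaddress
import socket


def probe_endpoint(ip: str, port: int, timeout: float = 3.0) -> tuple:
    """Open a TCP connection directly to an IP address, with no DNS lookup.

    Returns (reachable, detail).
    """
    ipaddress.ip_address(ip)  # raises ValueError if given a hostname
    try:
        with socket.create_connection((ip, port), timeout=timeout):
            return True, "connected"
    except socket.timeout:
        # Silent drops usually point at routes, firewall rules, or the producer.
        return False, "timed out"
    except OSError as exc:
        # For example, connection refused: something answered but rejected it.
        return False, f"failed: {exc}"
```

If the IP works but the hostname does not, focus on DNS records; if both fail, move on to a Connectivity Test and the producer-side checks above.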

Once you have pinpointed and analyzed the issue, you may need to reach out to Cloud Support for further assistance. To facilitate a smooth experience, be sure to explain your needs, clearly describe the business impact, and provide enough context with all the information you collected.

Spanner has emerged as a compelling choice for enterprises seeking to address the limitations of traditional databases. With the general availability and increasing popularity of Spanner's PostgreSQL dialect, there is growing interest in migrating from PostgreSQL to Spanner. Migrating from a PostgreSQL database to Spanner (PostgreSQL dialect) can bring significant benefits, including:

Horizontal scalability

Strong consistency

99.999% availability offering

Familiar syntax and semantics for PostgreSQL developers

Elimination of maintenance tasks like VACUUMing, tuning shared buffers and managing connection pooling

While the benefits of Spanner are undeniable, migrating a production database can be a daunting task. Organizations fear downtime and disruptions to operations. Fortunately, with careful planning and the Spanner Migration Tool (SMT), it is possible to migrate from PostgreSQL to Spanner with minimal downtime.

The complete migration process is documented in the official guide. This blog post demonstrates a minimal downtime migration of a sample application.

Follow this guide to create a Cloud SQL for PostgreSQL database (named example) and this guide to set up a destination Spanner instance.

This demo migration will be performed using Cloud Shell. Launch Cloud Shell and authenticate:

The sample app is available in github:

This app works with a schema of three tables: singers, albums and songs. It performs periodic data inserts, to simulate a production like traffic.

Configure the app to connect to PostgreSQL. Gather the instance’s connection name from the Cloud SQL instance overview page. Assign the correct values below and run:
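The exact variable names the sample app expects are defined in its repository and are not reproduced here. One small thing worth checking before assigning them is the connection name itself, which follows the `PROJECT_ID:REGION:INSTANCE_ID` pattern (domain-scoped project IDs contain an extra colon). The helper below is a hypothetical sanity check, not part of the sample app.

```python
def parse_connection_name(name: str) -> dict:
    """Split a Cloud SQL instance connection name into its parts.

    Splitting from the right keeps domain-scoped project IDs
    (for example "example.com:my-project") intact.
    """
    parts = name.rsplit(":", 2)
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected PROJECT_ID:REGION:INSTANCE_ID, got {name!r}")
    project, region, instance = parts
    return {"project": project, "region": region, "instance": instance}


if __name__ == "__main__":
    print(parse_connection_name("my-project:us-central1:my-postgres"))
```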

Start the dockerized application:

This should insert records into Cloud SQL PostgreSQL. After verification, stop the application:

Follow this quickstart guide to launch SMT’s web UI and connect to Spanner by entering the project ID and instance ID of the Spanner instance created earlier.

To allow SMT to connect to the PostgreSQL database, follow this IP allowlisting guide.

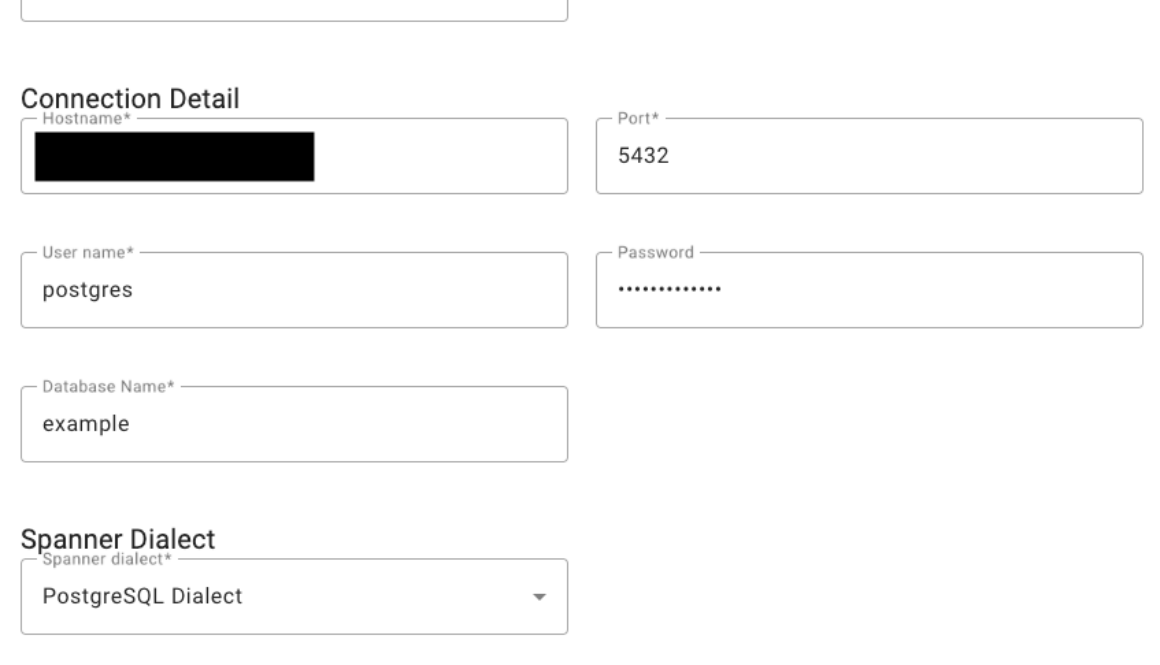

To connect to the source database, enter:

Hostname: PostgreSQL instance’s Public or Private IP

Port: 5432

PostgreSQL Database Name: example

Spanner Dialect: PostgreSQL

In the “Configure Schema” view, clicking on each table shows the schema for PostgreSQL and Spanner in a side-by-side comparison.

Spanner tables albums and songs can leverage interleaving for better query optimization. This can be done through the “INTERLEAVE” tab.

Next, plan for all the issues displayed by SMT to ensure a smooth migration.

In Spanner all tables need a primary key. The singers table had no primary key, but it had a UNIQUE constraint on singer_id. SMT cleverly migrated that column to be our primary key.

Some columns have SERIAL data types (for IDs). Since Spanner does not support this, we can use SEQUENCEs. Spanner SEQUENCEs are not monotonically increasing to prevent hotspotting. We will need to manually create those later.

Spanner only supports 8-byte integers, so int4/SERIAL columns were migrated to int8. This will affect how the application is refactored later.
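The SEQUENCE and int8 points are connected. A sequence that avoids hotspots spreads consecutive values across the key space, and those values quickly leave the 32-bit range. The sketch below illustrates the idea by bit-reversing the low 63 bits of a counter; it is a conceptual model of a bit-reversed positive sequence, not Spanner's exact algorithm, and `bit_reverse_positive` is a hypothetical helper.

```python
INT4_MAX = 2**31 - 1
INT8_MAX = 2**63 - 1


def bit_reverse_positive(counter: int) -> int:
    """Reverse the low 63 bits of `counter`, yielding a positive 64-bit value."""
    if not 0 < counter <= INT8_MAX:
        raise ValueError("counter must be in 1..2**63-1")
    result = 0
    for _ in range(63):
        result = (result << 1) | (counter & 1)  # move the lowest bit to the top
        counter >>= 1
    return result


if __name__ == "__main__":
    for n in range(1, 5):
        value = bit_reverse_positive(n)
        # Consecutive counters land far apart, and none fit in an int4 column.
        print(n, value, value > INT4_MAX)
```

This is why application code that stored IDs in 32-bit types needs to move to 64-bit types when it starts reading sequence-generated keys from Spanner.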

We can remove the identified redundant index by selecting it in the songs table and skipping its creation.

With a plan to solve all issues at hand, save the work by clicking “Save Session”. Continue by clicking on “Prepare Migration”.

At first, we will only migrate the schema to validate our application for Spanner. Enter:

Migration mode: Schema

Spanner database: example

Click on “Migrate”. We will get a link to the created database on completion.

Finally, apply the schema updates that weren’t automated by SMT. Run statements from this file on the migrated database. You can do it through Spanner Studio.

We needed to do some minor updates to have our application working with Spanner, including:

Configuring PGAdapter for converting the PostgreSQL wire-protocol into its Spanner equivalent.

Updating queries, because Spanner supports jsonb in lieu of the json type.

You can learn more about these updates here.

To test the application against Spanner, we configure it first:

We then start it:

After verifying that the application has inserted a few rows successfully, we can stop it and delete the sample data.

SMT orchestrates the “Minimal Downtime Migration” process by:

Loading initial data from the source database into the destination.

Applying a stream of change data capture (CDC) events.

SMT will set up:

Cloud Storage bucket to store CDC events while the initial data loading occurs.

Datastream job for bulk loading of CDC data and streaming incremental data to the Cloud Storage bucket.

Dataflow job to migrate CDC events into Spanner.
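The two phases SMT orchestrates (initial load, then CDC replay) can be modeled in a few lines of Python. This toy version assumes a hypothetical `ChangeEvent` shape, not Datastream's actual record format: it bulk-loads a snapshot and then replays change events in commit order. Inserts and updates are applied as upserts, so re-applying an event that the snapshot already reflects does not cause a conflict.

```python
from dataclasses import dataclass
from typing import Any, List, Optional


@dataclass(frozen=True)
class ChangeEvent:
    """Simplified CDC record (hypothetical shape, for illustration only)."""
    commit_ts: int              # source commit order
    op: str                     # "INSERT", "UPDATE" or "DELETE"
    key: Any                    # primary key value
    row: Optional[dict] = None  # new row image; None for deletes


def migrate(snapshot: dict, events: List[ChangeEvent]) -> dict:
    """Bulk-load a snapshot, then replay change events in commit order."""
    target = dict(snapshot)  # phase 1: initial data load (source left untouched)
    for ev in sorted(events, key=lambda e: e.commit_ts):  # phase 2: CDC replay
        if ev.op in ("INSERT", "UPDATE"):
            target[ev.key] = ev.row  # upsert keeps replay idempotent
        elif ev.op == "DELETE":
            target.pop(ev.key, None)  # deleting a missing row is a no-op
        else:
            raise ValueError(f"unknown op: {ev.op}")
    return target
```

Events may arrive out of order (as in the test data), which is why the sketch sorts by commit timestamp before applying them.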

To allow SMT to deploy this pipeline, make sure you have all the necessary permissions.

Next, follow this guide to set up CDC on the source PostgreSQL database. You can then restart the application, pointing it to PostgreSQL, to simulate live traffic:

Return to SMT, resume the saved session, and continue to “Prepare Migration”. Enter:

Migration Mode: Data

Migration Type: Minimal Downtime Migration

Input the source PostgreSQL database details (example) and the destination Spanner database (example).

Input the connection profile for the source database (from the PostgreSQL CDC configuration) and follow the IP allowlisting instructions.

Finally, set up the target connection profile and click on “Migrate” to start the migration.

SMT generates useful links for monitoring the migration.

Stop the application that is using PostgreSQL:

Wait for Spanner to catch up. Once Dataflow’s backlog reaches zero, switch to the Spanner application:
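The cutover step can be scripted as a polling loop. In this sketch, `get_backlog` is a hypothetical callable you would implement yourself (for example, by reading the Dataflow job's backlog metric); the function only decides when it is safe to switch, and returns False if the backlog does not drain before the timeout.

```python
import time
from typing import Callable


def wait_for_zero_backlog(
    get_backlog: Callable[[], int],
    poll_seconds: float = 30.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll a backlog metric until it reaches zero or the timeout expires.

    Returns True when it is safe to switch the application to Spanner.
    `sleep` is injectable so the loop can be tested without waiting.
    """
    waited = 0.0
    while waited <= timeout_seconds:
        if get_backlog() == 0:
            return True
        sleep(poll_seconds)
        waited += poll_seconds
    return False
```

Returning a boolean rather than switching traffic directly keeps the cutover decision in your own deployment tooling.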

With that we successfully completed our demo app migration using SMT!

Now you can click on “End Migration” and clean up the jobs.

Through careful planning and utilizing SMT, we can minimize downtime during the migration to Spanner, ensuring an efficient transition.


Imagen 2 on Vertex AI brings Google’s state-of-the-art generative AI capabilities to application developers. With Imagen 2 on Vertex AI, application developers can build next-generation AI products that transform their users’ imagination into high-quality visual assets in seconds.

With Imagen, you can:

Generate novel images using only a text prompt (text-to-image generation).

Edit an entire uploaded or generated image with a text prompt.

Edit only parts of an uploaded or generated image using a mask area you define.

Upscale existing, generated, or edited images.

Fine-tune a model with a specific subject (for example, a specific handbag or shoe) for image generation.

Get text descriptions of images with visual captioning.

Get answers to a question about an image with Visual Question Answering (VQA).

In this blog post, we will show you how to use the Vertex AI SDK to access Imagen 2’s image generation feature. This blog is designed to be a code-along tutorial, so you can code along as you read.

Before you get started, please ensure that you have satisfied the following criteria:

Have an existing Google Cloud project or create a new one.

Set up a billing account for the Google Cloud project.

Enable the Vertex AI API on the Google Cloud project.

To use Imagen 2 through the SDK, run the following command in the preferred Python environment to install the Vertex AI SDK:

Restarting the runtime is recommended so that the installed packages are picked up. If you encounter any issues, check this installation guide for troubleshooting. Once the packages are installed, use the following code to initialize Vertex AI with your own PROJECT_ID and LOCATION values:

Next, initialize the image generation model using the following code:

Now you are ready to start generating images with Imagen 2.

Imagen 2 can generate images from text descriptions. It is trained on a massive dataset of text and images, and it can interpret natural language to create images that are both accurate and creative.

The generate_images function can be used to generate images using text-prompts:

The response variable now holds the generated results. `generate_images` returns a response object that holds a list of images, so you index into it to get an individual image. To view the generated images, execute the following code:

You should see a generated image which is similar to the image shown below:

Image generated using Imagen 2 on Vertex AI from the prompt: A fantasy art of a beautiful ice princess

If you prefer to save the generated images, you can execute the following code:
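A minimal sketch for saving every image in a response, assuming `response` is the object returned by `generate_images` and that each entry in `response.images` offers the Vertex AI SDK's `save(location=...)` method. The helper name, output directory, and file-naming scheme are illustrative choices.

```python
from pathlib import Path
from typing import List


def save_images(response, out_dir: str = "generated", prefix: str = "image") -> List[Path]:
    """Save each image in a generate_images response as out_dir/prefix_N.png."""
    target = Path(out_dir)
    target.mkdir(parents=True, exist_ok=True)
    paths = []
    for i, image in enumerate(response.images):
        path = target / f"{prefix}_{i}.png"
        # Each generated image writes itself to a local file path.
        image.save(location=str(path))
        paths.append(path)
    return paths
```

In a notebook, calling `show()` on a single image (for example `response[0].show()`) displays it inline, while `save_images(response)` writes all of them to `./generated`.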

The generate_images function also accepts a few parameters which can be used to influence the generated images. The following section will explore those parameters individually.

The negative_prompt parameter can be used to omit features and objects from the generated images. For example, if you are generating a studio photo of a pizza and want to omit pepperoni and vegetables, just add “pepperoni” and “vegetables” to the negative prompt.

Image generated using Imagen 2 on Vertex AI from the prompt: A studio photo of a delicious pizza
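One way to keep negative prompts tidy is to build the keyword arguments from a list of things to leave out. `generation_kwargs` is a hypothetical helper; joining the items with commas is an assumption about a reasonable negative-prompt format, not a documented requirement.

```python
from typing import Optional, Sequence


def generation_kwargs(prompt: str, omit: Optional[Sequence[str]] = None, **params) -> dict:
    """Assemble keyword arguments for generate_images.

    Items in `omit` are joined into a single negative_prompt string,
    e.g. ["pepperoni", "vegetables"] -> "pepperoni, vegetables".
    Any other parameters (seed, number_of_images, ...) pass through unchanged.
    """
    kwargs = {"prompt": prompt, **params}
    if omit:
        # Strip stray whitespace from each item before joining.
        kwargs["negative_prompt"] = ", ".join(item.strip() for item in omit)
    return kwargs
```

For the pizza example, the result can be passed straight to the model: `model.generate_images(**generation_kwargs("A studio photo of a delicious pizza", omit=["pepperoni", "vegetables"]))`.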

The number_of_images parameter determines how many images are generated. It defaults to one, but you can set it to generate up to four images. However, the number of images returned may be lower than requested, because images that violate the usage guidelines are filtered out.
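Because filtered images are dropped from the response, it can help to compare what you asked for with what you received. This sketch assumes `model` behaves like the SDK's `ImageGenerationModel` and that the response exposes an `images` list; `generate_checked` is a hypothetical wrapper, and the four-image limit mirrors this post and may change.

```python
import warnings

MAX_IMAGES = 4  # limit described in this post; check the docs for the current value


def generate_checked(model, prompt: str, number_of_images: int = 1, **params):
    """Call model.generate_images and warn if fewer images come back than requested."""
    if not 1 <= number_of_images <= MAX_IMAGES:
        raise ValueError(f"number_of_images must be between 1 and {MAX_IMAGES}")
    response = model.generate_images(
        prompt=prompt, number_of_images=number_of_images, **params
    )
    returned = len(response.images)
    if returned < number_of_images:
        # Images that violate the usage guidelines are filtered out of the response.
        warnings.warn(
            f"requested {number_of_images} images but received {returned}; "
            "some may have been filtered"
        )
    return response
```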

The seed parameter controls the randomness of the image generation process; fixing it makes the generated images deterministic. If you want the model to generate the same set of images every time, send the same seed number with each request.

For example, if you used a seed number to generate four images, using the same seed number with the exact same parameters will generate the same set of images, although the ordering of the images might not be the same. Run the following code multiple times and observe the generated images:
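To check that a fixed seed reproduces the same set of images in possibly different order, you can compare the saved files as an unordered multiset of hashes. This is a hypothetical verification helper. Byte-level comparison assumes both runs saved their images the same way, so treat a mismatch as a reason to look closer rather than as proof of non-determinism.

```python
import hashlib
from collections import Counter
from pathlib import Path


def fingerprints(paths) -> Counter:
    """Count SHA-256 digests of saved image files, ignoring their order."""
    return Counter(hashlib.sha256(Path(p).read_bytes()).hexdigest() for p in paths)


def same_image_set(run_a, run_b) -> bool:
    """True if two runs produced the same images, in any order."""
    return fingerprints(run_a) == fingerprints(run_b)
```

A `Counter` is used instead of a `set` so that duplicate images within a run are still counted.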

By setting the language parameter, you can use non-English image prompts to generate images. English (en) is the default value. The following values can be used to set the text prompt language:

Chinese (simplified) (zh/zh-CN)

Chinese (traditional) (zh-TW)

Hindi (hi)

Japanese (ja)

Korean (ko)

Portuguese (pt)

Spanish (es)

To demonstrate that, try executing the code below with a text prompt written in Korean: “A modern house on a cliff with reflective water, natural lighting, professional photo.”

Image generated using Imagen 2 on Vertex AI from the prompt: 물 반사, 자연 채광, 전문적인 사진, 절벽 위의 현대적인 집 (water reflection, natural lighting, professional photo, modern house on a cliff)
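To catch typos in language codes before a request is sent, you can validate them against the list of supported languages given earlier. `check_language` is a hypothetical helper, and the mapping reflects only the languages listed in this post, so check the documentation for the current set.

```python
# Prompt languages listed in this post (may not be exhaustive or current).
SUPPORTED_LANGUAGES = {
    "en": "English",
    "zh": "Chinese (simplified)",
    "zh-CN": "Chinese (simplified)",
    "zh-TW": "Chinese (traditional)",
    "hi": "Hindi",
    "ja": "Japanese",
    "ko": "Korean",
    "pt": "Portuguese",
    "es": "Spanish",
}


def check_language(code: str = "en") -> str:
    """Return the code unchanged if supported, otherwise raise ValueError."""
    if code not in SUPPORTED_LANGUAGES:
        raise ValueError(
            f"unsupported prompt language {code!r}; "
            f"expected one of {sorted(SUPPORTED_LANGUAGES)}"
        )
    return code
```

For the Korean example, the call might look like `model.generate_images(prompt=korean_prompt, language=check_language("ko"))`, where `korean_prompt` holds the prompt text.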

Head over to the Imagen documentation and the API reference page to learn more about Imagen 2’s features. Additionally, the concepts presented in this blog post can be accessed in the form of a Jupyter Notebook in our Generative AI GitHub repository, providing an interactive environment for further exploration. To keep up with our latest AI news, be sure to check out the newest Gemini models available in Vertex AI and Gemma, Google’s new family of lightweight, state-of-the-art open models.


From the front lines of cyber investigations, the Mandiant Managed Defense team observes how cybercriminals find ways to avoid defenses, exploit weaknesses, and stay under the radar. We work around the clock to develop new techniques to identify attacks and stop breaches for our clients.

Our aim is to help organizations better protect themselves against the security challenges of today, as well as the future. To minimize the impact of an incident, organizations must prioritize a robust detection posture and a well-structured response plan. This blog highlights our key observations from the many engagements we were involved with in 2023.

Infostealers (information-stealing malware) were the most prevalent type of malware observed by Managed Defense in 2023. This trend coincides with the observed demand for and supply of stolen data on underground forums and markets. Infostealers are lucrative for cybercriminals, because stolen data can be used for extortion or to advance multiple stages of an intrusion.

For example, infostealers can obtain credentials for initial access or lateral movement, and they also gather information about an environment. This reconnaissance data can be used to tailor future attacks, significantly escalating a breach’s severity and impact.

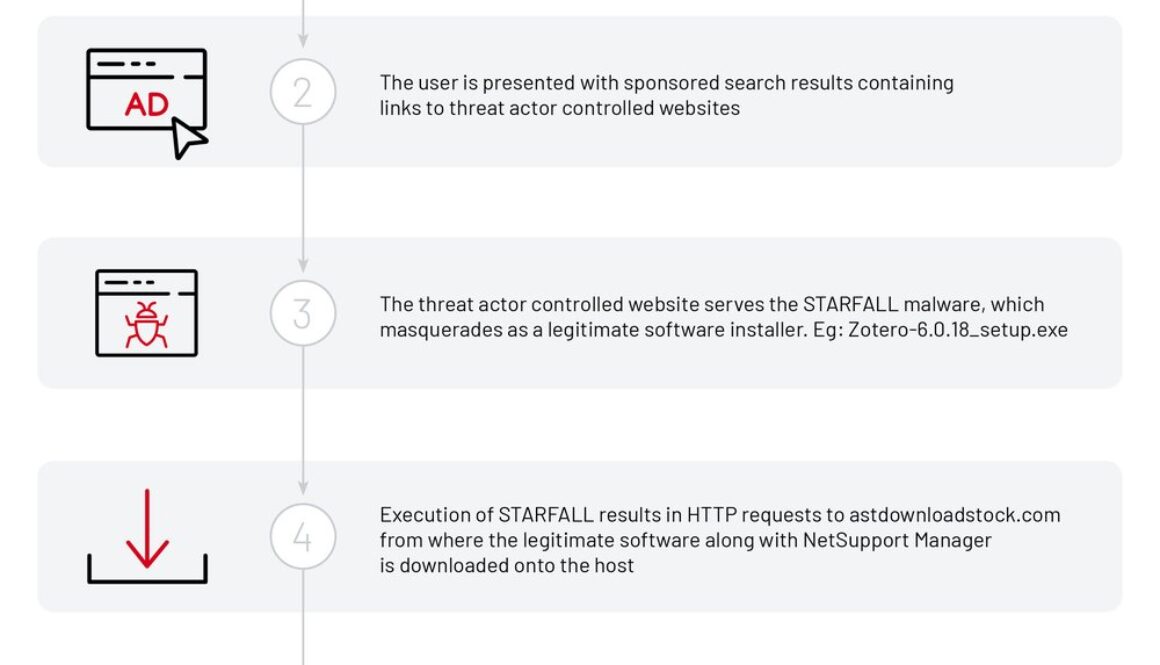

Infostealers are often distributed using cracked or pirated software as a lure. Cybercriminals used social engineering and search engine optimization (SEO) tactics to distribute their malware more effectively. In July, Managed Defense uncovered a campaign that used fake installers for Zotero (a free, open-source reference management tool) and Notion (a note-taking and productivity app) to gain unauthorized access to targeted machines. These thematically relevant lures demonstrate how easily cybercriminals can tailor their attacks to specific industries and organizations.

Figure 1: UNC4864 infection lifecycle: leveraging fake installers. The full research piece is available from Managed Defense.

An effective way for organizations to reduce the risks of infostealers is to implement application allowlisting that restricts employees to installing only authorized software from approved sources.
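At its core, hash-based allowlisting compares an installer's digest with a list of approved values, which is why a look-alike installer downloaded from a search result fails the check. The toy Python sketch below illustrates only that comparison. `approved_hashes` is a hypothetical set you would populate from trusted vendor sources; in practice the policy is enforced by endpoint or operating-system controls rather than a script.

```python
import hashlib


def sha256_of(path: str) -> str:
    """Return the hex SHA-256 digest of a file, reading it in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_installer_allowed(path: str, approved_hashes: set) -> bool:
    """Allow an installer only if its digest appears on the approved list."""
    return sha256_of(path) in approved_hashes
```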

Threat actors linked to the People’s Republic of China (PRC-nexus) rely extensively on removable storage devices to gain initial access to targeted systems. Managed Defense has responded to numerous intrusions stemming from this initial access method, and investigations into these incidents revealed a wide range of distinct malware families.

PRC-nexus cyber espionage actor TEMP.HEX used removable drives to spread SOGU (also known as PlugX) to steal sensitive information. You can read more about how this threat group targeted the public and private sectors globally, and which mitigation strategies we recommend to defend against them.

Russian groups also included removable drive propagation in their malware. QUICKGAME is the latest in an arsenal of USB-driven malware that TEMP.Armageddon has relied upon to gain and further its access in victim environments.

Removable drives have proven effective enough as a malware distribution tool for strategic espionage that restricting them is worth the potential inconvenience to users. Managed Defense recommends that organizations restrict removable storage device access on workstations where such access is not essential.

Zero-day exploits are a major security risk because they enable attacks that go undetected. The zero-day vulnerabilities we encountered were usually uncovered through either threat hunting or incident response activities.

As risky as zero-day attacks are, the real surge in threat activity occurs when vulnerabilities are publicly disclosed and exploit proof-of-concepts are made available. These “open doors” provide low-effort, high-reward opportunities for threat actors engaged in both financial crime and cyber espionage. This highlights the critical importance of rapid patching to mitigate the impact of known vulnerabilities.

This table lists some of the exploits responded to by Managed Defense in 2023, highlighting the continuous trend of cybercriminals and espionage actors leveraging vulnerabilities for initial access.

| Vulnerability | Vendor / Product | Consequence |
| --- | --- | --- |
|  | Fortra / GoAnywhere Managed File Transfer | Code Execution |
|  | Microsoft / Office (Application) | Security Bypass |
|  | Barracuda / Email Security Gateway | Command Execution |
|  | 3CX | Code Execution |
|  | Rarlab / WinRAR | Command Execution |
|  | Progress / MOVEit Cloud | Command Execution |
|  | VMware Tools | Security Bypass |
|  | Ivanti / MobileIron Sentry | Command Execution |
|  | JetBrains / TeamCity | Code Execution |
|  | Confluence Data Center and Server | Privilege Escalation |
|  | Citrix / NetScaler ADC and Gateway | Info Disclosure |
|  | Cisco / IOS XE | Unauthorized Access |
|  | F5 / BIG-IP Configuration Utility | Unauthorized Access |
|  | Confluence Data Center and Server | Unauthorized Access |
|  | SysAid On-Premises | Code Execution |

There’s no “golden window” to patch, so prioritizing software updates immediately is crucial to stay ahead of attackers.

New ransomware families continue to emerge with the ultimate goal of financial gain: businesses and services are disrupted until a ransom is paid.

Though ransomware remains a common threat to organizations, recent developments in wiper malware exhibit a disturbing trend of sophistication and widespread use.

In 2023, Managed Defense tracked the continued deployment of new wiper malware families, particularly during wartime or conflict. Wiper malware became a weapon of choice for crippling critical infrastructure, from power grids to communication networks. The sophistication of this malware shows that threat actors are investing significant time and effort into developing it.

We also saw a pattern shift in how it was used. Initially deployed as a targeted destructive tool, wiper malware has evolved into a weapon wielded by vigilante groups motivated by disagreement or conflict.

Figure 2: A wiper malware masquerading as a fake update seen in December 2023 underscores the steady emergence of wiper malware in the evolving threat landscape targeting businesses and governments.

Figure 3: Analysis of the code reveals the wiper (file deletion) functionality.

With wiper malware increasingly used alongside today’s ransomware threat, organizations are at greater risk of losing their data. We recommend that organizations implement a robust data protection strategy.

The prevalence of malware distribution through social media, scam calls, and email phishing schemes continues unabated. These methods are designed to lure users into downloading malware or unknowingly surrendering their credentials on phishing pages, and they remain a persistent and concerning reality that can result in monetary loss for both businesses and individuals.

Threat actors like UNC3944 (also known as Scattered Spider) have mastered these tactics, using phone-based social engineering and smishing to infiltrate victim organizations, exfiltrate sensitive data, and extort victims with ransomware. We have published a guide on how to mitigate threats from this specific threat group.

Other threat groups also use social engineering to further their goals, including:

FIN6 and UNC4962 have employed lures such as resume-themed files and fake job postings on fabricated personal websites to lead users into downloading malicious software.

The BazarCall campaign initiated by UNC3753 stands out because it used phone calls to fake businesses as part of its phishing scheme, ultimately tricking victims into downloading remote access tools that grant the attackers an initial foothold.

UNC4214 has been using social media and messaging platforms, and malicious social media advertisements, to distribute credential-stealing malware. Their ultimate goal is to infiltrate organizations, gain control of social media business accounts, and then use those accounts for financial gain.

As threat actors regularly evolve their tactics to use new and emerging technologies, we anticipate that cybercriminals will integrate AI more deeply into their toolkits. Realistic, AI-generated content can enhance the persuasiveness of these social media lures, making them more difficult to identify and avoid. However, while there is threat actor interest in this technology, adoption has been limited so far.

Organizations should explore the implementation of security frameworks like the Principle of Least Privilege (PoLP) and Zero Trust security. PoLP centers on granting user access to only what is strictly necessary, while Zero Trust adds a focus on continuous verification and access control. Organizations also may benefit from investing in a strong detection and response program, underpinned by modern Security Operations tools such as Chronicle. An organization’s ability to demonstrate resilience through rapid and effective incident response will foster community confidence and build trust in its products and services.

The Managed Defense team is committed to continuous innovation that empowers our customers. This commitment drives our ongoing development of robust, helpful solutions, such as the recently launched Mandiant Hunt for Chronicle, which provides proactive threat hunting directly on Chronicle data. Our commitment also motivates our partnerships with industry leaders including Microsoft Defender for Endpoint, CrowdStrike, and SentinelOne, so we can offer flexibility and choice within your security stack.

We are excited about the future, reimagining security operations to find new ways to relentlessly protect our customers from impactful cyberattacks.


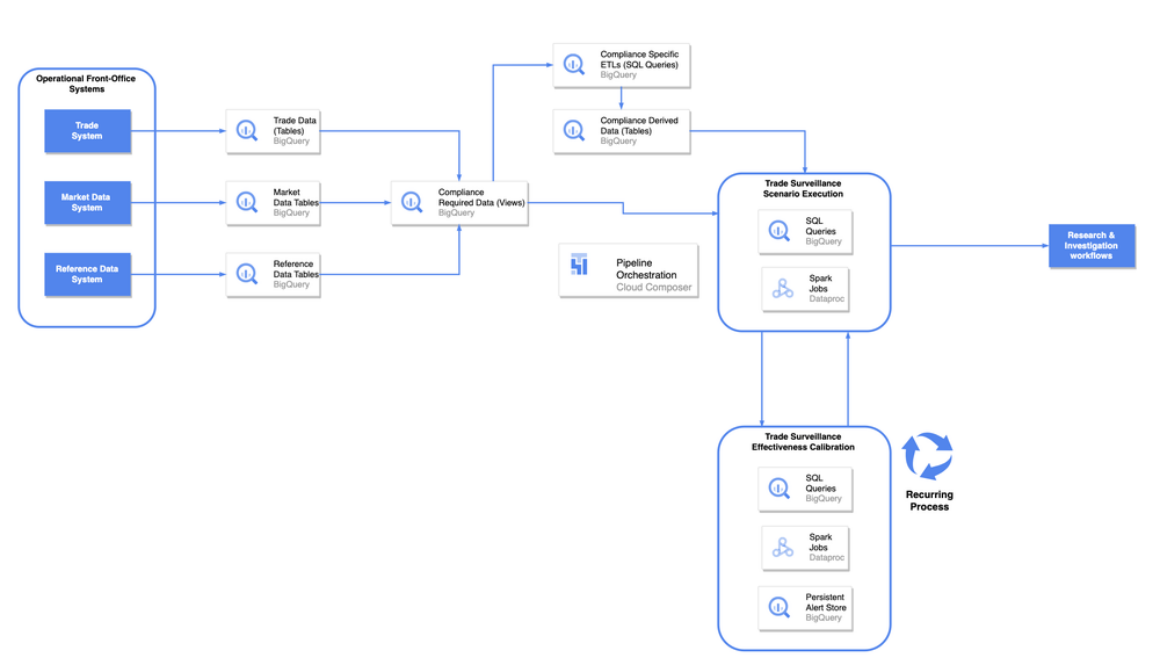

Generative AI has become the number one technology of interest across many industries over the past year. Here at Google Cloud for Games, we think that online game use cases have some of the highest potential for generative AI, giving creators the power to build more dynamic games, monetize their games better, and get to market faster. As part of this, we’ve explored ways that games companies can train, deploy, and maintain GenAI utilizing Google Cloud. We’d like to walk you through what we’ve been working on, and how you can start using it in your game today. While we’ll focus on gen AI applications, the framework we’ll be discussing has been developed with all machine learning in mind, not just the generative varieties.

Long term, the possibilities of gen AI in games are endless, but over the next 1-2 years, we believe the following are the most realistic and valuable use cases for the industry:

Game production

Adaptive gameplay

In-game advertising

Each of these helps with a core part of the game development and publishing process. Generative AI in game production, mainly in the development of 2D textures, 3D assets, and code, can help decrease the effort to create a new game, shorten time to market, and make game developers more effective overall. For sustaining player engagement and monetizing existing titles, ideas like adaptive dialogue and gameplay can keep players engaged, and custom in-game objects can keep them enticed. In-game advertising opens a new realm of monetization: it gives us the ability not only to hyper-personalize ads to viewers, but also to personalize their placement and integration into the game, creating seamless ad experiences that optimize views and engagement. Consider the time it takes to produce a small game, let alone an AAA blockbuster: developing individual game assets consumes an immense amount of time. If generative models can reduce developer toil and increase the productivity of studio development teams by even a fraction, that could mean faster time to market and better games for us all.

As part of this post, we introduce our Generative AI Framework for Games, which provides templates for running gen AI for games on Google Cloud, as well as a framework for data ingest and storage to support these live models. We walk you through a demo of this framework below, where we specifically show two cases around image generation and code generation in a sample game environment.

But before we jump into what we’re doing here at Google Cloud, let’s first tackle a common misconception about machine learning in games.

It’s a common refrain that running machine learning in the cloud for live game services is either cost prohibitive or prohibitive in terms of the induced latency that the end user experiences. Live games have always run on a client-server paradigm, and it’s often preferable that compute-intensive processes that don’t need to be authoritative run on the client. While this is a great deployment pattern for some models and processes, it’s not the only one. Cloud-based gen AI, or really any form of AI/ML, is not only possible, but can result in significantly decreased toil for developers, and reduced maintenance costs for publishers, all while supporting the latencies needed for today’s live games. It’s also safer — cloud-based AI safeguards your models from attacks, manipulation, and fraud.

Depending on your studio’s setup, Google Cloud can support complete in-cloud or hybrid deployments of generative models for adaptive game worlds. Generally, we recommend one of two approaches, depending on your technology stack and needs:

If starting from scratch, we recommend using Vertex AI’s Private Endpoints for low-latency serving, which works whether you are looking for a low-ops solution or are running a service that does not interact with a live game environment.

If you are running game servers on Google Cloud, especially on Google Kubernetes Engine (GKE), and want to use that environment for ultra-low-latency serving, we recommend deploying your models on GKE alongside your game server.

Let’s start with Vertex AI. Vertex AI supports both public and private endpoints, although for games, we generally recommend utilizing Private Endpoints to achieve the appropriate latencies. Vertex AI models utilize what we call an adaptor layer, which has two advantages: you don’t need to call the entire model when making a prediction, and any fine tuning conducted by you, the developer, is contained in your tenant. Compared to running a model yourself, whether in the cloud or on prem, this negates the need to handle enormous base models and the relevant serving and storage infrastructure to support them. As mentioned, we’ll show both of these in the demo below.

If you’re already running game servers on GKE, you can gain a lot from running both proprietary and open-source machine learning models on GKE as well, taking advantage of GKE’s native networking. With GKE Autopilot, our tests indicate that you can achieve prediction latencies in the sub-millisecond range when models are deployed alongside your game servers. Over the public internet, we’ve achieved consistent low-millisecond latencies that are comparable to, if not better than, what we have seen in classic client-side deployments. If you’re worried about the potential cost of running on GKE, consider that the vast majority of gaming customers see cost savings from deploying on GKE, alongside a roughly 30% increase in developer productivity. If you manage both your machine learning deployments and your game servers with GKE Autopilot, there’s also a significant reduction in operational burden. In our testing, we’ve found that the cost of deploying models on Vertex AI or on GKE is roughly comparable.

AI/ML driven personalization thrives on large amounts of data regarding player preferences, gameplay, and the game’s world and lore. As part of our efforts in gen AI in games, we’ve developed a data pipeline and database template that utilizes the best of Google Cloud to ensure consistency and availability.

Live games require strong consistency, and models, whether generative or not, require the most up-to-date information about a player and their habits. Periodic retraining is necessary to keep models fresh and safe, and globally available databases like Spanner and BigQuery ensure that the data being fed into models, generative or otherwise, is kept fresh and secure. In many current games, users are fragmented by maps/realms, with hard lines between them, keeping experiences bounded by firm decisions and actions. As games move towards models where users inhabit singular realms, these games will require a single, globally available data store. In-game personalization also requires the live status of player activity. A strong data pipeline and data footprint is just as important for running machine learning models in a liveops environment as the models themselves. Considering the complexity of frequent model updates across a self-managed data center footprint, we maintain it’s a lighter lift to manage the training, deployment, and overall maintenance of models in the cloud.

By combining a real-time data pipeline with generative models, we can also inform model prompts about player preferences, or combine them with other models that track where, when, and why to personalize the game state. In terms of what is available today, this could be anything from pre-generated 3D meshes that are relevant to the user, to retexturing meshes with different colors, patterns, or lighting to match player preferences or mood, or even giving the player the ability to fully customize the game environment using natural language. All of this is in service of keeping our players happy and engaged with the game.
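As an illustration of feeding player data into a prompt, the sketch below composes an image-generation prompt from a gameplay hint and stored preferences. The preference keys (`art_style`, `palette`, `mood`) and the helper name are hypothetical; the framework's actual prompt construction may differ.

```python
def build_image_prompt(objective_hint: str, preferences: dict) -> str:
    """Compose an image-generation prompt from a game hint and player preferences.

    `preferences` is a hypothetical dict read from the player profile, such as
    {"art_style": "watercolor", "palette": "warm", "mood": "calm"}.
    Missing or empty keys are skipped.
    """
    parts = [objective_hint]
    for key in ("art_style", "palette", "mood"):
        value = preferences.get(key)
        if value:
            # "art_style" -> "watercolor art style", "palette" -> "warm palette"
            parts.append(f"{value} {key.replace('_', ' ')}")
    return ", ".join(parts)
```

Keeping prompt assembly in one small function makes it easy to log exactly what was sent to the model for each player.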

Let’s jump into the framework. For the demo, we’ll be focusing on how Google Cloud’s data, AI, and compute technology can come together to provide real-time personalization of the game state.

The framework includes:

Unity for the client and server

Open source:

Terraform

Agones

Google Cloud:

GKE

Vertex AI

Pub/Sub

Dataflow

Spanner

BigQuery

As part of this framework, we created an open-world demo game in Unity that uses assets from the Unity store. In this game, the player interacts with NPCs and is guided by dynamic billboards that help them achieve the game objective. The game runs on GKE with Agones and is designed to support multiple players; for simplicity, we focus on one player and their actions.

Now, back to the framework. Our back-end Spanner database contains information on the player and their past actions. We also have data on their purchasing habits across this make-believe game universe, with a connection to the Google Marketing Platform. This allows us in our demo game to start collecting universal player data across platforms. Spanner is our transactional database, and BigQuery is our analytical database, and data flows freely between them.

As part of this framework, we trained recommendation models in Vertex AI using everything we know about the player, so that we can personalize in-game offers and advertising. For the sake of this demo, we’ll set those models aside for a moment and focus on three generative AI use cases: image generation, NPC chat, and code generation for our adaptive gameplay use case. To show both deployment patterns that we recommend for games (deploying on GKE alongside the game server, and using Vertex AI), we host an open-source Stable Diffusion model on GKE for image generation, and use the gemini-pro model within Vertex AI for code generation and NPC chat. In cases where textures need to be modified or game objects repositioned, we use the Gemini LLM to generate code that can render, position, and configure prefabs within the game environment.

As the character walks through the game, we adaptively show images to suggest potential next moves and paths for the player. In practice, these could be game-themed images or even advertisements. In our case, we display images that suggest what the player should be looking for to progress game play.

In the example above, the player is shown a man surrounded by books, which provides a hint to the player that maybe they need to find a library as their next objective. That hint also aligns with the riddle that the NPC shared earlier in the game. If a player interacts with one of these billboards, which may mean moving closer to it or even viewing the billboard for a preset time, then the storyline of our game adapts to that context.
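The interaction rule described above (moving closer to a billboard, or viewing it for a preset time) can be expressed as a small per-frame check. This sketch is written in Python for consistency with the other examples, although the demo game itself runs in Unity; `BillboardTracker` and its distance and dwell thresholds are hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class BillboardTracker:
    """Decide when a player has interacted with a billboard.

    Interaction means coming within `near_distance` units, or keeping the
    billboard in view for at least `dwell_seconds` without looking away.
    """
    position: tuple
    near_distance: float = 5.0
    dwell_seconds: float = 3.0
    _viewed_for: float = field(default=0.0, init=False, repr=False)

    def update(self, player_pos: tuple, in_view: bool, dt: float) -> bool:
        """Advance by one frame of length dt; return True once interaction occurs."""
        if math.dist(player_pos, self.position) <= self.near_distance:
            return True
        # Accumulate continuous viewing time; looking away resets the timer.
        self._viewed_for = self._viewed_for + dt if in_view else 0.0
        return self._viewed_for >= self.dwell_seconds
```

When `update` first returns True, the game can hand the billboard's context to the storyline logic.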

We can also load and configure prefabs on the fly with code generation. Below, you’ll see our environment as is, and we ask the NPC to change the bus color to yellow, which dynamically updates the bus color and texture.

Once we make the request, either by text or speech, Google Cloud gen AI models generate the exact code needed to update the prefab in the environment, and the game then renders the change live.

While this example shows how code generation can be used in-game, game developers can also use a similar process to place and configure game objects within their game environment to speed up game development.
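Executing model-generated code directly carries risk, so one alternative design (not necessarily what the demo does) is to ask the model for a structured JSON description of the change and validate it before applying it. The JSON shape, the prefab allowlist, and `parse_prefab_update` are all hypothetical.

```python
import json
import re

ALLOWED_PREFABS = {"bus", "billboard", "bench"}  # hypothetical prefab names
HEX_COLOR = re.compile(r"#[0-9a-fA-F]{6}")


def parse_prefab_update(model_output: str) -> dict:
    """Parse and validate a JSON prefab change proposed by an LLM.

    Expected (hypothetical) shape: {"prefab": "bus", "color": "#FFD700"}.
    Raises ValueError if the output is malformed or targets an unknown prefab.
    """
    try:
        update = json.loads(model_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model output is not valid JSON: {exc}") from exc
    if not isinstance(update, dict):
        raise ValueError("expected a JSON object")
    prefab = update.get("prefab")
    if prefab not in ALLOWED_PREFABS:
        raise ValueError(f"unknown prefab: {prefab!r}")
    color = update.get("color")
    if color is not None and not (isinstance(color, str) and HEX_COLOR.fullmatch(color)):
        raise ValueError(f"invalid color: {color!r}")
    return {"prefab": prefab, "color": color}
```

With this design, the game client only ever applies a small, known set of changes, no matter what text the model returns.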

If you would like to take the next step and check out the technology, we encourage you to explore the GitHub link and resources below.

Additionally, we understand that not everyone will be interested in every facet of the framework. That’s why we’ve made it flexible – whether you want to dive into the entire project or just work with specific parts of the code to understand how we implemented a certain feature, the choice is yours.

If you’re looking to deepen your understanding of Google Cloud generative AI, check out this curated set of resources that can help:

Generative AI on Google Cloud

Getting started with generative AI on Vertex

Last but not least, if you’re interested in working with the project or would like to contribute to it, feel free to explore the code on GitHub, which focuses on the gen AI services used as part of this demo:


Customers in retail and manufacturing are looking for ways to improve legacy on-premises development for applications such as point-of-sale systems and factory operations, while also building rich new experiences like automated order taking, AI-based visual inspection, and cashierless checkout. However, they struggle to build, deploy, and manage modern workloads while sustaining current applications that can be running in tens to thousands of locations.

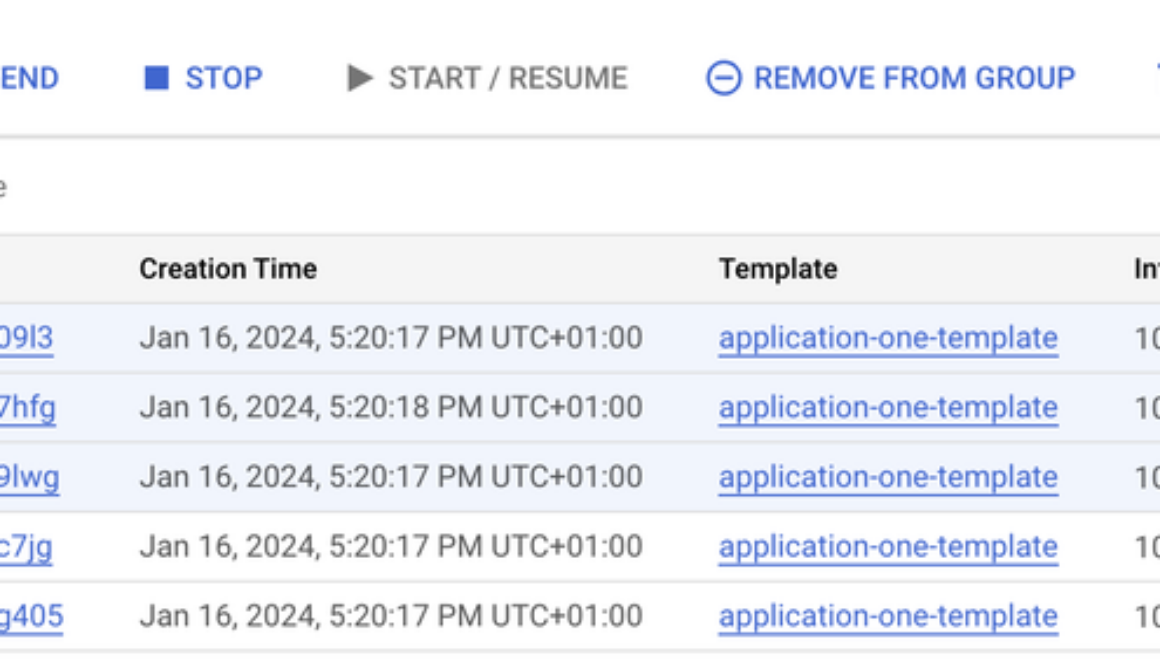

Today we are happy to announce the general availability of Google Distributed Cloud Edge servers, a new configuration of Google Distributed Cloud Edge that is optimized for installation in retail stores, restaurants, or manufacturing facilities. Consisting of three small-form-factor servers that connect directly to a location’s network equipment, Google Distributed Cloud Edge servers let customers easily deploy fully managed, highly available Google Kubernetes Engine clusters in tens to thousands of locations, including environments with limited or intermittent internet access, so they can manage business-critical applications that support local operations at scale, including AI-assisted applications like AI visual inspection.

Applications are critical to providing quality experiences to retail customers and to keeping manufacturing lines running. However, customers with multiple manufacturing plants or thousands of retail locations struggle to deploy, manage, and ensure the high availability of these applications at scale. Some customers today optimize for management by centralizing applications in the cloud. However, periods of limited bandwidth or intermittent connectivity can result in application slowness or even outages. Others deploy servers locally, only to struggle with high operational cost, because each location needs to be separately managed. There’s also increased operational risk, because servers are often a single point of failure, and application fixes can take weeks to months to deploy to all locations if there is insufficient deployment tooling. Lastly, as customers add new AI-based applications, they struggle with platform sprawl because these applications require net-new servers with additional compute and graphics capabilities. You can learn more about the key considerations driving these decisions and more in these customer insight reports for Manufacturing and Retail IT decision makers.

Build apps on the cloud; deploy and scale on-premises

With Google Distributed Cloud Edge servers, customers can deploy a modern and fully managed application platform to their locations, with Google Cloud’s best-in-class tools to manage applications at scale. Google Distributed Cloud Edge servers are designed and optimized to run business-critical applications locally, keeping operations running even during times of limited or intermittent internet connectivity. Further, Google Distributed Cloud Edge servers are fully-managed, leveraging Google’s industry-leading automation and site reliability engineering practices to keep operational costs low, even at scale, with built-in redundancy to enable non-stop operations even when a server fails. Google Distributed Cloud Edge servers include Google Kubernetes Engine (GKE) by default, and a suite of application orchestration tools that allow customers to rapidly and reliably deploy new applications — and updates to existing applications — to all locations. These capabilities let customers centralize applications in the cloud, while enjoying the latency and connectivity advantages of running applications locally. Finally, Google Distributed Cloud Edge servers support both VMs and containers, with optional GPU, allowing customers to run both existing and new AI-based applications on a single platform, reducing sprawl. You can learn more about the opportunities and use cases that edge computing unlocks for retailers in this blog.

Google Distributed Cloud Edge server configuration

Ruggedized Edge Rack server

Size (W x H x D): 17 x 1.7 (1RU) x 19 in

Temperature: -5°C to 55°C

Acoustics: 38 dBA (typical), 58 dBA (max)

Right-sized configurations:

16 cores / 32 vCPUs, 64 GB RAM, 1.6 TB user disk

32 cores / 64 vCPUs, 128 GB RAM, 3.2 TB user disk

“While cloud computing has enabled unprecedented scalability, simplicity, and agility for customers across a wide variety of industries, adoption for local applications has been limited because of the need to run applications at each location,” said Ankur Jain, Vice President of Engineering for Google Distributed Cloud. “With Google Distributed Cloud Edge servers, we combine the best of both worlds: the scalability and agility of the cloud with the availability of local processing.”

Google Distributed Cloud Edge servers conveniently placed in retail outlets, fast casual storefronts, or manufacturing floors can help IT decision makers in retail and manufacturing in a few ways:

Scale cloud-enabled modern infrastructure on-premises. Build modern applications in the cloud, then deploy and scale them from a single retail store or warehouse to thousands of locations.

Reduce operating costs. Optimize IT costs and resource spend with fully managed hardware and software from Google.

Customers can start today by ordering one or more Google Distributed Cloud Edge server configurations directly from Google Cloud. Trained technicians perform the initial on-site installation, after which Google Cloud fully manages the entire platform, including installing software updates, optimizing the configuration, and monitoring the hardware. Developers can launch and update containers or VMs at scale, in a controlled manner, to a single location, a whole region, or the whole world, using the same Google Cloud tools they use to manage large cloud environments, such as GKE Config Sync. If hardware fails, the system keeps operating while Google automatically dispatches a technician to replace the server.
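To make the “single location, a whole region, or the whole world” rollout concrete, here is a hypothetical Python sketch that orders sites into waves: one canary site, then the rest of its region, then everything else. It assumes each site carries a region label; `Location` and `plan_rollout_waves` are illustrative names, and this is not how GKE Config Sync or Google Distributed Cloud Edge actually schedule rollouts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    """A hypothetical edge site (store, plant, warehouse) with a region label."""
    name: str
    region: str


def plan_rollout_waves(locations: list[Location], canary: str) -> list[list[str]]:
    """Order sites into waves: the canary site, the rest of its region, then everything else."""
    by_name = {loc.name: loc for loc in locations}
    if canary not in by_name:
        raise ValueError(f"unknown canary location: {canary}")
    canary_region = by_name[canary].region

    # Wave 2: remaining sites in the canary's region.
    same_region = [loc.name for loc in locations
                   if loc.region == canary_region and loc.name != canary]
    # Wave 3: every site outside that region.
    elsewhere = [loc.name for loc in locations if loc.region != canary_region]

    # Drop empty waves so a caller never "deploys" to nothing.
    return [wave for wave in ([canary], same_region, elsewhere) if wave]


if __name__ == "__main__":
    sites = [
        Location("store-001", "us-east"),
        Location("store-002", "us-east"),
        Location("plant-eu-1", "eu-west"),
    ]
    for i, wave in enumerate(plan_rollout_waves(sites, "store-001"), start=1):
        print(f"wave {i}: {wave}")
```

In a real rollout, each wave would start only after the previous one reports healthy; that gating is omitted here.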

You can learn more about Google Distributed Cloud Edge configuration pricing here.


Monitoring microservices in the cloud has become an increasingly cumbersome exercise for teams struggling to keep pace with developers’ rapid application release velocity. One way to make things easier for overloaded security teams is to use the open source runtime security platform Falco to quickly identify suspicious behavior in Linux containers and applications. Falco’s overarching goal is to simplify visibility into rapidly deployed, cloud-first workloads everywhere, making life less stressful for your engineering organization.

Falco can help with other vital security tasks, too. For example, you can use it with Google Kubernetes Engine (GKE) to monitor cluster and container workload security. Like a Swiss Army knife, Falco can detect abnormal behavior in all types of cloud-first workloads. Its plugin architecture can monitor almost anything that emits a data stream in a known format, including a wide array of Google Cloud services.

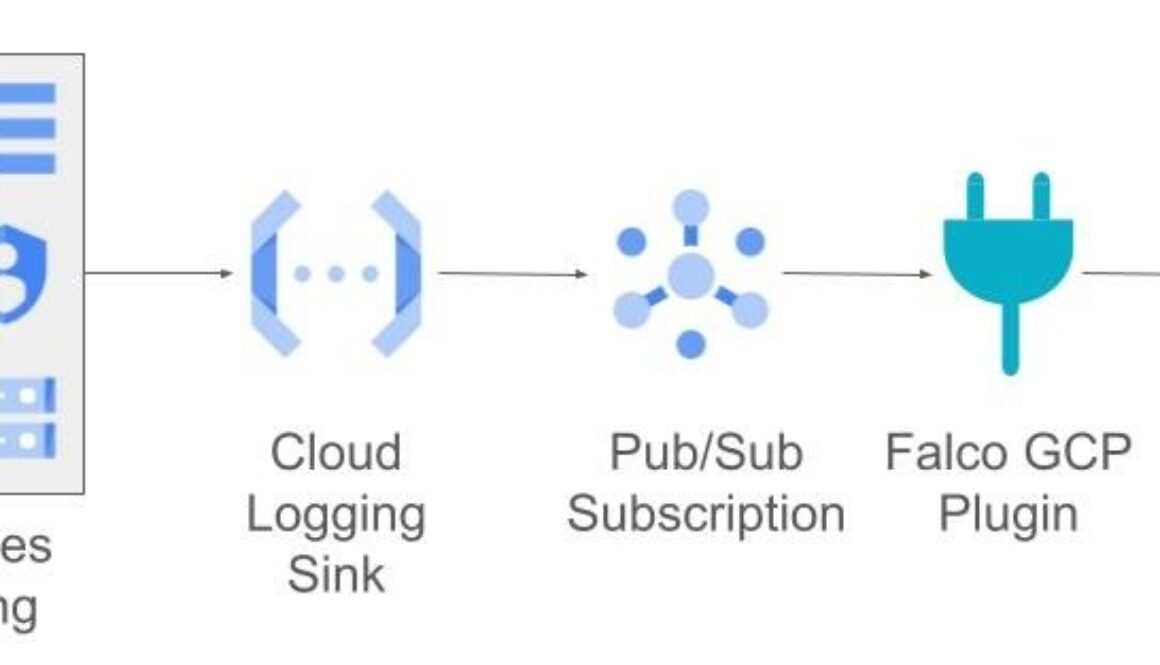

Let’s examine how Falco and the gcpaudit plugin enable runtime security monitoring across a wide range of Google Cloud services.

Before we go further, it’s important to understand the plugin model. Falco plugins are shared libraries that conform to a documented API and hook into Falco’s core functionality. You can use this model to add new event sources, define new fields to extract from events, parse content extracted from a data stream, and inject events asynchronously into a given data stream. Officially maintained Falco plugins are listed in its plugin registry.
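Field extraction is the plugin capability that rules depend on most: it turns a raw JSON event into named values that rule conditions can test. Real Falco plugins are shared libraries built against Falco’s plugin API (the gcpaudit plugin itself is written in Go), so the Python below is only a conceptual model of the extraction step. The field names and JSON paths in `FIELD_PATHS` are illustrative assumptions, not the gcpaudit plugin’s actual field table.

```python
import json
from typing import Any, Optional

# Hypothetical mapping from Falco-style field names to paths inside a
# Cloud Audit Log entry. Illustrative only, not the gcpaudit field table.
FIELD_PATHS = {
    "gcp.methodName": ("protoPayload", "methodName"),
    "gcp.serviceName": ("protoPayload", "serviceName"),
    "gcp.user": ("protoPayload", "authenticationInfo", "principalEmail"),
    "gcp.projectId": ("resource", "labels", "project_id"),
}


def extract_field(event: dict[str, Any], field: str) -> Optional[Any]:
    """Return the value for `field`, or None if the field is unknown or absent."""
    path = FIELD_PATHS.get(field)
    if path is None:
        return None
    value: Any = event
    for key in path:
        # Stop as soon as the path leaves the nested dictionaries.
        if not isinstance(value, dict) or key not in value:
            return None
        value = value[key]
    return value


if __name__ == "__main__":
    raw = json.dumps({
        "protoPayload": {
            "serviceName": "compute.googleapis.com",
            "methodName": "v1.compute.instances.delete",
            "authenticationInfo": {"principalEmail": "alice@example.com"},
        },
        "resource": {"labels": {"project_id": "my-project"}},
    })
    event = json.loads(raw)
    print(extract_field(event, "gcp.methodName"))  # v1.compute.instances.delete
    print(extract_field(event, "gcp.projectId"))   # my-project
```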

The gcpaudit plugin ingests audit logs for several key Google Cloud services, including Compute Engine, Cloud KMS, Cloud Armor WAF, IAM, Cloud Storage, BigQuery, Cloud SQL, Pub/Sub, Cloud Logging, and Cloud Functions.

The plugin relies on a Cloud Logging sink with an optimized filter to send the most critical events from a monitored service to a Pub/Sub topic in an organization’s project. The gcpaudit plugin consumes events from the Pub/Sub topic and applies filters and enrichment (using custom metadata), and operators can then access the results in Falco.
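The sink pairs a Cloud Logging filter with a Pub/Sub destination. The sketch below shows one way to build both with the `google-cloud-logging` Python client. The service list, the audit-log filter, and the names `falco-gcpaudit-sink` and `falco-gcpaudit` are assumptions for illustration; they are not the plugin’s own optimized filter, which its documentation provides.

```python
# Sketch: route Cloud Audit Logs for selected services to a Pub/Sub topic.
# Assumes `pip install google-cloud-logging` and Application Default Credentials.

# Illustrative subset of services; the real gcpaudit filter may differ.
AUDITED_SERVICES = [
    "compute.googleapis.com",
    "cloudkms.googleapis.com",
    "iam.googleapis.com",
    "storage.googleapis.com",
    "bigquery.googleapis.com",
    "cloudsql.googleapis.com",
]


def build_audit_filter(services: list[str]) -> str:
    """Match audit log entries whose protoPayload.serviceName is one of `services`."""
    service_clause = " OR ".join(
        f'protoPayload.serviceName="{svc}"' for svc in services
    )
    return f'logName:"cloudaudit.googleapis.com" AND ({service_clause})'


def pubsub_destination(project_id: str, topic: str) -> str:
    """Format a Pub/Sub topic as a Cloud Logging sink destination."""
    return f"pubsub.googleapis.com/projects/{project_id}/topics/{topic}"


def create_sink(project_id: str, topic: str, sink_name: str = "falco-gcpaudit-sink"):
    """Create the sink (needs network access and Logging permissions)."""
    from google.cloud import logging  # lazy import so the helpers above run offline

    client = logging.Client(project=project_id)
    sink = client.sink(
        sink_name,
        filter_=build_audit_filter(AUDITED_SERVICES),
        destination=pubsub_destination(project_id, topic),
    )
    sink.create()
    return sink


if __name__ == "__main__":
    print(build_audit_filter(AUDITED_SERVICES))
    print(pubsub_destination("my-project", "falco-gcpaudit"))
```

After the sink exists, its writer identity may still need permission to publish to the topic (the Pub/Sub Publisher role) before entries start flowing to the plugin.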

This filtering process is based on a set of custom Falco rules authored with the MITRE ATT&CK framework in mind. For example, when an action, such as deleting a virtual machine (VM) or removing a database backup, triggers one of these rules, Falco sends out an alert. The plugin provides a centralized view of security events across multiple Google Cloud services and can help detect unauthorized access, data exfiltration, and other types of malicious activity.
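Conceptually, each rule is a condition over event fields plus metadata such as a MITRE ATT&CK technique. Falco rules are written in YAML and evaluated by Falco itself; the Python below only mimics that evaluation for the two examples above. The method names (`v1.compute.instances.delete`, `cloudsql.backupRuns.delete`) and the technique IDs (T1578.003, Delete Cloud Instance; T1490, Inhibit System Recovery) are assumptions to check against your own audit logs and the rules the plugin ships with.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Rule:
    """A hypothetical stand-in for a Falco rule: a name, a condition, and a tag."""
    name: str
    condition: Callable[[dict[str, Any]], bool]
    mitre_technique: str


def method_is(method: str) -> Callable[[dict[str, Any]], bool]:
    """Build a condition that matches one audit-log methodName."""
    return lambda event: event.get("protoPayload", {}).get("methodName") == method


RULES = [
    Rule("GCP VM deleted", method_is("v1.compute.instances.delete"), "T1578.003"),
    Rule("Cloud SQL backup deleted", method_is("cloudsql.backupRuns.delete"), "T1490"),
]


def evaluate(event: dict[str, Any], rules: list[Rule] = RULES) -> list[str]:
    """Return one alert string per rule whose condition matches the event."""
    return [
        f"{rule.name} [{rule.mitre_technique}]"
        for rule in rules
        if rule.condition(event)
    ]


if __name__ == "__main__":
    event = {"protoPayload": {"methodName": "v1.compute.instances.delete"}}
    print(evaluate(event))  # ['GCP VM deleted [T1578.003]']
```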

To support your other security operations processes, such as building playbooks, correlating events, and triaging incidents, you can send these alerts to a security information and event management (SIEM) system such as Chronicle Security Operations. Out of the box, Falco can send alerts to stdout, syslog, an HTTP/HTTPS endpoint, or a gRPC endpoint, and you can use a companion project, Falcosidekick, to forward alerts to other destinations in your environment.
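With JSON output enabled, each Falco alert is a JSON object with fields such as `rule`, `priority`, `output`, and `time`. A receiving service could route alerts by priority, for example sending severe events to a SIEM and everything else to a chat channel. The sketch below parses one alert and picks a destination; the destination names and the severity cutoff are hypothetical, and Falcosidekick already offers comparable per-output priority filtering for real deployments.

```python
import json

# Falco priorities, from most to least severe.
PRIORITIES = [
    "Emergency", "Alert", "Critical", "Error",
    "Warning", "Notice", "Informational", "Debug",
]


def severity_rank(priority: str) -> int:
    """Lower rank means more severe; unknown priorities rank as least severe."""
    normalized = priority.strip().capitalize()
    return PRIORITIES.index(normalized) if normalized in PRIORITIES else len(PRIORITIES)


def route_alert(raw: str, siem_cutoff: str = "Error") -> tuple[str, str]:
    """Return (destination, rule) for one JSON alert; destinations are hypothetical."""
    alert = json.loads(raw)
    rule = alert.get("rule", "<unknown rule>")
    if severity_rank(alert.get("priority", "")) <= severity_rank(siem_cutoff):
        return "siem", rule
    return "chat", rule


if __name__ == "__main__":
    # Made-up sample alert for illustration.
    sample = json.dumps({
        "rule": "GCP VM deleted",
        "priority": "Critical",
        "output": "VM deleted by alice@example.com",
        "time": "2024-01-01T00:00:00.000000000Z",
    })
    print(route_alert(sample))  # ('siem', 'GCP VM deleted')
```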

There are some important factors to consider with Falco. First, you can’t use Falco on GKE clusters running in Autopilot mode, because it requires privileged access to install its driver. Second, to avoid duplicate events, deploy only one instance of the gcpaudit plugin per monitored environment; for example, you wouldn’t install the plugin on each node in a GKE cluster. Cloud Logging aggregates the audit logs for a set of monitored services and forwards them to the plugin, which can run directly on a Compute Engine VM or as a pod in GKE.

Falcosidekick supports over 60 outputs, such as messaging applications, observability tools, object stores, and databases, so you have many options. Because Falco is an open source project, you can contribute your own outputs, too.

Microservices can be complex, and it can be difficult to ensure alerts reach the right team early enough to make a difference. Getting the visibility needed to secure them can be a challenge, especially on Kubernetes. With the gcpaudit plugin, security teams can identify events and respond to incidents in these Google Cloud workloads more quickly, improving their organization’s security posture.

To get started and help make your applications “secure every second,” you can try out Falco’s gcpaudit plugin with this detailed walkthrough.


The next generation of Autocomplete is now available in Preview, offering seamless integration with Address Validation, more intuitive pricing, and support for expanded place types from the new Places API. It’s available to all developers at no cost during Preview. Autocomplete builds on our recent launch of the new Places API, which offers new features in Text Search, Place Details and Photos, and Nearby Search, to make it easier for you to surface helpful information about the world in the products you build.

What sets Google’s Autocomplete apart

With Autocomplete, you can help your users quickly find the exact place they’re looking for by automatically suggesting businesses and points of interest as they type. Autocomplete utilizes the speed of Google Search to quickly search and suggest addresses from one of the most accurate and comprehensive models of the world, with over 50 million daily updates and coverage in more than 250 countries and territories. In addition to our extensive address data, Autocomplete helps you bring rich location data for over 250 million places to your users, including opening hours, price levels, user ratings, and much more.

Improvements to the next generation Autocomplete

Seamless integration with Address Validation

Global ecommerce this year will reach almost $6 trillion in sales.[1] But ecommerce growth can be held back, in part by complications at checkout. In fact, 70% of all online shopping carts are abandoned.[2] There are also challenges with fulfillment. According to a recent survey, 71% of surveyed shoppers have contacted customer service about shipping or delivery issues with online orders.[3] To address this, we’ve created a new Autocomplete with Address Validation product that helps you decrease cart abandonment, increase repeat customer rates, and delight customers with faster checkout. It also helps you drive operational efficiencies by catching and fixing address errors earlier in the process, so you can make faster deliveries, save costs, and avoid customer churn caused by incorrect deliveries. At a CPM of $25 (per 1,000 sessions), this product also costs significantly less than using the new Autocomplete and Address Validation APIs separately, which comes to a CPM of $32.
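As a quick worked example of the two prices quoted above, the Python below compares them for a made-up volume of 100,000 sessions. It uses only the two list CPMs from this post and ignores volume tiers, free usage, or later price changes. Because both prices are linear in sessions, the relative saving is (32 - 25) / 32, about 21.9%, at any volume.

```python
def cost_for_sessions(sessions: int, cpm_usd: float) -> float:
    """Cost in USD for `sessions` at a price of `cpm_usd` per 1,000 sessions."""
    return sessions / 1000 * cpm_usd


COMBINED_CPM = 25.0  # Autocomplete with Address Validation
SEPARATE_CPM = 32.0  # new Autocomplete and Address Validation APIs used separately

sessions = 100_000  # hypothetical volume
combined = cost_for_sessions(sessions, COMBINED_CPM)
separate = cost_for_sessions(sessions, SEPARATE_CPM)
savings = separate - combined
print(f"combined ${combined:,.2f}, separate ${separate:,.2f}, "
      f"savings ${savings:,.2f} ({savings / separate:.1%})")
# combined $2,500.00, separate $3,200.00, savings $700.00 (21.9%)
```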

Supports expanded place types from new Places API

The next generation Autocomplete supports all of the expanded place types from the new Places API. Places API now provides even more details and place types, which means your users will be able to find detailed information about places of interest by type, such as best sushi or nearby hiking areas. With the new Places API, we’ve nearly doubled the number of supported place types since the previous version. Now there are nearly 200 place types including coffee shops, playgrounds, EV charging stations, and more. You’ll also be able to provide updated accessibility information for places, including wheelchair-accessible seating, restrooms, and parking.

Simpler and more intuitive pricing

We’ve changed our pricing to make it simpler, more intuitive, and a better match for how your users use Autocomplete. For example, your users don’t always finish checking out: they might select a pickup point and then accidentally close their browser. With the new session-based Autocomplete pricing, if a user doesn’t complete their session (e.g., they enter a single character in your search box and then close their browser), you’re charged only for an Autocomplete request instead of a full session with Address Validation or Place Details.
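Sessions are tied together by a session token that your app sends with each keystroke request and with the final selection call; the Places documentation recommends a fresh version 4 UUID per session. The helper below is a hypothetical sketch of that lifecycle (`AutocompleteSession` is an illustrative name, not part of any Google SDK): the token is reused across keystrokes and discarded once the user selects a place. If the user abandons the flow, the token is simply never sent with a details or validation call, which corresponds to the incomplete-session case described above.

```python
import uuid
from typing import Optional


class AutocompleteSession:
    """Hypothetical helper: one session token per search-and-select flow."""

    def __init__(self) -> None:
        self._token: Optional[str] = None

    def token_for_keystroke(self) -> str:
        """Reuse the current token, starting a new session on the first keystroke."""
        if self._token is None:
            self._token = str(uuid.uuid4())
        return self._token

    def finish_with_selection(self) -> str:
        """Return the token for the final details/validation call, then end the session."""
        token = self.token_for_keystroke()
        self._token = None  # the next keystroke starts a new session
        return token
```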

We now offer three Autocomplete SKUs that map to the most common use cases:

Checkout and delivery: the Autocomplete with Address Validation product is designed for e-commerce use cases. It helps you provide a seamless checkout and frictionless fulfillment experience, helping drive more revenue and an enhanced customer experience.

Discover new places: the Autocomplete with Place Details Preferred product is ideal for when you want to help users not only find places, but discover more about those places with rich place details, such as opening hours, user ratings and reviews, and price levels, to make decisions about where to go.

Find the exact place a user is looking for: the Autocomplete with Place Details Location product can be used to help users quickly find the exact place they’re looking for by automatically suggesting addresses, businesses, and points of interest as they type, and then showing the chosen location on a Google map.

New Google Cloud service infrastructure

Like the other products offered with the new Places API, the new Autocomplete offers modern security settings with OAuth-based authentication and is built on Google Cloud’s service infrastructure, giving you even more peace of mind as you build with us. OAuth is an alternative to API key-based authentication for requests to the new Places API; API keys remain supported.

Get started with next generation Autocomplete

Try out the next generation Autocomplete at no cost during the Preview phase. To learn more, check out the documentation and the updated pricing. To learn more about the new Places API, check out our webpage and documentation.

To get started with the next generation Autocomplete, make sure “Places API (New)” is enabled in your Google Cloud project. If you use the Place Autocomplete web service, see the docs to learn how to update your API calls for the next generation Autocomplete. If you call Place Autocomplete through the Maps JavaScript API or the Android or iOS SDKs, we’ll be launching the next generation Autocomplete on those platforms in the coming months.
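For the web service, the next generation Autocomplete is a `POST` to the `places:autocomplete` method of Places API (New). The sketch below only builds the request with Python’s standard library (it does not send it) and accepts either an API key (`X-Goog-Api-Key` header) or an OAuth access token. The endpoint, headers, and body fields reflect our reading of the Places API (New) reference and may change during Preview; `YOUR_API_KEY` is a placeholder.

```python
import json
import urllib.request
from typing import Optional

AUTOCOMPLETE_URL = "https://places.googleapis.com/v1/places:autocomplete"


def build_autocomplete_request(
    text: str,
    session_token: str,
    api_key: Optional[str] = None,
    oauth_token: Optional[str] = None,
) -> urllib.request.Request:
    """Build (but do not send) an Autocomplete (New) request."""
    if (api_key is None) == (oauth_token is None):
        raise ValueError("pass exactly one of api_key or oauth_token")

    headers = {"Content-Type": "application/json"}
    if api_key is not None:
        headers["X-Goog-Api-Key"] = api_key
    else:
        # OAuth alternative; check the authentication docs for any
        # additional headers your project setup requires.
        headers["Authorization"] = f"Bearer {oauth_token}"

    # The same session token is reused for every keystroke in one session.
    body = {"input": text, "sessionToken": session_token}
    return urllib.request.Request(
        AUTOCOMPLETE_URL,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


if __name__ == "__main__":
    req = build_autocomplete_request("1600 Amphith", "demo-session", api_key="YOUR_API_KEY")
    print(req.get_method(), req.full_url)
    # To send it: urllib.request.urlopen(req); per the reference, the JSON
    # response contains a "suggestions" list.
```

Note that `urllib.request.Request` normalizes header names with `str.capitalize()`, so the key is stored as `X-goog-api-key`; HTTP header names are case-insensitive, so this does not affect the request.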

We’d love to see what you’re creating with the next generation Autocomplete and Places API, so be sure to tag us at @GMapsPlatform on X and #GoogleMapsPlatform on YouTube and LinkedIn. We can’t wait to see what you build.

Sources

[1] eMarketer, March 2023

[2] Baymard Institute, 2023

[3] UPS Capital, April 2023


Everyone is excited about generative AI (gen AI) these days, and rightfully so. You might be generating text with PaLM 2 or Gemini Pro, generating images with Imagen 2, translating code from one language to another with Codey, or describing images and videos with Gemini Pro Vision.

No matter how you’re using gen AI, at the end of the day you’re calling an endpoint, either through an SDK or client library or directly via a REST API. Workflows, my go-to service for orchestrating and automating other services, is more relevant than ever when it comes to gen AI.

In this post, I show you how to call some of the gen AI models from Workflows and also explain some of the benefits of using Workflows in a gen AI context.

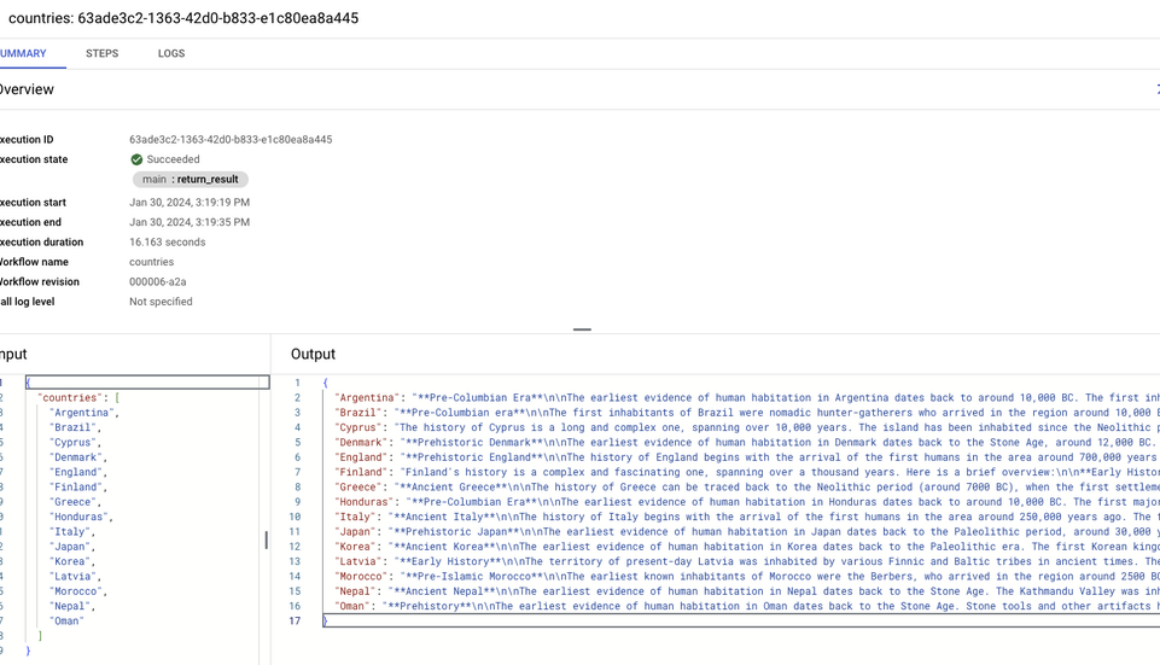

Let’s start with a simple use case. Imagine you want a large language model (LLM) to generate a paragraph or two on the history of each country in a list, and then combine the results into a single text.
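The task has a simple shape: one independent LLM call per country, then a join. Workflows offers parallel steps for exactly this kind of fan-out, but to show the logic on its own, here is a plain Python sketch using a thread pool. `generate` is a hypothetical stand-in for whatever model call you use (for example, a Gemini request through the Vertex AI SDK), so the snippet runs without credentials; it is not the Workflows definition itself.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def histories_of(countries: list[str], generate: Callable[[str], str]) -> str:
    """Ask the model about each country concurrently, then join results in input order."""
    prompts = [f"Write a paragraph about the history of {c}." for c in countries]
    with ThreadPoolExecutor(max_workers=max(1, len(prompts))) as pool:
        # map() returns results in input order even though calls run concurrently.
        paragraphs = list(pool.map(generate, prompts))
    return "\n\n".join(paragraphs)


if __name__ == "__main__":
    def fake_generate(prompt: str) -> str:
        # Stand-in for a real model call; echoes the last word of the prompt.
        return f"[model output for: {prompt.rsplit(' ', 1)[-1].rstrip('.')}]"

    print(histories_of(["Argentina", "Belgium", "Cyprus"], fake_generate))
```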