Azure – Azure Front Door log scrubbing of sensitive data is generally available

Azure Front Door supports removing sensitive data from Azure Front Door access logs.

Read More for the details.

Geographical redundancy is a fundamental part of building a resilient cloud-based data strategy. For many years, BigQuery has offered an industry-leading 99.99% uptime service-level agreement (SLA) for availability within a single geographical region. Full redundancy across two data centers within a single region is included with every BigQuery dataset you create and is managed in a completely transparent manner.

For customers looking for enhanced redundancy across large geographic regions, we are now introducing managed disaster recovery for BigQuery. This feature, now in preview, offers automated failover of compute and storage and a new cross-regional SLA tailored for business-critical workloads. This feature enables you to ensure business continuity in the unlikely event of a total regional infrastructure outage. Managed disaster recovery also provides failover configurations for capacity reservations, so you can manage query and storage failover behavior. This feature is available through BigQuery Enterprise Plus edition.

Customers using BigQuery’s Enterprise Plus edition can now configure their capacity reservations to enable automated failover across distinct geographic regions. Extending the capabilities of BigQuery’s cross-region dataset replication, failover reservations ensure that the locations of both data and compute resources are coordinated during a disaster recovery event.

Slot capacity in the secondary region for Enterprise Plus edition reservations is provisioned and maintained automatically at no additional cost. Some competitive products require customers to duplicate their compute clusters in the secondary location.

In the event of a total regional outage, the secondary region can be promoted to the primary role for both compute and data. With BigQuery’s query routing layer, failover is completely transparent to end users and tools.

Primary region: The region containing the current primary replica of a dataset. This is also the region where the dataset data can be modified (e.g. loads, DDL, or DML).

Secondary region: The region where the failover reservation standby capacity and replicated datasets are available in the case of a regional outage.

Failover reservation: An Enterprise Plus edition reservation configured with a primary/secondary region pair. Datasets are attached to failover reservations.

The dataset replica in the primary region is the primary replica, and the replica in the secondary region is the secondary replica. These roles are swapped during the failover process.

The primary replica is writeable, and the secondary replica is read-only. Writes to the primary replica are asynchronously replicated to the secondary replica. Within each region, the data is stored redundantly in two zones. Network traffic never leaves the Google Cloud network.
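The role rules above can be sketched as a small in-memory model. This is a toy illustration under our own assumptions, not BigQuery’s implementation: the `Replica` and `ReplicatedDataset` classes and the region names are hypothetical, and `replicate()` merely stands in for BigQuery’s asynchronous replication.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    region: str
    tables: dict = field(default_factory=dict)


@dataclass
class ReplicatedDataset:
    """Toy model: one writable primary replica, one read-only secondary replica."""
    primary: Replica
    secondary: Replica

    def write(self, region: str, table: str, rows: list) -> None:
        # Writes (loads, DDL, DML) are only accepted in the primary region.
        if region != self.primary.region:
            raise PermissionError(f"{region} holds the read-only secondary replica")
        self.primary.tables.setdefault(table, []).extend(rows)

    def replicate(self) -> None:
        # Stand-in for asynchronous replication: the secondary catches up
        # only when this runs, not on every write.
        self.secondary.tables = {t: list(r) for t, r in self.primary.tables.items()}

    def failover(self) -> None:
        # Promotion swaps the roles: the old secondary becomes the primary.
        self.primary, self.secondary = self.secondary, self.primary


ds = ReplicatedDataset(Replica("us-central1"), Replica("us-east4"))
ds.write("us-central1", "orders", [1, 2])
ds.replicate()
ds.failover()
ds.write("us-east4", "orders", [3])  # us-east4 is now writable
```

Because replication is asynchronous, anything written to the primary after the last `replicate()` call would be missing from the promoted replica; that gap is what an RPO target bounds.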

A region pair in BigQuery’s managed disaster recovery is a pair of geographically separate regions supported by turbo replication and compute redundancy. Within the defined region pair, BigQuery replicates data between the two regions and manages available capacity in the secondary region. This replication allows BigQuery managed disaster recovery to provide high availability and durability for data. Customers can define their desired region pair (from the supported regions) for each failover reservation.

BigQuery’s managed disaster recovery feature supports failover reservations across specific region pairs (similar to Cloud Storage, for regions within a geographic area). You can designate either region in a pair for your initial primary or secondary region.

BigQuery ensures that the capacity of your primary region will be available in your secondary region within five minutes of a failover. This assurance applies to your reservation baseline, whether it’s used or not. BigQuery also provides the same level of autoscaling availability as provided in the primary.

BigQuery’s managed disaster recovery feature is available with the Enterprise Plus edition. Standby compute capacity in the secondary region is included with the per slot-hour price with no requirement to purchase separate standby capacity. As an option, you may choose to provision additional Enterprise Plus reservations in the secondary region, specifically for read-only queries.

Managed disaster recovery customers are billed for replicated storage in the primary and secondary regions for associated datasets. At GA, this feature will automatically use turbo replication for data transfer between regions.

SKU and billing description

Enterprise Plus edition: $0.10 per slot-hour (example: US pricing)

Storage: Storage bytes in the secondary region are billed at the same list price as storage bytes in the primary region. See BigQuery Storage pricing for more information.

Data transfer: Managed disaster recovery uses turbo replication*. Data transfer used during replication is charged per physical GB replicated. Note: Turbo replication is priced at 2x default replication.

* Turbo replication is not available during preview but will be enabled automatically at general availability (GA).
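To make the billing rules concrete, here is a rough monthly estimate in Python. It is a sketch under stated assumptions: only the $0.10 slot-hour figure comes from the pricing above, and the storage and default-replication prices in the usage example are placeholders, not published rates. It encodes three rules from this section: standby slots in the secondary region add nothing to compute cost, storage is billed in both regions, and turbo replication costs twice the default replication rate.

```python
def estimate_monthly_cost(
    baseline_slots: int,
    hours: float,
    slot_hour_price: float,
    storage_gb: float,
    storage_price_per_gb: float,
    replicated_physical_gb: float,
    default_transfer_price_per_gb: float,
    turbo: bool = True,
) -> dict:
    """Rough monthly cost breakdown for one failover reservation (sketch)."""
    # Standby capacity in the secondary region is included in the slot-hour
    # price, so compute is billed once. Only the baseline is counted here;
    # autoscaled slots would add to it.
    compute = baseline_slots * hours * slot_hour_price
    # Replicated storage is billed at the same list price in both regions.
    storage = 2 * storage_gb * storage_price_per_gb
    # Transfer is billed per physical GB replicated; turbo costs 2x default.
    multiplier = 2 if turbo else 1
    transfer = replicated_physical_gb * default_transfer_price_per_gb * multiplier
    return {
        "compute": compute,
        "storage": storage,
        "transfer": transfer,
        "total": compute + storage + transfer,
    }


# Placeholder storage and transfer prices; substitute current list prices.
estimate = estimate_monthly_cost(
    baseline_slots=100, hours=730, slot_hour_price=0.10,
    storage_gb=1_000, storage_price_per_gb=0.02,
    replicated_physical_gb=500, default_transfer_price_per_gb=0.02,
)
```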

Recovery time objective (RTO): Promotion of a secondary reservation and its associated datasets takes less than five minutes, even if the primary region is down. All in-flight queries are canceled and rejected during the RTO window.

Recovery point objective (RPO): Data in secondary dataset replicas will be less than 15 minutes old, provided that the replicas are configured for a failover reservation between supported region pairs, turbo replication is enabled, and the initial replication (also known as backfill) has completed.

Note: Turbo replication and the RTO/RPO targets with SLA are not available during preview.
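The RPO statement is conditional, which a monitoring script would need to respect. A minimal Python sketch, assuming you already know when the secondary replica was last brought up to date (how to obtain that timestamp is outside this sketch, and both function names are hypothetical):

```python
from datetime import datetime, timedelta, timezone

# The 15-minute figure quoted for managed disaster recovery.
RPO_TARGET = timedelta(minutes=15)


def rpo_target_applies(pair_supported: bool, turbo_enabled: bool,
                       backfill_complete: bool) -> bool:
    # The target only holds when every precondition in the text is met.
    return pair_supported and turbo_enabled and backfill_complete


def within_rpo(last_replicated_at: datetime, now: datetime) -> bool:
    # "Less than 15 minutes old" is a strict bound.
    return now - last_replicated_at < RPO_TARGET


now = datetime(2024, 5, 6, 12, 0, tzinfo=timezone.utc)
print(within_rpo(now - timedelta(minutes=10), now))  # True
print(rpo_target_applies(True, False, True))  # False: turbo replication is off
```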

During preview, managed disaster recovery configuration is supported via the BigQuery Console (UI) and SQL. The following workflow shows how you can set up and manage disaster recovery in BigQuery:

Create a replica for a given dataset

To replicate a dataset, use the ALTER SCHEMA ADD REPLICA DDL statement.

After you add a replica, it takes time for the initial copy operation to complete. You can still run queries referencing the primary replica while the data is being replicated, with no reduction in query-processing capacity.
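For example, a statement of the following shape adds a replica. The Python helper only builds the SQL text so the shape is easy to see; the dataset name and `us-east4` location are hypothetical, and the `OPTIONS (location = ...)` clause reflects our reading of the BigQuery DDL reference, so check the current reference before relying on it.

```python
def add_replica_ddl(dataset: str, replica_location: str) -> str:
    """Build (but do not run) an ALTER SCHEMA ... ADD REPLICA statement."""
    # The replica is named after the location it lives in.
    return (
        f"ALTER SCHEMA `{dataset}`\n"
        f"ADD REPLICA `{replica_location}`\n"
        f"OPTIONS (location = '{replica_location}');"
    )


print(add_replica_ddl("my_dataset", "us-east4"))
```

The resulting string can be submitted through any BigQuery client, for example `bigquery.Client().query(sql).result()` from the `google-cloud-bigquery` library.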

Configure a failover reservation + attach a dataset

The first step is to create a failover reservation and specify its secondary location. Specifying a secondary location can also be done for existing Enterprise Plus reservations.

The next step is to associate one or more datasets with the failover reservation. The dataset must be replicated across the same primary/secondary region pair specified in the reservation.
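The two steps might look like the following. Treat this strictly as a sketch: the option names `edition`, `secondary_location`, and `failover_reservation`, the quoting of the edition value, and the project, reservation, and region names are assumptions based on our reading of the preview documentation, not confirmed syntax. The helpers only assemble SQL text, plus a check for the region-pair rule stated above.

```python
def create_failover_reservation_ddl(admin_project: str, primary: str,
                                    name: str, slots: int,
                                    secondary: str) -> str:
    # Assumed syntax: an Enterprise Plus reservation with a secondary location.
    return (
        f"CREATE RESERVATION `{admin_project}.region-{primary}.{name}`\n"
        "OPTIONS (\n"
        f"  slot_capacity = {slots},\n"
        "  edition = 'ENTERPRISE_PLUS',\n"
        f"  secondary_location = '{secondary}');"
    )


def attach_dataset_ddl(dataset: str, admin_project: str, name: str) -> str:
    # Assumed syntax: point the dataset at the failover reservation.
    return (
        f"ALTER SCHEMA `{dataset}`\n"
        f"SET OPTIONS (failover_reservation = '{admin_project}.{name}');"
    )


def check_same_region_pair(dataset_pair: tuple, reservation_pair: tuple) -> None:
    # The dataset must use the same (primary, secondary) regions as the reservation.
    if dataset_pair != reservation_pair:
        raise ValueError(f"dataset {dataset_pair} != reservation {reservation_pair}")
```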

Promote the failover reservation + dataset in the secondary

Fail over the reservation and associated datasets. This must be performed from the secondary region.

Fail back to original primary

Fail back the reservation and associated datasets (performed from the new secondary/old primary).
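Failover and failback are the same operation run in opposite directions, which a small helper makes explicit. The `ALTER RESERVATION ... SET OPTIONS (is_primary = TRUE)` form is our assumption about the preview syntax and should be checked against current documentation; the helper returns the region to run the statement in alongside the SQL text, and all names are hypothetical.

```python
def promote_reservation(admin_project: str, name: str, new_primary: str) -> tuple:
    """Return (region to run in, SQL) that promotes new_primary (sketch)."""
    sql = (
        f"ALTER RESERVATION `{admin_project}.region-{new_primary}.{name}`\n"
        "SET OPTIONS (is_primary = TRUE);"
    )
    # Promotion is issued from the region being promoted (the current secondary).
    return new_primary, sql


# Failover: promote the secondary region.
failover = promote_reservation("admin-proj", "prod-dr", "us-east4")
# Failback: the same call, issued from the original primary region.
failback = promote_reservation("admin-proj", "prod-dr", "us-central1")
```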

Business continuity is paramount for customers with mission-critical data environments. We are excited to make the preview of BigQuery’s managed disaster recovery feature available for your testing. You can learn more about managed disaster recovery and how to get started in the BigQuery managed disaster recovery QuickStart.

For decades, threat intelligence solutions have had two main challenges: They lack a comprehensive view of the threat landscape, and to get value from intelligence, organizations have to spend excess time, energy, and money trying to collect and operationalize the data.

Today at the RSA Conference in San Francisco, we are announcing Google Threat Intelligence, a new offering that combines the unmatched depth of our Mandiant frontline expertise, the global reach of the VirusTotal community, and the breadth of visibility only Google can deliver, based on billions of signals across devices and emails. Google Threat Intelligence includes Gemini in Threat Intelligence, our AI-powered agent that provides conversational search across our vast repository of threat intelligence, enabling customers to gain insights and protect themselves from threats faster than ever before.

“While there is no shortage of threat intelligence available, the challenge for most is to contextualize and operationalize intelligence relevant to their specific organization,” said Dave Gruber, principal analyst, Enterprise Strategy Group. “Unarguably, Google provides two of the most important pillars of threat intelligence in the industry today with VirusTotal and Mandiant. Integrating both into a single offering, enhanced with AI and Google threat insights, offers security teams a new means to operationalize actionable threat intelligence to better protect their organizations.”

Google Threat Intelligence provides unparalleled visibility into the global threat landscape. We offer deep insights from Mandiant’s leading incident response and threat research team, and combine them with our massive user and device footprint and VirusTotal’s broad crowdsourced malware database.

Google threat insights: Google protects 4 billion devices and 1.5 billion email accounts, and blocks 100 million phishing attempts per day. This provides us with a vast sensor array and a unique perspective on internet and email-borne threats that allow us to connect the dots back to attack campaigns.

Frontline intelligence: Mandiant’s elite incident responders and security consultants dissect attacker tactics and techniques, using their experience to help customers defend against sophisticated and relentless threat actors across the globe in over 1,100 investigations annually.

Human-curated threat intelligence: Mandiant’s global threat experts meticulously monitor threat actor groups for activity and changes in their behavior to contextualize ongoing investigations and provide the insights you need to respond.

Crowdsourced threat intelligence: VirusTotal’s global community of over 1 million users continuously contributes potential threat indicators, including files and URLs, to offer real-time insight into emerging attacks.

Open-source threat intelligence: We use open-source threat intelligence to enrich our knowledge base with current discoveries from the security community.

Google Threat Intelligence boasts a diverse set of sources that provide a panoramic view of the global threat landscape and the granular details needed to make informed decisions.

This comprehensive view allows Google Threat Intelligence to help protect your organization in a variety of ways, including external threat monitoring, attack surface management, digital risk protection, Indicators of Compromise (IOC) analysis, and expertise.

Traditional approaches to operationalizing threat intelligence are labor-intensive and can slow your response to evolving threats, potentially by days or weeks.

By combining our comprehensive view of the threat landscape with Gemini, we have supercharged threat research processes, augmented defense capabilities, and reduced the time it takes to identify and protect against novel threats. Customers can now condense large data sets in seconds, quickly analyze suspicious files, and simplify challenging manual threat intelligence tasks.

Gemini 1.5 Pro is a valuable part of Google Threat Intelligence, and we’ve integrated it so that it can more efficiently and effectively assist security professionals in combating malware.

Gemini 1.5 Pro offers the world’s longest context window, with support for up to 1 million tokens. It can dramatically simplify the technical and labor-intensive process of reverse engineering malware — one of the most advanced malware-analysis techniques available to cybersecurity professionals. In fact, it was able to process the entire decompiled code of the malware file for WannaCry in a single pass, taking 34 seconds to deliver its analysis and identify the killswitch.

We also offer a Gemini-driven entity extraction tool to automate data fusion and enrichment. It can automatically crawl the web for relevant open source intelligence (OSINT), and classify online industry threat reporting. It then converts this information to knowledge collections, with corresponding hunting and response packs pulled from motivations, targets, tactics, techniques, and procedures (TTPs), actors, toolkits, and Indicators of Compromise (IoCs).

Google Threat Intelligence can distill more than a decade of threat reports to produce comprehensive, custom summaries in seconds.

Google Threat Intelligence is just one way we can help you in your threat intelligence journey. Whether you need cyber threat intelligence training for your staff, assistance with prioritizing complex threats, or even a dedicated threat analyst embedded in your team, our experts can act as an extension of your own team.

Google Threat Intelligence is part of Google Cloud Security’s comprehensive security portfolio, which includes Google Security Operations, Mandiant Consulting, Security Command Center Enterprise, and Chrome Enterprise. With our offerings, organizations can address security challenges with the same capabilities Google uses to keep more people and organizations safe online than anyone else in the world.

To learn more about Google Threat Intelligence and the rest of Google Cloud Security’s comprehensive portfolio, come meet us in person at our RSA Conference booth (N5644), and catch us at our keynotes, presentations, and meetups. You can also register for our upcoming Google Threat Intelligence use-cases webinar series, and read our expert analysis and in-depth research at the Google Cloud Threat Intelligence blog.

The modern workplace relies on web-based applications and cloud services, making browsers and their sensitive data a primary target for attackers. While the risks are significant, Chrome Enterprise can help organizations simplify and strengthen their endpoint security with secure enterprise browsing.

Following our recent Chrome Enterprise Premium launch, today at the RSA Conference in San Francisco, we’re announcing a growing ecosystem of security providers who are working with us to extend Chrome Enterprise’s browser-based protections and help enterprises protect their users working on the web and across corporate applications.

Chrome Enterprise Premium offers advanced security across SaaS and private web applications for enterprises. Many organizations rely on Zscaler Private Access (ZPA) as an improved option over VPNs and firewalls to provide secure, Zero Trust access to private applications on-premises and in the cloud. Now security operations teams can add further safeguards through Chrome Enterprise Premium, including:

Data protections: Critical DLP functions including data exfiltration controls, copy, paste, and print restrictions, and watermarking capabilities. This extends Zscaler’s data protection across endpoints, email, SaaS and cloud.

Threat prevention: Advanced malware scanning, real-time phishing security, and credential protections, augmenting Zscaler’s inline inspection of encrypted traffic and built-in threat protections.

Security insights: Additional telemetry and reporting across insider and external risks.

Google has collaborated with Zscaler to provide enterprises with a solution guide that enables organizations to configure their network security products alongside Chrome Enterprise Premium for deeper security and protections.

As attacks targeting end-users become more sophisticated, a multi-layered defense that includes a strong device access policy is crucial. Signals including user identity, device security, and location can enable dynamic, risk-based access decisions that further protect corporate data.

Enterprises can now use Duo Trusted Endpoints policy to enforce device trust using built-in Chrome Enterprise signals to deny access from unknown devices — without having to deploy additional agents and extensions. This integration allows organizations to:

Verify endpoint trust at login, and block unknown devices

Manage device access from a centralized Duo dashboard

Adjust granular policies for an organization of any size in a few clicks

Duo’s Trusted Endpoints feature lets organizations grant secure access to applications with policies that verify systems using signals from Chrome.

Data loss remains a top concern for enterprises, and the browser is a critical point for stopping data leaks. Trellix DLP for Chrome Enterprise is now available as an integration to customers managing Chrome from the cloud. With the Trellix DLP integration, organizations can prevent data leaks in Chrome by:

Monitoring and blocking file uploads with sensitive content

Tracking and preventing sensitive content from being copied and pasted to websites

Controlling print activity in Chrome browser and on local workstations

When sensitive information is detected in Chrome, the user is immediately notified with a pop-up.

Current Trellix DLP and Cisco Duo customers can implement these integrations by enrolling browsers into Chrome Enterprise Core and setting up a one-time configuration, at no additional cost. Learn more about the Trellix DLP integration here and Cisco Duo integration here.

To learn more about Chrome Enterprise, and the rest of Google Cloud Security’s comprehensive portfolio including our RSAC announcements on Google Cloud Security and AI, Google Threat Intelligence, and Google Security Operations, come meet us in person at our RSA Conference booth (N5644), and catch us at our keynotes, presentations, and meetups. You can also learn more about Chrome Enterprise here.

The advent of generative AI has unlocked new opportunities to empower defenders and security professionals. We have already seen how AI can transform malware analysis at scale as we work to deliver better outcomes for defenders. In fact, using Gemini 1.5 Pro, we were recently able to reverse engineer and analyze the decompiled code of the WannaCry malware in a single pass — and identify the killswitch — in only 34 seconds.

Our vision for AI is to accelerate your ability to protect and defend against threats by shifting from manual, time-intensive efforts to assisted and, ultimately, semi-autonomous security — while providing you with curated tools and services to secure your AI data, models, applications, and infrastructure. We do this by empowering defenders with Gemini in Security, which uses SecLM, our security-tuned API, as well as providing tools and services to manage AI risk to your environment. Our Mandiant experts are able to help you secure your AI journey wherever you are.

Managing AI risk and empowering defenders with gen AI.

Today at the RSA Conference in San Francisco, we’re sharing more on our vision for the intersection between AI and cybersecurity, including how we help organizations secure AI systems and provide AI tools to support defenders. We are introducing new AI offerings from Mandiant Consulting and new features in Security Command Center Enterprise to help address security challenges when adopting AI. We are also announcing the general availability of Gemini across several security offerings including Google Threat Intelligence and Google Security Operations to further empower defenders with generative AI.

As customers integrate AI into every area of their business, they tell us that securing their use of AI is essential. The recent State of AI and Security Survey Report from the Cloud Security Alliance highlighted that while many professionals are confident in their organization’s ability to protect AI systems, a significant portion still recognize the risk of underestimating threats.

Our Secure AI Framework (SAIF) provides a taxonomy of risks associated with AI workloads and recommended mitigations. Today we are announcing new offerings from Mandiant Consulting that can help organizations support SAIF and secure the use of AI. Mandiant’s AI consulting services can help assess the security of your AI pipelines and test your AI defense and response with red teaming. These services can also help your defenders identify and implement ways to use AI to enhance cyber defenses and streamline investigative capabilities.

“The use of AI opens up a world of possibilities and enterprises recognize that in order to take advantage of the potential of these innovations, they need to get ahead of new security risks,” said Jurgen Kutscher, vice president, Mandiant Consulting, Google Cloud. “From helping secure training data to assessing AI applications for vulnerabilities, our Mandiant Consulting experts can provide recommendations based on Google’s own experience protecting and deploying AI. We’re excited to bring these new services to market to help our clients leverage AI more securely and transform their operations.”

Notebook Security Scanner identifies package vulnerabilities and recommends next steps to remediate individual packages.

We are also announcing new AI-protection capabilities that can help our customers implement SAIF by building on our release of Security Command Center Enterprise — our cloud risk-management solution that fuses cloud security and enterprise security operations:

Notebook Security Scanner, now available in preview, detects and provides remediation advice for vulnerabilities introduced by open-source software installed in managed notebooks.

Model Armor, expected to be in preview in Q3, can enable customers to inspect, route, and protect foundation model prompts and responses. It can help customers mitigate risks such as prompt injections, jailbreaks, toxic content, and sensitive data leakage. Model Armor will integrate with products across Google Cloud, including Vertex AI.

If you’d like to learn more about early access for Model Armor, you can sign up here.

Model Armor allows users to configure policies and set content safety filters to help block or redact inappropriate model prompts and responses.

Today, we’ve also shared how security teams can better defend against threats with Google Security Operations, our AI-powered platform to help empower SOC teams to more easily detect and respond to threats. Gemini in Security Operations now includes a new assisted investigation feature that navigates users through the platform based on the context of an investigation. It can help hunt for the latest threats with vital information from Google Threat Intelligence and MITRE, analyze security events, create detections using natural language, and recommend next steps to take.

Users can also ask Gemini to create a response playbook using natural language, which can simplify the time-consuming task of manually constructing one. The user can further refine the generated playbook and simulate its execution. These new enhancements can give security teams a boost across the detection and response lifecycle.

“Gemini in Security Operations is enabling us to enhance the efficiency of our Cybersecurity Operations Center program as we continue to drive operational excellence,” said Ronald Smalley, senior vice president, cybersecurity operations, Fiserv. “Detection engineers can create detections and playbooks with less effort, and security analysts can find answers quickly with intelligent summarization and natural language search. This is critical as SOC teams continue to manage increasing data volumes and need to detect, validate, and respond to events faster.”

Gemini in Security Operations aids investigations and helps users easily create rules for detections.

We also are introducing Google Threat Intelligence, a new offering that can help you reduce the time it takes to identify and protect against novel threats by bringing together investigative learnings from Mandiant frontline experts, the VirusTotal intel community, and Google threat insights from protecting billions of devices and user accounts.

With Gemini in Threat Intelligence, analysts can now conversationally search Mandiant’s vast frontline research to understand threat actor behaviors in seconds, and read AI-powered summaries of relevant open-source intelligence (OSINT) articles the platform automatically ingests to help reduce investigation time.

“Our main objective is to understand the purpose of the threat actor. The AI summaries provided by Gemini in Threat Intelligence make it easy to get an overview of the actor, information about relevant entities, and which regions they’re targeting,” said the director of information security at a leading multinational professional services organization. “The information flows really smoothly and helps us gather the intelligence we need in a fraction of the time.”

Plus, Gemini in Threat Intelligence includes Code Insight, which can inspect more than 200 file types, summarize their unique properties, and identify potentially malicious code. Gemini makes it easier for security professionals to understand the threats that matter most to their organization and take action to respond.

Gemini in Google Threat Intelligence allows users to conversationally search Mandiant’s vast corpus of frontline research.

With rapid advances in AI technology, the line of what is possible is a moving target. We have a vision for a world in which the practice of “doing security” is less laborious and more durable, as AI offloads routine tasks and frees the experts to focus on the most complex issues.

Organizations can now address security challenges with the same capabilities that Google uses to keep more people and organizations safe online than anyone else in the world.

To learn more about AI and security, and the rest of Google Cloud Security’s comprehensive portfolio, come meet us in person at our RSA Conference booth (N5644). You can also catch us at our RSA Conference keynotes, presentations, and meetups, and get the latest AI and Security updates here.

In the generative AI-era, security teams are looking for a fully-operational, high-performing security operations solution that can drive productivity while empowering defenders to detect and mitigate new threats.

Today at the RSA Conference in San Francisco, we’re announcing AI innovations across the Google Cloud Security portfolio, including Google Threat Intelligence, and the latest release of Google Security Operations. Today’s update is designed to help reduce the do-it-yourself complexity of SecOps and enhance the productivity of your entire Security Operations Center.

At Next ‘24, we shared how Applied Threat Intelligence can help teams turn intelligence into action, uncover more threats with less effort, and unlock deeper threat hunting and investigation workflows. Today we are unveiling new features that will use AI to automatically generate detections based on new threat discoveries. Coming later this year, this new capability will help enable you to identify malicious activity operating in your environment, and share clear directions that guide you through triage and response.

“Google Security Operations provides access to unique threat intelligence and advanced capabilities that are highly integrated into the platform. It enables security teams to surface the latest threats in a turnkey way that doesn’t require complicated engineering,” said Michelle Abraham, research director, IDC. “Google is a potential partner for organizations in the fight against existing and emerging threats.”

Google Security Operations is a unified, AI and intel-driven platform for threat detection, investigation, and response.

To help reduce manual processes and provide better security outcomes for our customers, Google Security Operations includes a rich set of curated detections. Developed and maintained regularly by Google and Mandiant experts, curated detections can enable customers to detect threats relevant to their environment. Notable new curated detections include:

Cloud detections can address serverless threats, cryptomining incidents across Google Cloud, all Google Cloud and Security Command Center Enterprise findings, anomalous user behavior rules, machine learning-generated lists of prioritized endpoint alerts (based on factors such as user and entity context), and baseline coverage for AWS including identity, compute, data services, and secret management. We have also added detections based on learnings from the Mandiant Managed Defense team. Cloud detections are now available in the Google Security Operations Enterprise and Enterprise Plus packages.

Frontline threat detections can provide coverage for recently detected methodologies and are based on threat actor tactics, techniques, and procedures (TTPs), including those of nation-states, and on newly detected malware families. New threats discovered by Mandiant’s elite team, including during incident response engagements, are then made available as detections. Frontline threat detections are now available in the Google Security Operations Enterprise Plus package.

The addition of Gemini in Security Operations can elevate the skills of your security team. It can help reduce the time security analysts spend writing, running, and refining searches and triaging complex cases by approximately sevenfold. Security teams can search for additional context, better understand threat actor campaigns and tactics, initiate response sequences and receive guided recommendations on next steps — all using natural language. Today we are sharing two exciting updates to Gemini in Security Operations.

Now generally available, the Investigation Assistant feature can help security professionals make faster decisions and respond to threats with more precision and speed by answering questions, summarizing events, hunting for threats, creating rules, and recommending actions based on the context of investigations.

Investigation Assistant can help answer questions, summarize events, hunt for threats, create rules, and recommend actions.

Playbook Assistant, now in preview, can help teams easily build response playbooks, customize configurations, and incorporate best practices — helping simplify time-consuming tasks that require deep expertise.

Playbook Assistant can help build response playbooks, customize configurations, and incorporate best practices.

Getting data into the system and maintaining the pipeline is a critical yet time-consuming task in security operations. As log sources change and new fields need to be extracted, security engineers and architects are often required to spend considerable time writing new parsing logic and ensuring backward compatibility.

Today we are excited to announce that Google Security Operations can now automatically parse log files by extracting all key-value pairs to make them available for search, rules, and analytics. Available in preview, automatic parsing can help reduce the overall maintenance overhead of parsers and the time-consuming task of creating custom parsers. It supports JSON-based logs, and we will be adding support for other log formats. Automatically parsing log files can help security teams have the right data and context, making for faster and more effective investigations and detection authoring.
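The idea of extracting every key-value pair from a JSON log can be illustrated with a short flattening routine. This is our own sketch of the general technique, not the Google Security Operations parser; the dotted-path naming (`user.name`, `tags[0]`) is an arbitrary choice for illustration.

```python
import json


def extract_key_values(log_line: str, sep: str = ".") -> dict:
    """Flatten every key-value pair in a JSON log line into path -> value."""
    flat = {}

    def walk(value, path):
        if isinstance(value, dict):
            # Nested objects contribute their keys to the path.
            for key, child in value.items():
                walk(child, f"{path}{sep}{key}" if path else key)
        elif isinstance(value, list):
            # Array elements are addressed by index.
            for i, child in enumerate(value):
                walk(child, f"{path}[{i}]")
        else:
            # Scalars (strings, numbers, booleans, null) are the extracted values.
            flat[path] = value

    walk(json.loads(log_line), "")
    return flat


line = '{"user": {"name": "alice", "ip": "10.0.0.1"}, "action": "login", "tags": ["vpn", "mfa"]}'
print(extract_key_values(line))
# {'user.name': 'alice', 'user.ip': '10.0.0.1', 'action': 'login', 'tags[0]': 'vpn', 'tags[1]': 'mfa'}
```

Once every field has a stable path, new fields in a changed log source become searchable without anyone writing parser logic for them, which is the maintenance saving described above.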

For customers in need of expert support for managing Google Security Operations, we’ve got you covered. Google Security Operations can also work in concert with Mandiant Managed Defense and Mandiant Hunt, which can help you to reduce risks to your organization. Mandiant’s team of seasoned defenders, analysts, and threat hunters work seamlessly with your security team and the AI-infused capabilities of Google Security Operations to quickly and effectively hunt or monitor, detect, triage, investigate, and respond to incidents.

And for our public sector customers that may have more specialized requirements, we offer Google SecOps CyberShield to help governments worldwide build an enhanced cyber threat capability.

To learn more about Google Security Operations, and the rest of Google Cloud Security’s comprehensive portfolio including an expanded Chrome Enterprise ecosystem, come meet us in person at our RSA Conference booth (N5644). You can also catch us at our keynotes, presentations, and meetups including our session, “Bye-Bye DIY: Frictionless Security Operations with Google,” on Tuesday, May 7, at 1:15 p.m. PDT.

Not attending RSAC? Join us for our upcoming webinar, “Stay ahead of the latest threats with intelligence-driven security operations,” on Wednesday, May 22, at 11:00 a.m. PDT.


Azure Classic roles will be retired on 31 August 2024. Because Azure Autoscale notifications depend on the Azure Classic roles, transition your Autoscale notifications to use only additional email addresses.


Today, AWS introduces a new EC2 API to retrieve the public endorsement key (EkPub) for the Nitro Trusted Platform Module (NitroTPM) of an Amazon EC2 instance.


Amazon Pinpoint now offers country rules, a new feature that allows developers to control the specific countries they send SMS and voice messages to. This enhancement helps organizations align their message sending activities to the precise list of countries where they operate.


Amazon Personalize now makes it easier than ever to remove users from your datasets with a new deletion API. Amazon Personalize uses datasets provided by customers to train custom personalization models on their behalf. This new capability allows you to delete records about users from your datasets, including user metadata and user interactions. This helps you maintain data for your compliance programs and keep your data current as your user base changes. Once the deletion is complete, Personalize will no longer store information about the deleted users and therefore will not consider those users for model training.


Amazon RDS for SQL Server now supports SQL Server Analysis Services (SSAS) in Multidimensional mode for SQL Server 2019. There is no additional cost to install SSAS directly on your Amazon RDS for SQL Server DB instance.


Customers in AWS Canada West (Calgary) Region can now use AWS Transfer Family.


Starting today, you can use Amazon Route 53 Resolver DNS Firewall in the Canada West (Calgary) Region.


Amazon DynamoDB on-demand is a serverless, pay-per-request billing option that can serve thousands of requests per second without capacity planning. Previously, the on-demand request rate was only limited by the default throughput quota (40K read request units and 40K write request units), which uniformly applied to all tables within the account, and could not be customized or tailored for diverse workloads and differing requirements. Since on-demand mode scales instantly to accommodate varying traffic patterns, a piece of hastily written or unoptimized code could rapidly scale up and consume resources, making it difficult to keep costs and usage bounded.


Starting today, customers can use AWS Control Tower in the AWS Canada West (Calgary) Region. With this launch, AWS Control Tower is available in 29 AWS Regions and the AWS GovCloud (US) Regions. AWS Control Tower offers the easiest way to set up and govern a secure, multi-account AWS environment. It simplifies AWS experiences by orchestrating multiple AWS services on your behalf while maintaining the security and compliance needs of your organization. You can set up a multi-account AWS environment in 30 minutes or less, govern new or existing account configurations, gain visibility into compliance status, and enforce controls at scale.


Amazon CloudWatch Internet Monitor, an internet traffic monitoring service for AWS applications that gives you a global view of traffic patterns and health events, now displays IPv4 prefixes in its console dashboard. Using this data in Internet Monitor, you can get more details about your application traffic and health events. For example, you can do the following:

View the IPv4 prefixes associated with a client location that is impacted by a health event

View IPv4 prefixes associated with a client location

Filter and search traffic data by the network associated with an IPv4 prefix or IPv4 address
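As a small illustration of filtering traffic by an IPv4 prefix or a single address, the Python sketch below uses the standard `ipaddress` module. The `TrafficRecord` shape and the sample addresses (taken from documentation ranges) are hypothetical; this does not call the Internet Monitor API.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class TrafficRecord:
    """Hypothetical traffic sample; not Internet Monitor's actual data model."""
    client_ip: str
    network: str   # name of the network (for example, an ISP) the client uses
    bytes_in: int


def filter_traffic(records: list[TrafficRecord], prefix_or_address: str) -> list[TrafficRecord]:
    """Keep records whose client IP lies inside the given IPv4 prefix.

    A bare address such as "198.51.100.7" is parsed as a /32 prefix.
    """
    target = ipaddress.IPv4Network(prefix_or_address)
    return [r for r in records if ipaddress.IPv4Address(r.client_ip) in target]


if __name__ == "__main__":
    samples = [
        TrafficRecord("203.0.113.10", "ExampleNet", 1200),
        TrafficRecord("203.0.113.200", "ExampleNet", 800),
        TrafficRecord("198.51.100.7", "OtherNet", 300),
    ]
    for record in filter_traffic(samples, "203.0.113.0/24"):
        print(record.client_ip, record.network, record.bytes_in)
```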


The intricate hierarchical data structures in data warehouses and lakes sourced from diverse origins can make data modeling a protracted and error-prone process. To quickly adapt and create data models that meet evolving business requirements without having to rework them excessively, you need data models that are flexible, modular and adaptable enough to accommodate many requirements. This requires advanced technologies, proficient personnel, and robust methodologies.

The advancements in generative AI offer numerous opportunities to address these challenges. Multimodal large language models (LLMs) can analyze examples of data in the data lake, including text descriptions, code, and even images of existing databases. By understanding this data and its relationships, LLMs can suggest or even automatically generate schema layouts, simplifying the laborious process of implementing the data model within the database, so developers can focus on higher value data management tasks.

In this blog, we walk you through how to use multimodal LLMs in BigQuery to create a database schema. To do so, we’ll take a real-world example of entity relationship (ER) diagrams and examples of data definition language (DDL) statements, and create a database schema in three steps.

For this demonstration, we will use Data Beans, a fictional technology company built on BigQuery that provides a SaaS platform to coffee sellers. Data Beans leverages BigQuery’s integration with Vertex AI to access Google AI models like Gemini 1.0 Pro Vision to analyze unstructured data and integrate it with structured data, while using BigQuery to help with data modeling and generating insights.

The first step is to create an ER diagram using your favorite modeling tool, or to take a screenshot of an existing ER diagram. The ER diagram can contain primary key and foreign key relationships, and will then be used as input to the Gemini 1.0 Pro Vision model to create the relevant BigQuery DDL statements.

Next, to create the DDL statements in BigQuery, write a prompt that takes an ER diagram image as input. The prompt should include detailed, relevant rules for the Gemini model to follow. It should also capture learnings from previous iterations, so keep updating your prompt as you experiment and iterate on it. These learnings can be provided to the model as examples, for instance a valid schema description for BigQuery. Giving the model a working example to follow helps it produce DDL that follows your desired rules.
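A minimal sketch of assembling such a prompt in Python: it combines a task description, a numbered list of rules, and a worked example DDL into one text prompt to send alongside the image. The rules, the example table, and the `data_beans` dataset name are hypothetical placeholders, not the prompt used in the Data Beans demo.

```python
from collections.abc import Sequence
from textwrap import dedent

# Hypothetical rules; refine them with what you learn from each iteration.
RULES: tuple[str, ...] = (
    "Output BigQuery DDL only, with one CREATE TABLE statement per entity.",
    "Declare primary keys as PRIMARY KEY (...) NOT ENFORCED.",
    "Declare foreign keys as FOREIGN KEY (...) REFERENCES ... NOT ENFORCED.",
    "Qualify every table name with the target dataset.",
)

# Hypothetical worked example that shows the model the expected output style.
EXAMPLE_DDL = dedent("""\
    CREATE TABLE data_beans.customer (
      customer_id INT64 NOT NULL,
      customer_name STRING,
      PRIMARY KEY (customer_id) NOT ENFORCED
    );""")


def build_ddl_prompt(dataset: str, rules: Sequence[str] = RULES, example: str = EXAMPLE_DDL) -> str:
    """Assemble a text prompt to send alongside the ER diagram image."""
    numbered = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    return (
        "You are given an image of an entity relationship (ER) diagram.\n"
        f"Write BigQuery DDL for every entity in the diagram, using the dataset `{dataset}`.\n\n"
        f"Follow these rules:\n{numbered}\n\n"
        f"Here is an example of valid output:\n{example}\n"
    )


if __name__ == "__main__":
    print(build_ddl_prompt("data_beans"))
```

Keeping the rules and the example as parameters makes it straightforward to update them between iterations, and to generate prompts programmatically when you need many of them.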

At this point you have both an image of an ER diagram and a prompt to present to your LLM.

After creating a prompt in Step 2, you are now ready to call the Gemini 1.0 Pro Vision model to generate the output, using the image of your ER diagram as input (left side of Figure 1). You can do this in a number of ways: either directly from Colab notebooks using Python, or through BigQuery ML, leveraging its integration with Vertex AI.
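For the Python route, a minimal sketch might look like the following. It assumes the `google-cloud-aiplatform` package (which provides `vertexai.generative_models`), Google Cloud credentials, an ER diagram stored as a PNG in Cloud Storage, and the `gemini-1.0-pro-vision` model ID; the project, location, and image URI are yours to supply. The helper `extract_ddl` is hypothetical: it pulls `CREATE` statements out of the model's text response, which often arrives wrapped in a markdown code fence. The BigQuery ML route is not shown.

```python
import re

FENCE = "`" * 3  # a markdown code fence, built this way so it can't close this block


def extract_ddl(model_text: str) -> list[str]:
    """Return the CREATE statements found in a model response.

    If the response contains a fenced block, only its contents are used.
    Splitting on ';' is naive: it assumes no semicolons inside string
    literals or comments.
    """
    pattern = FENCE + r"(?:sql)?\s*\n(.*?)" + FENCE
    match = re.search(pattern, model_text, re.DOTALL | re.IGNORECASE)
    body = match.group(1) if match else model_text
    statements = [s.strip() for s in body.split(";")]
    return [s + ";" for s in statements if s.upper().startswith("CREATE")]


def generate_ddl(image_uri: str, prompt: str, project: str, location: str = "us-central1") -> list[str]:
    """Send the ER diagram image and prompt to Gemini on Vertex AI.

    Requires google-cloud-aiplatform and credentials. The import is deferred
    so that extract_ddl can be used and tested without them.
    """
    import vertexai
    from vertexai.generative_models import GenerativeModel, Part

    vertexai.init(project=project, location=location)
    model = GenerativeModel("gemini-1.0-pro-vision")
    image = Part.from_uri(image_uri, mime_type="image/png")
    response = model.generate_content([image, prompt])
    return extract_ddl(response.text)
```

Reviewing the extracted statements before running them in BigQuery is still advisable, since the model's output can vary between calls.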

In this demonstration, we saw how the multimodal Gemini model can streamline the creation of data and schemas. And while manually writing prompts is fine, it can be a daunting task when you need to do it at enterprise scale to create thousands of assets such as DDLs. Leveraging the above process, you can parameterize and automate prompt generation, dramatically speeding up the workflow and providing consistency across thousands of generated artifacts. You can find the complete Colab Enterprise notebook source code here.

BigQuery ML includes many new features that let you use Gemini Pro capabilities; for more, please see the documentation. Then, check out this tutorial to learn how to apply Google’s models to your data, deploy models, and operationalize ML workflows, all without ever moving your data from BigQuery. Finally, for a behind-the-scenes look at how we made this demo, watch this video on how to build an end-to-end data analytics and AI application using advanced models like Gemini directly from BigQuery.

Googlers Luis Velasco, Navjot Singh, Skander Larbi and Manoj Gunti contributed to this blog post. Many Googlers contributed to make these features a reality.


In the realm of modern application design, developers have a range of choices for crafting architectures that are not only simple, but also scalable, performant, and resilient. Container platforms like Kubernetes (k8s) let users seamlessly adjust node and pod specifications so that services can scale. This scalability doesn’t come at the expense of elasticity, and it helps ensure consistent performance for service consumers. So it’s no surprise that Kubernetes has become the de facto standard for building distributed and resilient systems in medium-to-large organizations.

Unfortunately, the level of maturity and standardization in the Kubernetes space available to system designers in the application layer doesn’t usually extend to the database layer that powers these services. And it goes without saying that the database layer also needs to be elastic, scalable, highly available, and resilient.

Further, the challenges are amplified when these services:

are required to manage (transactional) state, or

orchestrate distributed processes across multiple (micro)services.

Traditional relational database management systems (RDBMSs) bring with them side effects that are not aligned with a microservices way of thinking, and entail fairly significant trade-offs. In the sections below, we dive deeper into the scalability, availability, and operational challenges that application designers face specifically within the database tier. We then conclude with a description of how Spanner can help you build microservices-based systems without the often unspoken “impedance mismatch” between the application layer and the database layer.

We look at this problem from a scalability and availability perspective, specifically in the context of databases that serve OLTP workloads. We explore what it takes to accommodate highly variable workloads, and the complexity of handling higher demand with replicas, sharding, or both.

When it comes to scaling a traditional relational database, you have two choices (leaving aside caching strategies):

Scale up: To scale a database vertically, you typically augment its resources by adding more CPU power, increasing memory, or adding faster disks. However, these scale-up procedures usually incur downtime, affecting the availability of dependent services.

Scale out: Although vertically scaling up databases can be effective initially, it eventually encounters limitations. The alternative is to scale out database traffic, by introducing additional read replicas, employing sharding techniques, or even a combination of both. These methods come with their own trade-offs and introduce complexities, which lead to operational overhead.

In terms of availability, databases require maintenance, which means coordinating regular periods of downtime. Relational databases are also vulnerable to hardware defects, network partitions, and data center outages, which bring a host of disaster recovery (DR) challenges that you need to address and plan for.

Examples of planned downtime:

OS or database engine upgrades or patches

Schema changes – Most database engines require downtime for the duration of a schema change

Examples of unplanned downtime:

Zonal or regional outages

Network partitions

Most “mature” practices for handling traditional RDBMSs run counter to modern application design principles and can significantly impact the availability and performance of services. Depending on the nature of the business, this can affect revenue streams, regulatory compliance, or customer satisfaction.

Let’s go over some of the key challenges associated with RDBMSs.

Database read replicas are a suitable tool for scaling out read operations and mitigating planned downtime, so that reads are at least available to the application layer.

In order to reduce load on the primary database instance, replicas can be created to distribute read load across multiple machines and thus handle more read requests concurrently.

Replication between the primary and the read replicas is usually asynchronous. This means there can be a lag between when data is written to the primary database and when it is replicated to the read replicas, so reads directed to the replicas can return slightly outdated (stale) data. It also means that queries requiring guaranteed consistency must be directed to the primary instance. Synchronous replication is rarely an option, particularly in geo-distributed topologies, because it is complex and comes with a range of issues, such as:

Limiting the scalability of the system, as every write operation must wait for confirmation from the replica, causing performance bottlenecks and increasing latency

Introducing a single point of failure — if the replica becomes unavailable or experiences issues, it can impact the availability of the primary database as well
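The routing rule implied above (writes and consistency-sensitive reads go to the primary, other reads can go to replicas) can be sketched as a small Python router. This is a hypothetical illustration with round-robin replica selection; in practice this logic usually lives in a driver, proxy, or connection pool.

```python
import itertools
from collections.abc import Iterator
from dataclasses import dataclass, field


@dataclass
class ReplicaRouter:
    """Route queries between one primary and asynchronously replicated read replicas.

    Endpoints are plain strings standing in for real database connections.
    """
    primary: str
    replicas: list[str]
    _next_replica: Iterator[str] = field(init=False, repr=False)

    def __post_init__(self) -> None:
        # With no replicas configured, all reads fall back to the primary.
        self._next_replica = itertools.cycle(self.replicas or [self.primary])

    def route(self, is_write: bool, needs_consistency: bool = False) -> str:
        # Writes, and reads that cannot tolerate replication lag, must hit the primary.
        if is_write or needs_consistency:
            return self.primary
        # Everything else is spread round-robin across replicas and may be slightly stale.
        return next(self._next_replica)


if __name__ == "__main__":
    router = ReplicaRouter("primary", ["replica-1", "replica-2"])
    print(router.route(is_write=True))
    print([router.route(is_write=False) for _ in range(3)])
```

Note that only reads benefit: every write still lands on the single primary, which is the write bottleneck discussed next.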

And lastly, write throughput can become a bottleneck, because a single database can only handle so much write traffic before performance degrades. Scaling writes still requires vertical scaling (more powerful hardware) or sharding (splitting data across multiple databases), which can lead to downtime, additional costs, and operational toil that escalates non-linearly. Now let’s look at sharding challenges in a bit more detail.

Sharding is a powerful tool for database scalability. When implemented correctly, it can enable applications to handle a much larger volume of read and write transactions. However, sharding does not come without its challenges and brings its own set of complexities that need careful navigation.

There are multiple ways to shard databases. For instance, they can be split by:

user ID ranges,

regions, or

channels (e.g. web, mobile).

As shown in the above example, sharding by user ID or region can lead to significant performance improvements, because each database hosts a smaller range of data and traffic can be spread across these databases.
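The user ID range approach can be sketched with a sorted list of boundaries and a binary search. The boundaries and shard names below are hypothetical; sharding by region or channel could use a plain dictionary lookup instead.

```python
import bisect


class RangeShardRouter:
    """Map a user ID to a shard using sorted boundaries.

    Hypothetical layout: shard i holds user IDs in [bounds[i-1], bounds[i]);
    the first shard takes everything below bounds[0] and the last shard
    takes everything at or above the final boundary.
    """

    def __init__(self, upper_bounds: list[int], shard_names: list[str]) -> None:
        if len(shard_names) != len(upper_bounds) + 1:
            raise ValueError("need exactly one more shard than boundaries")
        if upper_bounds != sorted(upper_bounds):
            raise ValueError("boundaries must be sorted")
        self.upper_bounds = upper_bounds
        self.shard_names = shard_names

    def shard_for(self, user_id: int) -> str:
        # bisect_right counts how many boundaries are <= user_id,
        # which is exactly the index of the owning shard.
        return self.shard_names[bisect.bisect_right(self.upper_bounds, user_id)]


if __name__ == "__main__":
    router = RangeShardRouter([1_000_000, 2_000_000], ["users-0", "users-1", "users-2"])
    for uid in (5, 1_000_000, 2_500_000):
        print(uid, "->", router.shard_for(uid))
```

The boundaries themselves encode the sharding decision, which is why a poor choice (for example, most active users falling in one range) leads directly to the imbalance described below.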

Key considerations:

Deciding on the “right” kind of sharding: One of the primary challenges of sharding is the initial setup. Deciding on a sharding key, whether it be user ID, region, or another attribute, requires a deep understanding of your data access patterns. A poorly chosen sharding key can result in uneven data distribution, known as “shard imbalance,” which can significantly erode the performance benefits of sharding.

Data integrity: When data is spread across multiple shards, maintaining foreign-key relationships becomes difficult. Transactions that span multiple shards become complex and can result in increased latency and weaker integrity guarantees.

Operational complexity: Sharding introduces operational complexity. Managing multiple databases requires a more sophisticated approach to maintenance, backups, and monitoring. Each shard may need to be backed up separately, and restoring a sharded database to a consistent state can be challenging.

Re-sharding: As an application grows, the chosen sharding scheme might need to change. This process involves redistributing the data across a new set of shards, which can be time-consuming and risky, often requiring significant downtime or degraded performance during the transition.

Increased development complexity: Application logic can become more complex because developers must account for the distribution of data. This could mean additional logic for routing queries to the correct shard, handling partial failures, and ensuring that transactions that need to operate across shards maintain consistency.
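To see why re-sharding can be so disruptive, consider a naive hash-modulo scheme, one common way to assign keys to shards. The Python sketch below (key names and shard counts are hypothetical) measures what share of keys would change shards if four shards became five.

```python
import hashlib


def shard_of(key: str, num_shards: int) -> int:
    # Use a stable hash: Python's built-in hash() for str is randomized per process.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def fraction_moved(keys: list[str], old_shards: int, new_shards: int) -> float:
    """Share of keys whose shard assignment changes after re-sharding."""
    if not keys:
        return 0.0
    moved = sum(1 for k in keys if shard_of(k, old_shards) != shard_of(k, new_shards))
    return moved / len(keys)


if __name__ == "__main__":
    user_ids = [f"user-{i}" for i in range(100_000)]
    print(f"4 -> 5 shards: {fraction_moved(user_ids, 4, 5):.1%} of keys move")
```

With a uniform hash, a key stays on its shard only when its hash has the same remainder modulo 4 and modulo 5, which holds for 4 out of every 20 values, so roughly 80% of keys move. Schemes such as consistent hashing are designed to reduce this movement, though they don’t remove the need to migrate data.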

Over time, database complexity can grow along with increased traffic, adding further toil to operations. For large systems, a combination of sharding along with attached scale-out read replicas might be required to help ensure cost-effective scalability and performance.

This dual-strategy approach, while effective in handling increasing traffic, significantly ramps up the complexity of the system’s architecture. The above illustration captures what it takes to add scalability and availability to a transactional relational database powering a service. It doesn’t even include full details on DR (e.g. backups) or geo-redundancy, nor does it cater to zero-to-low RPO/RTO requirements.

Furthermore, the dual-strategy approach described above can:

negatively impact the ease of service maintenance

increase operational demands, and

elevate the risk associated with the resolution of incidents

NoSQL databases began to emerge in the early 2000s as a response to traditional RDBMSs’ above-mentioned limitations. In the new era of big data and web-scale applications, NoSQL databases were designed to overcome the challenges of scalability, performance, flexibility and availability that were imposed by the growing volume of semi-structured data.

However, the key tradeoff they made was to drop sound relational models, SQL, and support for ACID-compliant transactions. Many prominent system architects have since questioned the wisdom of abandoning these well-worn relational concepts for OLTP workloads, as they are essential features that still power mission-critical applications. As a result, there’s been a recent trend to (re)introduce relational database features into NoSQL databases, such as ACID transactions in MongoDB and the Cassandra Query Language (CQL) in Cassandra.

Spanner eliminates much of this complexity and helps facilitate a simple, easy-to-maintain architecture without most of the above-mentioned compromises. It combines relational concepts and features (SQL, ACID transactions) with seamless horizontal scalability, and provides the geo-redundancy and up to 99.999% availability that you want when designing a microservices-based application.

We want to emphasize that we’re not arguing that Spanner is only a good fit for microservices. All the things that make Spanner a great fit for microservices also make it great for monolithic applications.

To summarize, a microservices architecture built on Spanner allows software architects to design systems where both the application and database provide:

“Scale insurance” for future growth scenarios

An easy way to handle traffic spikes

Cost efficiency through Spanner’s elastic and instant compute provisioning

Up to 99.999% availability with geo-redundancy

No downtime windows (for maintenance or other upgrades)

Enterprise-grade security such as encryption at rest and in transit

Features to cater for transactional workloads

Increases in developer productivity (e.g. SQL)

You can learn more about what makes Spanner unique and how it’s being used today. Or try it yourself for free for 90 days, or for as little as $65 USD/month for a production-ready instance that grows with your business without downtime or disruptive re-architecture.


Ensuring application and service teams have the resources they need is crucial for platform administrators. Fleet team management features in Google Kubernetes Engine (GKE) make this easier, allowing each team to function as a separate “tenant” within a fleet. In conjunction with Config Sync, a GitOps service in GKE, platform administrators can streamline resource management for their teams across the fleet.

Specifically, with Config Sync team scopes, platform admins can define fleet-wide and team-specific cluster configurations such as resource quotas and network policies, allowing each application team to manage their own workloads within designated namespaces across clusters.

Let’s walk through a few scenarios.

Let’s say you need to provision resources for frontend and backend teams, each requiring their own tenant space. Using team scopes and fleet namespaces, you can control which teams access specific namespaces on specific member clusters.

For example, the backend team might access their bookstore and shoestore namespaces on us-east-cluster and us-west-cluster clusters, while the frontend team has their frontend-a and frontend-b namespaces on all three member clusters.
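The tenancy layout above can be modeled as plain data to check which namespaces each member cluster should end up with. This Python sketch is hypothetical: it is not a fleet API, and `us-central-cluster` is an invented name for the third member cluster, which the example does not name.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TeamScope:
    """A team's fleet namespaces and the member clusters bound to its scope."""
    name: str
    namespaces: tuple[str, ...]
    clusters: tuple[str, ...]


def namespaces_per_cluster(scopes: list[TeamScope]) -> dict[str, dict[str, str]]:
    """Return {cluster: {namespace: owning team scope}}."""
    layout: defaultdict[str, dict[str, str]] = defaultdict(dict)
    for scope in scopes:
        for cluster in scope.clusters:
            for namespace in scope.namespaces:
                layout[cluster][namespace] = scope.name
    return dict(layout)


SCOPES = [
    TeamScope("backend", ("bookstore", "shoestore"), ("us-east-cluster", "us-west-cluster")),
    TeamScope(
        "frontend",
        ("frontend-a", "frontend-b"),
        # "us-central-cluster" is a hypothetical name for the third member cluster.
        ("us-east-cluster", "us-west-cluster", "us-central-cluster"),
    ),
]

if __name__ == "__main__":
    for cluster, namespaces in sorted(namespaces_per_cluster(SCOPES).items()):
        print(cluster, sorted(namespaces))
```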

Unlocking Dynamic Resource Provisioning with Config Sync

You can enable Config Sync by default at the fleet level using Terraform. Here’s a sample Terraform configuration:

Note: Fleet defaults are only applied to new clusters created in the fleet.

This Terraform configuration enables Config Sync as a default fleet-level feature. It installs Config Sync and instructs it to fetch Kubernetes manifests from a Git repository (specifically, the “main” branch and the “fleet-tenancy/config” folder). This configuration automatically applies to all clusters subsequently created within the fleet. This approach offers a powerful way of configuring manifests across fleet clusters without the need for manual installation and configuration on individual clusters.

Now that you’ve configured Config Sync as a default fleet setting, you might want to sync specific Kubernetes resources to designated namespaces and clusters for each team. Integrating Config Sync with team scopes streamlines this process.

Setting team scope

Following this example, let’s assume you want to apply a different network policy for the backend team compared to the frontend team. Fleet team management features simplify the process of provisioning and managing infrastructure resources for individual teams, treating each team as a separate “tenant” within the fleet.

To manage separate tenancy, as shown in the above team scope diagram, first set up team scopes for the backend and frontend teams. This involves defining fleet-level namespaces and adding fleet member clusters to each team scope.

Now, let’s dive into those Kubernetes manifests that Config Sync syncs into the clusters.

Applying team scope in Config Sync

Each fleet namespace in the cluster is automatically labeled with fleet.gke.io/fleet-scope: <scope name>. For example, the backend team scope contains the fleet namespaces bookstore and shoestore, both labeled with fleet.gke.io/fleet-scope: backend.

Config Sync’s NamespaceSelector utilizes this label to target specific namespaces within a team scope. Here’s the configuration for the backend team:

Applying NetworkPolicies for the backend team

By annotating resources with configmanagement.gke.io/namespace-selector: <NamespaceSelector name>, they’re automatically applied to the right namespaces. Here’s the NetworkPolicy of the backend team:

This NetworkPolicy is automatically provisioned in the backend team’s bookstore and shoestore namespaces, adapting to fleet changes like adding or removing namespaces and member clusters.
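The effect of a `matchLabels`-style selector can be modeled in a few lines: a namespace is selected when its labels contain every key/value pair in the selector. The sketch below is a simplified Python illustration of that rule over a hypothetical set of namespaces on one member cluster, not Config Sync’s implementation; it shows why the backend NetworkPolicy lands only in `bookstore` and `shoestore`.

```python
def matches(labels: dict[str, str], match_labels: dict[str, str]) -> bool:
    # matchLabels semantics: every selector pair must be present with an equal value.
    return all(labels.get(key) == value for key, value in match_labels.items())


def select_namespaces(namespaces: dict[str, dict[str, str]], match_labels: dict[str, str]) -> list[str]:
    """Names of the namespaces whose labels satisfy the selector, sorted."""
    return sorted(name for name, labels in namespaces.items() if matches(labels, match_labels))


# Hypothetical namespaces on one member cluster. Fleet namespaces carry the
# fleet.gke.io/fleet-scope label; kube-system is not part of any team scope.
CLUSTER_NAMESPACES = {
    "bookstore": {"fleet.gke.io/fleet-scope": "backend"},
    "shoestore": {"fleet.gke.io/fleet-scope": "backend"},
    "frontend-a": {"fleet.gke.io/fleet-scope": "frontend"},
    "kube-system": {},
}

BACKEND_SELECTOR = {"fleet.gke.io/fleet-scope": "backend"}

if __name__ == "__main__":
    print(select_namespaces(CLUSTER_NAMESPACES, BACKEND_SELECTOR))
```

Because selection is computed from labels rather than a fixed list, adding a namespace or member cluster to the backend scope changes the result without editing the selector, which mirrors the dynamic behavior described above.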

Extending the concept: ResourceQuotas for the frontend team

Here’s how a ResourceQuota is dynamically applied to the frontend team’s namespaces:

Similarly, this ResourceQuota targets the frontend team’s frontend-a and frontend-b namespaces, dynamically adjusting as the fleet’s namespaces and member clusters evolve.

Delegating resource management with Config Sync: Empowering the backend team

To allow the backend team to manage their own resources within their designated bookstore namespace, you can use Config Sync’s RepoSync, and a slightly different NamespaceSelector.

Targeting a specific fleet namespace

To zero in on the backend team’s bookstore namespace, the following NamespaceSelector targets both the team scope and the namespace name by labels:

Introducing RepoSync

Another Config Sync feature is RepoSync, which lets you delegate resource management within a specific namespace. For security reasons, RepoSync has no default access; you must explicitly grant the necessary RBAC permissions to the namespace.

Leveraging the NamespaceSelector, the following RepoSync resource and its respective RoleBinding can be applied dynamically to all bookstore namespaces across the backend team’s member clusters. The RepoSync points it to a repository owned by the backend team:

Note: The .spec.git section would reference the backend team’s repository.

The backend team’s repository contains a ConfigMap. Config Sync ensures that the ConfigMap is applied to the bookstore namespaces across all of the backend team’s member clusters, supporting a GitOps approach to management.

Managing resources across multiple teams within a fleet of clusters can be complex. Google Cloud’s fleet team management features, combined with Config Sync, provide an effective solution to streamline this process.

In this blog, we explored a scenario with frontend and backend teams, each requiring their own tenant spaces and resources (NetworkPolicies, ResourceQuotas, RepoSync). Using Config Sync in conjunction with the fleet management features, we automated the provisioning of these resources, helping to ensure a consistent and scalable setup.

Next steps

Learn how to use Config Sync to sync Kubernetes resources to team scopes and namespaces.

To experiment with this setup, visit the example repository. Config Sync configuration settings are located within the config_sync block of the Terraform google_gke_hub_feature resource.

For simplicity, this example uses a public Git repository. To use a private repository, create a Secret in each cluster to store authentication credentials.

To learn more about Config Sync, see Config Sync overview.

To learn more about fleets, see Fleet management overview.


One challenge with any distributed system, including Workflows, is ensuring that requests sent from one service to another are processed exactly once, when needed; for example, when placing a customer order in a shipping queue, withdrawing funds from a bank account, or processing a payment.

In this blog post, we’ll provide an example of a website invoking Workflows, and Workflows in turn invoking a Cloud Function. We’ll show how to make sure that both the workflow and the Cloud Function logic run only once. We’ll also talk about how to invoke Workflows exactly once when using HTTP callbacks, Pub/Sub messages, or Cloud Tasks.

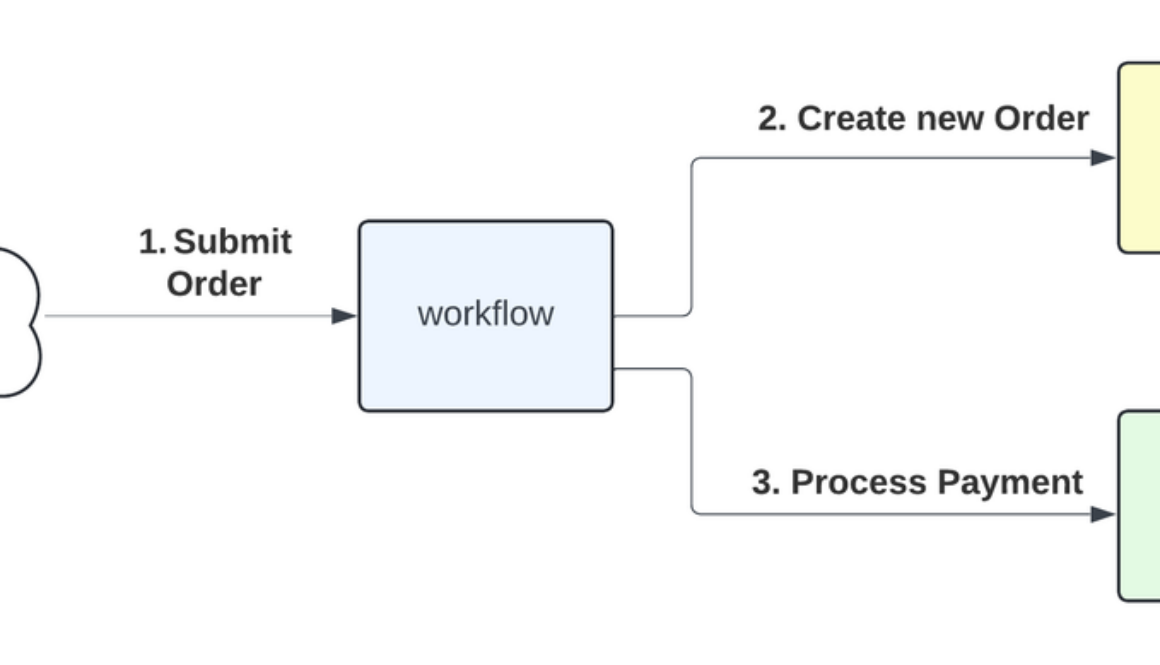

Imagine you have an online store and you’re using Workflows to create new orders, save to Firestore, and process payments by calling a Cloud Function:

When a new customer order comes in, the website makes an API call to Workflows but receives an error. There are two possible scenarios:

(1) The request is lost and the workflow is never invoked.

(2) The workflow is invoked and executes successfully, but the response is lost.

How can you make sure the workflow executes once?

To solve this, the website retries the same request. One easy solution is to check if a document already exists in Firestore:

The processPayment step will execute only if a document is successfully created. This is effectively a 1-bit state machine, idempotent, and a valid solution. The downside of this solution is that it’s not extensible. We might want to complete additional work in this handler before changing states, or expand the number of states within the system. Next, let’s continue with a more advanced solution for the same problem.
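The create-if-absent idea can be sketched in Python with an in-memory stand-in for Firestore. The sketch assumes that the website sends a stable order ID with every attempt, so a retry reuses the same document ID. `InMemoryOrders` and `handle_order` are hypothetical names, and the list of charges stands in for the `processPayment` step. In Firestore itself, creating a document that already exists fails, which is the behavior `create` mimics here.

```python
class AlreadyExists(Exception):
    """Raised when a document with the same ID already exists."""


class InMemoryOrders:
    """Stand-in for a Firestore collection: create() fails if the ID is taken."""

    def __init__(self) -> None:
        self.docs: dict[str, dict] = {}

    def create(self, order_id: str, data: dict) -> None:
        if order_id in self.docs:
            raise AlreadyExists(order_id)
        self.docs[order_id] = data


def handle_order(store: InMemoryOrders, order_id: str, amount: int, charges: list[str]) -> str:
    """1-bit state machine: only the attempt that creates the document pays."""
    try:
        store.create(order_id, {"amount": amount})
    except AlreadyExists:
        # A retry of a request we already handled: skip payment processing.
        return "duplicate"
    charges.append(order_id)  # stands in for the processPayment step
    return "processed"


if __name__ == "__main__":
    store, charges = InMemoryOrders(), []
    print(handle_order(store, "order-123", 42, charges))
    print(handle_order(store, "order-123", 42, charges))  # website retry
    print(charges)
```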

Let’s see what happens when the workflow uses a Cloud Function to process the payment. You might have the following step to call Cloud Functions:

By default, Workflows offers at-most-once delivery (no retries) with HTTP requests. That’s usually OK because 99.9+% of the time, the call is successful, and a response is received.

In the rare case of failure, a ConnectionError might be raised. As in the website-to-workflow situation discussed previously, the workflow can’t tell which scenario occurred. Similarly, you can add retries.
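Workflows expresses retry policies declaratively in the workflow definition. To show what such a policy does, here is the equivalent logic sketched in Python: retry on `ConnectionError` with exponential backoff. `call_with_retries` is a hypothetical helper, and its parameter values are arbitrary choices for this sketch, not Workflows’ defaults.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    call: Callable[[], T],
    max_retries: int = 5,
    initial_delay: float = 1.0,
    multiplier: float = 2.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Invoke `call`, retrying on ConnectionError with exponential backoff.

    Retrying means the callee may run more than once, so it must be idempotent.
    """
    delay = initial_delay
    for attempt in range(max_retries + 1):
        try:
            return call()
        except ConnectionError:
            if attempt == max_retries:
                raise  # out of retries: surface the last error
            sleep(delay)
            delay = min(delay * multiplier, max_delay)
    raise AssertionError("unreachable")
```

The `sleep` parameter is injectable so the backoff schedule can be checked without actually waiting.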

Let’s add a default retry policy to handle this:

Suppose the second scenario occurs: the request is received by the Cloud Function, but the response is lost. Because of the retries, Workflows will likely invoke the Cloud Function multiple times. When this happens, how do you ensure that the code in the Cloud Function runs only once?

You’ll need to add extra logic to the Cloud Function to check and update the payment state in Firestore:

Let’s also assume you want to track the workflow EXECUTION_ID in Firestore and use the following order_state enum to allow for additional flexibility in payment processing:

You can expand on the previous workflow and call a Cloud Function to process the payment:

Here’s the Cloud Function (Node.js v20) that processes the payment:


The key takeaway is that all payment processing work occurs within a transaction, making all actions idempotent. The code within the transaction might run multiple times due to Workflows retries, but it’s only committed once.
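The same pattern can be sketched in Python (rather than the post’s Node.js) with an in-memory stand-in for Firestore. The `OrderState` values, `InMemoryDB`, and `process_payment` are hypothetical. The lock-based transaction is a simplification: real Firestore transactions can re-run the transaction function (for example, on contention), which is why the function below only stages writes and has no other side effects.

```python
import threading
from enum import Enum
from typing import Callable, TypeVar

T = TypeVar("T")
Docs = dict[str, dict]


class OrderState(str, Enum):
    # Hypothetical states; the post's actual order_state enum isn't reproduced here.
    CREATED = "CREATED"
    PAID = "PAID"


class InMemoryDB:
    """Stand-in for Firestore: a transaction stages writes and commits them together."""

    def __init__(self) -> None:
        self.docs: Docs = {}
        self._lock = threading.Lock()

    def run_transaction(self, fn: Callable[[Docs, Docs], T]) -> T:
        with self._lock:
            staged: Docs = {}
            result = fn(self.docs, staged)  # if fn raises, staged writes are discarded
            self.docs.update(staged)        # commit: all staged writes land at once
            return result


def process_payment(db: InMemoryDB, order_id: str, execution_id: str) -> str:
    """Idempotent payment step: a retried call finds PAID and writes nothing."""

    def txn(docs: Docs, staged: Docs) -> str:
        order = docs[order_id]
        if order["state"] == OrderState.PAID:
            return "already-paid"
        staged[order_id] = {**order, "state": OrderState.PAID, "execution_id": execution_id}
        staged[f"payment-{order_id}"] = {"order_id": order_id, "amount": order["amount"]}
        return "paid"

    return db.run_transaction(txn)
```

A second invocation for the same order (for example, from a Workflows retry) returns `"already-paid"` and leaves the stored execution ID and payment record untouched.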

So far, we’ve talked about how to make website-to-workflow and workflow-to-Cloud Function requests exactly once. There are other ways of invoking or resuming Workflows, such as HTTP callbacks, Pub/Sub messages, or Cloud Tasks. How do you make those requests exactly once? Let’s take a look.

The good news is that Workflows HTTP callbacks are fully idempotent by default. It’s safe to retry a callback if it fails. For example:

Let’s assume that the first callback returns an error to the external caller. Based on the error, the caller might not know if the workflow callback was received, and should retry the callback. On the second callback, the caller will receive one of the following HTTP status codes:

429 indicates that the first callback was received successfully. The second callback is rejected by the workflow.

200 indicates that the second callback was received successfully. The first callback was either never received, or was received and processed successfully. If the latter, the second callback is not processed because await_callback is called only once. The second callback is discarded at the end of the workflow.

404 indicates that a callback is not available. Either the first callback was received and processed and the workflow has completed, or the workflow is not running (and has failed, for example). To confirm this, you’ll need to send an API request to query the workflow execution state.
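A caller that retries a callback can turn the three status codes above into explicit outcomes. This Python sketch is a hypothetical helper, not part of any Workflows client library; it only classifies the response and leaves the follow-up (such as querying the execution state) to the caller.

```python
from enum import Enum


class RetryOutcome(Enum):
    ALREADY_DELIVERED = "an earlier callback was received; nothing more to do"
    ACCEPTED = "this callback was received; any earlier one was lost or already handled"
    CHECK_EXECUTION = "no callback is pending; query the execution state to find out why"


def interpret_retried_callback(status: int) -> RetryOutcome:
    """Map the HTTP status of a retried Workflows callback to the cases described above."""
    outcomes = {
        429: RetryOutcome.ALREADY_DELIVERED,
        200: RetryOutcome.ACCEPTED,
        404: RetryOutcome.CHECK_EXECUTION,
    }
    if status not in outcomes:
        # Other codes (for example, 5xx) aren't covered here; since callbacks are
        # idempotent, retrying again may be reasonable.
        raise ValueError(f"unhandled callback status: {status}")
    return outcomes[status]
```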

For more details, see Invoke a workflow exactly once using callbacks.

When you use Pub/Sub to trigger a new workflow execution, Pub/Sub provides at-least-once delivery to Workflows and retries on any delivery failure. Pub/Sub messages are automatically deduplicated within a 24-hour window, so you don’t need to worry about duplicate deliveries during that time.

Cloud Tasks is commonly used to buffer workflow executions. It provides at-least-once delivery but doesn’t deduplicate messages, so workflow handlers should be idempotent.

Exactly-once request processing is a hard problem. In this blog post, we’ve outlined some scenarios where you might need exactly-once request processing when you’re using Workflows. We also provided some ideas on how you can implement it. The exact solution will depend on the actual use case and the services involved.
