Zeotap’s big win: 46% TCO reduction and enhanced real-time performance with Bigtable

In today’s fast-paced, data-driven landscape, the ability to process, analyze, and act on vast amounts of data in real time is paramount. For businesses aiming to deliver personalized customer experiences and optimize operations, the choice of database technology is a critical decision.

At Zeotap, a leading Customer Data Platform (CDP), we empower enterprises to unify data from disparate sources into a comprehensive view of their customers. This enables businesses to activate data across various channels for marketing, customer support, and analytics. Zeotap handles more than 10 billion new data points a day from more than 500 data sources across our clients, while orchestrating more than 2000 workflows, one-third of them in real time with millisecond latency. Performance is crucial to meeting our stringent SLAs for data freshness and end-to-end latency.

However, as Zeotap grew, our ScyllaDB-based infrastructure faced scaling challenges, especially as the business needed to evolve towards real-time use cases and increasingly spiky workloads. We needed a more flexible, performant, cost-effective, and operationally efficient solution, which led us to Bigtable, a low-latency, NoSQL database service from Google Cloud for machine learning, operational analytics, and high-throughput applications. The migration resulted in significant benefits, including a 46% reduction in Total Cost of Ownership (TCO).

The challenge of scaling real-time analytics

Zeotap’s platform demands a database capable of handling a high write throughput of over 300,000 writes per second and nearly triple that in reads during peaks.

As our platform evolved, the initial architecture presented several hurdles:

- Scalability limitations: We initially self-managed ScyllaDB, first on-premises and later in the cloud. We use Spark and BigQuery for analytical batch processing, but managing these different tools and pipelines across our own environment and customer environments reached a point where scaling became increasingly difficult.

- Operational overhead: Managing and scaling our previous database infrastructure required significant operational effort. We had to run scripts in the background to add nodes when resource alerts came up, and we had to map hardware to different kinds of workloads.

- Deployment complexity: Embedding third-party technology in our stack complicated deployment. The commercial procurement process was also cumbersome.

- Cost predictability: Ensuring predictable costs for us and our clients was a growing concern as our business grew.

These challenges drove us to re-evaluate our data infrastructure and seek a cloud-native solution that could meet our streaming-first, “zero-touch” ops philosophy, while supporting our demanding OLAP and OLTP workloads.

Why Bigtable? Performance, scalability, and efficiency

Zeotap’s decision to migrate to Bigtable was driven by five key requirements:

- Operational simplicity: Moving from a ScyllaDB cluster to Bigtable meant eliminating a significant operational burden and achieving “zero-touch ops”. Bigtable abstracts away hardware mapping and node management. This eliminates the need for maintenance windows and takes data rebalancing off our hands.

- Performance: Zeotap needed predictable performance, even in the face of regularly unpredictable workloads, to meet our stringent SLAs. Bigtable’s ability to deliver low latencies for both reads and writes at scale was crucial, especially with spiky traffic patterns.

- Efficient scalability: Managing ScyllaDB cluster scaling, rebalancing, and hotspots was operationally intensive. Zeotap handles very spiky and bursty workloads, at times exceeding 300,000 writes per second. Bigtable disaggregates compute and storage, allowing for rapid scaling, further enhanced by autoscaling, which automatically adjusts cluster size in response to demand. This led to greater cost efficiency and helped eliminate idle resources.

- Total cost of ownership (TCO): A significant driver of this migration was the need for cost efficiency and predictability. By moving from ScyllaDB to Bigtable, we achieved a 46% reduction in our TCO. This stems from Bigtable’s efficient storage and the ability to combine use cases, such as using Bigtable as a hot store and BigQuery as a warm store.

- Tight integration: Bigtable’s integration with other Google Cloud services, particularly BigQuery, was a major advantage in reducing operational overhead. Features like reverse ETL directly into Bigtable greatly simplify data pipelines and reduce Zeotap’s operational footprint by 20%.


Zeotap’s architectural evolution to cloud-native

Zeotap’s transition to Bigtable wasn’t an overnight lift-and-shift, but part of a strategic plan to build a streaming real-time analytics platform that could meet the needs of an ever more demanding customer landscape:

- 2020: After running one of the largest graphs with JanusGraph-on-ScyllaDB and a heavy processing operation with Spark on AWS, we made the strategic move to migrate to Google Cloud.

- 2022: Adopted a Lambda architecture, pivoting heavily to BigQuery and moving away from graph due to performance issues. ScyllaDB now acted as a pure key-value store.

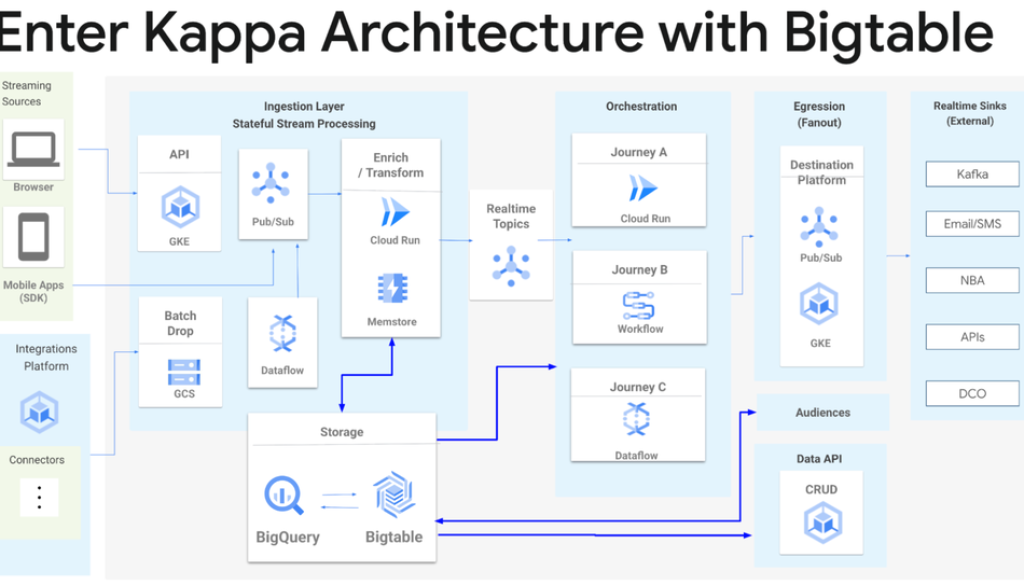

- 2023: Shifted to a Kappa architecture, prioritizing real-time ingestion and streaming. This was a major network redesign to meet the needs of clients for real-time use cases.

- 2024: Fully committed to a cloud-native model with Bigtable and BigQuery as its core, while eliminating Spark from our stack.

In our current architecture, Zeotap’s ingestion layer runs via Dataflow and a home-grown streaming engine, with a combination of Memorystore and Bigtable powering inline enrichment, transformation, and ingestion. We use Memorystore as a lightning-fast cache layer to speed up read-heavy workloads while reducing strain on Bigtable. Bigtable serves as the hot store for real-time ingestion and backs the data API for low-latency point lookups, while BigQuery acts as the warm and cold store for analytics, inferencing, and batch processing.
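The cache-in-front-of-hot-store arrangement described above is commonly implemented as a cache-aside read path. The sketch below illustrates that general pattern only; it is not Zeotap's implementation. The class name `CacheAsideReader`, the `fetch_from_store` callback, and the row key format are all hypothetical, and plain Python dictionaries stand in for Memorystore and Bigtable so the example runs without any cloud dependencies.

```python
from typing import Callable, Dict, List, Optional


class CacheAsideReader:
    """Cache-aside lookups: try the cache first, fall back to the hot store on a miss."""

    def __init__(
        self,
        cache: Dict[str, bytes],
        fetch_from_store: Callable[[str], Optional[bytes]],
    ) -> None:
        self.cache = cache                        # stand-in for a Memorystore client
        self.fetch_from_store = fetch_from_store  # stand-in for a Bigtable point read
        self.hits = 0
        self.misses = 0

    def get(self, row_key: str) -> Optional[bytes]:
        value = self.cache.get(row_key)
        if value is not None:
            self.hits += 1
            return value
        # Cache miss: read from the hot store and populate the cache for next time.
        self.misses += 1
        value = self.fetch_from_store(row_key)
        if value is not None:
            self.cache[row_key] = value
        return value


# In-memory stand-in for the hot store (hypothetical row key and payload).
hot_store: Dict[str, bytes] = {"user#123": b'{"segment": "gold"}'}
store_reads: List[str] = []


def fetch(row_key: str) -> Optional[bytes]:
    store_reads.append(row_key)  # record every read that reaches the store
    return hot_store.get(row_key)


reader = CacheAsideReader(cache={}, fetch_from_store=fetch)
first = reader.get("user#123")   # miss: served by the store, then cached
second = reader.get("user#123")  # hit: served by the cache
```

In this sketch only the first lookup reaches the store; repeated reads of the same key are absorbed by the cache, which is the effect the cache layer is meant to have on read-heavy workloads. A production version would also need expiry and invalidation on writes, which are omitted here.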

This architectural transformation, with Bigtable at its heart, enables us to:

- Consolidate fragmented data: Bigtable handles the complex multi-read/write operations required to build single customer views. The data comes from hundreds of different channels, including ERP, CRM, web apps, and data warehouses. It carries different types of IDs that need to be stitched together as records are consolidated into Bigtable.

- Deliver real-time customer 360: Our Bigtable-backed data API serves comprehensive customer profiles, including identities, attributes, streaming events, calculated attributes, and consent data. This makes the same unified assets available across the entire customer lifecycle, empowering customer support, marketers, and data analysts alike.

- Optimize AI pipelines: Using Bigtable as a feature store and BigQuery as our inferencing platform (via BQML) has dramatically shrunk our time to market for AI model deployment for clients, from multiple weeks to less than a week.
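To make the ID stitching mentioned in the first point more concrete, here is a minimal sketch of one common way to group identifiers into profiles: a union-find (disjoint-set) structure. The article does not say how Zeotap implements stitching, so this is an illustrative technique chosen for the example, not their method. The `IdentityGraph` class, the identifier formats, and the sample links are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set


class IdentityGraph:
    """Groups identifiers that were observed together into one profile (union-find)."""

    def __init__(self) -> None:
        self.parent: Dict[str, str] = {}

    def find(self, ident: str) -> str:
        """Return the representative identifier of the profile containing `ident`."""
        self.parent.setdefault(ident, ident)
        root = ident
        while self.parent[root] != root:
            root = self.parent[root]
        # Path compression: point every node on the walked path directly at the root.
        while self.parent[ident] != root:
            self.parent[ident], ident = root, self.parent[ident]
        return root

    def link(self, a: str, b: str) -> None:
        """Record that identifiers `a` and `b` belong to the same customer."""
        root_a, root_b = self.find(a), self.find(b)
        if root_a != root_b:
            self.parent[root_b] = root_a

    def profiles(self) -> List[Set[str]]:
        """Return the identifier sets, one per stitched profile."""
        groups: Dict[str, Set[str]] = defaultdict(set)
        for ident in list(self.parent):
            groups[self.find(ident)].add(ident)
        return list(groups.values())


# Hypothetical links, e.g. a web event carrying an email and a cookie,
# and a CRM export carrying a cookie and a CRM ID.
graph = IdentityGraph()
graph.link("email:a@example.com", "cookie:c1")
graph.link("cookie:c1", "crm:42")
graph.link("email:b@example.com", "crm:77")

stitched = sorted(sorted(profile) for profile in graph.profiles())
```

Here the first two links share `cookie:c1`, so the three identifiers end up in one profile, while the third link forms a separate profile. In a real pipeline the link table would live in the database rather than in process memory.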

Results and looking forward

Migrating to Bigtable has delivered substantial, quantifiable benefits for Zeotap. Most notably, we achieved a 46% decrease in Total Cost of Ownership (TCO) compared to our previous infrastructure. This cost efficiency was paired with a 20% reduction in overall operational tasks and overhead — a direct result of the tight integration between Bigtable and BigQuery. Beyond resource savings, the platform now offers enhanced performance and reliability — with lower latencies — enabling us to confidently meet our stringent Service Level Agreement (SLA) commitments. Furthermore, Bigtable has improved our agility, allowing for faster deployment of AI/ML models across various environments with efficient resource utilization, such as reading batch workloads off our Disaster Recovery (DR) cluster.

Transform your data infrastructure with Bigtable

Zeotap’s migration is a compelling example of how choosing the right database can address the challenges of scale, performance, and operational complexity in the era of real-time data and AI. By leveraging Bigtable’s capabilities for high throughput, low-latency reads, and efficient handling of demanding workloads, coupled with its seamless integration with BigQuery, Zeotap built a more flexible, efficient, and cost-effective platform that empowers customers’ real-time data initiatives.

Learn more

- Check out the power of Bigtable and begin planning your migration today.

- Discover Bigtable’s Cassandra API and tools for no-downtime, no-code-change migrations from ScyllaDB and Cassandra.

- Read more about new Bigtable features like SQL support, distributed counters, continuous materialized views, tiered storage, and Data Boost.
