GCP – Evolving Ray and Kubernetes together for the future of distributed AI and ML

Ray is an open-source compute engine that is popular among Google Cloud developers for handling complex distributed AI workloads across CPUs, GPUs, and TPUs. Similarly, platform engineers have long trusted Kubernetes, and specifically Google Kubernetes Engine (GKE), for powerful and reliable infrastructure orchestration. Earlier this year, we announced a partnership with Anyscale to bring the best of Ray and Kubernetes together, forming a distributed operating system for the most demanding AI workloads. Today, we are excited to share some of the open-source enhancements we have built together across Ray and Kubernetes.

Ray and Kubernetes label-based scheduling

One of the key benefits of Ray is its flexible set of primitives that enable developers to write distributed applications without thinking directly about the underlying hardware. However, there are some use cases that weren’t very well covered by the existing support for virtual resources in Ray.

To improve scheduling flexibility and empower the Ray and Kubernetes schedulers to perform better autoscaling for Ray applications, we are introducing label selectors to Ray. Ray label selectors are heavily inspired by Kubernetes labels and selectors, and are intended to offer a familiar experience and smooth integration between the two systems. The Ray Label Selector API is available starting in Ray v2.49 and offers improved scheduling flexibility for distributed tasks and actors.

With the new Label Selector API, Ray now directly helps developers accomplish things like:

- Assign labels to nodes in your Ray cluster (e.g. gpu-family=L4, market-type=spot, region=us-west-1).

- When launching tasks, actors or placement groups, declare which zones, regions or accelerator types to run on.

- Use custom labels to define topologies and advanced scheduling policies.

For scheduling distributed applications on GKE, you can use Ray and Kubernetes label selectors together to gain full control over both the application and the underlying infrastructure. You can also use this combination with GKE custom compute classes to define fallback behavior when specific GPU types are unavailable. Let’s dive into a specific example.

Below is an example Ray remote task that could run on different GPU capacity depending on what is available. Starting in Ray v2.49, you can specify the accelerator type a task should run on, along with fallback behavior for cases where the primary GPU type or market type is not available. In this example, the remote task targets spot capacity with L4 GPUs, with a fallback to on-demand capacity:

```python
import ray


@ray.remote(
    # Primary target: L4 GPUs on spot capacity.
    label_selector={
        "ray.io/accelerator": "L4",
        "ray.io/market-type": "spot",
    },
    # Used when the primary selector cannot be satisfied.
    fallback_strategy=[
        {
            "label_selector": {
                "ray.io/accelerator": "L4",
                "ray.io/market-type": "on-demand",
            }
        },
    ],
)
def func():
    pass
```
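To make the fallback ordering concrete, the sketch below models it in plain Python. It is a toy model, not Ray's scheduler: `Node`, `matches`, and `pick_node` are hypothetical names, and it assumes selectors are exact-match key/value pairs that are tried in order (the primary selector first, then each fallback).

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """Hypothetical stand-in for a Ray node and its labels."""
    name: str
    labels: dict = field(default_factory=dict)


def matches(node: Node, selector: dict) -> bool:
    # Assumption: exact-match semantics only. Every key/value pair in the
    # selector must be present in the node's labels.
    return all(node.labels.get(k) == v for k, v in selector.items())


def pick_node(nodes, label_selector, fallback_strategy=()) -> Optional[Node]:
    # Try the primary selector first, then each fallback in list order.
    candidates = [label_selector] + [f["label_selector"] for f in fallback_strategy]
    for selector in candidates:
        for node in nodes:
            if matches(node, selector):
                return node
    return None  # nothing matches in this toy model


primary = {"ray.io/accelerator": "L4", "ray.io/market-type": "spot"}
fallbacks = [
    {"label_selector": {"ray.io/accelerator": "L4", "ray.io/market-type": "on-demand"}}
]

on_demand_only = [
    Node("n1", {"ray.io/accelerator": "L4", "ray.io/market-type": "on-demand"})
]
with_spot = on_demand_only + [
    Node("n2", {"ray.io/accelerator": "L4", "ray.io/market-type": "spot"})
]

print(pick_node(on_demand_only, primary, fallbacks).name)  # n1 (fallback used)
print(pick_node(with_spot, primary, fallbacks).name)       # n2 (primary satisfied)
```

The point of the ordering is that the on-demand node is chosen only when no spot node satisfies the primary selector.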

On GKE, you can mirror the same fallback logic using custom compute classes, so that the underlying infrastructure for the Ray cluster follows the same fallback behavior:

```yaml
apiVersion: cloud.google.com/v1
kind: ComputeClass
metadata:
  name: gpu-compute-class
spec:
  priorities:
  - gpu:
      type: nvidia-l4
      count: 1
    spot: true
  - gpu:
      type: nvidia-l4
      count: 1
    spot: false
  nodePoolAutoCreation:
    enabled: true
  whenUnsatisfiable: DoNotScaleUp
```

Refer to the Ray documentation to get started with Ray label selectors.

Advancing accelerator support in Ray and Kubernetes

Earlier this year, we demonstrated the ability to use the new Ray Serve LLM APIs to deploy large models such as DeepSeek-R1 on GKE with A3 High and A3 Mega machine instances. Starting with GKE v1.33 and KubeRay v1.4, you can use Dynamic Resource Allocation (DRA) for flexible scheduling and sharing of hardware accelerators, enabling the use of the next generation of AI accelerators with Ray. Specifically, you can now use DRA to deploy Ray clusters on A4X series machines that use the NVIDIA GB200 NVL72 rack-scale architecture. To use DRA with Ray on A4X, create an AI-optimized GKE cluster on A4X and define a ComputeDomain resource representing your NVL72 rack:

```yaml
apiVersion: resource.nvidia.com/v1beta1
kind: ComputeDomain
metadata:
  name: a4x-compute-domain
spec:
  numNodes: 18
  channel:
    resourceClaimTemplate:
      name: a4x-compute-domain-channel
```

Then specify the claim in your Ray worker’s Pod template:

```yaml
workerGroupSpecs:
  - # ...
    template:
      # ...
      spec:
        # ...
        volumes:
          # ...
        containers:
          - name: ray-container
            # ...
            resources:
              limits:
                nvidia.com/gpu: 4
              claims:
                - name: compute-domain-channel
            # ...
        resourceClaims:
          - name: compute-domain-channel
            resourceClaimTemplateName: a4x-compute-domain-channel
```

Combining DRA with Ray ensures that Ray worker groups are correctly scheduled on the same GB200 NVL72 rack for optimal GPU performance for the most demanding Ray workloads.
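One easy mistake in a Pod template like this is a container-level claim whose name does not match any Pod-level `resourceClaims` entry. The sketch below is a small consistency check under stated assumptions: `undeclared_claims` is a hypothetical helper (not part of KubeRay or Kubernetes tooling), and the Pod spec is assumed to have already been loaded into a Python dict.

```python
def undeclared_claims(pod_spec: dict) -> list:
    """Return (container, claim) pairs whose claim is not declared at Pod level."""
    declared = {c["name"] for c in pod_spec.get("resourceClaims", [])}
    missing = []
    for container in pod_spec.get("containers", []):
        for claim in container.get("resources", {}).get("claims", []):
            if claim["name"] not in declared:
                missing.append((container["name"], claim["name"]))
    return missing


# Worker Pod spec fragment written as a dict, using the names from the
# example in this section.
worker_pod_spec = {
    "containers": [
        {
            "name": "ray-container",
            "resources": {
                "limits": {"nvidia.com/gpu": 4},
                "claims": [{"name": "compute-domain-channel"}],
            },
        }
    ],
    "resourceClaims": [
        {
            "name": "compute-domain-channel",
            "resourceClaimTemplateName": "a4x-compute-domain-channel",
        }
    ],
}

print(undeclared_claims(worker_pod_spec))  # []
```

An empty list means every claim referenced by a container is declared on the Pod.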

We’re also partnering with Anyscale to bring a more native TPU experience to Ray and closer ecosystem integrations with frameworks like JAX. Ray Train introduced a JaxTrainer API starting in Ray v2.49, streamlining model training on TPUs using JAX. For more information on these TPU improvements in Ray, read A More Native Experience for Cloud TPUs with Ray.

Ray-native resource isolation with Kubernetes writable cgroups

Writable cgroups allow the container’s root process to create nested cgroups within the same container without requiring privileged capabilities. This feature is especially critical for Ray, which runs multiple control-plane processes alongside user code inside the same container. Even under the most intensive workloads, Ray can dynamically reserve a portion of the total container resources for system critical tasks, which significantly improves the reliability of your Ray clusters.
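The reservation idea can be illustrated with simple arithmetic. The sketch below is not Ray's implementation: `split_container_budget`, the 10% fraction, and the minimum values are assumptions chosen only for illustration. It splits a container's CPU and memory limits into a slice reserved for system processes and the remainder available to user code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Budget:
    cpu_cores: float
    memory_bytes: int


def split_container_budget(
    total: Budget,
    system_fraction: float = 0.1,             # illustrative assumption
    min_system_cpu: float = 0.5,              # illustrative assumption
    min_system_memory: int = 512 * 1024**2,   # illustrative assumption
):
    """Split container limits into (system, application) budgets."""
    if not 0 < system_fraction < 1:
        raise ValueError("system_fraction must be between 0 and 1")
    # Reserve a fraction of each resource, but never less than the minimum.
    sys_cpu = max(total.cpu_cores * system_fraction, min_system_cpu)
    sys_mem = max(int(total.memory_bytes * system_fraction), min_system_memory)
    if sys_cpu >= total.cpu_cores or sys_mem >= total.memory_bytes:
        raise ValueError("container too small to reserve system resources")
    system = Budget(sys_cpu, sys_mem)
    application = Budget(total.cpu_cores - sys_cpu, total.memory_bytes - sys_mem)
    return system, application


container = Budget(cpu_cores=32, memory_bytes=32 * 1024**3)
system, application = split_container_budget(container)
print(system)
print(application)
```

In a cgroup-based setup, each of the two budgets would correspond to limits on a separate nested cgroup, so that user code cannot starve the system processes.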

Starting on GKE v1.34.X-gke.X, you can enable writable cgroups for Ray clusters by adding the following annotations:

```yaml
metadata:
  annotations:
    node.gke.io/enable-writable-cgroups.test-container: "true"
```

To enable Ray resource isolation using writable cgroups, pass the following flag to ray start:

```shell
ray start --head --enable-resource-isolation
```

This capability is one example of how we’re evolving Ray and Kubernetes to improve reliability across the stack without compromising on security.

In the near future, we also plan to introduce support for per-task and per-actor resource limits and requirements, a long-requested feature in Ray. Additionally, we are collaborating with the open-source Kubernetes community to upstream this feature.

Ray vertical autoscaling with in-place pod resizing

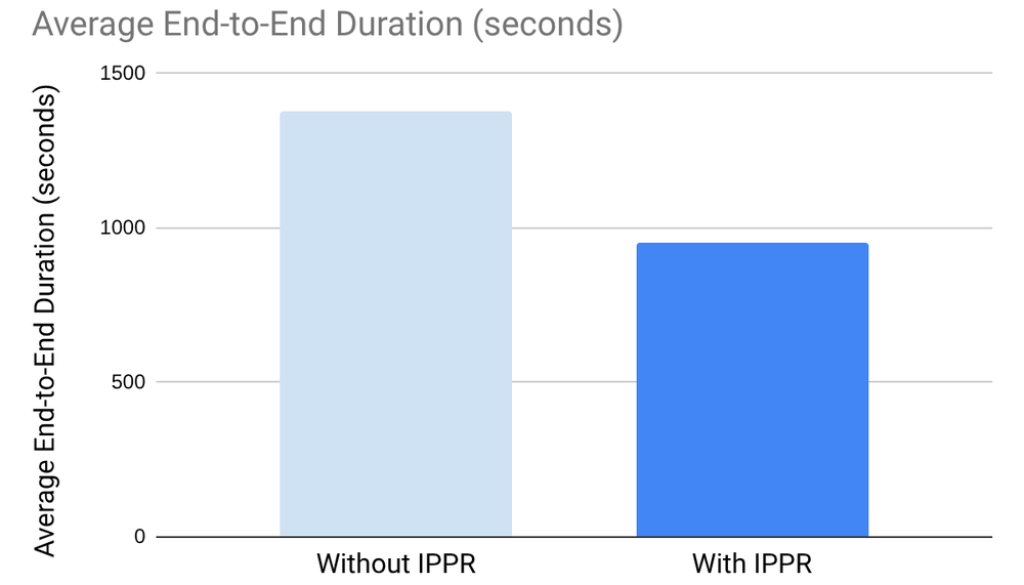

With the introduction of in-place pod resizing in Kubernetes v1.33, we’re in the early stages of integrating vertical scaling capabilities for Ray when running on Kubernetes. Our early benchmarks show a 30% increase in workload efficiency due to scaling pods vertically before scaling horizontally.

Benchmark based on completing two TPC-H workloads (Query 1 and 5) with Ray, 3 times on a GKE cluster with 3 worker nodes, each with 32 CPUs and 32 GB of memory.

In-place pod resizing enhances workload efficiency in the following ways:

- Faster task/actor scale-up: With in-place resizing, Ray workers can scale up their available resources in seconds, an improvement over the minutes it could take to provision new nodes. This capability significantly accelerates the scheduling time for new Ray tasks.

- Enhanced bin-packing and resource utilization: In-place pod resizing enables more efficient bin-packing of Ray workers onto Kubernetes nodes. As new Ray workers scale up, they can reserve smaller portions of the available node capacity, freeing up the remaining capacity for other workloads.

- Improved reliability and reduced failures: In-place scaling of memory can significantly reduce out-of-memory (OOM) errors. By avoiding the need to restart failed jobs, this capability improves overall workload efficiency and stability.
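The "vertical before horizontal" ordering can be sketched as a small decision function. This is a toy model under stated assumptions, not KubeRay's autoscaler: `plan_scale_up` and its parameters are hypothetical, only CPU is modeled, and a pod is assumed to grow in place only while its node has unallocated capacity.

```python
def plan_scale_up(pending_cpus, pod_cpus, node_free_cpus):
    """Plan how to satisfy pending CPU demand for one Ray worker pod.

    Returns a list of (action, cpus) tuples. Hypothetical model: grow the
    existing pod in place using free capacity on its node first, then
    request a new pod for whatever demand remains.
    """
    if pending_cpus <= 0:
        return []
    plan = []
    grow = min(pending_cpus, node_free_cpus)
    if grow > 0:
        # New size of the existing pod after the in-place resize.
        plan.append(("resize_in_place", pod_cpus + grow))
    remaining = pending_cpus - grow
    if remaining > 0:
        # CPUs that still need to be provisioned horizontally.
        plan.append(("add_pod", remaining))
    return plan


print(plan_scale_up(pending_cpus=4, pod_cpus=8, node_free_cpus=16))
# [('resize_in_place', 12)]
print(plan_scale_up(pending_cpus=20, pod_cpus=8, node_free_cpus=16))
# [('resize_in_place', 24), ('add_pod', 4)]
print(plan_scale_up(pending_cpus=4, pod_cpus=8, node_free_cpus=0))
# [('add_pod', 4)]
```

Only the demand that cannot be absorbed by resizing falls through to the slower path of provisioning additional pods or nodes.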

Ray + Kubernetes = The distributed OS for AI

We are excited to highlight the recent joint innovations from our partnership with Anyscale. The powerful synergy between Ray and Kubernetes positions them as the distributed operating system for modern AI/ML. We believe our continued partnership will accelerate innovation within the open-source Ray and Kubernetes ecosystems, ultimately driving the future of distributed AI/ML.

Together, these updates are a significant step toward Ray working seamlessly on GKE. Here’s how to get started:

- Request capacity: Get started quickly with Dynamic Workload Scheduler Flex Start for TPUs and GPUs, which provides access to compute for jobs that run for less than 7 days.

- Get started with Ray on GKE

- Try out JaxTrainer with TPUs
