BigQuery under the hood: Scalability, reliability and usability enhancements for gen AI inference

People often think of BigQuery in the context of data warehousing and analytics, but it is a crucial part of the AI ecosystem as well. And today, we’re excited to share significant performance improvements to BigQuery that make it even easier to extract insights from your data with generative AI.

In addition to native model inference, where computation takes place entirely in BigQuery, we offer several batch-oriented generative AI capabilities that combine distributed execution in BigQuery with remote LLM inference on Vertex AI, with functions such as:

- ML.GENERATE_TEXT to generate text via Gemini, other Google-hosted partner LLMs (e.g., Anthropic Claude, Llama), or any open-source LLM

- ML.GENERATE_EMBEDDING to generate text or multimodal embeddings

- AI.GENERATE_TABLE to generate structured tabular data via LLMs and their constrained decoding capabilities.

In addition to the above table-valued functions, you can use our row-wise functions such as AI.GENERATE for more convenient SQL query composition. All these functions are compatible with text data in managed tables and unstructured object files, such as images and documents. Thanks to the performance improvements we are unveiling today, users can expect dramatic gains in scalability, reliability, and usability across BigQuery and BigQuery ML:

- Scalability: Over 100x gain for first-party LLM models (tens of millions of rows per six-hour job), over 30x gain for first-party embedding models (tens to hundreds of millions of rows per six-hour job), and added support for Provisioned Throughput quotas.

- Reliability: Over 99.99% of LLM inference queries complete without any row failures, and the row-level success rate across all jobs exceeds 99.99%, with the rare per-row failures being easily retriable without failing the query.

- Usability: An enhanced user experience through support for default connections and global endpoints. We also enabled a single place for quota management (in Vertex AI) by automatically syncing quota from Vertex AI to BigQuery.

Let’s take a closer look at these improvements.

1. Scalability

We’ve dramatically improved throughput for users on the pay-as-you-go (PayGo) pricing model: ML.GENERATE_TEXT throughput on first-party LLMs has increased by over 100x, and ML.GENERATE_EMBEDDING throughput by over 30x. For users requiring even higher performance, we’ve also added support for Vertex AI’s Provisioned Throughput (PT).

Google embeddings LLMs

In BigQuery, each project has a fixed default quota for first-party text embedding models, including the most popular text-embedding-004/005 models. To improve utilization and scalability, we introduced dynamic token-based batching, which packs as many rows as possible into a single request while staying under the token limit. Combined with other optimizations, this boosts scalability from 2.7 million to approximately 80 million rows (30x) per six-hour job (based on a default quota of 1,500 QPM and a dataset with 50 tokens per row). You can further increase this capacity by raising your Vertex AI quota to 10,000 QPM without manual approval, which enables embedding over 500 million rows in a six-hour job.
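To make the batching idea and the quota arithmetic concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not BigQuery's implementation: the function names, the 200-token budget, and the row cap are hypothetical, and the rows-per-request values (5 and 148) are simply back-calculated from the 2.7 million and roughly 80 million figures above rather than taken from any documented limit.

```python
from typing import List, Sequence


def pack_rows_by_tokens(
    token_counts: Sequence[int],
    max_tokens_per_request: int,
    max_rows_per_request: int,
) -> List[List[int]]:
    """Greedily pack consecutive row indices into requests under a token budget."""
    batches: List[List[int]] = []
    current: List[int] = []
    current_tokens = 0
    for row_index, tokens in enumerate(token_counts):
        if tokens > max_tokens_per_request:
            raise ValueError(f"row {row_index} alone exceeds the token budget")
        over_budget = current_tokens + tokens > max_tokens_per_request
        full = len(current) >= max_rows_per_request
        # Close the current request before it would break either limit.
        if current and (over_budget or full):
            batches.append(current)
            current, current_tokens = [], 0
        current.append(row_index)
        current_tokens += tokens
    if current:
        batches.append(current)
    return batches


def rows_per_job(qpm: int, rows_per_request: int, hours: int = 6) -> int:
    """Upper bound on rows per job if every request carries rows_per_request rows."""
    return qpm * 60 * hours * rows_per_request


# Ten 50-token rows under a hypothetical 200-token budget: four rows per request.
batches = pack_rows_by_tokens([50] * 10, max_tokens_per_request=200, max_rows_per_request=250)

# 1,500 QPM for six hours allows 540,000 requests in total.
baseline = rows_per_job(qpm=1500, rows_per_request=5)    # 2,700,000 rows
batched = rows_per_job(qpm=1500, rows_per_request=148)   # 79,920,000 rows
```

The point of the arithmetic is that the request quota stays fixed at 540,000 requests per six-hour job, so the gain comes from how many rows each request carries.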

Early access customers such as Faraday are excited for this scalability boost:

“I just did 12,678,259 embeddings in 45 min with BigQuery’s built-in Gemini. That’s about 5000 per second. Try doing that with an HTTP API!” – Seamus Abshere, Co-founder and CTO, Faraday

Google Gemini: PayGo users via dynamic shared quota

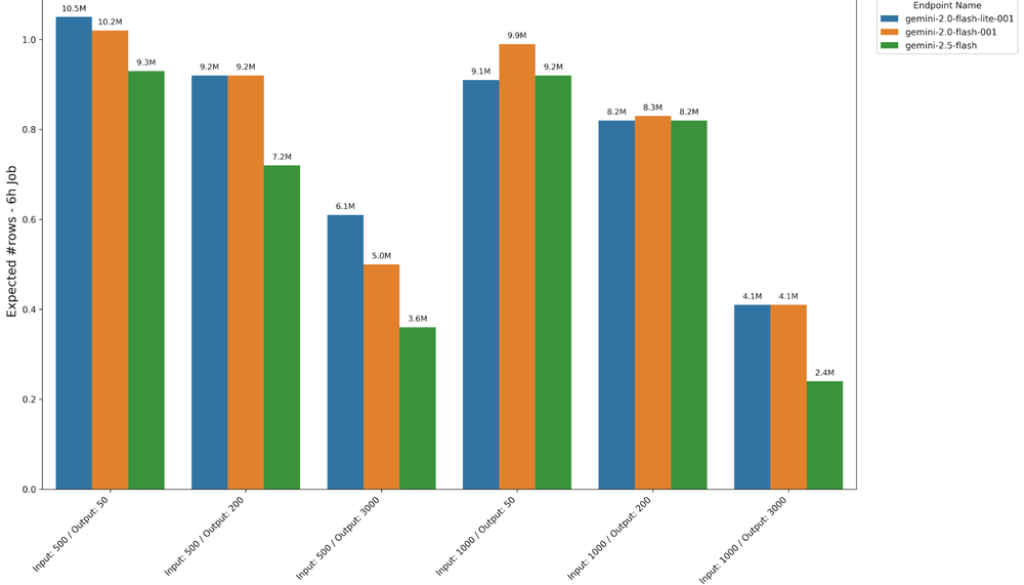

Gemini models served via Vertex AI now use dynamic shared quota (DSQ) for pay-as-you-go requests. DSQ provides access to a large, shared pool of resources, with throughput dynamically allocated based on real-time availability and demand across all customers. We rebuilt our remote inference system with adaptive traffic control and a producer-consumer-based error-retry mechanism. This enabled us to make effective use of the larger, but less-guaranteed, quota that DSQ provides. Based on internal benchmarking results, we can now process roughly 10.2 million rows in a six-hour job with gemini-2.0-flash-001, or 9.3 million rows with gemini-2.5-flash, where each row has an average of 500 input and 50 output tokens. Specific numbers depend on factors such as token count and model. See the chart for more details.
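The combination of adaptive traffic control and a producer-consumer retry loop can be sketched roughly as follows. This is a single-threaded illustration under assumptions, not BigQuery's actual system: `QuotaError`, `call_model`, the window sizes, and the "grow by one on success, halve on quota error" policy are all hypothetical choices made for the example.

```python
from collections import deque
from typing import Callable, Dict, Hashable, Iterable, Tuple


class QuotaError(Exception):
    """Stand-in for a retriable quota or rate-limit error from the remote model."""


def run_with_retries(
    rows: Iterable[Hashable],
    call_model: Callable[[Hashable], str],
    max_attempts: int = 3,
    max_window: int = 8,
) -> Tuple[Dict[Hashable, str], Dict[Hashable, str]]:
    """Process rows from a work queue, re-queueing rows that hit quota errors."""
    pending = deque((row, 1) for row in rows)  # (row, attempt number)
    results: Dict[Hashable, str] = {}
    failed: Dict[Hashable, str] = {}
    window = 1  # how many rows to send in the current round
    while pending:
        batch = [pending.popleft() for _ in range(min(window, len(pending)))]
        saw_quota_error = False
        for row, attempt in batch:
            try:
                results[row] = call_model(row)
            except QuotaError as exc:
                saw_quota_error = True
                if attempt < max_attempts:
                    pending.append((row, attempt + 1))  # hand back to the queue
                else:
                    failed[row] = str(exc)  # partial failure: record, keep going
        # Back off when the shared pool pushes back, otherwise ramp up gently.
        if saw_quota_error:
            window = max(1, window // 2)
        else:
            window = min(max_window, window + 1)
    return results, failed
```

Rows that exhaust their attempts end up in `failed` instead of raising, so one unlucky row does not fail the whole job.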

Google Gemini: Dedicated quota via provisioned throughput

While our generative AI inference with Dynamic Shared Quota offers high throughput, it has an inherent upper bound and the potential for quota errors due to its non-guaranteed nature. To overcome these limitations, we’ve added support for Provisioned Throughput from Vertex AI. By purchasing dedicated capacity, you can help ensure consistently high throughput for demanding workloads, and get a reliable and predictable user experience. After purchasing Vertex AI provisioned throughput, you can easily leverage it in BigQuery gen AI queries by setting the “request_type” argument to “dedicated”.

2. Reliability

Facing limited and non-guaranteed generative AI inference quotas, we implemented a partial failure mode that allows queries to succeed even if some rows fail. Through adaptive traffic control and a robust retry mechanism, measured across all users’ query traffic, we’ve now achieved 1) over 99.99% of generative AI queries finishing without a single row failure, and 2) a row success rate of over 99.99%. This greatly improves the reliability of gen AI in BigQuery.

Note that the above row-level success rate is based on independent queries that invoke our generative AI functions, including both table-valued functions and row-wise scalar functions, where the error retry takes place implicitly. If you have even larger workloads that see occasional errors, you can use this simple SQL script or the Dataform package, which allow you to iteratively retry failed rows to reach a 100% row success rate in almost all cases.
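The control flow of such an iterative retry can be sketched in a few lines of Python. This is not the SQL script or the Dataform package mentioned above, only an illustration of the idea: `run_inference` is a hypothetical callable standing in for one query over the given rows, and the sketch assumes each row comes back with an output and a status string that is empty on success.

```python
from typing import Callable, Dict, Hashable, List, Optional, Sequence, Tuple

# (output, status); status is "" on success, otherwise an error message.
RowResult = Tuple[Optional[str], str]


def retry_failed_rows(
    row_ids: Sequence[Hashable],
    run_inference: Callable[[List[Hashable]], Dict[Hashable, RowResult]],
    max_passes: int = 5,
) -> Tuple[Dict[Hashable, str], List[Hashable]]:
    """Re-run inference only on rows whose previous pass reported an error."""
    results: Dict[Hashable, str] = {}
    remaining = list(row_ids)
    for _ in range(max_passes):
        if not remaining:
            break
        outcome = run_inference(remaining)
        still_failing = []
        for row_id in remaining:
            output, status = outcome[row_id]
            if status == "":
                results[row_id] = output
            else:
                still_failing.append(row_id)
        remaining = still_failing  # the next pass touches only the failed rows
    return results, remaining
```

Because each pass processes only the rows that failed previously, the cost of later passes shrinks quickly when failures are rare.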

3. Usability

We have also made the gen AI experience in BigQuery more user-friendly. We’ve streamlined complex workflows such as quota management and BigQuery connection and permission setup, and enabled the global endpoint to make the experience more accessible for users in all regions.

3.1 Default connection for remote model creation

Previously, setting up gen AI remote inference required a series of manual steps for users to configure a BigQuery connection and grant permissions to its service account. To solve this, we launched a new default connection. This feature automatically creates the connection and grants the necessary permissions, eliminating all manual config steps.

3.2 Global endpoint for first-party models

BigQuery gen AI inference now supports the global endpoint from Vertex AI, in addition to dozens of regional endpoints. This global endpoint provides higher availability across the world, which is particularly useful for smaller regions that may not have immediate access to the latest first-party gen AI models. Users without a data residency requirement for ML processing can use the global endpoint.

The SQL below illustrates how to create a Gemini model with the global endpoint and the BigQuery default connection.

```sql
CREATE OR REPLACE MODEL `myproject.mydataset.mymodel`
REMOTE WITH CONNECTION DEFAULT
OPTIONS(
  endpoint = 'https://aiplatform.googleapis.com/v1/projects/my_project/locations/global/publishers/google/models/gemini-2.5-flash'
)
```

3.3 Automatic quota sync from Vertex AI

BigQuery now automatically syncs with Vertex AI to fetch your quota. If you have a higher-than-default quota, BigQuery uses it automatically, without any manual configuration on your part.

Get started today

Ready to build your next AI application? Start generating text, embeddings, or structured tables using gen AI directly in BigQuery today. Dive into our gen AI overview page and its linked documentation for more details. If you have any questions or feedback, reach out to us at bqml-feedback@google.com.
